This week, I worked on making the computer vision LED robot detection more robust.

As a quick recap, the computer vision localization works by applying an image mask to each captured frame to isolate the LEDs belonging to the appropriate robot. After this, the algorithm applies the inverse affine transform, scaling, etc.
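To make the recap concrete, here is a minimal sketch of the mask, centroid, and inverse-affine steps in plain Python. It is not our actual code: the function names (`mask_pixels`, `centroid`, `invert_affine`, `apply_affine`), the color bounds, the tiny test image, and the transform coefficients are all made up for illustration, and it assumes the affine transform maps field coordinates to pixel coordinates.

```python
def mask_pixels(image, lo, hi):
    """Return (x, y) of every pixel whose channels all lie within [lo, hi]."""
    return [
        (x, y)
        for y, row in enumerate(image)
        for x, px in enumerate(row)
        if all(l <= c <= h for c, l, h in zip(px, lo, hi))
    ]


def centroid(points):
    """Mean position of a non-empty list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def invert_affine(a, b, c, d, tx, ty):
    """Invert the map x' = a*x + b*y + tx, y' = c*x + d*y + ty."""
    det = a * d - b * c
    if det == 0:
        raise ValueError("affine transform is not invertible")
    ia, ib, ic, id_ = d / det, -b / det, -c / det, a / det
    return (ia, ib, ic, id_, -(ia * tx + ib * ty), -(ic * tx + id_ * ty))


def apply_affine(m, point):
    """Apply an affine map given as (a, b, c, d, tx, ty) to an (x, y) point."""
    a, b, c, d, tx, ty = m
    x, y = point
    return (a * x + b * y + tx, c * x + d * y + ty)


# Hypothetical 4x4 RGB frame: black background with two orange LED pixels.
BLACK, ORANGE = (0, 0, 0), (250, 140, 20)
frame = [[BLACK] * 4 for _ in range(4)]
frame[1][1] = ORANGE
frame[1][2] = ORANGE

# Hypothetical color bounds for the orange robot.
led_pixels = mask_pixels(frame, lo=(200, 100, 0), hi=(255, 180, 60))
led_center = centroid(led_pixels)

# Assumed field-to-pixel map: scale by 2, shift x by 0.5. Invert it to go back.
pixel_to_field = invert_affine(2, 0, 0, 2, 0.5, 0)
field_position = apply_affine(pixel_to_field, led_center)
```

The hardcoded `lo`/`hi` bounds in this sketch are exactly the kind of fixed color values that break down when lighting or LED batches change.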

However, due to manufacturing variation in our LEDs, changing ambient lighting conditions, camera inaccuracies, off-axis image shift, etc., a hardcoded lookup table (LUT) for which color belongs to which robot is simply not robust and has been giving us a lot of issues.

As such, I refactored the computer vision algorithms to run unsupervised, centroid-based clustering (k-means style, rather than K-nearest-neighbors, which is a supervised method) at runtime to figure out which LED is which. This adds practically no extra latency or compute overhead at runtime, since classifying a detection only requires a distance calculation to the centroid of each cluster.
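Here is a rough sketch of the idea in plain Python, assuming plain k-means on RGB values with a deterministic farthest-point initialization. The function names and the sample colors are hypothetical and only illustrate the technique; the real implementation may differ in color space, initialization, and library.

```python
import math


def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def init_centroids(points, k):
    """Farthest-point seeding: deterministic and spreads the seeds apart."""
    seeds = [points[0]]
    while len(seeds) < k:
        seeds.append(max(points, key=lambda p: min(math.dist(p, s) for s in seeds)))
    return seeds


def kmeans(points, k, iterations=20):
    """Plain k-means: assign points to the nearest centroid, then re-average."""
    centroids = init_centroids(points, k)
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        updated = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
        if updated == centroids:  # converged
            break
        centroids = updated
    return centroids


# Made-up RGB samples for 4 LED types: orange, two blues, green.
samples = [
    (250, 140, 20), (245, 150, 30), (255, 145, 25),  # orange
    (20, 40, 250), (30, 50, 240), (25, 45, 245),     # blue, type A
    (40, 120, 230), (50, 130, 220), (45, 125, 225),  # blue, type B
    (30, 230, 60), (40, 220, 70), (35, 225, 65),     # green
]

centroids = kmeans(samples, k=4)

# Per-detection work at runtime: one distance per centroid.
label = nearest((248, 148, 28), centroids)
```

Once the centroids are fitted, the per-detection cost is just `k` distance calculations, which is why the runtime overhead stays negligible.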

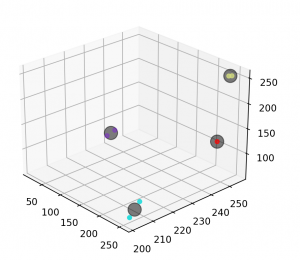

The visualization shows 4 robot colors (orange, 2 types of blue, and green), along with the clustering centroids and the detected pixels. As you can see, even 2 types of LEDs with the same nominal color often show enough noise and discrepancy in detection that fixed color values would not separate them reliably, which is why clustering is the right approach to this problem.