This week I spent time testing our state machine code and finding and fixing bugs in it. I also worked with Colin to prepare what we plan to show at the demo. Tomorrow we will do an integrated test run on our fake route to prepare for our demo on Monday, and we also plan to set up a separate fake route to show off our rerouting capability if time (and space) allows. After the demo, the rest of the week will be spent on the final video and final report.

Team status report 12/10/2022

This week we spent time preparing our final presentation. This entailed fine-tuning our path tracking algorithms and gathering data on our use-case requirements to see whether we met them. We also worked out exactly what we want to show at the demo: the device giving feedback for a pre-configured route outside of Hamerschlag. We also want to show some slides on a laptop with some route data to give a visual representation of how the device operates.

Next week after the demo we plan on incorporating our snap-to-sidewalk feature that will allow us to re-route on the go for our final video/report.

Colin’s status report for 12/10/2022

This week I spent some time preparing for the final presentation and gathering the hardware info needed to determine if we met our use cases. I also worked on the software needed for our final demo. We plan to show the finite state machine that Zach has been working on by walking a route outside of Hamerschlag and providing feedback to the users. This involved gathering the location data of the turns that we will be making in the demo and preparing some feedback. I created a pseudo-route for the demo that imitates the routes given back by the HERE API so that Zach’s interpreter code can provide feedback like it would for a normal route. We are also trying to figure out how to add a re-routing aspect to the demo using another pre-configured route.

Next week after the demo Zach and I will be working on our final report and video. We hope to be able to develop some snap-to-sidewalk code before the final report so that we can have a full re-routing aspect to the project that coordinates with the HERE API.

Colin’s status report for 12/3/2022

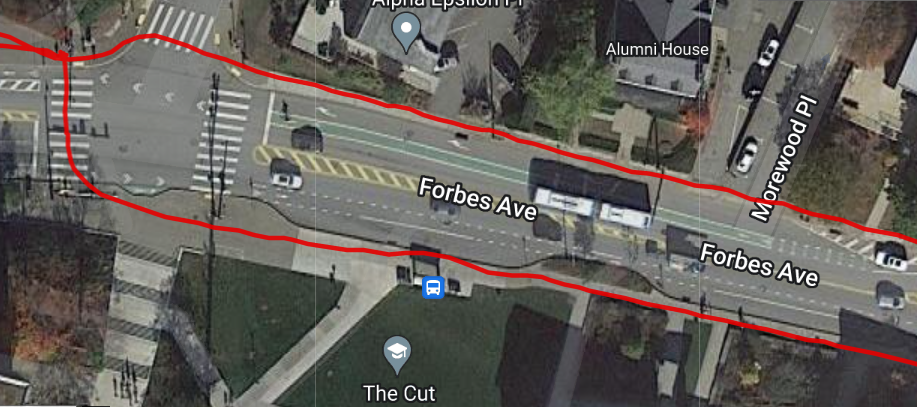

This week I spent most of my time fine-tuning the location accuracy of the device by changing configuration settings and experimenting to see which ones provide the best accuracy. We chose a GPS device with an integrated inertial measurement unit (IMU) to try to get better location accuracy. After a lot more research and testing, I came to realize that the device we are using is not meant for pedestrian use. It is really meant for applications where wheels are involved and the speed of the wheels can be measured separately and fed into the device for dead-reckoning purposes. The IMU needs to be calibrated, and the calibration tests differ depending on the mode of operation that the device is in. Most of the modes require higher speeds for accurate results, and the modes that do not require higher speeds require wheel-tick sensors, which we do not have because of our pedestrian use-case. As a result, the IMU provides highly inaccurate data after a couple of minutes of walking, so I found that just using the location data from the satellites provides fairly good accuracy as shown in the picture below. For some configurations like the one I was using, the device uses a low-pass filter on the location data to get better accuracy. It can be observed that I was on the sidewalk the entire time.

The problems start to come in when the connection to the satellites gets worse. The system works well when out in the open, providing enough accuracy to decide which side of the street we are on; the average urban street width is ~3 meters (10 feet). With a measured accuracy of about 1 meter out in the open, our device is accurate enough to determine which side of the street we are on. When the number of connected satellites drops, we encounter a lot of noise, too much to be able to determine which side of the street we are on. In the photo below, I was walking on the south side of Winthrop St. up against a few tall buildings, which tricked the device into thinking that I was walking up the middle of the street, when in reality I was still walking up the same sidewalk on the south side of the street. This would be a great situation for dead-reckoning with the IMU to come into play; however, due to our low speeds and our device not being rigid enough, the IMU data hurts us more than it helps us.

The location problems may be fixable by adding some hysteresis to the code that determines which side of the street we are on. However, in a dense urban environment we need to figure out how to incorporate the IMU data better, because we are not currently accurate enough to meet our use-case requirement of 1 meter accuracy.
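As a rough illustration of the hysteresis idea, here is a minimal sketch. It is not our actual code: the class name, the `band_m` threshold, and the convention that a positive offset means "north of the street centerline" are all assumptions made for the example. The side only flips once the estimated position is clearly past a dead band around the centerline, so noisy fixes near the middle of the street do not change the decision.

```python
class SideOfStreetEstimator:
    """Side-of-street decision with a hysteresis band (hypothetical sketch).

    offset_m is the signed lateral distance from the street centerline in
    meters. Assumed convention: positive = north side, negative = south side.
    """

    def __init__(self, band_m=1.5, initial_side=None):
        self.band_m = band_m      # assumed half-width of the dead band
        self.side = initial_side  # last confident decision, or None

    def update(self, offset_m):
        if offset_m > self.band_m:
            self.side = "north"
        elif offset_m < -self.band_m:
            self.side = "south"
        # Inside the band the fix is ambiguous, so keep the previous side.
        return self.side


est = SideOfStreetEstimator(band_m=1.5, initial_side="south")
# Noisy fixes drift toward the middle of the street but never clear the band.
sides = [est.update(o) for o in (-2.0, -0.4, 0.6, 1.2, -1.0)]
```

With these inputs the estimate stays on the south side throughout, which is the behavior we would want in the Winthrop St. case. The band width would need to be tuned against real street widths and observed noise.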

I also made some hard-coded routes for us to test with and potentially use in our final demo if we cannot figure out a way to route to sidewalks by the time the project is due.

Next week I will give one final shot at trying to get better accuracy using the IMU, and if I cannot get anything better, we will have to settle for what we have right now. Zach and I are also going to try to wrap the project up and come up with a good demo plan for the final presentation, possibly with a hard-coded route. I am also going to finalize our use-case measurements on the hardware side and see which ones we made and which ones we did not make.

Team status report 12/3/2022

This week we spent time refining our project, implementing our state transitions, and gathering test data (battery life, test routes). We are also rescoping our project to the Pittsburgh area. This helps us specify which unfriendly crosswalks we will be avoiding, instead of tailoring our algorithm to a more general case.

This upcoming week, we will be preparing for our final presentation, and doing testing and fine-tuning for our project. We expect both team members to present, with each presenting around half of the content.

Zachary’s status report 12/3/2022

This week I spent most of my time implementing the state transitions for routing the user, and testing that implementation. The state transitions are working correctly on our default test case of forbes-morewood to craig-fifth, but I will spend more time next week to do testing to make sure that it is correct. Tomorrow, I will be working with Colin on the final presentation, and I will be spending the next week performing testing, polishing our project, and getting ready for the final demo.

Colin’s Status Report for 11/19/2022

This week I focused on finishing up with the hardware for the project. I can interface with all of the necessary hardware to be able to gather/output everything that we need. I developed a simulation test bench so that Zach will be able to test his routing code with some pre-configured route data. We then hope to be able to take the device on a walk soon and be able to see the device giving feedback when necessary to be able to walk a full route.

Next week I plan to order the full battery that we plan on using, as well as adding a 3.5mm audio jack to the outside of the device so that users can plug their preferred audio devices into the box for feedback purposes. I also plan to work closely with Zach in order to incorporate re-routing capabilities as well as improving our accuracy as much as possible.

Team status report 11/19/2022

A hard problem we are facing this week is coming up with a heuristic to determine whether a crosswalk can be avoided or not. If a route has multiple blind-unfriendly crosswalks, choosing to avoid or not avoid one can have consequences for subsequent unfriendly crosswalks as well. Currently, we think a flat distance budget may be the right option (i.e. avoiding blind-unfriendly crosswalks should not increase trip length by more than X meters in total). If we instead apply the limit per crosswalk (avoiding a blind-unfriendly crosswalk should not increase trip length by more than X distance or percentage), the aggregate trip length could become very inflated, even if each individual crosswalk does not increase trip length by that much.
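To make the difference between the two options concrete, here is a small sketch. It is a simplification, not our routing code: it assumes each unfriendly crosswalk has a known, independent detour cost in meters, which ignores the interaction between crosswalks described above. The function names and the example numbers are made up for illustration.

```python
def avoid_with_total_budget(detours_m, budget_m):
    """Avoid crosswalks in route order while the summed detour fits the budget."""
    used = 0.0
    decisions = []
    for d in detours_m:
        if used + d <= budget_m:
            used += d
            decisions.append(True)   # avoid this crosswalk
        else:
            decisions.append(False)  # accept this crosswalk
    return decisions, used


def avoid_with_per_crosswalk_cap(detours_m, cap_m):
    """Avoid every crosswalk whose own detour is under the cap."""
    decisions = [d <= cap_m for d in detours_m]
    used = sum(d for d, avoid in zip(detours_m, decisions) if avoid)
    return decisions, used


# Hypothetical detour costs (meters) for four unfriendly crosswalks.
detours = [80.0, 90.0, 70.0, 60.0]
total_plan, total_extra = avoid_with_total_budget(detours, budget_m=200.0)
capped_plan, capped_extra = avoid_with_per_crosswalk_cap(detours, cap_m=100.0)
```

In this example the total budget avoids the first two crosswalks and adds 170 m, while the per-crosswalk cap avoids all four and adds 300 m, even though no single detour exceeds 100 m.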

Next week we plan on doing more integration and testing as a group, as we need to make sure that the software and hardware components are working together well, and that we are covering edge cases properly. We hope to be able to run a full cached route with feedback by the end of next week.

Zachary’s status report 11/19/2022

This week I worked on finishing up the code that generates verbal commands to direct users, and on outputting that feedback using a text-to-speech library (pyttsx3). I also wrote code to orient users at the start of the trip. This helps users know which direction they need to rotate to begin the trip (left, slight left, right, slight right, turn around, no action). Tomorrow, I will meet with Colin to do integration and testing for our mini-demo on Monday.
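The orientation step can be sketched roughly as follows. This is an illustration rather than the code in our repository: the function name and the angle thresholds (15, 60, and 150 degrees) are assumptions, and both inputs are taken to be compass bearings in degrees measured clockwise from north.

```python
def orientation_command(heading_deg, target_bearing_deg):
    """Map the user's heading and the bearing of the first leg to a command."""
    # Signed smallest rotation in [-180, 180); positive means clockwise (right).
    diff = (target_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    magnitude = abs(diff)
    if magnitude <= 15.0:       # assumed tolerance for "already facing it"
        return "no action"
    if magnitude >= 150.0:      # assumed cutoff for facing the wrong way
        return "turn around"
    side = "right" if diff > 0 else "left"
    return f"slight {side}" if magnitude < 60.0 else side


commands = [
    orientation_command(0, 10),     # nearly aligned
    orientation_command(350, 20),   # wraps past north
    orientation_command(90, 0),     # quarter turn counterclockwise
    orientation_command(0, 180),    # facing the opposite way
]
```

The resulting string could then be spoken with pyttsx3, for example by calling `say` on an engine created with `pyttsx3.init()` and then `runAndWait`.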

Next week I want to start implementing two of the more advanced concepts: redirecting, and a distance-based heuristic for whether to avoid an unfriendly crosswalk. I will also start testing by writing a suite of unit tests for my code.

Zachary’s status report 11/12/2022

This week I spent some time setting up a basic communication protocol with Colin for the interim demo. We were able to get good feedback from the instructors about what issues need to be solved, which I’ll talk about at the end. I also continued working on the response to requests in the backend, particularly returning a string that indicates how far the user has left until the next action (i.e. a turn). If the next action is <5m away, I instruct them to prepare to do the next action. Once their distance from the previous checkpoint is >5m, and their distance to the next checkpoint is decreasing, I increment my cache index, indicating that the user is on the next portion of the trip.
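The checkpoint logic above can be sketched like this. It is one reading of the rule, not the backend code itself: the `RouteTracker` class, its attribute names, and the returned strings are hypothetical, and positions are given as (x, y) in meters on a local plane to keep the example short, whereas the real system works with GPS coordinates.

```python
import math


class RouteTracker:
    """Tracks progress along cached checkpoints (hypothetical sketch)."""

    def __init__(self, checkpoints, threshold_m=5.0):
        self.checkpoints = checkpoints
        self.index = 1               # checkpoint of the upcoming action
        self.threshold_m = threshold_m
        self.reached = False         # True once within threshold of it
        self.prev_following = None   # last distance to the checkpoint after it

    def update(self, pos):
        d_target = math.dist(pos, self.checkpoints[self.index])
        has_following = self.index + 1 < len(self.checkpoints)
        d_following = (
            math.dist(pos, self.checkpoints[self.index + 1])
            if has_following else None
        )
        if d_target < self.threshold_m:
            self.reached = True
            self.prev_following = d_following
            return "prepare for next action"
        if self.reached and has_following:
            decreasing = d_following < self.prev_following
            self.prev_following = d_following
            if decreasing:
                # Past the checkpoint and closing in on the one after it.
                self.index += 1
                self.reached = False
                return f"{d_following:.0f} m to next action"
        return f"{d_target:.0f} m to next action"


tracker = RouteTracker([(0, 0), (0, 100), (100, 100)])
messages = [tracker.update(p) for p in [(0, 50), (0, 97), (10, 100)]]
```

Requiring the distance to the following checkpoint to decrease guards against advancing the index when the user merely wanders back out of the 5 m radius the way they came.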

A critical piece of feedback we got this week was: how do we know which side of the street we’re on, and whether there is a crosswalk when we turn? I have been reading up on the HERE API to address this problem, but have not found a feasible solution so far, as most features of the API, such as this one, are focused on car routing instead of pedestrian routing. An alternative I’ve been considering is reviewing other APIs, such as Google Maps, to see if they have functionality that can solve this issue. In the worst case, if we are unable to solve this, the most brute-force approach is to assume that at any checkpoint, the user may have to cross a crosswalk. That is, we would tell the user that they may have to cross an intersection, and to use their walking cane/other senses to determine if they actually need to.