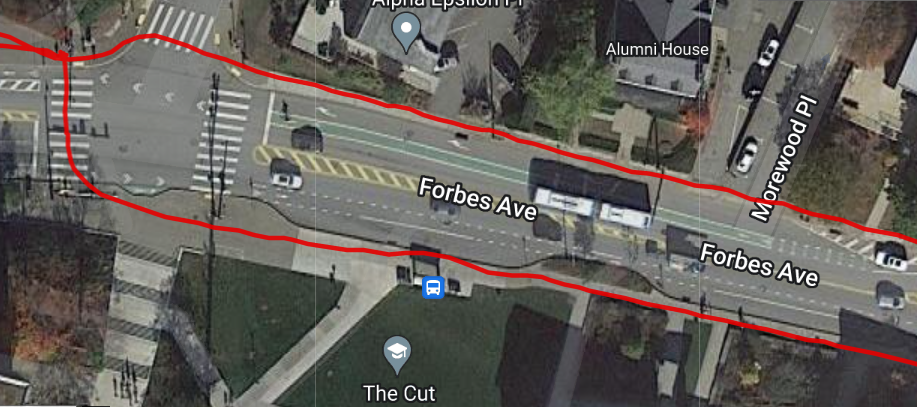

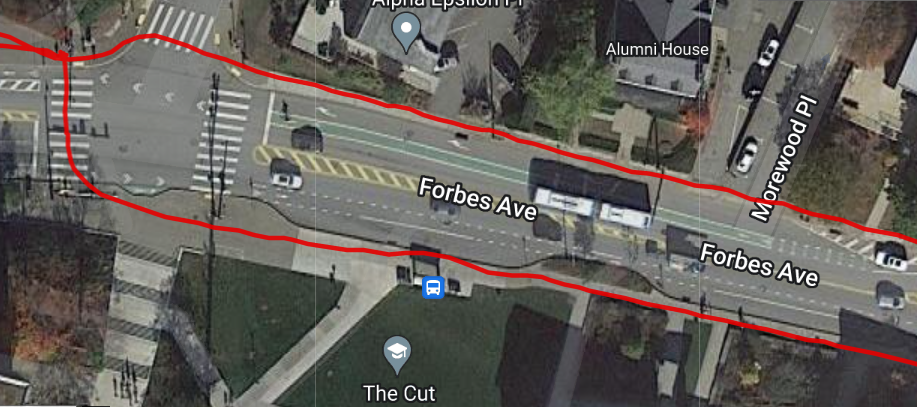

This week I spent most of my time fine-tuning the location accuracy of the device by changing configuration settings and experimenting to see which ones provide the best accuracy. We chose a GPS device with an integrated inertial measurement unit (IMU) in the hope of getting better location accuracy. After more research and testing, I realized that the device we are using is not meant for pedestrian use. It is designed for wheeled applications, where wheel speed can be measured separately and fed into the device for dead reckoning. The IMU needs to be calibrated, and the calibration procedure differs depending on the device's mode of operation. Most modes require higher speeds for accurate results, and the modes that do not require higher speeds need wheel-tick sensors, which we do not have because of our pedestrian use case. As a result, the IMU data becomes highly inaccurate after a couple of minutes of walking.

Using only the location data from the satellites, on the other hand, gives fairly good accuracy, as shown in the picture below. In some configurations, including the one I was using, the device applies a low-pass filter to the location data to improve accuracy. The track shows that I stayed on the sidewalk the entire time.
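The receiver's own filter design is not documented here, so the following is only a minimal sketch of the general idea, assuming a first-order low-pass filter (an exponential moving average) applied independently to latitude and longitude. The class `LowPassFilter` and its `alpha` parameter are hypothetical and are not the device's actual configuration settings.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class LowPassFilter:
    """First-order low-pass filter (exponential moving average) for GPS fixes.

    alpha is a hypothetical smoothing factor in (0, 1]: smaller values smooth
    more but make the estimate lag further behind the true position.
    """

    alpha: float = 0.3
    _state: Optional[Tuple[float, float]] = field(default=None, init=False, repr=False)

    def __post_init__(self) -> None:
        if not 0.0 < self.alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")

    def update(self, lat: float, lon: float) -> Tuple[float, float]:
        """Blend a new (lat, lon) fix into the filtered estimate and return it."""
        if self._state is None:
            # First fix: nothing to blend with yet, so pass it through.
            self._state = (lat, lon)
        else:
            prev_lat, prev_lon = self._state
            # Move the estimate a fraction alpha of the way toward the new fix.
            # Blending raw degrees is fine at walking scale but ignores the
            # +/-180 degree longitude wrap.
            self._state = (
                prev_lat + self.alpha * (lat - prev_lat),
                prev_lon + self.alpha * (lon - prev_lon),
            )
        return self._state
```

A smaller `alpha` suppresses more jitter but makes the estimate trail further behind someone who is actually moving, which is the usual trade-off with this kind of filter.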

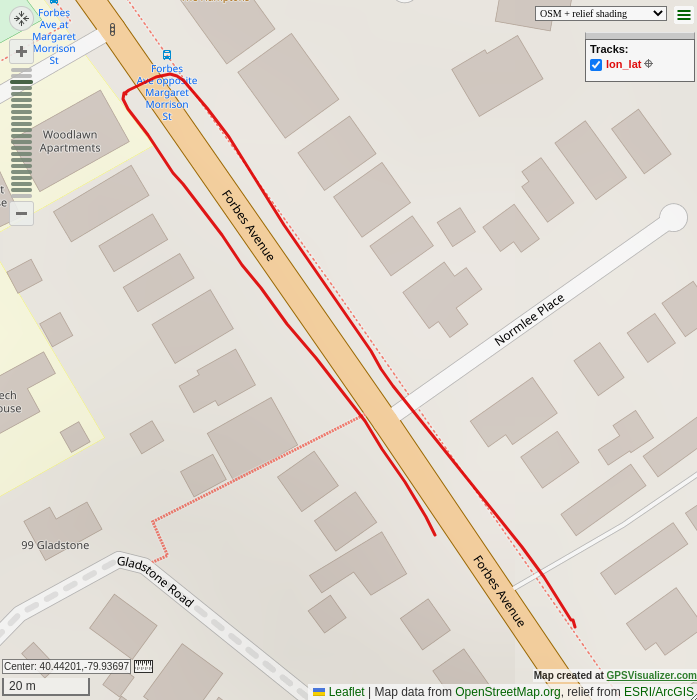

Problems start to appear when the connection to the satellites gets worse. Out in the open, the system works well: the average urban street width is roughly 3 meters (10 feet), and with a measured accuracy of about 1 meter in open-sky conditions, the device is accurate enough to determine which side of the street we are on. When the number of satellites the device is connected to drops, however, the position becomes too noisy to make that determination. In the photo below, I was walking on the south side of Winthrop St. next to a few tall buildings, which tricked the device into thinking I was walking up the middle of the street, when in reality I stayed on the same sidewalk on the south side of the street. This is exactly the situation where dead reckoning with the IMU should help; however, because of our low walking speeds and the device not being rigid enough, the IMU data hurts us more than it helps.
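To make the accuracy-versus-street-width argument concrete, here is a minimal sketch of one way a side-of-street check could work. It assumes the street is described by two hypothetical centerline points, that the receiver reports a horizontal accuracy estimate in meters, and it uses a flat-earth (equirectangular) approximation that is only valid over short distances. The function names and the rule of answering `"unknown"` when the accuracy radius reaches the centerline are illustrative assumptions, not our actual code.

```python
import math
from typing import Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def to_local_xy(lat: float, lon: float, ref_lat: float, ref_lon: float) -> Tuple[float, float]:
    """Convert degrees to meters east (x) and north (y) of a reference point.

    Equirectangular approximation: fine over a single street segment,
    not over long distances or near the poles.
    """
    x = math.radians(lon - ref_lon) * EARTH_RADIUS_M * math.cos(math.radians(ref_lat))
    y = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return x, y


def signed_offset_m(pos: LatLon, start: LatLon, end: LatLon) -> float:
    """Perpendicular distance (m) of pos from the centerline start->end.

    Positive means left of the start->end direction, negative means right.
    """
    bx, by = to_local_xy(*end, *start)  # centerline direction, start at origin
    px, py = to_local_xy(*pos, *start)
    length = math.hypot(bx, by)
    if length == 0.0:
        raise ValueError("start and end must be different points")
    # z-component of the 2-D cross product (end - start) x (pos - start),
    # divided by the segment length to get a distance in meters.
    return (bx * py - by * px) / length


def side_of_street(pos: LatLon, start: LatLon, end: LatLon, h_acc_m: float) -> str:
    """Return "left" or "right", or "unknown" if the accuracy radius reaches the centerline."""
    offset = signed_offset_m(pos, start, end)
    if abs(offset) <= h_acc_m:
        return "unknown"
    return "left" if offset > 0 else "right"
```

Under this rule, a 1-meter accuracy leaves a usable margin for someone on a sidewalk at least half a street width from the centerline, whereas once the reported accuracy grows past that distance, the check can only answer `"unknown"`.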

Adding some hysteresis to the code that determines which side of the street we are on may help with these location problems. However, in a dense urban environment we need to figure out how to incorporate the IMU data more effectively, because we are not currently accurate enough to meet our use-case requirement of 1-meter accuracy.
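As a rough illustration of the hysteresis idea, the sketch below debounces the side-of-street decision. It assumes the code already has a signed offset from the street centerline for each fix (positive for left, negative for right); `SideHysteresis`, `margin_m`, and `confirm_samples` are hypothetical names and untuned values, not parameters from our system. The reported side only flips after several consecutive fixes land clearly on the other side, so a noisy excursion toward the middle of the street is ignored as long as it is shorter than the confirmation window.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SideHysteresis:
    """Debounce a side-of-street decision from a stream of signed offsets.

    offset_m > 0 means left of the street centerline, < 0 means right.
    The reported side only changes after the offset has been beyond
    +/- margin_m on the other side for confirm_samples consecutive fixes.
    """

    margin_m: float = 1.0      # hypothetical dead-band half-width, meters
    confirm_samples: int = 3   # hypothetical number of consecutive fixes required
    side: Optional[str] = None
    _pending: int = field(default=0, init=False, repr=False)

    def update(self, offset_m: float) -> Optional[str]:
        if offset_m > self.margin_m:
            candidate: Optional[str] = "left"
        elif offset_m < -self.margin_m:
            candidate = "right"
        else:
            candidate = None  # inside the dead band: no evidence either way

        if candidate is None or candidate == self.side:
            # A fix in the dead band or on the current side resets the count.
            self._pending = 0
        else:
            self._pending += 1
            # Accept the first decision immediately; afterwards require
            # confirm_samples consecutive fixes before switching sides.
            if self.side is None or self._pending >= self.confirm_samples:
                self.side = candidate
                self._pending = 0
        return self.side
```

Resetting the counter on dead-band fixes is a conservative choice: it trades slower reaction to a real street crossing for fewer false switches when the signal degrades.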

I also made some hard-coded routes for us to test with, which we may also use in our final demo if we cannot figure out how to route along sidewalks before the project is due.

Next week I will make one final attempt at getting better accuracy using the IMU; if I cannot improve on our current results, we will have to settle for what we have now. Zach and I are also going to try to wrap up the project and come up with a good demo plan for the final presentation, possibly using a hard-coded route. I am also going to finalize our use-case measurements on the hardware side and determine which requirements we met and which we did not.