This week I made a lot of progress on building the device and gathering our first test data. I decided to build the first (and possibly final) iteration of the device out of a lightweight aluminum casing material. This material provides strong structural support while still letting us meet our use case weight requirement. The aluminum also acts as a ground plane for our antennas, and I took particular care to make the dimensions of the box work well with the GPS antenna. The main frequencies that we will be using are 1.2GHz – 1.5GHz, which corresponds to wavelengths of roughly 8 to 10 inches (about 10 inches at 1.2GHz). I made the dimensions of the box 10 inches (one wavelength) by 5 inches (half a wavelength) to try to get better resonance and help with noise. I will look further into whether we can use a particular band that would resonate better with the case, because 1.2GHz – 1.5GHz is a fairly large range and the case cannot be tuned to every frequency in it.
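As a quick sanity check on the box dimensions, here is a minimal sketch of the free-space wavelength calculation at both ends of the band. The function name `wavelength_inches` is just for illustration, and this ignores any effect the antenna or enclosure has on the effective wavelength.

```python
# Free-space wavelength at the edges of the 1.2GHz - 1.5GHz band.
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact, by definition of the meter
METERS_PER_INCH = 0.0254             # exact, by definition of the inch


def wavelength_inches(freq_hz: float) -> float:
    """Return the free-space wavelength in inches for a frequency in Hz."""
    return SPEED_OF_LIGHT_M_S / freq_hz / METERS_PER_INCH


if __name__ == "__main__":
    for freq_ghz in (1.2, 1.5):
        full = wavelength_inches(freq_ghz * 1e9)
        print(f"{freq_ghz} GHz: wavelength {full:.2f} in, half {full / 2:.2f} in")
```

The 10 inch by 5 inch box lines up best with the 1.2GHz end of the range; at 1.5GHz the wavelength is closer to 8 inches.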

The case with all electronics, not including the battery, weighs ~18 ounces, and the battery that we plan on using weighs ~14 ounces. This gives us a total weight of ~32 ounces, which is under our use case requirement of ~35 ounces (1kg).

I tested with a 4000 mAh battery that I already had; it is not the battery that we plan on using in the end. Over the 1-2 hours that I was using it, the device only used up about 1/3 of its charge. That is a good sign: at the same rate of draw, the 26800 mAh battery that we plan to use should let us hit our use case requirement of 16 hours of battery life.
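The battery estimate can be sketched as a simple linear extrapolation. This assumes the current draw stays constant, that both batteries deliver their rated capacity at a comparable voltage, and that "about 1/3" is accurate; the function name `estimated_runtime_hours` is just for illustration.

```python
def estimated_runtime_hours(test_capacity_mah: float,
                            fraction_used: float,
                            test_hours: float,
                            target_capacity_mah: float) -> float:
    """Extrapolate runtime on a larger battery from a short test run.

    Assumes a constant average current draw (a simplification).
    """
    avg_current_ma = test_capacity_mah * fraction_used / test_hours
    return target_capacity_mah / avg_current_ma


if __name__ == "__main__":
    # The test ran for somewhere between 1 and 2 hours, so check both ends.
    for hours in (1.0, 2.0):
        runtime = estimated_runtime_hours(4000, 1 / 3, hours, 26800)
        print(f"test length {hours:.0f} h -> estimated runtime {runtime:.1f} h")
```

Even in the worst case (1/3 of the charge gone after only 1 hour), the estimate comes out at about 20 hours, which leaves some margin over the 16 hour requirement.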

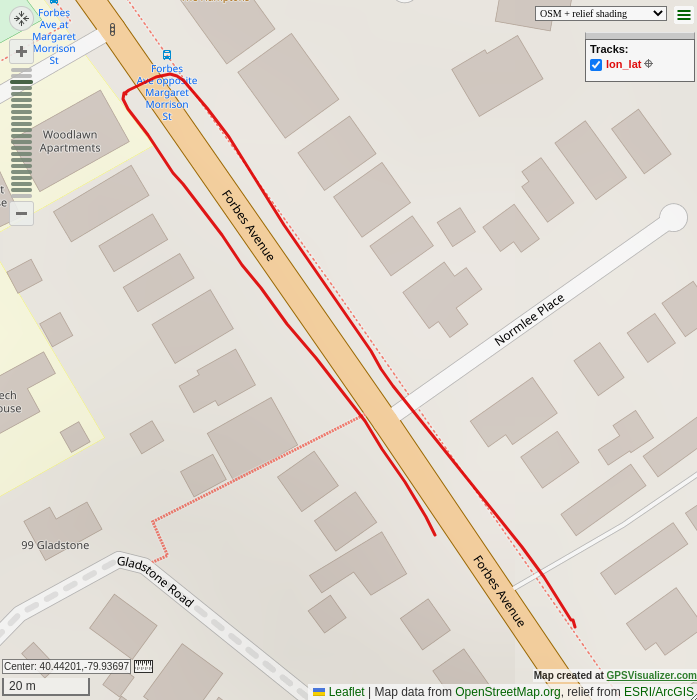

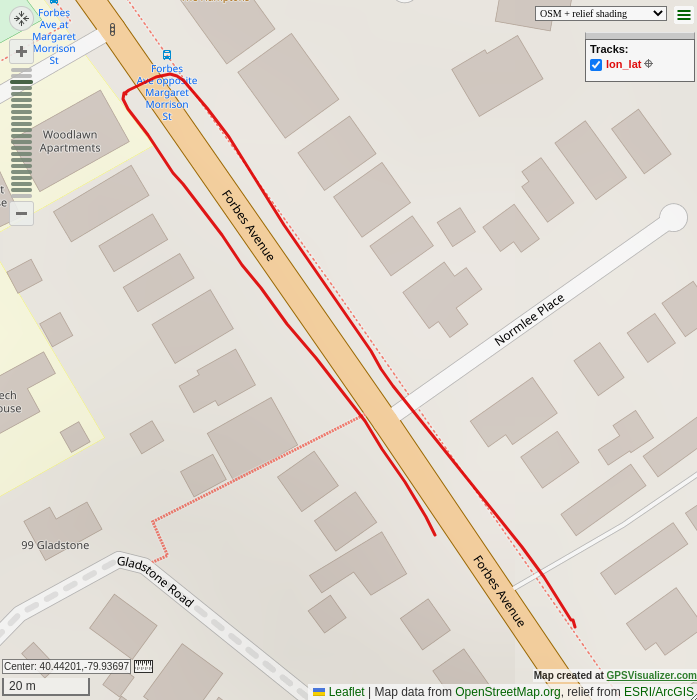

I walked a route with the device to see whether it gathers reasonably accurate data. Below is the route I walked: I started going down Forbes Ave towards the soccer field, then crossed the road and walked back up Forbes Ave. I gathered a location point once every second and used gpsvisualizer.com to visualize the data. The track clearly shows that I walked down one side of the street and back up the other, which means that we have fairly good location accuracy. There are more settings and ways to increase the accuracy on the ZED-F9R GPS unit that I have not had a chance to change, but they should get us even better results.

(The dotted-line paths on the map are not data gathered by us; they are markers on the map for other purposes.)
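One way to put a number on how well the two sides of the street are separated is to compute the great-circle distance between a logged point on the way down and one on the way back. Below is a minimal sketch using the haversine formula. The two coordinates are hypothetical placeholders, not points from our log, and the mean Earth radius of 6,371 km is an approximation.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (approximation)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


if __name__ == "__main__":
    # Hypothetical points, one per side of the street (NOT our logged data).
    outbound = (40.44430, -79.94360)
    inbound = (40.44445, -79.94360)
    print(f"separation: {haversine_m(*outbound, *inbound):.1f} m")
```

Comparing that separation against the real width of the street would give a rough feel for the cross-track error.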

This week I accomplished most of my goals: gathering test data and building an iteration of the physical device so that we can start collecting data. I did not end up buying the battery that we plan on using because I found one lying around that works well for testing; I will buy the real battery later on. Next week I would like to collaborate more with Zach so that we can start incorporating more of the routing code into the device. I would also like to do more analysis on the heading of the device and see how accurate it is. The GPS device has different modes depending on the application, and I believe that a different mode could give us more accurate results for walking, so I would like to experiment with the GPS unit more next week.