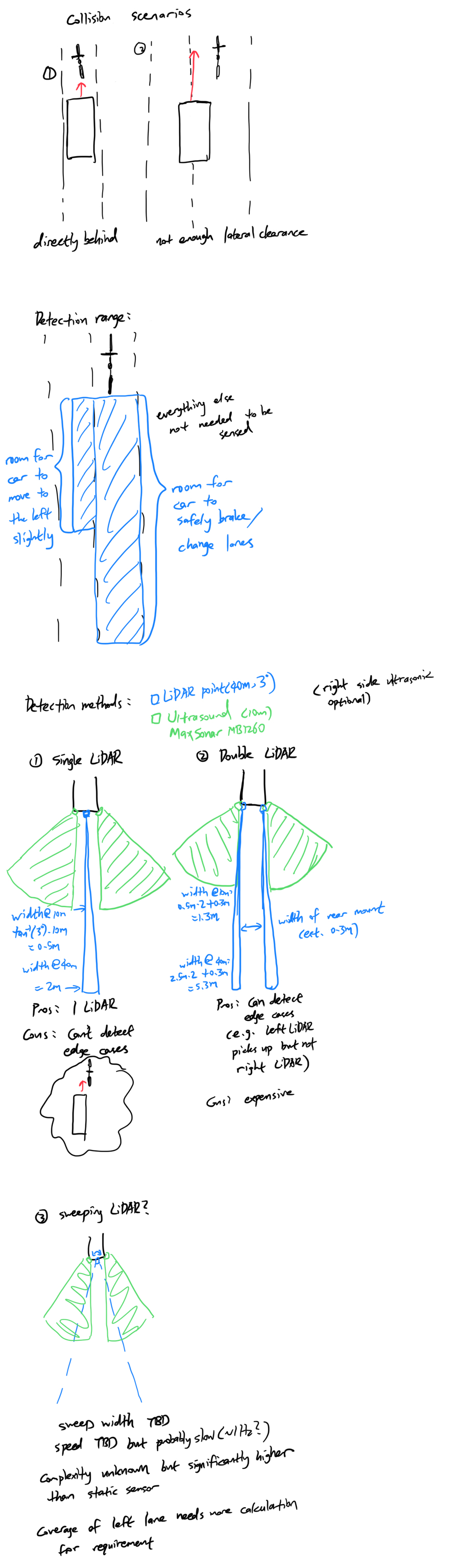

This week we’ve made a lot of hardware updates to our project idea based on the feedback we received on our project proposal. We are looking into using a static sensor array rather than a single 360-degree LIDAR sensor. This will hopefully allow us to feed new inputs to the system more often.

We are also looking into using both ultrasonic sensors and LIDAR. LIDAR gives us more detection distance (up to 40 m in favorable conditions), but it is less reliable in bright lighting conditions and could be affected by our LED indicators on the back. The ultrasonic sensors can’t cover as much distance as the LIDAR sensors, but they aren’t affected by the LED indicators. We plan on using them as the primary short-range sensors, at roughly 10 m or less. That way, when the LED indicators are on, we know that the short-range sensing isn’t being adversely affected.
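To make the sensor split concrete, here is a minimal sketch of how one zone might pick between its two range readings. It is not our final design: the function name `fuse_range`, the use of `None` for "no detection", and the choice to fall back to LIDAR when the ultrasonic sensor sees nothing are all assumptions for illustration. Only the 10 m and 40 m figures come from the plan above.

```python
from typing import Optional

ULTRASONIC_MAX_M = 10.0  # short-range cutoff from the plan above (~10 m)
LIDAR_MAX_M = 40.0       # best-case LIDAR range from the plan above


def fuse_range(ultrasonic_m: Optional[float],
               lidar_m: Optional[float]) -> Optional[float]:
    """Pick one distance estimate for a zone, or None if nothing is detected.

    Hypothetical helper: each argument is a distance in meters, or None
    when that sensor reports no object.
    """
    # Prefer the ultrasonic reading at short range, since it is not
    # affected by the LED indicators.
    if ultrasonic_m is not None and 0.0 < ultrasonic_m <= ULTRASONIC_MAX_M:
        return ultrasonic_m
    # Assumption: otherwise fall back to LIDAR for anything within its range.
    if lidar_m is not None and 0.0 < lidar_m <= LIDAR_MAX_M:
        return lidar_m
    return None
```

For example, `fuse_range(4.0, 4.5)` uses the ultrasonic value, while `fuse_range(None, 25.0)` relies on LIDAR alone.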

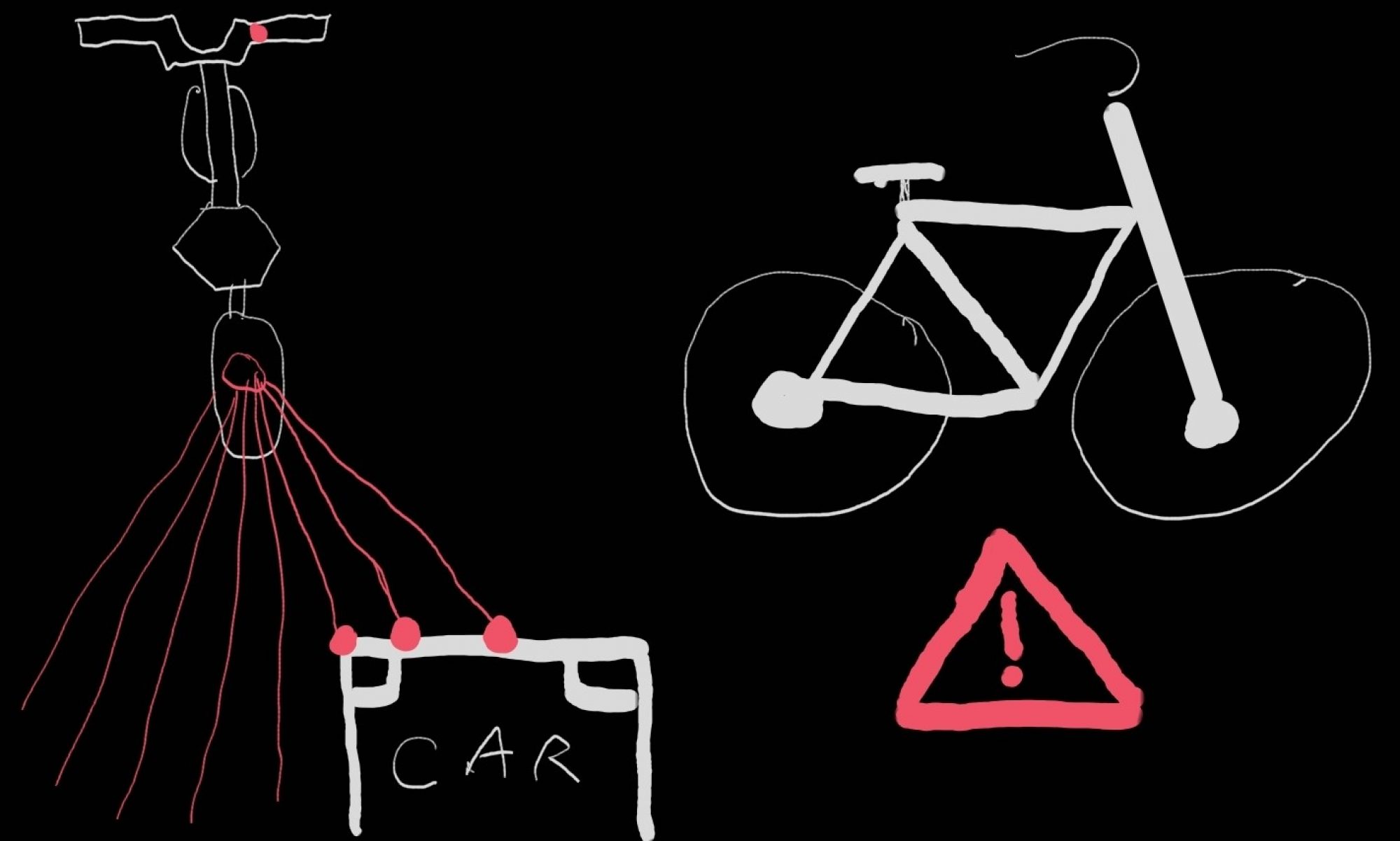

We had originally planned on using an LED strip because the 360-degree LIDAR would give us enough information to report where nearby objects were with more granularity. However, especially since we are switching to static sensors, we decided not to attempt to provide that much information. This will help us avoid overloading the cyclist with excess information: the cyclist doesn’t need to know the width of the object closing in behind them, they just need to know that there is something generally to the back left, to the back right, or directly behind them. To that end, we have decided to go with zone LED indicators, similar to the side indicators on a car.

We received some questions about how exactly we are going to communicate the conditions behind the cyclist to them. We plan on using a system where a steady light in a zone indicates the presence of an object that is tracking behind them, with stationary objects filtered out. Then, when there is what we consider a danger, such as something closing in fast or something very close to the bike, we are going to flash the LED for that zone. We are aware that we need to pick LEDs that are bright enough to work in daylight without blinding or distracting the cyclist, especially at night.
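The indicator behavior described above could be sketched roughly as follows. This is only an illustration under assumptions we have not settled on: the threshold values, the function name `zone_led_state`, and the idea of estimating an object's ground speed as bike speed plus closing speed are all hypothetical. A stationary object behind a moving bike recedes at about the bike's own speed, so its estimated ground speed comes out near zero and it is filtered.

```python
from enum import Enum
from typing import Optional


class LedState(Enum):
    OFF = "off"
    STEADY = "steady"
    FLASHING = "flashing"


# Hypothetical thresholds, not values we have chosen yet.
DANGER_DISTANCE_M = 3.0         # "very close to the bike"
DANGER_CLOSING_MPS = 5.0        # "closing in fast"
STATIONARY_TOLERANCE_MPS = 0.5  # ground speed below this counts as stationary


def zone_led_state(distance_m: Optional[float],
                   closing_speed_mps: float,
                   bike_speed_mps: float) -> LedState:
    """Decide the LED state for one zone.

    closing_speed_mps is positive when the gap to the object is shrinking.
    """
    if distance_m is None:
        return LedState.OFF  # nothing detected in this zone

    # Estimated speed of the object over the ground (assumption: same heading).
    object_ground_speed = bike_speed_mps + closing_speed_mps
    if abs(object_ground_speed) < STATIONARY_TOLERANCE_MPS:
        return LedState.OFF  # filter out stationary objects

    if distance_m <= DANGER_DISTANCE_M or closing_speed_mps >= DANGER_CLOSING_MPS:
        return LedState.FLASHING  # danger: very close or closing fast

    return LedState.STEADY  # an object is tracking behind the cyclist
```

With these placeholder numbers, a car holding 15 m back at roughly the bike's speed gives a steady light, and the same car closing at 6 m/s makes the zone flash.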

From here, we are going to research these changes, look into which specific parts and brands are practical options, and integrate them into our design.