This week I worked out how much capacity our system's battery will need. To do so, I found the current draw of each component. Along the way, I learned about luminous efficacy (light output in lumens per watt of electrical power, sometimes loosely called luminous efficiency) and found that the LEDs we had planned to use were extremely inefficient. Since our system runs off a battery, conserving power is very important, so I picked out a different set of LEDs. I went with addressable LED strips because they have a higher luminous efficacy (above 100 lm/W, which is acceptable for red LEDs) and would let us output more light over a wider area, as well as adjust their output if necessary.
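Comparing LEDs this way comes down to one ratio: light output divided by electrical input power. The sketch below shows that calculation. The function name and all of the example lumen, voltage, and current figures are hypothetical placeholders, not values from our actual parts, so real numbers should come from each LED's datasheet.

```python
def luminous_efficacy(lumens: float, voltage_v: float, current_a: float) -> float:
    """Return luminous efficacy in lumens per watt (light out / electrical power in)."""
    power_w = voltage_v * current_a  # electrical input power in watts
    if power_w <= 0:
        raise ValueError("electrical power must be positive")
    return lumens / power_w


# Hypothetical datasheet values, for illustration only.
old_led = luminous_efficacy(lumens=20.0, voltage_v=2.0, current_a=0.5)  # 20 lm/W
strip = luminous_efficacy(lumens=300.0, voltage_v=5.0, current_a=0.5)  # 120 lm/W
print(f"old LED: {old_led:.0f} lm/W, LED strip: {strip:.0f} lm/W")
```

The higher the lm/W figure, the less battery current is needed for the same amount of light.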

Once I had picked the LEDs, I added their current draw to that of the other components, which gave a maximum current draw of 5.5 A. Our average draw should be much lower than this, since the lights won't always be on and won't always be driving all three RGB channels at full brightness. Additionally, the system will have an overall on/off switch, so we won't need to supply 5.5 A for 10 hours. Assuming 1 hour of on time and 8 hours of standby/off, we should need a battery of at least 5 Ah.
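The capacity estimate is a sum of current × time over each operating phase (on, standby). Here is a minimal sketch of that arithmetic. The function name, the optional headroom `margin`, and the 0.05 A standby current are all hypothetical assumptions, since the post doesn't give a standby figure. Plugging in the full 5.5 A for the whole hour gives a worst-case upper bound; because the lights will rarely run at maximum brightness, the real average draw, and so the capacity needed, should be lower.

```python
from __future__ import annotations


def required_capacity_ah(profile: list[tuple[float, float]], margin: float = 1.0) -> float:
    """Sum current (A) x duration (h) over each phase of a duty cycle, in amp-hours.

    profile: (current_a, hours) pairs, one per operating phase.
    margin: headroom multiplier (e.g. 1.2 for 20% extra); 1.0 adds none.
    """
    return margin * sum(current_a * hours for current_a, hours in profile)


# Worst case: the 5.5 A maximum draw for 1 hour of on time.
# The 0.05 A standby current for 8 hours is a hypothetical placeholder, not a measurement.
profile = [(5.5, 1.0), (0.05, 8.0)]
print(f"worst-case capacity: {required_capacity_ah(profile):.2f} Ah")
```

Replacing 5.5 A with a measured average current for the on phase would give a more realistic figure than this worst case.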

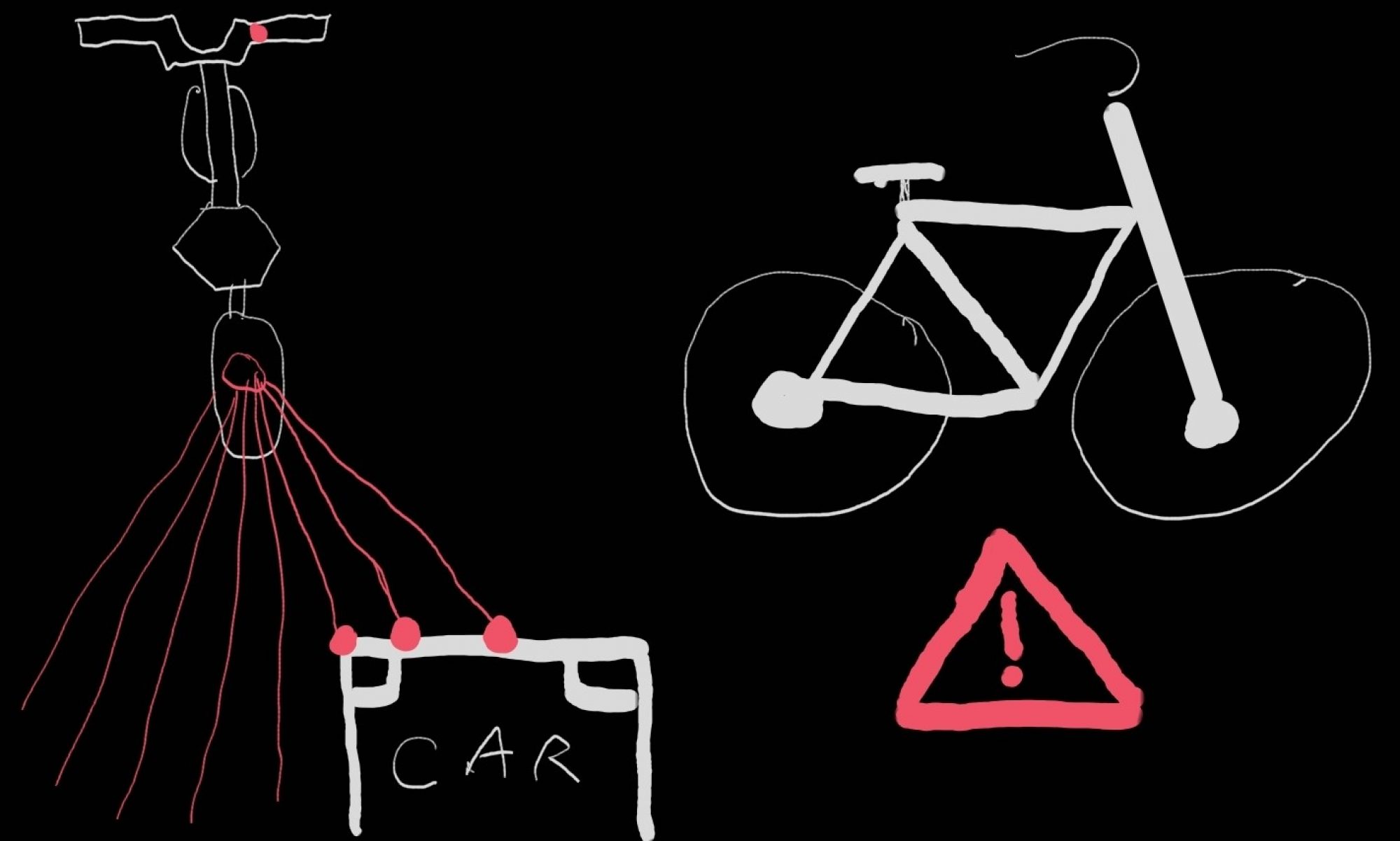

This week I also worked on the slides for the presentation. I updated the schedule and the overview drawing and added some new drawings. Since I will also be giving the presentation, I practiced delivering it.