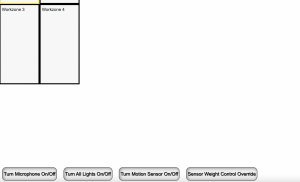

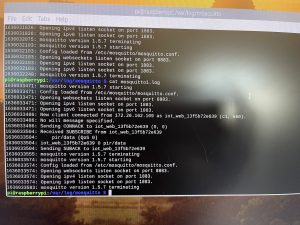

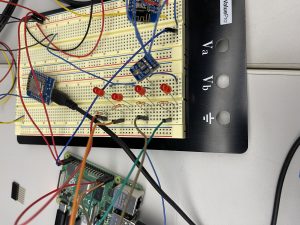

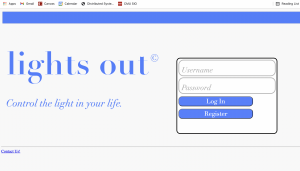

This week was final presentations, and I presented for our group on Monday. I spent most of the week on the presentation, putting together slides and meeting with my teammates over Thanksgiving break to outline and fill them in. Sunday was mostly spent testing the product in the lab and recording videos for our presentation (as shown below). I recorded a video of two work stations turning on when controlled from the web server, with the corresponding work zones changing color on the web server's GUI. I also recorded a video of the lights on the GUI changing color when the physical lights turned on because of sensed movement rather than a signal from the web server. We also ran tests to show that our product meets our requirements for latency, accuracy, and distance.

Wednesday consisted of watching more final presentations and filling out the peer review Google Forms. This coming week we plan to go into the lab on Wednesday to make our final video, do more testing, and have a full-scale MVP ready to demo the final product. The web server is fully functional at this point and supports all the features previously mentioned: authentication, controlling the individual lights and sensors, setting the weight of each sensor from user input, displaying the current weights, and a GUI that shows the user exactly which zones are on.