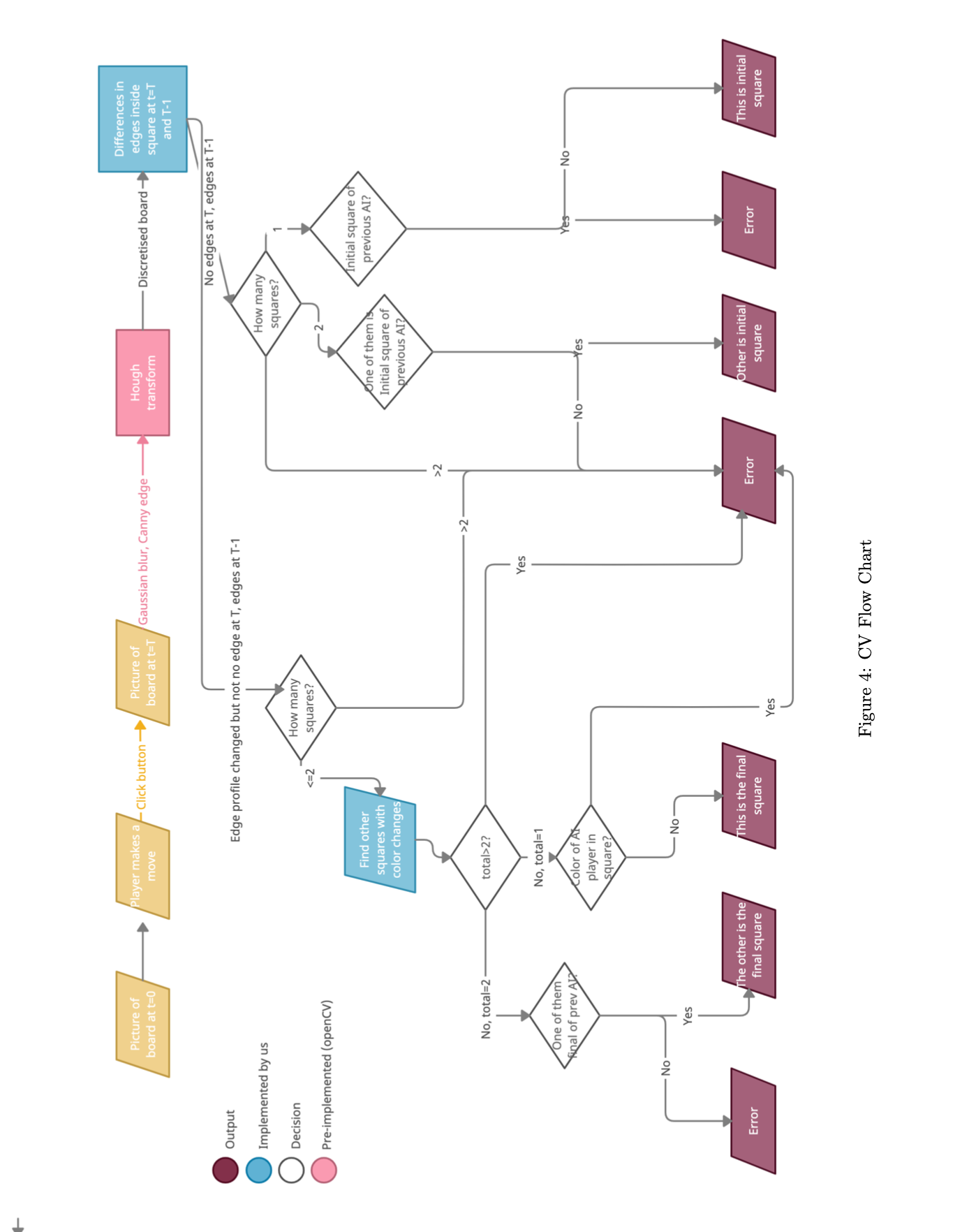

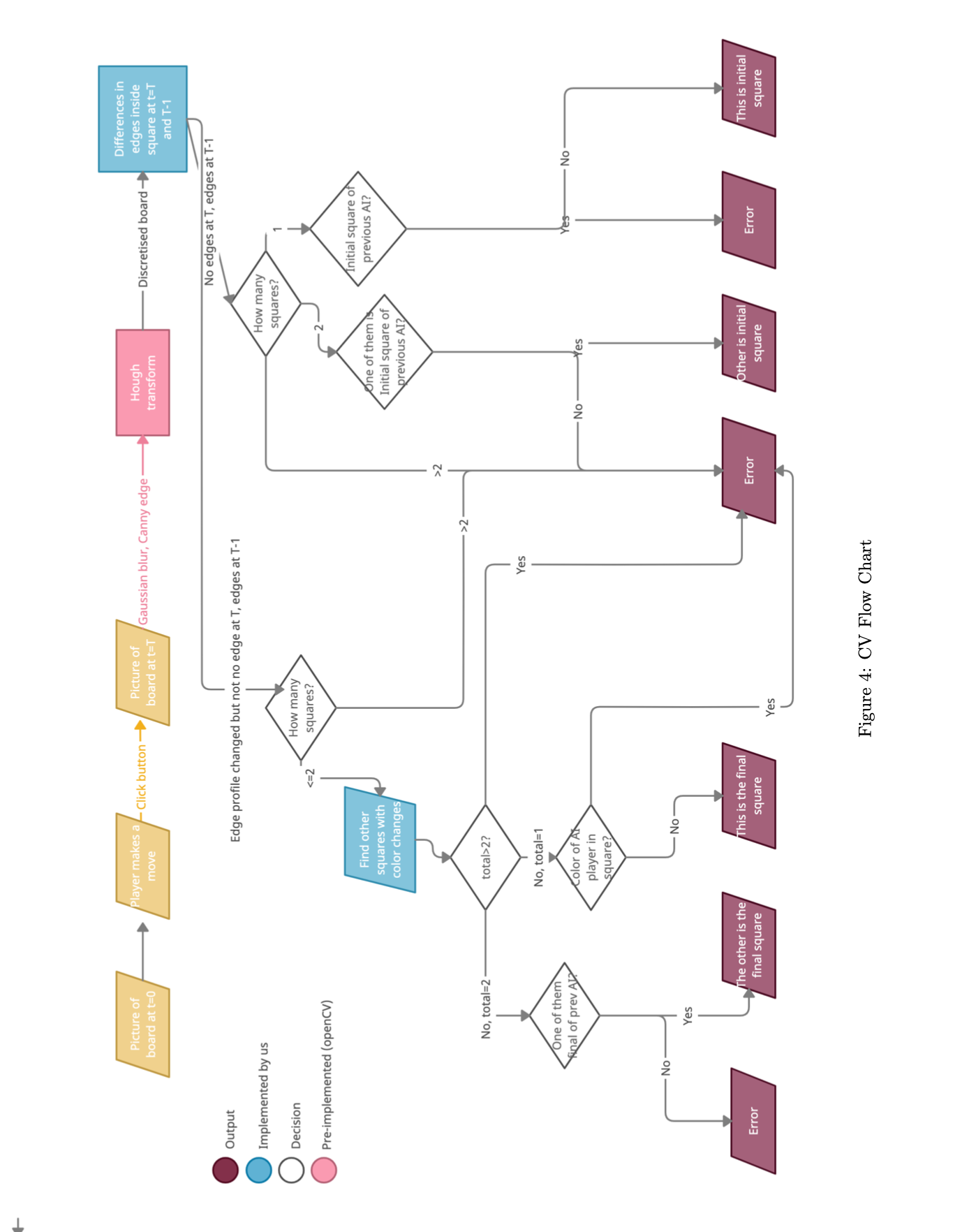

The week of 10/16 I mostly worked on the design report. I wrote the introduction, the design requirements (move detection), the architecture overview, the design trade studies for computer vision (edge detection, and piece vs. change detection) and the system description (move detection). I also worked on a flowchart representing the CV pipeline. This took a considerable amount of time because there were a lot of cases to consider and it still had to be as readable as possible.

I also spent time coming up with more design requirements that would help us measure the performance of the Computer Vision. For example, how far can the center of the piece be from the center of the square and still be detected correctly?

I spent a lot more time on the design report than I had predicted, and spent most of the week of 10/23 trying to catch up on the actual project.

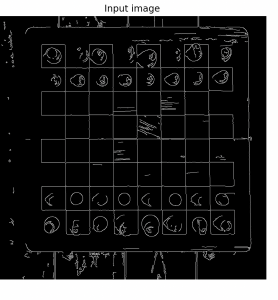

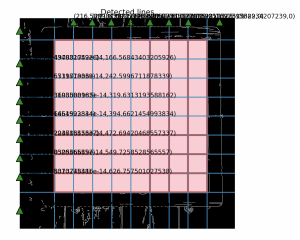

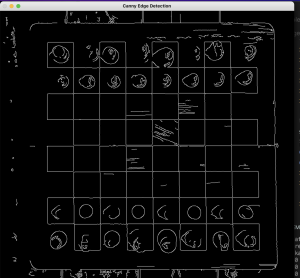

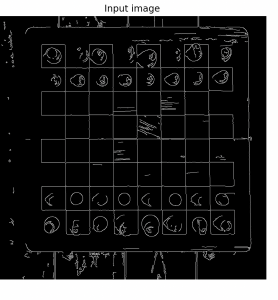

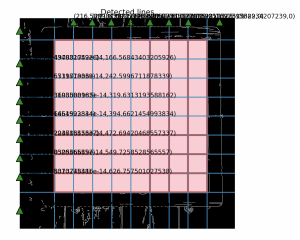

I spent the first 2 days working out how to recover the grid lines from the input image once we have detected edges. A picture is attached below of the input image and the detected lines that correctly form the grid.

The way I did this was to first apply the Hough transform and then iterate through the peaks. The peaks were of the form (angle, dist), and I got the coordinates of a point on each line by:

`(x0, y0) = dist * np.array([np.cos(angle), np.sin(angle)])`

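Here angle is the direction of the line’s normal (the point above is where the perpendicular from the origin meets the line), so the slope of the line itself is the tangent of angle + π/2.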
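As a minimal sketch of this conversion, assuming each peak follows the normal form x·cos(angle) + y·sin(angle) = dist that the point formula above implies: the line is perpendicular to its normal, so its slope is tan(angle + π/2), which equals -cos(angle)/sin(angle). The function names `line_from_peak` and `axis_intercepts` are hypothetical and not taken from the project code. The second helper derives the axis intercepts used in the next step.

```python
import numpy as np

def line_from_peak(angle, dist):
    """Return a point on the line and the line's slope for one Hough peak.

    Assumes `angle` is the direction of the line's normal, in radians.
    """
    x0, y0 = dist * np.array([np.cos(angle), np.sin(angle)])
    c, s = np.cos(angle), np.sin(angle)
    # The line is perpendicular to its normal: slope = tan(angle + pi/2) = -c/s.
    slope = float("inf") if np.isclose(s, 0.0) else -c / s
    return (x0, y0), slope

def axis_intercepts(angle, dist):
    """Return (x_intercept, y_intercept) of x*cos(angle) + y*sin(angle) = dist.

    An intercept is None when the line is parallel to that axis.
    """
    c, s = np.cos(angle), np.sin(angle)
    x_int = None if np.isclose(c, 0.0) else dist / c  # crossing with y = 0
    y_int = None if np.isclose(s, 0.0) else dist / s  # crossing with x = 0
    return x_int, y_int
```

With this convention, a perfectly vertical line (angle = 0) only has an x intercept and a perfectly horizontal line (angle = π/2) only has a y intercept, which is why the two families of lines are handled separately.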

Once I had this, I found the intersection points of these lines with either y=0 (for the vertical lines) or x=0 (for the horizontal lines). Then, for each vertical line, I iterated through all the horizontal lines and formed rectangles with the coordinates:

`[[this_line, this_hor_line], [next_line, this_hor_line], [this_line, next_hor_line], [next_line, next_hor_line]]`

Here this_line is the x-axis intercept of the current vertical line and next_line is that of the next one.

Similarly, this_hor_line is the y-axis intercept of the current horizontal line (as we iterate through the lines) and next_hor_line is that of the next one.
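The nested iteration described above can be sketched as follows. This is only an illustration: the function name `build_squares` is hypothetical, and it assumes the intercepts have already been collected into two lists (one for vertical lines, one for horizontal lines) and that the lines are close enough to axis-aligned that the intercepts alone describe each rectangle.

```python
def build_squares(x_intercepts, y_intercepts):
    """Form one rectangle per pair of adjacent vertical and horizontal lines.

    Each rectangle is [top-left, top-right, bottom-left, bottom-right],
    with every corner given as [x, y].
    """
    xs = sorted(x_intercepts)  # vertical lines, left to right
    ys = sorted(y_intercepts)  # horizontal lines, top to bottom
    squares = []
    for this_line, next_line in zip(xs, xs[1:]):
        for this_hor_line, next_hor_line in zip(ys, ys[1:]):
            squares.append([
                [this_line, this_hor_line], [next_line, this_hor_line],
                [this_line, next_hor_line], [next_line, next_hor_line],
            ])
    return squares
```

With 9 vertical and 9 horizontal lines this yields the 64 squares of the board, ordered column by column.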

One tricky part was ensuring that we didn’t end up with extra lines towards the left and right due to the border of the chessboard being detected as an edge. We corrected for this in the following way:

- Find the middle 2 vertical lines and calculate the gap between them.

- Go left and right from these 2 lines, subtracting or adding the gap each time, to get all the other lines.

- Stop when enough lines have been placed, so that the edge of the board or any noise does not affect the output.
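A rough sketch of the correction steps above, under some assumptions of mine: the function name `regularize_lines` and the parameters `n_left` and `n_right` are hypothetical, and how many lines to place on each side depends on which detected lines end up being the middle pair. The defaults of 3 and 4 give 9 lines in total and match the case where spurious lines appear equally on both sides.

```python
def regularize_lines(detected, n_left=3, n_right=4):
    """Rebuild evenly spaced grid lines from the two middle detected lines.

    `detected` holds the x intercepts of all detected vertical lines,
    possibly including spurious ones near the border of the board.
    """
    pos = sorted(detected)
    if len(pos) < 2:
        raise ValueError("need at least two detected lines")
    mid = len(pos) // 2
    left, right = pos[mid - 1], pos[mid]   # the middle two lines
    gap = right - left                     # width of one square
    # Step outward by one gap at a time; stopping after a fixed number of
    # lines means border lines and noise never enter the result.
    lines = [left - k * gap for k in range(n_left, 0, -1)]
    lines += [left, right]
    lines += [right + k * gap for k in range(1, n_right + 1)]
    return lines
```

For example, with true grid lines every 10 pixels from 10 to 90 and two spurious border lines at 2 and 97, the sketch returns only the nine evenly spaced positions.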

Once I had that working, I began work on actually determining the move. Once we had the grid, this was surprisingly not as hard to implement as I expected. I did the following:

- Iterate through each rectangle

- Get the edges inside the square

- Apply the logic of the flowchart above
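The first two steps above might look like the sketch below. It only extracts the edge pixels inside each rectangle and counts them; the flowchart decision itself is not reproduced here. The function name `edge_counts` and the `margin` parameter (which skips a few pixels near the grid lines so they are not counted as piece edges) are assumptions for illustration, as is the rectangle layout of [top-left, top-right, bottom-left, bottom-right] with [x, y] corners.

```python
import numpy as np

def edge_counts(edges, squares, margin=2):
    """Count edge pixels inside each rectangle of a 2D edge map.

    `edges` is a 2D array that is nonzero where an edge was detected.
    `margin` (hypothetical) shrinks each rectangle to avoid the grid lines.
    """
    counts = []
    for top_left, _top_right, _bottom_left, bottom_right in squares:
        x0, y0 = (int(round(v)) for v in top_left)
        x1, y1 = (int(round(v)) for v in bottom_right)
        # Rows are indexed by y and columns by x in image arrays.
        cell = edges[y0 + margin:y1 - margin, x0 + margin:x1 - margin]
        counts.append(int(np.count_nonzero(cell)))
    return counts
```

The per-square counts (or the cropped `cell` arrays themselves) would then be the input to whatever decision logic is applied to each square.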

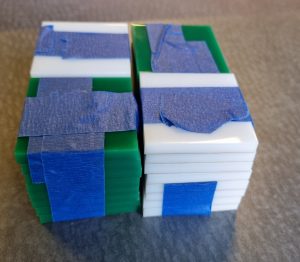

One issue I had, however, is that the pictures I am testing on are of a wooden chessboard, so the squares contain a few extra lines from the wood. I am using these pictures because the 64 squares we are making aren’t ready yet (we have 32). However, I confirmed with Demi that this will not be a problem for us because we don’t use wood and our pieces are smooth.

Now that I have most of the harder things working, I am going to test on the chessboard we purchased. From preliminary testing, this seems to work a lot better than my original chessboard picture because it doesn’t have the wood problem. This is what I plan to do this week. Yoorae will be helping out as well, since CV is a shared task due to its complexity.

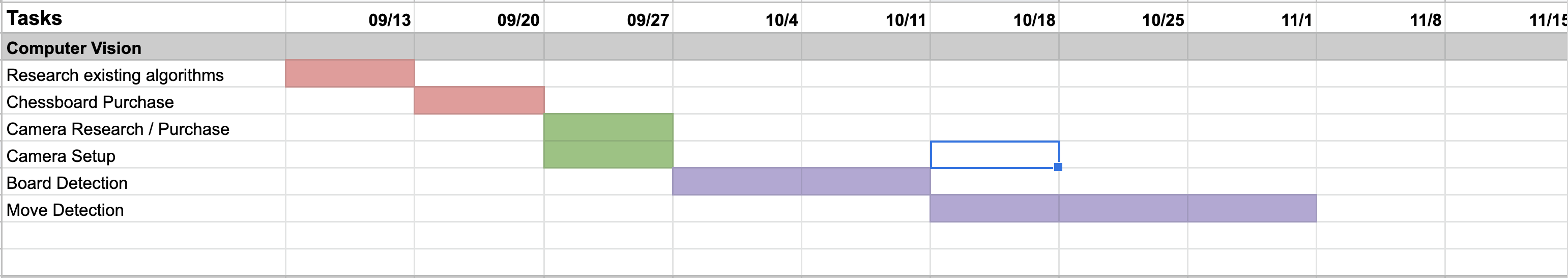

I’m behind schedule but I am confident I will catch up this week. Most of this was due to not allocating enough time for the design review. However, I have also been doing the “optimizing for speed” thing in parallel, so that is not a different task anymore. This week, I will be working on completing move detection and being ready to test when Demi finishes the board.

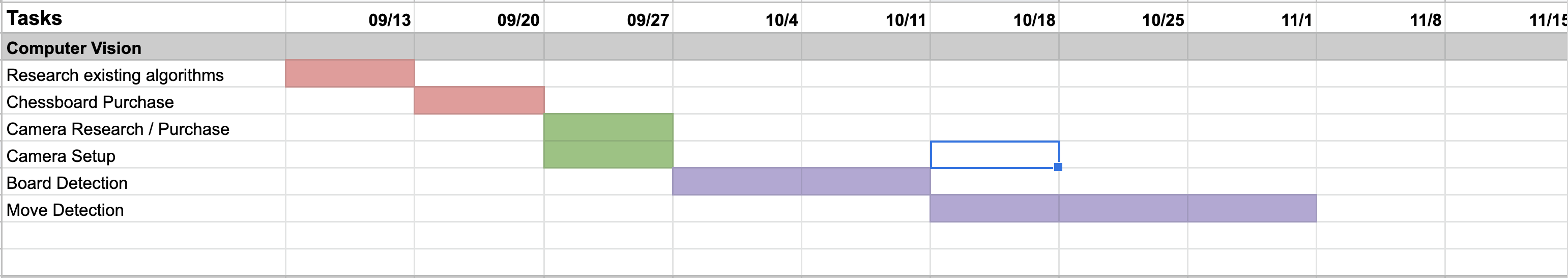

I updated the Gantt chart for myself to allow more time for move detection. I was expected to be done with it last week, but I have updated that to finish this week instead. The color for 11/1 is just for testing on the actual chessboard because I have to wait for Demi to finish it.

I plan to catch up within the first day or two of this week. I have also been doing some work on figuring out how we will integrate with Stockfish (e.g., how the moves will be sent to it), and that integration seems fairly simple. There shouldn’t be too many complex tasks left after this week.

Deliverable: Completed move detection, tested on at least the sample chessboard. If I get the actual chessboard, I will also test on that. I will also calculate metrics such as accuracy, distance from the center, etc., as described in the design report. I aim to have preliminary testing reports out this week.