Team’s Status Report for 10/2/20

We looked through the feedback provided by our peers on our proposal presentation. As of now, there are no major changes to the project in terms of the design and the schedule. One thing that we discussed was whether we should market our project as more of a platform/framework rather than a final product to justify our design choices. We will continue to discuss this idea and see if there are any changes to be made to the overall design of the project. In the meantime, we were able to finalize all of the parts that we need and have placed orders for the parts that could be a hindrance in the near future.

Vishal’s Status Report for 10/2/20

This week, I was able to accomplish a few things towards the completion of our capstone project. I started off with my most important task, which was to design the UI model. I began with simple sketches for multiple scenarios. These scenarios included the home screen, choosing the difficulties and different types of focuses for the workout, as well as the actual pages shown while the workouts are taking place. I tried to solidify and refine these compared to what was presented in the proposal but the final version will be dependent on. I also began the basic pygame framework and have it set up on my laptop. I will need to further refine this to be applicable to our design as well as our project. I would have liked to make more progress with the pygame application, but we do not have our webcam yet, so I was not able to test out the image capture process fully. I have been working with the laptop webcam to see if the same scenario is feasible for capturing and displaying video. I will have to do a bit more testing to integrate that with pygame and see if multi-threading is feasible with pygame. In terms of my progress, I am a little bit behind right now, but I don’t see that being a problem because the schedule has a bit of slack for me. My plan for next week is to fully integrate the video feed with the pygame application and have images be captured periodically. I will also be trying to integrate recorded videos into the pygame application.

Albert’s Status Report for 10/2/20

This week, I finished downscaling an image to a 160×120 pixel image by using the Pillow library in Python. I researched the OpenCV algorithms for color tracking, and I broke the library function calls down into for loops over a 2D array of pixels (for future conversion to HDL). I implemented the conversion from RGB to HSV. I also collected the mask of the pixels that fit in the HSV range of a certain color. The mask is then put through an erosion and then a dilation in order to get rid of noise. Finally, using the algorithm to find the largest rectangle in a binary matrix, I found the center of the tracker, which will be the reference for the joint in the posture analysis.
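As a rough illustration of the last step, here is a minimal sketch of the classic histogram-based approach to finding the largest all-ones rectangle in a binary mask and taking its center. The function names (`max_area_histogram`, `max_area_rectangle`, `get_center`) and the return formats are hypothetical choices for this sketch, written with plain loops over a 2D list; the actual implementation may differ.

```python
def max_area_histogram(heights):
    """Return (area, left, right, height) of the largest rectangle under a histogram."""
    best = (0, 0, 0, 0)
    stack = []  # indices of bars, kept in increasing height order
    for i, h in enumerate(list(heights) + [0]):  # trailing 0 flushes the stack
        while stack and heights[stack[-1]] >= h:
            top = stack.pop()
            height = heights[top]
            left = stack[-1] + 1 if stack else 0
            area = height * (i - left)
            if area > best[0]:
                best = (area, left, i - 1, height)
        stack.append(i)
    return best


def max_area_rectangle(mask):
    """Return (area, top, left, bottom, right) of the largest all-ones rectangle."""
    if not mask:
        return (0, 0, 0, 0, 0)
    heights = [0] * len(mask[0])
    best = (0, 0, 0, 0, 0)
    for row, values in enumerate(mask):
        # heights[col] counts consecutive ones ending at the current row
        for col, value in enumerate(values):
            heights[col] = heights[col] + 1 if value else 0
        area, left, right, height = max_area_histogram(heights)
        if area > best[0]:
            best = (area, row - height + 1, left, row, right)
    return best


def get_center(rect):
    """Return the (row, col) center of a rectangle from max_area_rectangle."""
    _, top, left, bottom, right = rect
    return ((top + bottom) // 2, (left + right) // 2)
```

Processing the mask one row at a time keeps the working state to a single array of column heights, which seems like a reasonable fit for a later loop-based hardware port.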

I am currently on track with the schedule. My plan for next week is to test the algorithm on the trackers that we bought from Amazon, and to determine the lower and upper HSV bounds needed to recognize the trackers. I will also start researching the image posture analysis and help Venkata with the hardware communication protocol.

Venkata’s Status Report for 10/2/20

This week was predominantly focused on set up and research related tasks. In terms of set-up tasks, I set up the website for the rest of the team and also picked up the Kintex-7 FPGA board from the Academic Center. In terms of the research related tasks, the primary goal of the past week was to learn the UART communication protocol. I was able to familiarize myself with the protocol and be able to understand how the FPGA and the host computer will interact with one another. I was able to find code snippets that use the python library pySerial to transmit data from a computer port with UART. On the other hand, I was also able to find Verilog code snippets that resemble a UART transmitter and receiver. So, I was able to finish the task for learning the protocol.

However, this week was also allocated for beginning to implement the logic. In order to do so, I tried to run the BIST (built-in self-test) on the Kintex-7 board but am currently running into issues because the board doesn’t appear to be transmitting the expected data to the host computer. Furthermore, programming the board requires Vivado, which is not available on macOS, so I had to partition my MacBook and set up Boot Camp before I could install Vivado. For the upcoming week, I plan on resolving the issues, successfully running the BIST, and running through the UART option that is present. After that, I plan on finishing the implementation of the logic to communicate between the board and computer, which will allow me to stay on task with the schedule.

Team’s Status Report for 10/10/20

This week we discussed how we would be providing feedback to the users for our workouts. We found an optimal time interval to satisfy the requirements of the time it takes for the FPGA to perform the calculations with the live workout. We finally got most of our equipment at the end of the week, and we are still waiting on the camera. This has been a slight roadblock to our progress because a lot of our work depended on these materials. We spent a lot of time on the Design Presentation, because we had to create new graphs, flowcharts, and an updated schedule to reflect our work and progress.

Albert’s Status Report for 10/10/20

This week I wanted to test the code for the color tracking algorithm I wrote last week. I was hoping the trackers we ordered would have arrived, but there was a miscommunication between us, the TA, and Quinn. I resolved the confusion by contacting everyone and successfully ordered the trackers, the suit, and the camera. Since I did not receive the colored bands this week, I had to create my own test cases to check whether I can successfully filter out a specific color, remove some of the noise, and detect the center of the image. I created different drawings on my iPad and was able to get the correct pixels. However, I still need to test my algorithms on the actual trackers, because the upper and lower HSV bounds have to be tuned to their colors for the filters to work.

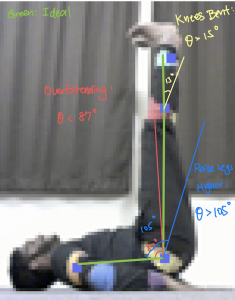

Along with testing the previous algorithms I wrote, I researched and thought over the leg raise, pushup, and lunges that we will be using as our workouts. I drew a diagram of all the joints and wrote down all the lines and angles that can be formed on a google doc. I wrote down the checks and feedback of each position as well. I was able to implement the leg raise posture analysis with some classes and methods in python. The specific thresholds will be fine tuned when we test it on the camera feed. I will still need to implement the pushup and lunges next week.
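To make the idea of angles formed at a joint concrete, the following is a minimal sketch that computes the angle at one joint from three tracked points. The function name `joint_angle` and the `(x, y)` tuple format are assumptions made for illustration, not the classes and methods described above.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at joint b formed by the segments b->a and b->c.

    Each argument is an (x, y) tuple, for example shoulder, hip, and knee.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("joints must not coincide")
    # Clamp to guard against tiny floating point overshoot before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))
```

For a leg raise, an angle like this at the hip could then be compared against a threshold that is tuned once real camera images are available.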

In terms of schedule, I am fairly on track. I was put slightly behind because of the miscommunication on the orders. I will be able to start tuning the values for the trackers. Next week, I will learn Vivado and HLS with Venkata as well as start determining the thresholds once the camera has arrived.

Venkata’s Status Report for 10/10/20

This week I ran into quite a few issues which I was able to resolve for the most part. I started off the week by continuing to try to run the BIST (built-in self-test mentioned last week) by reading through the documentation and trying to find a Vivado project that I could program to the board. However, I ran into IP issues and decided to forgo running the BIST and simply create a project that would allow me to test out the UART capabilities of the FPGA.

I started off by reading the forums to try and find existing projects that would allow us to use the UART protocol on the board and came across the AXI UARTLite IP block that allowed me to configure the baud rate and protocol. There was an example project for the IP block, which I decided to try. After fixing a multitude of issues such as licensing issues, driver issues, and compilation issues (Note: One of the compilation issues involved making non-recommended changes to the provided XDC constraints file, which I am looking into), I was finally able to transmit a character from the board to the host computer. After completing this, I wrote a Python snippet using the PySerial library that was able to listen to the appropriate COM port and receive the transmitted character.
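A host-side listener along these lines might look like the sketch below. The port name `COM3`, the baud rate of 9600, and the helper names are placeholders chosen for illustration; only `serial.Serial` and its `read` method come from pySerial.

```python
def read_characters(port, count=1):
    """Read `count` bytes from an already opened port-like object and decode them."""
    data = port.read(count)
    return data.decode("ascii", errors="replace")


def open_port(name="COM3", baudrate=9600, timeout=5.0):
    """Open a serial port; the name and baud rate here are placeholders."""
    # Imported lazily so read_characters can be exercised without hardware.
    import serial  # provided by the pySerial package

    return serial.Serial(name, baudrate=baudrate, timeout=timeout)


# Example use with a board attached (not executed here):
#     with open_port("COM3", 9600) as port:
#         print(read_characters(port))
```

Keeping the read logic separate from opening the port makes it possible to try the decoding path with any object that has a `read` method before the board is connected.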

In terms of schedule, I am on track. My goal for the past week was to finish the implementation of the communication logic but I realized that the logic is tied together with the RTL code for the implementation of the joints algorithm and so, decided to instead start working on next week’s task which involves learning about HLS.

Vishal’s Status Report for 10/10/20

This week I was able to make a bit of progress in terms of the application and user interface. I attempted to use the camera module of the pygame library but soon ran into issues, as it is only certified and optimized to work on Linux. However, we decided we wanted to develop our application on Windows, so I had to transition to using the OpenCV library instead. There was a bit of a learning curve, so I spent some time understanding the intricacies of the library.

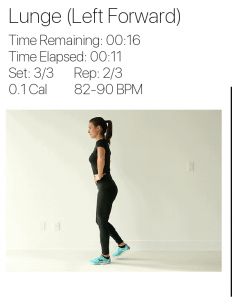

After working with OpenCV I was able to create a basic application in pygame that now has the live video embedded. It also takes pictures periodically and saves them to a local folder, from which they will be consumed by the FPGA. I’ve also been working on finding model exercises and converting them to GIFs that are displayed within the application. I’ve also begun creating a model page to collect the user’s biodata, which will be used to calculate things such as calories and heart rate. In terms of the schedule, I am currently about half a week to a week behind, as I haven’t fully finished creating the GIFs for the model and am still working on the user’s biodata page and the timed workouts.

Team’s Status Report for 10/17/20

In terms of team work, this week mostly consisted of working on the design review and design report. We took the feedback from the presentation and updated our report accordingly. We also made a few updates to our schedule to make it more reasonable and up to date.

We also worked on designing and creating the tracker suit. We realized that there were a few colors that weren’t bright enough through the webcam so we had to change them and modify the location the trackers were placed.

Albert’s Status Report for 10/17/20

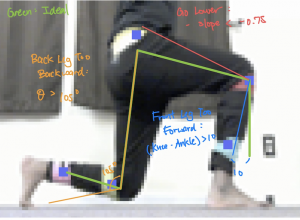

This week I started working on fine-tuning the HSV bounds for the color tracking. This was supposed to be done earlier, but we weren’t able to make the suit as there was some miscommunication in the ordering process. I had been fine-tuning the algorithm on sample images. However, fine-tuning for the dark suit that we currently have took longer than expected because the bounds have to be even more precise to cope with the noise in a real-life downscaled image. Instead of using a relatively broad range, I have to manually pinpoint the joints first. Then, I find the HSV values around those points and create an extremely precise bound to get better accuracy. I also finished implementing the posture analysis for pushups and lunges in Python to provide feedback to the user. I plan to fine-tune this portion after the image processing is set up on the FPGA. Also, since I was presenting the design review this week, I spent a lot of time polishing the presentation after everyone was done and practiced for more than 3 hours because I am not a good presenter.
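The bound-finding step can be sketched as follows, assuming the image is available as a list of rows of RGB tuples and that HSV channels are scaled to 0..255 (as in Pillow's HSV mode). The function names and the default patch radius are hypothetical.

```python
import colorsys


def rgb_to_hsv_pixel(rgb):
    """Convert an (R, G, B) pixel in 0..255 to (H, S, V), each scaled to 0..255."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return (round(h * 255), round(s * 255), round(v * 255))


def hsv_bounds(image, center, radius=2):
    """Return (lower, upper) HSV bounds over a square patch around `center`.

    `image` is a list of rows of RGB tuples and `center` is (row, col),
    the manually pinpointed tracker position.
    """
    row0, col0 = center
    rows = range(max(0, row0 - radius), min(len(image), row0 + radius + 1))
    cols = range(max(0, col0 - radius), min(len(image[0]), col0 + radius + 1))
    patch = [rgb_to_hsv_pixel(image[r][c]) for r in rows for c in cols]
    lower = tuple(min(pixel[i] for pixel in patch) for i in range(3))
    upper = tuple(max(pixel[i] for pixel in patch) for i in range(3))
    return lower, upper
```

One caveat with per-channel minimum and maximum: hue wraps around for reds, so a red tracker may need two hue ranges rather than one.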

In terms of schedule, I am more or less on track because I have started things that are due later but haven’t completed things that are already due. I have been doing a lot of verification of individual parts along the way, so integrating may take less time than we have planned. For next week, I will work with Venkata on converting the joint tracking algorithm to RTL using HLS.

Venkata’s Status Report for 10/17/20

This week was predominantly focused on learning HLS. I started reading this tutorial by Xilinx and have a rough idea of the workflow using HLS. I also talked to a couple of friends who are currently taking 18-643: Reconfigurable Logic and they pointed me to further resources for learning how to use HLS. After referring to a combination of these resources and some of the sample HLS projects that Vivado provides, I have an idea of how to go about implementing the design.

I am currently on track with the schedule. According to the schedule, this past week was for learning HLS and the upcoming week is for implementing the design. I have begun working on next week’s task for implementing the design by working with Albert to convert the existing Python code for image processing into C that can be passed into HLS.

Vishal’s Status Report for 10/17/20

This week I worked with Venkata/Albert to create the tracker suit that we will be using for our project. More details on the work done for this can be seen in the team status update for this week.

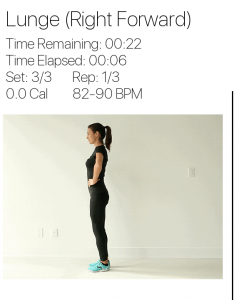

In regards to my own personal work I spent a lot of this week working on the design review report/presentation which ended up taking a major portion of my time. I was also able to make progress in creating the timed workouts. I now have each set timed and it will move onto the next set once the first set is finished. Pictures are being taken periodically as each rep finishes. The user input menu is completed now and the weight and height can be adjusted. In terms of the schedule I have updated it so that I have another week to work on the timed workouts as it ended up being a lot more work than I had anticipated. The timing for the schedule is still fine as I already had a slack week accounted for.

Team’s Status Report for 10/24/20

This week we took sample images with the webcam that recently arrived. In order to appropriately fine-tune the parameters for our posture analysis algorithm, we took multiple images at different positions of the various exercises that we plan on supporting. A major risk that we identified was that we would not be able to fully identify all of the colors. This risk is fully elaborated in Albert’s status report for this week. The contingency plans involve finding more distinct colors that would be easier to track and experimenting with different lighting and background conditions to find the optimal background for our project.

No major changes have been made to the overall design and schedule.

Albert’s Status Report for 10/24/20

We were finally able to take some demo pictures with the webcam, which we had ordered slightly later than the rest of the parts. Until now I had been working with iPhone images. A noticeable problem when I first got the webcam images is that the lighting produces a completely different shade of color from the iPhone images. We hope that the change in saturation is due to the different cameras. However, it can also be due to the lighting of the room. I ran my previous algorithm on the newly captured images and it was not pinpointing the joints. I will do some further tests next week by capturing images at night with the webcam to see if my newly acquired bounds or the old ones are able to detect the trackers. Also, the webcam image seems to come in with different dimensions than a normal picture, so there are black bars on the sides that are unnecessary for the joint tracking. Thus, I have edited my algorithm to crop out the black bars.
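One simple way to crop such side bars is sketched below, assuming the image is a list of rows of RGB tuples. The function name and the near-black threshold of 10 are hypothetical choices for illustration.

```python
def crop_black_columns(image, threshold=10):
    """Drop leading and trailing columns whose pixels are all near-black.

    `image` is a list of rows of (R, G, B) tuples. A pixel counts as black
    when its brightest channel is at or below `threshold`.
    """
    if not image or not image[0]:
        return image
    width = len(image[0])

    def is_black(col):
        return all(max(row[col]) <= threshold for row in image)

    left = 0
    while left < width and is_black(left):
        left += 1
    right = width
    while right > left and is_black(right - 1):
        right -= 1
    return [row[left:right] for row in image]
```

Only columns at the two edges are removed, so dark regions inside the frame are left untouched.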

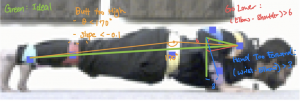

To aid me in finding the bounds, I have to manually find the center pixel of the trackers and capture the HSV bounds by finding the minimum and maximum within the area. I have to do this for every joint on a reference image. Then, I run the same bounds on a different image to ensure that the joint locations returned are in a similar area. I have to redo my fine-tuning because of the different saturation of the image. I started with the pushups this week. I had to modify the morphological transform portion because an erosion followed by a dilation would remove a lot of the important pixels I track. Thus, I changed it to 2 dilations followed by 2 erosions to better track the pixels. The picture below shows the output. The peach tracker on the elbow will have to be changed because it is too similar to the background; thus, it is not trackable. I hardcoded that position for the posture analysis portion.
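The difference between the two orderings can be seen in a small loop-based sketch. It assumes a 3×3 structuring element and treats out-of-image neighbors as absent; both are assumptions, as are the function names.

```python
def _neighbors(mask, r, c):
    """Yield the in-bounds values of the 3x3 window centered on (r, c)."""
    rows, cols = len(mask), len(mask[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                yield mask[rr][cc]


def dilate(mask):
    """A pixel becomes 1 if any pixel in its 3x3 window is 1."""
    return [[1 if any(_neighbors(mask, r, c)) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def erode(mask):
    """A pixel stays 1 only if every pixel in its 3x3 window is 1."""
    return [[1 if all(_neighbors(mask, r, c)) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def open_mask(mask, iterations=1):
    """Erosion followed by dilation: removes small specks, but also thin shapes."""
    for _ in range(iterations):
        mask = erode(mask)
    for _ in range(iterations):
        mask = dilate(mask)
    return mask


def close_mask(mask, iterations=2):
    """Dilation followed by erosion: fills small holes while keeping the shape."""
    for _ in range(iterations):
        mask = dilate(mask)
    for _ in range(iterations):
        mask = erode(mask)
    return mask
```

On a thin or hollow tracker blob, `open_mask` can erase the blob entirely, while `close_mask` fills the gaps and returns a solid region, which matches the behavior described above.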

For the posture analysis portion, I had to edit the code because, due to latency issues, we have decided to track only the second (or more important) position of the workout. For a pushup, it is the downwards motion. Since I finally have the joint positions from the image processing portion, I could do some threshold fine-tuning. I adjusted the values of the slopes and angle comparisons to fit our model. The current model gives me the correct feedback when I feed in the up position for the pushup analysis. It outputs “Butt is too high” and “Go Lower” because it detects that the hip joint and elbow joint do not match the slope and angle of the pushup model. To improve this, we will have to capture more images of faulty pushups so that I can fine-tune it further.

I am on schedule in terms of the image processing portion, but am slightly behind on the posture analysis portion. Since the posture analysis portion is easier to implement and fine-tune fully once the application and system are set up, I will work on helping my teammates with their portions. I will get to the lunge and leg raise posture analysis fine-tuning when I have spare time from helping Venkata with the RTL portion. We seem to have found a library in HLS to help us do the image processing portion. However, the bounds that I find will still be useful for the HLS code. Since the joint tracking algorithm and posture analysis are very sensitive to real-life noise, I expect the integration portion to change constantly, so I will keep updating my algorithm on a weekly basis.

Venkata’s Status Report for 10/24/20

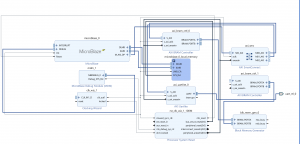

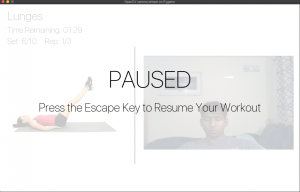

The past week and the upcoming week are allocated for working on the HLS implementation. Unfortunately, I was roadblocked at the start of the week since I was having trouble with HLS and wasn’t sure how to proceed. I then met Zhipeng (who the Professor introduced me to) and he was able to address my questions and pointed me to use the MicroBlaze soft-core CPU that would be responsible for controlling the interactions that take place on the FPGA. I also updated the block diagram that exists on the FPGA to be the following.

Note: It is not complete as it still requires the IP core that is responsible for the image processing. This core will connect to the appropriate AXI ports that have been left unconnected.

I then started learning how to use the MicroBlaze core, which required adding a new piece of technology (Vitis) as the current version of Vivado does not have an SDK to program the MicroBlaze core. I was able to program the core and stream appropriate information via UART. I am looking into the other components in the block diagram and how to control them.

I am on track with the schedule. This week, I hope to be able to learn how to control the various components specified in the block diagram and also have the image processing IP core done.

Vishal’s Status Report for 10/24/20

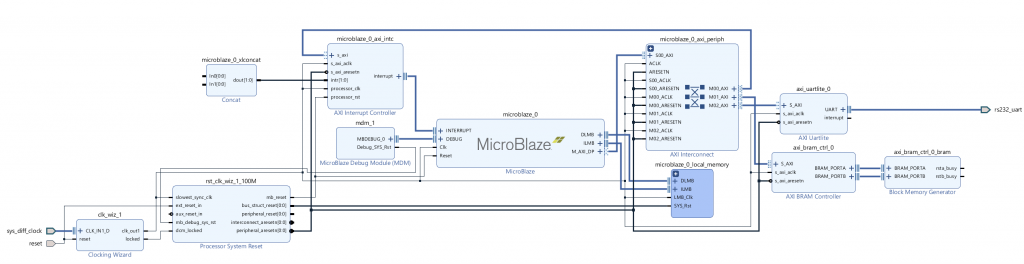

The past two weeks I have been working on creating the custom timed workouts as well as wrapping up the calculations for calories burned + average heart rate.

For calories burned I did a bit of research into the MET (metabolic equivalent) values for various workouts. From my research I found that push-ups have a MET value of 7.55, leg raises have a MET value of 2.23, and lunges have a MET value of 2.80. I used the following formula to calculate the average calories burned as well as heart rate in the code:

I was able to complete this section and it will accurately reflect how many calories are burned. I also take into account during rest sections that the MET value will decrement at rate of 20% per minute.
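As a rough sketch of how such a calculation could look, the block below uses the commonly cited relation kcal/min = MET × 3.5 × weight (kg) / 200, which may differ from the formula used in the application. The 20% per minute rest decrement is modeled here as a multiplicative decay with a floor of 1.0 MET; the decay model, the floor, and all function names are assumptions.

```python
# MET values quoted in the report above.
MET_VALUES = {"pushup": 7.55, "leg_raise": 2.23, "lunge": 2.80}


def calories_per_minute(met, weight_kg):
    """Commonly cited estimate: kcal/min = MET * 3.5 * weight_kg / 200."""
    return met * 3.5 * weight_kg / 200.0


def workout_calories(exercise, weight_kg, minutes):
    """Calories burned while performing `exercise` for `minutes`."""
    return calories_per_minute(MET_VALUES[exercise], weight_kg) * minutes


def rest_calories(start_met, weight_kg, rest_minutes, decay=0.20, floor_met=1.0):
    """Calories burned during rest, with the MET decaying each whole minute.

    Assumption: the MET drops by `decay` (20%) of its current value per minute
    and never falls below `floor_met` (roughly the resting rate).
    """
    total = 0.0
    met = start_met
    for _ in range(rest_minutes):
        met = max(floor_met, met * (1.0 - decay))
        total += calories_per_minute(met, weight_kg)
    return total
```

For example, under these assumptions a 70 kg user doing push-ups would burn about 9.25 kcal per minute of active work.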

In terms of the timed workout I was not able to fully finish them as I had anticipated as I had a very rough schedule this week (midterms etc.) and not too much time to finish. I have all the necessary media, models and templates at this point to finish but still have a few more buttons and pages to add. I will be finishing this by Monday (10/26) at the latest and will be almost ready for integration.

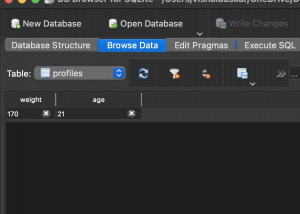

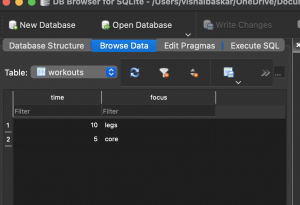

I have also begun researching in more depth how the data will be stored so that it can be tied into the application. SQLite will be adequate and a safe way to store the data in a relational manner. I have the function headers for storing the workout data set up but will be adding the implementation this upcoming week.
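A minimal sketch of such storage functions, using Python's built-in `sqlite3` module, could look like this. The table name, column names, and function names are hypothetical and are not taken from the application.

```python
import sqlite3


def create_schema(conn):
    """Create the (hypothetical) workouts table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS workouts (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               performed_at TEXT NOT NULL,
               exercise TEXT NOT NULL,
               reps INTEGER NOT NULL,
               calories REAL NOT NULL
           )"""
    )


def store_workout(conn, performed_at, exercise, reps, calories):
    """Insert one workout row; the transaction commits on success."""
    with conn:
        conn.execute(
            "INSERT INTO workouts (performed_at, exercise, reps, calories) "
            "VALUES (?, ?, ?, ?)",
            (performed_at, exercise, reps, calories),
        )


def workout_history(conn):
    """Return all stored workouts, oldest first."""
    cursor = conn.execute(
        "SELECT performed_at, exercise, reps, calories "
        "FROM workouts ORDER BY performed_at"
    )
    return cursor.fetchall()
```

Parameterized queries (the `?` placeholders) keep user-entered values from being interpreted as SQL, and an in-memory database (`sqlite3.connect(":memory:")`) is handy for trying the functions before wiring them into the UI.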

As I had a tough week I am about half week behind my schedule but will definitely be able to catch up, as my other classes had midterms and I will now be able to put my full effort/time into the application and UI. I anticipate some time will be required to integrate with the UART protocol as well as the posture analysis so I will also begin prepping for that.

Team’s Status Report for 10/31/20

This week we discussed what classifies as good posture for the posture analysis portion. Albert was able to adjust the thresholds afterwards. We also gave feedback on Vishal’s user interface to improve the user experience. On the hardware side, we might have to downscale the image further due to memory constraints on the FPGA. We will explore other options before we resort to downscaling the image even further because it may harm the image processing side. Finally, we discussed what we wanted to include in our checkpoint demo for next week, so we tried to polish those portions even more.

Albert’s Status Report for 10/31/20

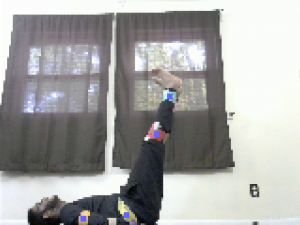

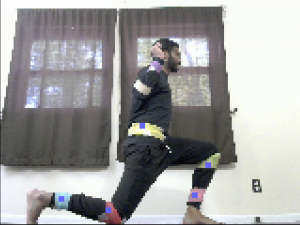

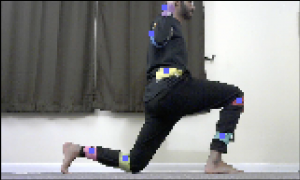

This week I worked on multiple tasks, and I am ready for integration and verification on the image processing side. On the hardware side, Venkata gave me the task of reading and understanding the Vitis Vision Library, because we may use this OpenCV-like library to implement the image processing portion, as our current clock period does not meet the baud rate requirements. I converted maxAreaRectangle, maxAreaHistogram, and getCenter to C code because that was the only portion left to be converted to RTL. On the image processing end, I made good progress and finished the leg raise and lunge posture analysis. I completely restructured the previous code to support our new change where we only give feedback on the necessary position of the workout. Also, to make integration easier, I implemented polymorphism for the posture analysis: instead of calling a specific function for a leg raise or lunge, I grouped and refactored them so that they are easier to integrate later on. I was also able to fine-tune the HSV bounds on the leg raises and lunges (as shown below).

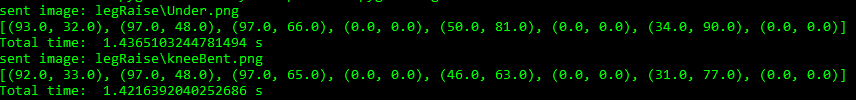

As we take more pictures, I can fine-tune the HSV bounds to be more precise and accurate. For the posture analysis portion, I was also fine-tuning the feedback for the leg raises and lunges. It was able to output “Raise your Legs Higher” and “Knees Bent” on the appropriate posture. I realized that, because the trackers might slide off the middle of the joint and because of the natural position of the joints, we need to set a tolerance as the requirement to meet: the angles have to be within 10 degrees of the expected value. We decided on this because a proper leg raise doesn’t require the hip to be at exactly 90 degrees for a good posture. I will wait until we get to integration to test my models on wrong posture and fine-tune the thresholds better. Last but not least, I created a way to test the FPGA’s result on the software side.
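The combination of a shared interface and a 10-degree tolerance could be sketched as follows. The class names, the dictionary of joint angles, and the expected angles other than the 90-degree hip (a straight 180-degree knee, a 90-degree elbow at the bottom of a pushup) are assumptions for illustration only.

```python
from abc import ABC, abstractmethod

ANGLE_TOLERANCE_DEG = 10.0  # tolerance stated in the report


def within_tolerance(measured, expected, tolerance=ANGLE_TOLERANCE_DEG):
    """True when the measured angle is within `tolerance` degrees of `expected`."""
    return abs(measured - expected) <= tolerance


class PostureAnalyzer(ABC):
    """Common interface so the application can treat every workout the same way."""

    @abstractmethod
    def feedback(self, angles):
        """Return a list of feedback strings for a dict of joint angles."""


class LegRaiseAnalyzer(PostureAnalyzer):
    def feedback(self, angles):
        messages = []
        # Assumed: hip angle near 90 degrees at the top of the raise.
        if angles["hip"] > 90 + ANGLE_TOLERANCE_DEG:
            messages.append("Raise your Legs Higher")
        # Assumed: a straight knee measures about 180 degrees.
        if not within_tolerance(angles["knee"], 180):
            messages.append("Knees Bent")
        return messages


class PushupAnalyzer(PostureAnalyzer):
    def feedback(self, angles):
        # Assumed: elbow angle near 90 degrees in the down position.
        if angles["elbow"] > 90 + ANGLE_TOLERANCE_DEG:
            return ["Go Lower"]
        return []


ANALYZERS = {"leg_raise": LegRaiseAnalyzer(), "pushup": PushupAnalyzer()}


def analyze(workout, angles):
    """Dispatch to the analyzer for `workout` without workout-specific calls."""
    return ANALYZERS[workout].feedback(angles)
```

With this shape, adding a lunge analyzer would only require one more subclass and one more dictionary entry, and the calling code would stay unchanged.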

I am on track in terms of schedule, but I need to help Venkata on the hardware side because we ran into unexpected challenges. As more pictures come in, I will continue to fine-tune the bounds and thresholds. Next week I will try to implement, or help Venkata implement, the Vitis Vision Library function and see if that will be better for timing on the FPGA side.

Venkata’s Status Report for 10/31/20

Last week, I was able to program the FPGA to stream information from the FPGA to the CPU via UART. I had to make a couple of small changes so that I would be able to stream information from the CPU to the FPGA, which worked well on small sets of values but had issues when I tried to stream large sets of values such as an image. This is because the input buffer for the UARTLite IP block only holds 16 bytes, so the device has to read from the buffer at an appropriate rate to ensure that we don’t lose information. I looked into different ways of reading information, such as an interrupt handler and frequent polling, and was eventually able to get an implementation that stores all of the information appropriately. Attached is an image where I was able to echo 55000 digits of Pi, confirming that I was able to use UART both ways and store the information.
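The fix described above lives on the FPGA side. For illustration only, a host-side test in the spirit of the Pi echo could send data in chunks no larger than the 16-byte buffer and check each echoed chunk before sending the next. This is a hypothetical sketch, not the method used in the project; `port` is any object with pySerial-style `write` and `read` methods.

```python
FIFO_DEPTH = 16  # receive buffer size of the UARTLite block, as stated above


def send_with_echo(port, payload, chunk_size=FIFO_DEPTH):
    """Send `payload` in FIFO-sized chunks, verifying the echo of each chunk.

    Waiting for the echo before sending more data means the sender never has
    more than `chunk_size` unread bytes in flight.
    Returns the number of bytes sent.
    """
    for start in range(0, len(payload), chunk_size):
        chunk = payload[start:start + chunk_size]
        port.write(chunk)
        echoed = port.read(len(chunk))
        if echoed != chunk:
            raise IOError(f"echo mismatch at offset {start}")
    return len(payload)
```

Echo-based pacing is slow compared to interrupt-driven reception on the device, so it is better suited to verifying the link than to streaming images in the final design.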

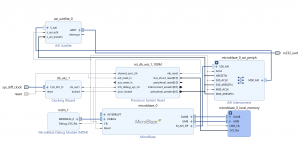

In terms of the block diagram, I realized that the local memory of the MicroBlaze is configurable and invokes BRAMs. So, I simplified the design to the following and tried to store all of the information in the MicroBlaze.

However, I kept running into issues where the binaries would not be created if I used too much memory. I am checking if I am not appropriately using the memory or if we need to downscale the image slightly more (which I have already discussed with my teammates).

Finally, another issue that arose was related to the baud rate. Different baud rates require different clock frequencies. As I was creating different block diagrams, it would sometimes not meet the target frequency and violate timing. In the image above with the digits of Pi, I was able to use our target baud rate.
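To see why the baud rate matters for latency, the transfer time of one image can be estimated with a little arithmetic. The sketch assumes 8N1 framing (10 bits on the wire per byte) and three bytes per pixel for the 160×120 image; the baud rate of 115200 in the example is a placeholder, since the target rate is not stated here.

```python
def image_bytes(width=160, height=120, channels=3):
    """Number of bytes in an uncompressed image with one byte per channel."""
    return width * height * channels


def uart_transfer_seconds(num_bytes, baud_rate, bits_per_frame=10):
    """Time to send `num_bytes` over UART.

    Assumes 8N1 framing: 1 start bit + 8 data bits + 1 stop bit = 10 bits.
    """
    return num_bytes * bits_per_frame / baud_rate


# Example: 160 * 120 * 3 = 57600 bytes, which is 576000 bits on the wire.
# At the placeholder rate of 115200 baud, that takes 576000 / 115200 = 5 seconds.
example_seconds = uart_transfer_seconds(image_bytes(), 115200)
```

Doubling the baud rate halves this figure, which is one reason meeting timing at a higher clock frequency is worth the effort.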

In terms of the schedule, I was hoping to have most of the design done but ran into quite a few issues 🙁 I have addressed this with my teammates and by next week, I plan on having the ability to stream an image and receive the information (at a potentially smaller image size). I will finish the implementation of the image processing portion with the Vitis Vision library. I will then try to optimize the design to be able to use a high baud rate and the entire image during the weeks that were allocated for slack.

Vishal’s Status Report for 10/31/20

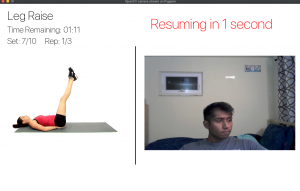

This week I was able to make significant progress on my tasks. Most of my work went into the workout screen, making it better and more useful for the user. I received a lot of feedback from Albert and Venkata, which I took in and implemented as seen below. I also added a pause menu, which we had not discussed, as it is something a user would like while performing a workout.

Since I was pretty much caught up with the UI, outside of storing workout data in the database for future reference, I decided it would be more worthwhile to spend time looking into integration, as the database storage is important but the project will still work without it. I realized my code could be a bit more optimized and learned that I will need to implement threading in order to receive and send data through the UART protocol. This took a good portion of my week as well, but it now definitely seems feasible, and I have pseudocode that will be ready to plug into Venkata’s UART protocol.
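One common way to structure this is a background thread that reads from the serial port and hands results to the UI loop through a queue. The sketch below is a hypothetical illustration: the function name, the fixed frame size, and the short retry pause are assumptions, and `port` is any object with a pySerial-style `read` method.

```python
import queue
import threading


def start_receiver(port, frame_size, out_queue, stop_event):
    """Start a daemon thread that pushes fixed-size frames from `port` into a queue.

    The UI loop can then poll `out_queue` without blocking on the serial port.
    """
    def worker():
        while not stop_event.is_set():
            frame = port.read(frame_size)
            if len(frame) == frame_size:
                out_queue.put(frame)
            else:
                # Timeout or short read: drop it and pause briefly before retrying.
                stop_event.wait(0.01)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

In the pygame main loop, a non-blocking `out_queue.get_nowait()` inside a `try`/`except queue.Empty` would let each frame render even when no data has arrived yet.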

In terms of the work left I am basically caught up but a bit out of order. I have the database setup but I don’t have anything being stored from workouts or a menu to access the history set up yet. However I have made decent headway into the integration and have setup the serial library so that I can interface properly. In order to test that out more I will have to talk to Venkata and see if the code ends up working out. Next week I will most likely try to actually store data in the database and have a simple UI to view data. I also would like to add the additional feature of multiple profiles as I think it would help make the project more robust overall.

Team’s Status Report for 11/7/20

This week we took more pictures together of various workouts to add to our collection for tuning the thresholds and the posture analysis. From these pictures we were able to fine-tune the different types of analysis we will be able to give for leg raises, push-ups, and lunges. The different types of analysis are described in Albert’s status report. Finally, we discussed what our demo is going to look like and how much we want integrated for it.

Albert’s Status Report for 11/7/20

This week we took even more pictures of bad posture of our workouts for the posture analysis portion. I classified the images into different checks that I perform. For example, for a leg raise, there is a picture where the legs are well over the perpendicular line, and I made sure the feedback would be “Don’t OverExtend”. Here is a breakdown of the images/feedback that I classified.

Leg Raise:

- Perfect: (No Feedback)

- Leg Over: “Don’t Overextend”

- Leg Under: “Raise your legs Higher”

- Knee Bent: “Don’t bend your knees”

Pushup:

- Perfect: (No Feedback)

- Hand Forward: “Position your hands slightly backwards”

- High: “Go Lower”

Lunges (Forward + Backward):

- Perfect: (No Feedback)

- Leg Forward: “Front leg is over extending”

- Leg Backward: “Back Leg too Far Back”

- High: “Go Lower”

I have finished implementing and doing the basic fine-tuning of all the workout positions. The code is structured so that the application can call it directly through the functions I provide. I spent time teaching Vishal how to use my classes and methods. I did a slight touch-up on the HSV bounds as well, and the algorithm has been pinpointing the joints very accurately on 10-15 other images.

This week I also spent a lot of time debugging the HLS code and ensuring the FPGA is getting the correct joints. I first wrote the binary mask to a txt file to compare it with the hardware version. We ran into a lot of problems while debugging. First, we mixed up the row and column accesses because the PIL library doesn’t follow the matrix form of row x col. Thus, I had to make a lot of changes to my Python code to match the way Venkata stores the image in the bitstream. There were multiple bugs in erosion and dilation, and we spent a lot of time using the software test bench to pinpoint them. Finally, once all the unit tests were passing, we ran the FPGA on all the joints. However, there was a slight difference in the joint locations returned. After spending 1-2 hours checking the main portion of the code, we realized that the bug was in how we created the byte array to send to the FPGA. I had Pillow 6.1.0 while Venkata had Pillow 8.0.3 (newer version). The different versions of the same library did the resizing and the conversion to HSV differently. Since my HSV bounds had already been fine-tuned against my version, Venkata switched to it, and it took a while for him to reinstall Python 3.6 (because Pillow 6.1.0 is only compatible with this version). My portion can soon be integrated, either before or after the demo.
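The row and column mix-up comes from Pillow addressing pixels as `(x, y)`, that is `(column, row)`, and reporting sizes as `(width, height)`, whereas a matrix is indexed as `[row][col]`. A minimal sketch of flattening an image into a row-major byte array under that convention is shown below; the function names and the `get_pixel` callback are hypothetical, and no Pillow import is needed to follow the idea.

```python
def flatten_row_major(get_pixel, width, height):
    """Flatten an image into row-major bytes.

    `get_pixel(x, y)` follows Pillow's convention, where x is the column
    and y is the row, and returns a tuple of channel values in 0..255.
    """
    out = bytearray()
    for row in range(height):
        for col in range(width):
            out.extend(get_pixel(col, row))  # x = column, y = row
    return bytes(out)


def pixel_offset(row, col, width, channels=3):
    """Byte offset of pixel [row][col] in the row-major array."""
    return (row * width + col) * channels
```

With a real Pillow image `img`, the callback could be `lambda x, y: img.getpixel((x, y))`. Pinning the same Pillow version on both machines, for example in a shared requirements file, would also guard against the resizing and HSV differences described above.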

I am currently on schedule and slightly ahead. I might help Vishal if he needs help with the database or application if I have extra time. I will aim to do more testing once the FPGA and my posture analysis portion gets integrated into the application. Fine tuning will be much easier and more efficient with real time debugging rather than through pictures. Hopefully in the next week or two, I can fine tune the bounds and thresholds for the posture analysis even better. Also, since our time to get the image processed on the FPGA is slightly high, Venkata and I will work on optimizing the algorithm more.

Venkata’s Status Report for 11/7/20

I was able to make significant progress this week. Firstly, I realized that I was incorrectly using the BRAM in the MicroBlaze and had to use a Block Memory Generator IP block, so I updated the block diagram to the following.

After learning how to use the IP block, I was able to effectively store the entire input image and allocate different portions of the BRAM for the various intermediate results that are generated as follows:

I then worked with Albert to fully convert the image processing Python code to an implementation that I could place on the MicroBlaze. I was able to synthesize an implementation that met our target baud rate and was able to meet timing by playing around with different configurations of the block design. As a result, I was able to have the FPGA take in an image such as the following

and return the coordinates as follows.

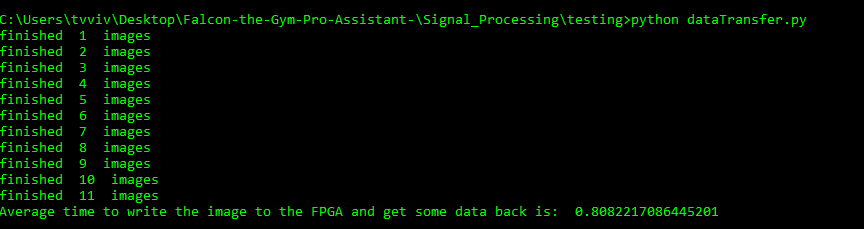

The UART.py file loads an image, downscales the image, converts it to HSV, and writes it to the FPGA serially, and then waits until it receives all of the coordinates. It is similar to the overall workflow and is ready to be integrated.
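A rough sketch of the last two steps of that workflow (packing HSV bytes and exchanging them over a serial-like port) is shown below. It is not the actual UART.py: the byte layout (H, S, V as one byte each, pixel after pixel) and the one-byte-per-coordinate reply framing are assumptions, and the port is any object with `write()` and `read()` methods, so an in-memory buffer stands in for the real serial connection.

```python
import colorsys
import io


def rgb_to_hsv_bytes(rgb_pixels):
    """Convert (r, g, b) tuples in 0-255 to flat bytes of H, S, V in 0-255."""
    out = bytearray()
    for r, g, b in rgb_pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        out.extend(int(round(c * 255)) for c in (h, s, v))
    return bytes(out)


def send_image(port, rgb_pixels):
    """Write the HSV payload to the port and return the number of bytes sent."""
    payload = rgb_to_hsv_bytes(rgb_pixels)
    port.write(payload)
    return len(payload)


def read_coordinates(port, num_joints):
    """Read num_joints (x, y) pairs, one byte per value (assumed framing)."""
    raw = port.read(2 * num_joints)
    return [(raw[i], raw[i + 1]) for i in range(0, len(raw), 2)]


# In-memory stand-ins for the serial port.
outgoing = io.BytesIO()
sent = send_image(outgoing, [(255, 0, 0), (0, 0, 255)])
incoming = io.BytesIO(bytes([10, 20, 0, 0]))
coords = read_coordinates(incoming, num_joints=2)
```

With pyserial, a `serial.Serial` object offers the same `write()` and `read(size)` methods, so it could be passed in place of the buffers.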

As mentioned last week, I am slightly behind schedule. The arrival time of the locations is slightly higher than our target requirement. I plan on optimizing this during the weeks allocated for slack, but I also plan on investigating and trying to reduce the latency to our target in the upcoming week. I also plan on working with Vishal to have the interface and communication between the FPGA and UI fully completed by next week.

Vishal’s Status Report for 11/7/20

This week I was able to make a decent amount of progress on the user interface application, as well as a bit on integration. I worked on actually implementing the calorie estimator as well as the heart rate estimator. I used my previous calorie formulas and data to keep track of calories burned during both rest and the different workouts. I had to do a bit of new research for heart rate, but I found a suitable value using the following formula and table.

Heart Rate = (Heart Rate Reserve)*(MET Reserve Percentage)

I also fixed a few bugs in the workout page, such as the pausing and timing, the transitions between different workouts, and the updating of the heart rate/calorie estimation.

I also worked a bit on integration with both Albert and Venkata. On the hardware side, I was able to use the pyserial library to send the captured images and receive data back. I was also able to integrate the posture analysis in a naive and basic manner and have the feedback shown in the workout summary at the end. In terms of schedule I am still on track to complete on time, but I will still be prioritizing integration over the database.

Team’s Status Report for 11/14/20

This week we were able to complete our MVP that entailed basic integration by the time of our demo. We were able to present a product that was able to read in a random image, stream it to the FPGA, grab the feedback and display the appropriate feedback in Terminal fully synced with the User Interface. We also spent time collecting more images to help us train the posture analysis portion and ensure that we are able to provide the appropriate feedback while also testing the image thresholds for the various joints in various lighting conditions.

This week, there were no changes made to the overall design of the project nor any major risks identified.

Albert’s Status Report for 11/14/20

At the start of the week, my laptop’s screen broke and I had to send it in for repairs, so I couldn’t make as much progress as I would have liked. I will do more live fine tuning of the posture analysis and HSV bounds in the coming weeks. This week I was able to work on handling invalid joint positions. When we were integrating the FPGA with the application, my code crashed because of a divide by zero when computing the slope. This was caused by the FPGA not being able to detect the joints, in which case it outputs the origin. Therefore, this week I added further checks to ensure that the joints I receive are valid positions. If not, I output an invalid signal to the application. In the image below the output of the application would be “Invalid: [‘Invalid Joints Detected: Shoulder!’]” as the shoulder cannot be detected.
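The validity checks described above can be sketched roughly as follows. This is an illustration rather than the actual posture analysis code: the function names, the dictionary layout of the joints, and the choice of one message per missing joint are assumptions. Only the idea that an undetected joint arrives as the origin, and the wording of the message, come from this report.

```python
ORIGIN = (0, 0)


def find_invalid_joints(joints):
    """Return the names of joints reported at the origin (not detected)."""
    return [name for name, pos in joints.items() if tuple(pos) == ORIGIN]


def slope(p1, p2):
    """Slope of the line through p1 and p2, or None for a vertical line."""
    dx = p2[0] - p1[0]
    if dx == 0:
        return None  # avoids the divide by zero
    return (p2[1] - p1[1]) / dx


def analyze(joints):
    """Return ('Invalid', messages) if a joint is missing, else ('OK', slope)."""
    missing = find_invalid_joints(joints)
    if missing:
        return "Invalid", [f"Invalid Joints Detected: {name}!" for name in missing]
    return "OK", slope(joints["Shoulder"], joints["Hip"])


bad = analyze({"Shoulder": (0, 0), "Hip": (40, 50)})
good = analyze({"Shoulder": (10, 20), "Hip": (30, 60)})
```

Returning a status alongside the result lets the application decide how to display an invalid frame instead of crashing.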

Since we wanted to satisfy our requirement of providing feedback every 1.5 seconds, Venkata measured the time from getting the image to outputting the posture analysis. He concluded that it is best to limit every workout to 5 joints so that we can get the feedback in 1.42 seconds. Leg raises and lunges do not need more than 5 joints. For a pushup, we can relax the timing requirement so that we can provide more feedback on the lower body, find a way to not send portions of the data to the FPGA, downscale the image even more, or take away one of the joints in the lower body.

Next week, I will conduct more live testing with the application, webcam, and FPGA integrated. I will also create ways to test the feedback and generate checks for the hardware side as well. I am on schedule in terms of the overall progress.

Venkata’s Status Report for 11/14/20

I was able to work on quite a few different things this week. Between the last status report and the demo, I worked with Vishal on the interface and the communication between the FPGA and the computer, and we had it completely integrated in time to present it during our demo.

Last week, I mentioned that the arrival time of the various pixel locations is higher than our target requirement of 1.5 s. I addressed this issue with the other team members and we were able to determine that we would be able to provide appropriate feedback to a user for a particular exercise if we passed an additional byte at the start that indicated the type of exercise being performed. This byte is located at the bottom of the stack after the erosion output depicted in last week’s status report.

This would allow the FPGA to only process the locations of the joints that are used for the appropriate exercise and let the FPGA skip the processing of the other joints and simply output 0s for those joints as in the example below.

By doing this, we were able to reduce the arrival time to less than 1.5 s, so the time from taking an image to receiving the feedback now meets our target requirement.
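A host-side sketch of the exercise-byte idea is given below. The exercise codes, the joint names, and which joints belong to which exercise are all hypothetical, since the report does not list them. The second function only models in Python what the FPGA is described as doing, namely outputting 0s for joints it skips.

```python
# Hypothetical joint order and exercise codes, for illustration only.
ALL_JOINTS = ["shoulder", "elbow", "wrist", "hip", "knee", "ankle", "heel", "toe"]
EXERCISE_JOINTS = {
    0: {"shoulder", "hip", "knee", "ankle", "toe"},    # e.g. a leg raise
    1: {"shoulder", "elbow", "wrist", "hip", "knee"},  # e.g. a pushup
}


def build_payload(exercise_code, image_bytes):
    """Prefix the image bytes with a single byte naming the exercise."""
    return bytes([exercise_code]) + image_bytes


def mask_coordinates(exercise_code, detected):
    """Keep coordinates for joints the exercise uses; others become (0, 0)."""
    wanted = EXERCISE_JOINTS[exercise_code]
    return [detected[j] if j in wanted else (0, 0) for j in ALL_JOINTS]


payload = build_payload(1, b"\x10\x20\x30")
detected = {name: (i + 1, i + 1) for i, name in enumerate(ALL_JOINTS)}
coords = mask_coordinates(1, detected)
```

Because each exercise uses at most 5 of the 8 joints in this sketch, the skipped joints cost no processing time and simply come back as zeros.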

Note: The timer begins before opening the image and ends after it receives the appropriate feedback from the posture analysis functions.
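A measurement like the one in the note can be taken with a small wrapper around `time.perf_counter`. This is a generic sketch: the helper names are hypothetical, and the function being timed here is a placeholder for the real pipeline.

```python
import time

TARGET_SECONDS = 1.5  # feedback requirement stated in this report


def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def meets_target(elapsed, target=TARGET_SECONDS):
    """True if the measured time is under the target."""
    return elapsed < target


# Placeholder workload standing in for open image -> FPGA -> posture analysis.
result, elapsed = timed(sum, [1, 2, 3])
```

Starting the timer before the image is opened, as the note describes, means file loading and downscaling count toward the measured time.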

I also began testing the various portions of the code. I was able to verify that the time it takes to send the image via UART and receive some information is less than our target of 1 s.

In terms of the schedule, I am on track. Since I do not have any further optimizations to make to the code and the other portions of the project are not fully refined, I plan on spending a large portion of the remaining time on data collection by serving as the model, to ensure that the various portions have enough data to be fully refined.

Vishal’s Status Report for 11/14/20

This week I was able to make a good amount of progress on both the integration and the database aspects of the application. For the database, I created the database through Python code and set up two tables: one for profiles and their respective biodata, and one for keeping track of workout data. Both of these tables are integrated into the UI code. At the end of each workout, the statistics about that specific workout are loaded into the database. Currently there is only one profile, but I will be integrating profile switching in the upcoming weeks. Here is a look at the database schema.
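As a rough illustration of how two such tables could be created and filled from Python with the standard `sqlite3` module, consider the sketch below. The column names and types are assumptions made for the example and are not our actual schema.

```python
import sqlite3

# Hypothetical columns; only the two-table split comes from this report.
SCHEMA = """
CREATE TABLE IF NOT EXISTS profiles (
    id        INTEGER PRIMARY KEY,
    name      TEXT NOT NULL,
    age       INTEGER,
    weight_kg REAL
);
CREATE TABLE IF NOT EXISTS workouts (
    id             INTEGER PRIMARY KEY,
    profile_id     INTEGER NOT NULL REFERENCES profiles(id),
    performed_on   TEXT NOT NULL,
    calories       REAL,
    avg_heart_rate REAL
);
"""


def open_database(path=":memory:"):
    """Open (or create) the database and make sure both tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def record_workout(conn, profile_id, performed_on, calories, avg_heart_rate):
    """Insert one finished workout and return its row id."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO workouts (profile_id, performed_on, calories, avg_heart_rate)"
            " VALUES (?, ?, ?, ?)",
            (profile_id, performed_on, calories, avg_heart_rate),
        )
    return cur.lastrowid


conn = open_database()
conn.execute("INSERT INTO profiles (name, age, weight_kg) VALUES (?, ?, ?)", ("demo", 21, 70.0))
workout_id = record_workout(conn, 1, "2020-11-14", 120.5, 110.0)
```

Keeping the workout rows keyed by `profile_id` is what would later allow profile switching without changing the workout table.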

In terms of integration, I now have the feedback received by the application and displayed at the correct time in the UI. In the upcoming weeks I will be making sure that the UI works well overall and refining anything that looks buggy. The UI will be tested mostly through visual inspection, with some help from test frameworks.

Team’s Status Report for 11/21/20

This week the three of us spent a lot of time on the final integration so that we could record the workout portion of the final video. We set everything up and finished recording the footage for the final video that we have to submit. This final hurdle took a lot longer than expected, as the image processing and HSV bounds were not behaving as expected (explained in the individual posts). We also discussed our plan of action for the next few weeks. We are considering adding two new features to our project because there are two weeks left in the semester. We want to have audio feedback so that people do not have to keep staring at the screen during their workout. We will also try to integrate the Spotify app so we can play music while working out.

Albert’s Status Report for 11/21/20

Earlier this week, I worked on refining the posture analysis using the extra 30 or so images that we captured last week. I changed the HSV values for the shoulder and wrist, so I had to edit them in the HLS code as well. The posture analysis was also fine tuned. I also handled unlikely errors that could crash the program when there are duplicate points in the angle calculation.
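One way to guard an angle calculation against duplicate points is sketched below. This is a generic three-point angle using `math.atan2`, not necessarily the formula used in our posture analysis, and the function name is hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle ABC in degrees at vertex b, or None if b coincides with a or c."""
    a, b, c = tuple(a), tuple(b), tuple(c)
    if a == b or c == b:
        return None  # a zero-length limb has no direction, so no angle
    ang1 = math.atan2(a[1] - b[1], a[0] - b[0])
    ang2 = math.atan2(c[1] - b[1], c[0] - b[0])
    deg = abs(math.degrees(ang1 - ang2))
    return 360.0 - deg if deg > 180.0 else deg


right_angle = joint_angle((1, 0), (0, 0), (0, 1))
straight = joint_angle((-1, 0), (0, 0), (1, 0))
duplicate = joint_angle((3, 4), (3, 4), (9, 9))
```

Returning `None` for the duplicate case lets the caller report an invalid frame rather than raising an exception.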

This week we wanted to record the workout portion of the project as a whole, because everyone is leaving for Thanksgiving and will not be back in Pittsburgh afterwards. This meant that the FPGA, webcam, application, and posture analysis all had to be fully integrated. We set up everything for the recording; however, things didn’t go as well as expected. The pictures I had used when fine tuning the HSV bounds came directly from the camera. However, in order to present the live feed from the webcam, the application uses OpenCV, which does some processing of its own. Vishal had to do some processing to change the frames back to the original image, but there was still a difference in saturation between the images I get directly from the camera and the images captured and stored through OpenCV. I had to spend more than two hours pinpointing every joint and fine tuning the bounds again because of this discrepancy. Since I had a test bench and some functions written to speed up the process, this went a lot faster than it would have without the classes and functions I wrote previously.

Also, we decided to test the image processing portion without the dark suit that we built earlier, so it took longer to get rid of the noise from our differently colored T-shirts and pants. While doing the final fine tuning for the video, we decided to reuse tracker colors because certain colors are easier to track than others. Another problem is that, since the workout is a live movement, a lot of the darker colors get blurred out. In the picture below, the red becomes a lot lighter than normal and sometimes it turns into light green for some reason. Since we originally anticipated using 8 joints but only actually need 5 per workout, we pinpointed the joints that are mutually exclusive across workouts and switched them from the error-prone colors to less error-prone ones.

Also, for some reason, the camera reversed left and right, so some of my posture analysis gave back incorrect results, which took a while to notice and debug. Since everything was in the main application and we were running it as a whole, it was pretty hard to isolate the bug and realize that the camera was flipped.
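If the feed is mirrored, one possible correction is to mirror the joint coordinates before the posture analysis runs. The sketch below assumes joints are stored as a name-to-`(x, y)` dictionary with x counted from the left edge; both function names are hypothetical.

```python
def mirror_x(point, image_width):
    """Mirror a pixel coordinate horizontally within an image of given width."""
    x, y = point
    return (image_width - 1 - x, y)


def mirror_joints(joints, image_width):
    """Apply the horizontal mirror to every joint in a name -> (x, y) dict."""
    return {name: mirror_x(p, image_width) for name, p in joints.items()}


flipped = mirror_joints({"shoulder": (0, 5), "wrist": (159, 7)}, image_width=160)
```

An alternative is to flip the frame itself before processing, for example with OpenCV's `cv2.flip(frame, 1)`, which mirrors an image around its vertical axis.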

Next week is Thanksgiving and I will be flying back to Asia, so I will not have a lot of time to work on Capstone. However, I will probably try to work on the audio feedback portion by feeding audio recordings found online into the application.

Venkata’s Status Report for 11/21/20

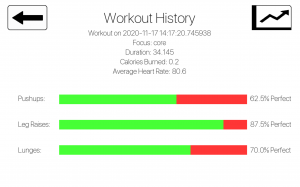

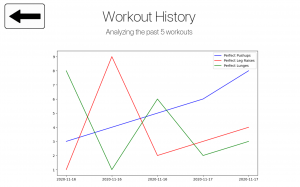

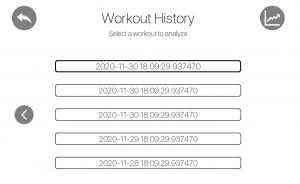

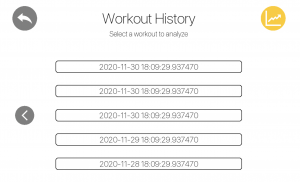

This week was predominantly spent on working on tasks not focused on the hardware. Since I was able to meet our performance requirement for the FPGA, I decided to help out with the software side, specifically by working on parts of the UI that didn’t directly interfere with Vishal’s work. I decided to focus on the pages that provide workout history to the users. I first came up with a couple of mockups and after discussing with the rest of the team, I decided to start coding up the various pages. I had to learn how to use the needed python libraries (Pygame and Matplotlib) and the existing codebase for the python application. I then was able to create the following three pages that pull the appropriate information from our database and display it to the user.

- Workout History Options – Users will be able to navigate the database and pick a workout to analyze

- Workout History Summary – Users will receive a detailed summary of a selected former workout

- Workout History Trends – Users will receive a graph analyzing their performance over the past 5 workouts

I am on track with the schedule. For the upcoming week(s), I plan on working with Vishal to fully integrate the changes that I made and on refining the various pages to ensure that they are visually appealing. I will also do some more testing with the FPGA and integrate the new changes that Albert made to the image processing code.

Vishal’s Status Report for 11/21/20

This week I made a lot of headway that has made the overall project a lot more robust. I started off the week refining the changes I made last week for displaying the feedback from the FPGA. It turned out that when the entire project was run together with the hardware and the signal processing, the feedback was a little delayed, especially for pushups. I edited the GIFs and reimplemented the timing for the workouts so that the final rep of a set has more time remaining, which lets the user properly read their feedback before moving on to the next workout. After fixing the timing for the different workouts, and the pushup exercise in particular, I moved on to implementing a second type of lunge and fixing the GIF for the lunge.

We originally had the lunge implemented only with the right leg forward, but we changed it so that two types of lunges are shown, including a version where the left leg is forward. With the help of Venkata I was able to apply new GIFs, since the old ones ended up being too pixelated and did not flow cohesively in the user interface. To accommodate both types of lunge I had to refactor some of the code and implement new logic.

I worked on making sure that the images are captured periodically and that their scaling and coloring are correct. The frame that comes through OpenCV was given in a blue scale, and I had to convert it so that it could be consumed by Albert’s signal processing, since in the past we had been using photos from a saved folder that were taken by an external webcam application.
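A likely cause of this kind of color shift is channel order: OpenCV returns frames in BGR order, while most other image code expects RGB. Assuming that is what happened here, the conversion amounts to reversing the channels of every pixel, as in this pure-Python sketch on a nested-list frame (the function name is hypothetical).

```python
def bgr_to_rgb(frame):
    """Reverse the channel order of every pixel in a nested-list frame."""
    return [[(r, g, b) for (b, g, r) in row] for row in frame]


# One row with a pure-blue and a pure-red pixel, given in BGR order.
bgr_frame = [[(255, 0, 0), (0, 0, 255)]]
rgb_frame = bgr_to_rgb(bgr_frame)
```

On a real OpenCV frame the same conversion is done with `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`.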

I wrapped up this week by working with Albert and Venkata to record some workout footage that we will be using in our final demo, as we will all be heading home next week for Thanksgiving. We had some issues integrating, so I spent a little bit of time making sure those bugs were cleaned up.

In the upcoming weeks I will be making the main menu which will connect all our different pages together and then I will be integrating a profile customization screen.

Team’s Status Report for 12/5/20

Our main priority for this week was working on the demo. Since we are all back home and in different time zones, it was fairly hard for us to meet, but we were able to effectively plan and practice for our demo. We focused on the final touches needed for integrating our final project and were able to fully demonstrate our progress and project during our demo. For the upcoming week, our main priorities are working on the video and the final presentation.

There were no major risks identified this week, and our schedule and the existing design of the project have not changed at all.

Albert’s Status Report for 12/5/20

This week I added audio feedback to our application. Instead of only returning written feedback, our application now also returns audio feedback. Since the user may be doing a leg raise or pushup and may not be looking at the screen, the audio feedback allows the user to perfect his or her posture more easily. I converted some text to mp3 files and changed the outputs sent to the User Interface. I used Amazon’s Joanne as the audio voice. In terms of the feedback that we received for the live demo, I looked into the skeleton feedback and realized that I would have to redo a lot of the posture analysis as well as the image processing, because we don’t really have the opportunity to re-record the entire workout. Therefore, the best I can do is to feed our current screen recording into the algorithm. However, the screen recording does some processing to the live feed, so the HSV values are not consistent with what they were originally. We realized that it may be too much work to re-record since we are all in different physical locations.

Since the final presentation is this week, I had to create the slides and organize the metrics and test benches that I created in the previous weeks. Also, I am mostly in charge of assembling the final video, so I planned out the time stamps for each section of the video. I distributed tasks to Venkata and Vishal so that they can give me short clips of their portions. I generated diagrams for the posture analysis (shown below).

I also edited the code so that it saves images of what the binary mask looks like after every significant step. These diagrams will help me record the technical portions of image processing and posture analysis. I played around with iMovie to get familiar with it. I created an Ending scene and have started to cut and edit the videos that we want.

Next week, I will mainly be focusing on generating the video. The week after that will be spent completing the final report.

Venkata’s Status Report for 12/5/20

This past week was focused on working on the UI to be able to demo. I first focused on adding additional features such as the functionality to navigate through the database by adding navigation buttons to grab the appropriate entries as well as adding more functionality to the history trends page so that it combines workouts of the same day and standardizes the y axis. I then focused on cleaning up the UI by creating new icons and adding the ability to highlight the buttons/icons when the user hovers over the various options.
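The data preparation behind such a trends page could look roughly like the sketch below. The `(date, calories)` input format, the function names, and the interpretation of "standardizing" the y axis as rounding the peak up to a fixed step are all assumptions made for the example.

```python
def combine_by_day(workouts):
    """Sum calories for workouts sharing a date.

    workouts is a list of (iso_date, calories); ISO date strings sort
    chronologically, so sorting the totals orders them by day.
    """
    totals = {}
    for day, calories in workouts:
        totals[day] = totals.get(day, 0.0) + calories
    return sorted(totals.items())


def last_n(entries, n=5):
    """Keep only the most recent n entries."""
    return entries[-n:]


def y_axis_limit(daily_totals, step=50):
    """Round the largest total up to a multiple of step for a stable y axis."""
    if not daily_totals:
        return step
    peak = max(value for _, value in daily_totals)
    return max(step, int(-(-peak // step) * step))


history = [("2020-12-01", 100.0), ("2020-12-01", 50.0), ("2020-12-02", 80.0)]
daily = combine_by_day(history)
limit = y_axis_limit(daily)
```

With Matplotlib, the computed limit could then be applied through `plt.ylim(0, limit)` so that graphs for different users share a comparable scale.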

After working on the UI and cleaning up the Workout History Summary and Workout History Trends page, I worked on trying to integrate Spotify into our project. After learning about Spotipy, I was able to create a Developer app that connected with a user’s Spotify account to allow our app to modify the user’s playback state by pausing/playing/skipping the current song and allow our app to identify the name of the current song. We were also considering cleaning up the authentication flow but after our demo, we decided that we should focus on other aspects and so, I simply worked with Vishal to create a simple UI for the Spotify section and began working on the other deliverables.
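A minimal sketch of the playback controls with Spotipy follows. The `MusicControls` wrapper and `make_spotify_client` helper are hypothetical names, and the scopes are what such calls would be expected to need. The Spotipy calls themselves (`start_playback`, `pause_playback`, `next_track`, `previous_track`, `current_playback`) are part of the library. Creating the client assumes the `spotipy` package is installed and that the `SPOTIPY_CLIENT_ID`, `SPOTIPY_CLIENT_SECRET`, and `SPOTIPY_REDIRECT_URI` environment variables are set for the Developer app.

```python
def make_spotify_client():
    """Create an authenticated Spotipy client (needs spotipy and credentials)."""
    import spotipy
    from spotipy.oauth2 import SpotifyOAuth

    scope = "user-modify-playback-state user-read-playback-state"
    return spotipy.Spotify(auth_manager=SpotifyOAuth(scope=scope))


class MusicControls:
    """Thin wrapper so UI buttons can call simple methods."""

    def __init__(self, client):
        self.client = client

    def play(self):
        self.client.start_playback()

    def pause(self):
        self.client.pause_playback()

    def skip(self):
        self.client.next_track()

    def previous(self):
        self.client.previous_track()

    def current_song(self):
        """Return the current track name, or None if nothing is playing."""
        playback = self.client.current_playback()
        if not playback or not playback.get("item"):
            return None
        return playback["item"]["name"]
```

Passing the client in through the constructor keeps the UI code testable with a stand-in object, without a live Spotify account.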

I am on track with the schedule. For the upcoming week, I plan on focusing on the various deliverables such as working on the final presentation and providing Albert with the various video/audio files he needs to successfully integrate our various sections for our final video. I will then work on the final report the week after that.

Vishal’s Status Report for 12/5/20

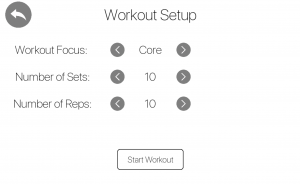

This week I mostly worked on making sure the UI was in a ready state to present for our demo on Wednesday. The main task that I had to tackle in terms of the UI was the main menu which is used to link the various pages within the UI. I also worked on creating the workout setup screen and settings screen in which the user can customize their workout as well as their profile information. The next thing I did for the UI was enable profile switching and save unique states for each user within the sqlite database. The final thing I did was clean up the overall flow and details for all the pages and made sure that different aspects were integrated properly.

After the demo I worked a bit on the Spotify integration. I have a log in menu for each profile, and I started working on the UI aspect of Spotify. This UI will give the user the ability to play, pause, and skip to the next or previous song, as well as see the current song they are playing on their device. I have a bit more work to do to actually get it to display, but the button logic works properly with the API.

In the next weeks I will be working on creating clips for Albert that demonstrate the UI and the features we implemented after the recording we did before Thanksgiving. After that I will finish the Spotify UI buttons and get them to display properly. In addition, I will be preparing for the presentation this Monday and then start on the design report.