Adrian:

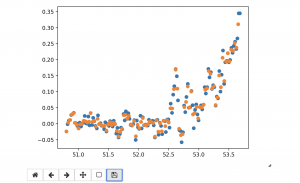

So far, I have created the backend Flask server that communicates with the iOS app. I have also created a suite of preprocessing tools that will be used to feed the IMU data into the deep net for form detection. This includes segmenting sets from the various exercise routine files, then segmenting hand-marked reps from the sets, and finally normalizing the data by using 1D signal interpolation to bring every rep to a common 100×12 shape. On average, this should minimize the destruction and “making up” of information, because the average bicep curl is about 100 samples, which is 2 seconds at a 50 Hz sampling rate. The image shows the normalized data for a single IMU time series.
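The normalization step could be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the function name `resample_rep` is hypothetical, the 12 channels are assumed to be stored as columns, and linear interpolation via `numpy.interp` is assumed as the 1D interpolation method.

```python
import numpy as np

TARGET_LEN = 100  # samples per rep after normalization (from the text above)


def resample_rep(rep, target_len=TARGET_LEN):
    """Linearly resample a (n_samples, n_channels) rep to (target_len, n_channels).

    Hypothetical helper: the layout (time along rows, channels along columns)
    is an assumption, not something confirmed by the project.
    """
    rep = np.asarray(rep, dtype=float)
    if rep.ndim != 2 or rep.shape[0] < 2:
        raise ValueError("expected a 2D array with at least 2 samples")

    n_samples, n_channels = rep.shape
    # Map both the original and the target sample positions onto [0, 1]
    # so the first and last samples of the rep are preserved.
    src = np.linspace(0.0, 1.0, n_samples)
    dst = np.linspace(0.0, 1.0, target_len)

    out = np.empty((target_len, n_channels))
    for c in range(n_channels):
        # Interpolate each channel independently as a 1D signal.
        out[:, c] = np.interp(dst, src, rep[:, c])
    return out


# Usage with synthetic data: a 130-sample rep (2.6 s at 50 Hz) with 12 channels.
rng = np.random.default_rng(0)
rep = rng.normal(size=(130, 12))
normalized = resample_rep(rep)
print(normalized.shape)
```

Reps that are already close to 100 samples are barely altered by this resampling, which is the reason for choosing a target length near the typical rep duration.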

Kyle:

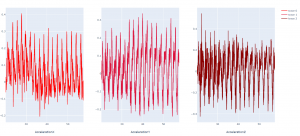

So far, I have developed a tool to parse and visualize IMU data from the Apple Watch. The plotter uses the Plotly library. I’ve also begun updating it to use Pandas DataFrames, which should make it easier to use long term and more efficient at processing the data. I have also begun evaluating several methods of extracting pose from IMU tracking, since there is no generic open-source library for doing so. This should eventually lead to using inverse kinematics to estimate the joint angles of the user’s arm, which would allow us to give the user visual feedback.

Matt: