This week, we focused heavily on preparing for our midpoint demo. Caroline and Snigdha worked to integrate the first half of the system across both its hardware and software components. We ported our encoding script to Java and can now enter a message on the Raspberry Pi and print the encoded message directly. During the latter half of the week, we focused on catching up on the CV side, making sure the CV output has the format needed to decode the message properly. We also worked on setting up the second Raspberry Pi and installing OpenCV and other necessary software on it.

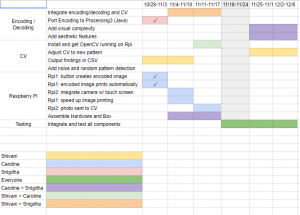

Below is our updated Gantt chart for the midpoint demo.

Caroline

Snigdha

This week, I worked on making sure our encoding algorithm could run continuously from the command line on the first Raspberry Pi. To do this, we had to choose between using JavaScript with WebSockets and porting our encoding to Java. In the interest of time and to avoid unnecessary complexity, Caroline and I decided that switching to Java was the most effective approach, since Processing with Java can be run from the command line. After Caroline and I were able to print the image as described above, I worked with Shivani to sync up on the CV and decoder parts. We worked together to tweak the output CSV to make the decoding process smoother. Using that, I modified the decoder to parse the CSV properly as it’s read in and to use the parsed values to decode the message. During the coming week, I’ll keep working on the decoder so that it correctly outputs the decoded message.