By

Marios Savvides

Kiran Bhat

Abstract

We have implemented a real-time video morphing system using feature

points on the images. Our method uses morphing for motion compensation

instead of the standard block based techniques. The input to our system

can be a stored video sequence or live video data from a camera. The system

automatically selects and tracks feature points in the video stream using

the KLT feature tracking algorithm. The number of feature points to be

tracked can be controlled by the user. The feature tracker works in real time

at around 3 Hz (on a Pentium 300). The motion vectors of the feature points

are passed on to the morphing algorithm. Our current morphing system produces

one intermediate morphed image for every two successive "feature-tracked

images". The morphing algorithm uses the motion vector of the nearest feature

point for texture mapping. Though simple to implement, this morphing algorithm

is not very computationally efficient. The overall system works at

1 Hz, which can be improved to around 3 Hz (the feature tracker frame rate)

using standard triangulation methods combined with scanline algorithms

for morphing. This system has numerous applications including video-conferencing

and net video.

KLT Feature Tracking Method

Feature tracking is a widely researched topic in the Computer Vision

community. The most commonly used methods for feature tracking employ image

correlation or sum of squared difference (SSD) techniques. With small inter-frame

displacements, a window can be tracked by optimising some matching criterion

with respect to translation and linear image deformation.
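
As an illustration of the SSD matching criterion mentioned above, the following is a minimal sketch that tracks a window by exhaustive search over small integer translations. It is not the KLT code; the function names, the window half-size `half`, the search radius `search`, and the list-of-rows image layout (`img[y][x]`) are all assumptions made for this example, and the sketch ignores linear deformation entirely.

```python
def ssd(prev, cur, x, y, dx, dy, half):
    """Sum of squared differences between the window centred at (x, y) in
    `prev` and the window centred at (x + dx, y + dy) in `cur`."""
    total = 0.0
    for j in range(-half, half + 1):
        for i in range(-half, half + 1):
            diff = cur[y + dy + j][x + dx + i] - prev[y + j][x + i]
            total += diff * diff
    return total


def track_window(prev, cur, x, y, half=1, search=2):
    """Return the integer displacement (dx, dy), within +/-search pixels,
    that minimises the SSD. Assumes every window stays inside the image."""
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            score = ssd(prev, cur, x, y, dx, dy, half)
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]
```

A gradient-based tracker replaces this brute-force search with an iterative minimisation, which is what makes sub-pixel and affine tracking practical.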

Our current system tracks features in the image based on the method

proposed by Tomasi et al. (the KLT system). The KLT (Kanade-Lucas-Tomasi) feature

tracking system identifies and tracks features by monitoring a measure

of feature dissimilarity (that quantifies the change of appearance of a

feature between the previous and current frame).

Features with good texture (instead of the traditional "interest"

or "cornerness" measures) are selected and tracked using an affine

tracking model, which can compensate for translation and linear

warping. The affine model is computed numerically using the Newton-Raphson

minimization technique. Translation gives more reliable results than affine

changes when the inter-frame motion is small, but affine changes are necessary

to compare frames with large motions to determine dissimilarity. We tested

the tracker on a few face sequences and observed that features which were

identified were tracked satisfactorily. The feature tracker works in real time

at around 3 Hz.
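
To make "good texture" concrete: in the Shi and Tomasi formulation, a window is a good feature when the smaller eigenvalue of its 2x2 matrix of summed gradient products is large. The sketch below computes that score for one window. The central-difference gradients, the window half-size, and the function name are assumptions made for this example and are not taken from the KLT routines.

```python
import math


def min_eigenvalue(img, x, y, half=1):
    """Smaller eigenvalue of the gradient matrix
        [[sum gx*gx, sum gx*gy],
         [sum gx*gy, sum gy*gy]]
    accumulated over the (2*half+1)^2 window centred at (x, y).
    `img` is a list of rows (img[y][x]); the window must lie at least
    one pixel inside the image border."""
    gxx = gxy = gyy = 0.0
    for j in range(y - half, y + half + 1):
        for i in range(x - half, x + half + 1):
            gx = (img[j][i + 1] - img[j][i - 1]) / 2.0  # horizontal gradient
            gy = (img[j + 1][i] - img[j - 1][i]) / 2.0  # vertical gradient
            gxx += gx * gx
            gxy += gx * gy
            gyy += gy * gy
    half_trace = (gxx + gyy) / 2.0
    det = gxx * gyy - gxy * gxy
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    disc = math.sqrt(max(half_trace * half_trace - det, 0.0))
    return half_trace - disc
```

A flat region or a straight edge scores zero (or close to it) because the gradients vary in at most one direction, whereas a textured or corner-like window scores higher. A selector would keep the windows whose score exceeds a threshold.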

The Morphing Algorithm

We have implemented a very simple algorithm for morphing the intermediate

frame from the current and previous images of the video stream. We look

at all the tracked feature points in the current image, and find their

corresponding positions in the previous image. Using this information,

we can compute the motion vectors of all the feature points in the current

image. Then, for each pixel (ix, iy) in the current image, we find its

closest feature point and assign that point's motion vector (mv_x, mv_y) to the

pixel. For each pixel (ix, iy) in the current image, we move to the pixel

location (ix - mv_x/2, iy - mv_y/2) in the intermediate image and set

its value to 0.5*(cur[ix, iy] + prev[ix - mv_x, iy - mv_y]), where cur and prev

denote the current and previous images. Note that (ix - mv_x, iy - mv_y) is the

position of the corresponding pixel in the previous image.
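
The procedure described above can be sketched as follows. This is an illustrative reconstruction, not our original code: the function names, the list-of-rows image layout (`img[y][x]`), integer motion vectors, truncation of the half vector toward zero, and the use of `None` to mark unfilled pixels are all assumptions made for the example.

```python
def nearest_motion_vector(px, py, features):
    """`features` is a non-empty list of ((fx, fy), (mv_x, mv_y)) pairs for
    the current frame. Returns the motion vector of the feature point
    closest to pixel (px, py)."""
    closest = min(
        features,
        key=lambda f: (f[0][0] - px) ** 2 + (f[0][1] - py) ** 2,
    )
    return closest[1]


def morph_intermediate(cur, prev, features):
    """Build the intermediate frame between `prev` and `cur`.
    Pixels that nothing maps to are left as None (gaps to fill later)."""
    height, width = len(cur), len(cur[0])
    mid = [[None] * width for _ in range(height)]
    for iy in range(height):
        for ix in range(width):
            mv_x, mv_y = nearest_motion_vector(ix, iy, features)
            # Corresponding pixel in the previous image.
            sx, sy = ix - mv_x, iy - mv_y
            # Halfway position in the intermediate image.
            tx, ty = ix - int(mv_x / 2), iy - int(mv_y / 2)
            inside = (0 <= sx < width and 0 <= sy < height
                      and 0 <= tx < width and 0 <= ty < height)
            if inside:
                mid[ty][tx] = 0.5 * (cur[iy][ix] + prev[sy][sx])
    return mid
```

The nearest-feature search runs once per pixel, so the cost grows with (number of pixels) x (number of features). This is the main reason the pixel-by-pixel approach is slow.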

We note here that even though this algorithm is simple and produces

the desired morphing effect, it is slow since we are processing pixel by

pixel. We employ a few tricks at the pixels in the background (like skipping

every 24 pixels if the distance to the nearest feature point is greater

than a particular threshold) to improve the morphing speed.

System Description

Our system comprises a Pentium 300 with an MRT framegrabber

card and a Sony camcorder.

We have modified the KLT routines (from Stanford Robotics Lab) and

implemented the morphing algorithm to produce a visual testing framework.

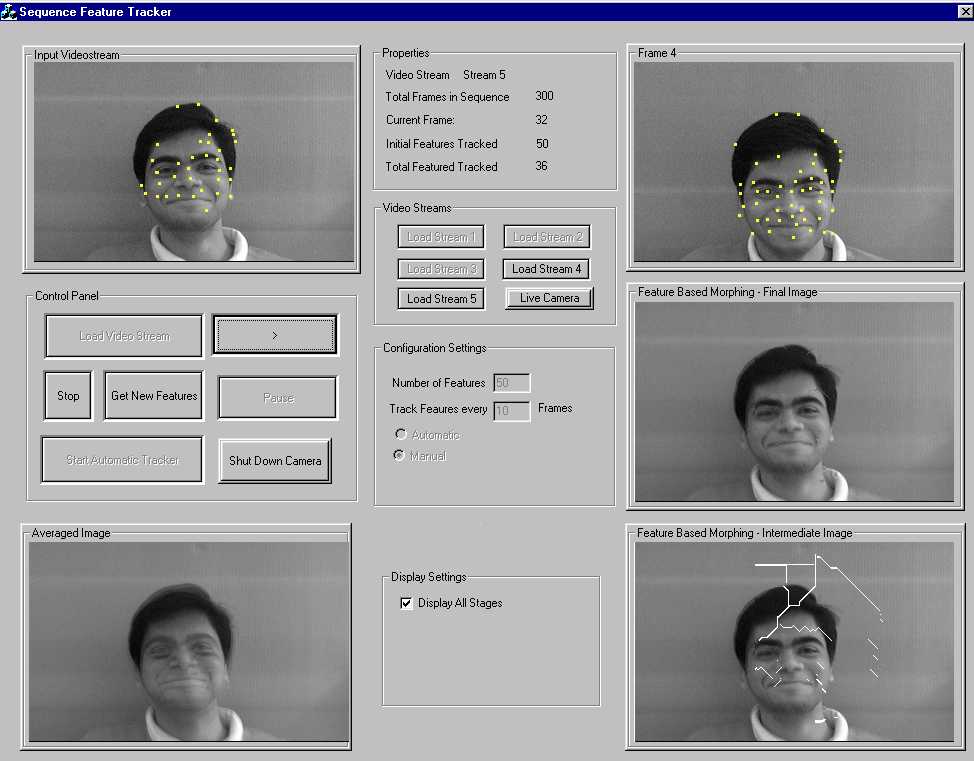

GUI

- Choice of loading from the camera or from 5 stored video streams (faces, people walking, etc.).

- Image display of the base frame (the image at which the feature tracker last replaced lost features).

- Image display of the current frame (for comparison between the two).

- Image display of the final morphed image.

- Image display of the intermediate morphed image (before filling the gaps).

- Image display of the averaged image (obtained by averaging the current and previous frames), shown for comparison with the final morphed image.

- Automatic frame-by-frame feature tracking (500 ms timed update interval).

- Manual step-through of the feature tracking process.

- Ability to specify the number of features to be found and tracked through a sequence.

- Ability to specify the number of frames to elapse before replacing lost features.

- Display of the number of features successfully tracked in the current frame (relative to the features in the base frame).

- User-friendly, program-safe interface: depending on the user's action, the GUI disables unavailable controls to prevent illegal actions, such as pressing the Load Stream 2 button while viewing video stream 1 in auto mode. In this way, the GUI directs the user to the options that are valid at each moment during the execution of the program.

Results and Future Work

The following snapshot illustrates the performance of our feature based

morphing system. The top left image shows the current frame of the video

stream, the top right image is offset from the current image by 10 frames

(the frame when the features were replaced previously). The center image

shows the final morphed image, the bottom right shows the intermediate

morphed image and the bottom left shows the averaged image. From these

images it is clear that feature points are tracked temporally, and the

quality of the morphed image is comparable to the input image (for small

motions). We also note that the quality of the morphed image is clearly superior

to that of the averaged image.
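
For reference, the averaged image used in this comparison is a plain per-pixel mean of the two frames, with no motion compensation. A minimal sketch follows; the function name and list-of-rows layout are assumptions for illustration.

```python
def average_frames(cur, prev):
    """Per-pixel mean of two equally sized frames (lists of rows)."""
    return [
        [0.5 * (c + p) for c, p in zip(cur_row, prev_row)]
        for cur_row, prev_row in zip(cur, prev)
    ]
```

When an object moves between frames, averaging produces two half-intensity copies of it (ghosting), whereas motion-compensated morphing places a single copy at the halfway position.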

We found that the feature tracker works at around 3-4 Hz, and the overall

system works at around 1 Hz. We can improve the speed of the feature tracker

by selectively choosing feature points on the foreground object. The morphing

algorithm can be improved by precomputing the Voronoi regions of the feature points prior to texture

mapping of each pixel. This system has numerous commercial applications

and is also a good testbed for testing various advanced computer vision

& image processing algorithms.
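
One possible way to precompute such regions is a multi-source breadth-first flood fill from all feature points at once, which labels every pixel with its nearest feature in a single pass over the image. This is a sketch of the idea rather than something we implemented. It assumes 4-connected growth, so "nearest" is measured in city-block (L1) distance rather than Euclidean distance, and ties go to whichever feature reaches the pixel first. The function name and argument layout are hypothetical.

```python
from collections import deque


def voronoi_labels(width, height, feature_points):
    """Return labels[y][x] = index of the feature point nearest to (x, y)
    under city-block distance. `feature_points` is a list of (fx, fy)
    integer positions inside the image."""
    labels = [[-1] * width for _ in range(height)]
    queue = deque()
    for index, (fx, fy) in enumerate(feature_points):
        if labels[fy][fx] == -1:  # ignore duplicate positions
            labels[fy][fx] = index
            queue.append((fx, fy))
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < width and 0 <= ny < height and labels[ny][nx] == -1:
                labels[ny][nx] = labels[y][x]
                queue.append((nx, ny))
    return labels
```

With the label map in hand, the per-pixel motion vector becomes a table lookup, so the labelling cost is proportional to the number of pixels and no longer depends on the number of features.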

References

1) J. Shi and C. Tomasi, "Good Features to Track", IEEE Conf. on Computer Vision and Pattern Recognition (CVPR 94), Seattle, June 1994.

2) CMU Computer Vision home page.

3) T. Beier and S. Neely, "Feature-Based Image Metamorphosis", Computer Graphics (SIGGRAPH), 26(2), July 1992.

4) G. Wolberg, "Digital Image Warping", IEEE Computer Society Press Monograph, 1988.

5) J. Prosise, "Programming Windows 95 with MFC", Microsoft Press, 1996.

6) C. Petzold, "Programming Windows, Fifth Edition", Microsoft Press, 1999.

Link to our code