Concept

Motivation

Competitive Analysis

Oculus Touch/HTC Vive Controller

Current solutions from Oculus and HTC offer very little in the way of touch; they focus on interacting with VR rather than feeling it. The Oculus Touch uses capacitive sensors to detect where the user's fingers rest on the controller, and the Vive Controller provides some haptic feedback. Although these controllers are advertised as "natural" and "intuitive" ways to interact with VR, they are anything but. Both require the user to hold a physical object even when their virtual hand is empty, which breaks the immersion of the VR experience.

Gloveone

Launched on Kickstarter in mid-2015, the Gloveone is a glove that uses IMU sensors and vibrotactile actuators to provide haptic feedback in virtual reality. Despite reaching its $150,000 funding goal and promising a 2016 release, the Gloveone has yet to ship, and its website still offers preorders.

Dexmo

The Dexmo is a hand exoskeleton that provides realistic physical resistance for VR and AR platforms, but it is large and heavy and would likely break immersion for the user. It is also not yet available to the public.

Use Cases

Our system aims to create more immersive VR experiences without handheld controllers. It targets both consumer and professional markets, for example more intuitive controls for VR video games (interacting with the environment by hand, holding items, etc.) and remote surgery performed by doctors through VR.

Requirements

- Hand Tracking

  - Precise in-field-of-view tracking with a Leap Motion mounted to the front of the VR headset

  - Approximate outside-field-of-view tracking with IMUs mounted to the gloves

  - Collision detection between VR objects and the user's hands

- Haptic Feedback

  - Simulate touch and weight with a multiplexed array of vibrational actuators

  - Restrict the movement of the user's fingers and hands with a series of hand-mounted servos

- Lightweight and low-profile

  - Comfortable to wear and does not interfere with the immersion of the VR experience

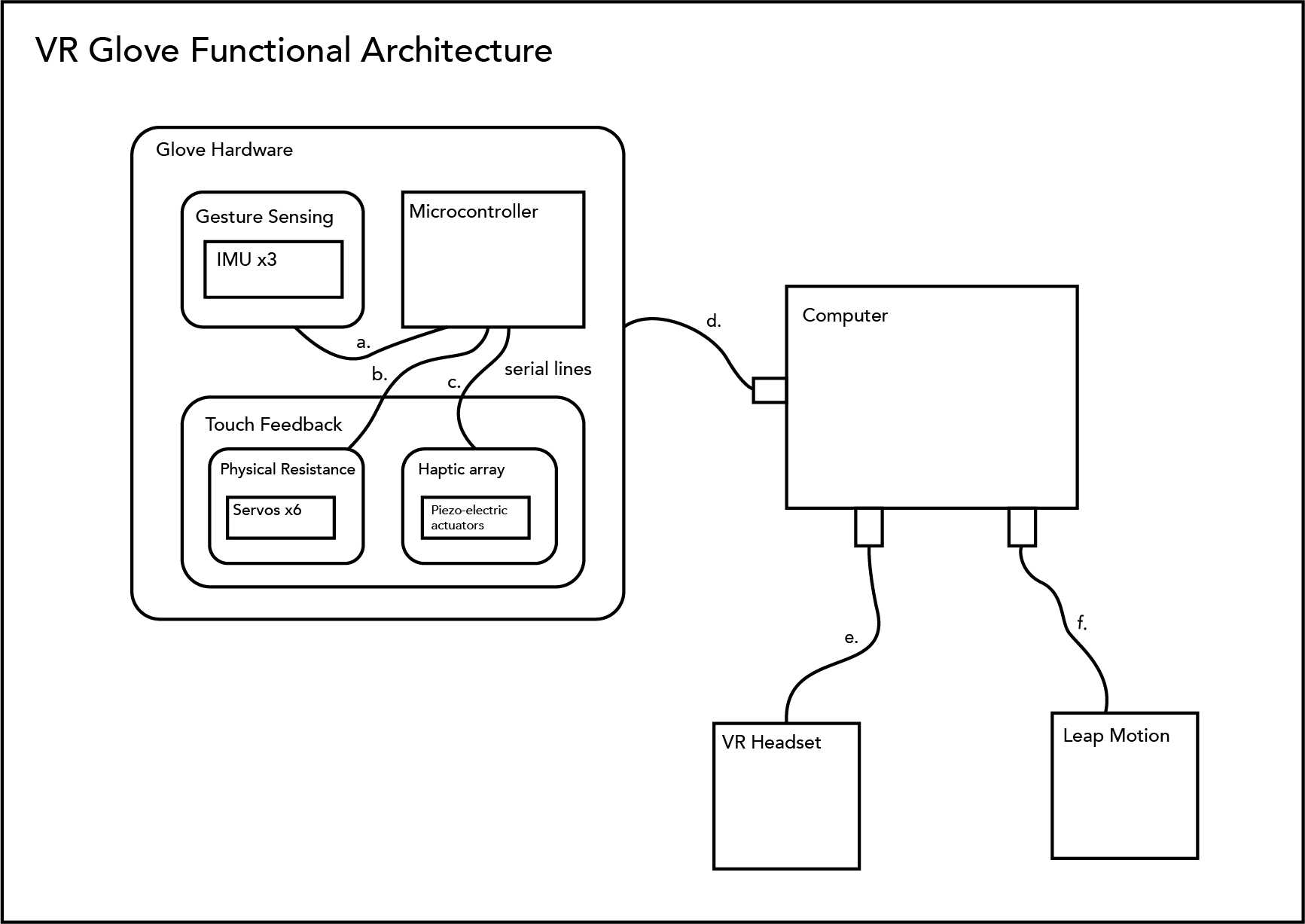
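To make the collision-detection requirement concrete, here is a minimal sketch that approximates each fingertip as a sphere and each virtual object as an axis-aligned box. In the real system Unity's physics engine would perform this test; the classes `Sphere` and `Box` and the function `fingertip_contacts` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Sphere:
    """Hypothetical fingertip proxy (center and radius in metres)."""
    center: Vec3
    radius: float


@dataclass
class Box:
    """Hypothetical axis-aligned bounding box around a virtual object."""
    min_corner: Vec3
    max_corner: Vec3


def sphere_intersects_box(s: Sphere, b: Box) -> bool:
    # Clamp the sphere center into the box to get the closest point on the box,
    # then compare the squared distance to that point with the squared radius.
    dist_sq = 0.0
    for c, lo, hi in zip(s.center, b.min_corner, b.max_corner):
        closest = min(max(c, lo), hi)
        dist_sq += (c - closest) ** 2
    return dist_sq <= s.radius ** 2


def fingertip_contacts(tips: dict[str, Sphere], obj: Box) -> list[str]:
    """Names of the fingertips currently touching the object."""
    return [name for name, tip in tips.items() if sphere_intersects_box(tip, obj)]


# Example: a 10 cm cube with the index fingertip overlapping the face at z = 0.1.
cube = Box((0.0, 0.0, 0.0), (0.1, 0.1, 0.1))
tips = {
    "index": Sphere((0.05, 0.05, 0.108), 0.01),
    "thumb": Sphere((0.05, 0.05, 0.2), 0.01),
}
print(fingertip_contacts(tips, cube))  # ['index']
```

A fuller version would also report the contact point and penetration depth, since the haptic and servo logic need to know where and how hard each finger is pressing.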

Architecture

- For gestures made outside the Leap Motion's field of view, we need simple gesture recognition so that the system can still grasp objects. Three IMUs (one on the index finger, one on the thumb, and one at the base of the fingers) let us detect simple contraction and extension. This information is sent to the microcontroller and then to the computer, which maintains the grasping position so that the models of the hands and objects remain in contact.

- To simulate physical resistance when grasping an object, servos control cables connected to each finger. The servos take commands from the microcontroller, which interprets the mesh collision information. They restrict finger movement according to that collision information by extending or retracting the connected cable.

- To simulate the user touching an object in the VR space, the microcontroller selects specific areas of the haptic array and drives the piezoelectric actuators there to produce vibrations. For example, if an index finger presses down on a virtual keyboard, the actuator at the fingertip vibrates to indicate contact with a key.

- All sensor and actuation data passes between the microcontroller and the computer. The computer uses the sensor data to update the models of the user's hands, and the microcontroller uses the actuation data to create physical responses inside the gloves.

- The VR headset provides positional information about the user's head in 3D space, which is useful for determining collisions with the glove.

- The Leap Motion is our primary means of grip recognition. It recognizes hand position and certain gestures and conveys to the headset's SDK when the user is attempting to pick up an object.

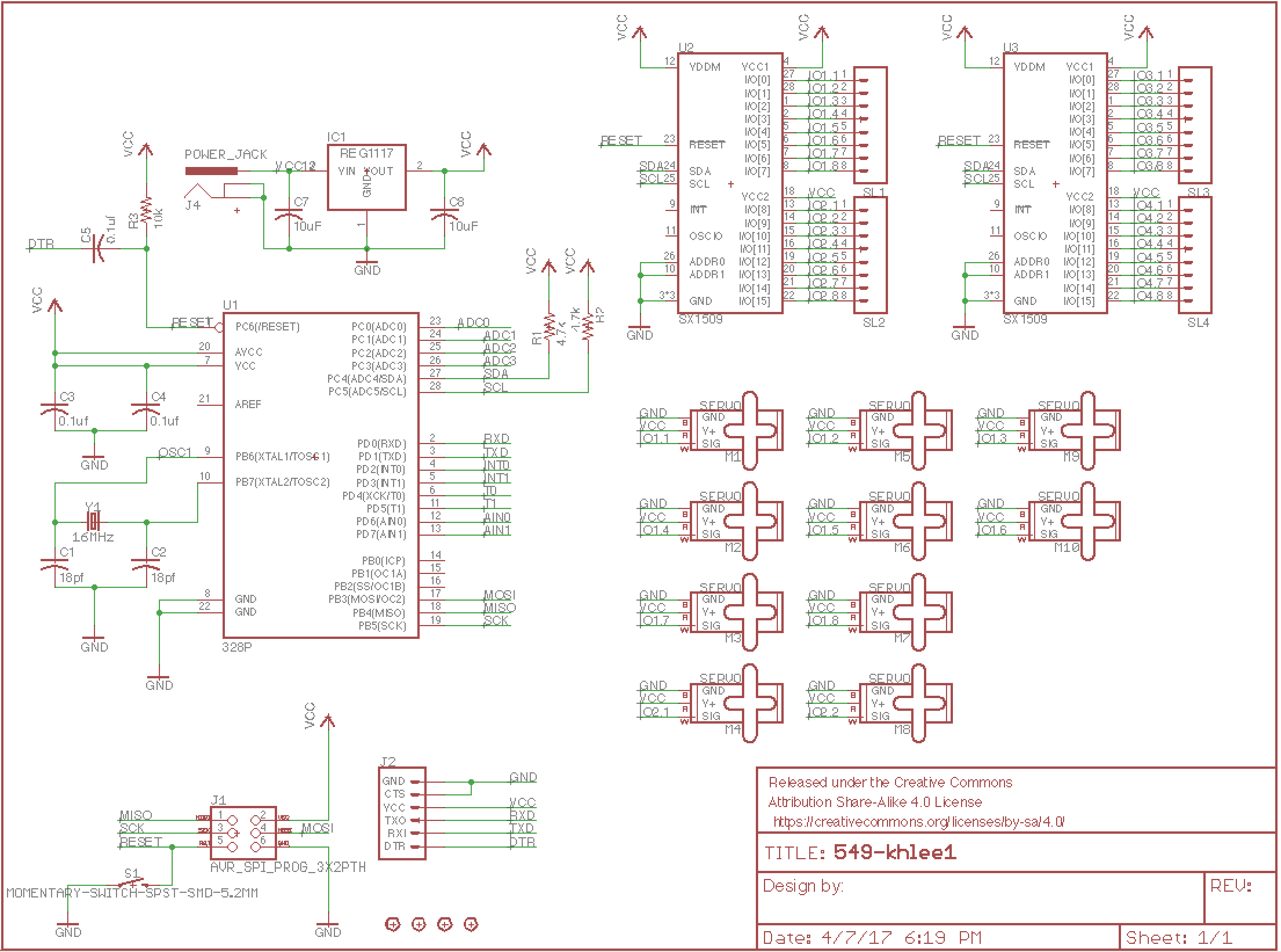
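Outside the Leap Motion's field of view, the three IMUs only need to distinguish contraction from extension. The sketch below is a minimal illustration, assuming each IMU already reports a fused pitch angle in degrees: it measures the index finger and thumb relative to the IMU at the base of the fingers and applies hysteresis so the grasp state does not flicker. The thresholds, `DigitState`, and `GraspDetector` are hypothetical and would be tuned per user.

```python
from dataclasses import dataclass

# Hypothetical thresholds in degrees of flexion relative to the base IMU;
# real values would come from per-user calibration.
CONTRACT_DEG = 50.0  # above this a digit is treated as contracted
EXTEND_DEG = 30.0    # below this a digit is treated as extended again


def relative_flexion(digit_pitch_deg: float, base_pitch_deg: float) -> float:
    # Measuring against the base IMU means tilting the whole hand does not look
    # like a finger curling. Simplification: one pitch angle per IMU, no wrap-around.
    return digit_pitch_deg - base_pitch_deg


@dataclass
class DigitState:
    contracted: bool = False

    def update(self, flexion_deg: float) -> bool:
        # Hysteresis: each direction uses its own threshold, so noise around a
        # single threshold cannot toggle the state every sample.
        if not self.contracted and flexion_deg > CONTRACT_DEG:
            self.contracted = True
        elif self.contracted and flexion_deg < EXTEND_DEG:
            self.contracted = False
        return self.contracted


class GraspDetector:
    """Reports a grasp while both the index finger and thumb are contracted."""

    def __init__(self) -> None:
        self.index = DigitState()
        self.thumb = DigitState()

    def update(self, index_pitch: float, thumb_pitch: float, base_pitch: float) -> bool:
        i = self.index.update(relative_flexion(index_pitch, base_pitch))
        t = self.thumb.update(relative_flexion(thumb_pitch, base_pitch))
        return i and t
```

The grasp flag is what the computer would use to keep the virtual hand and the held object in contact while the hand is out of the Leap Motion's view.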

Technical Specifications

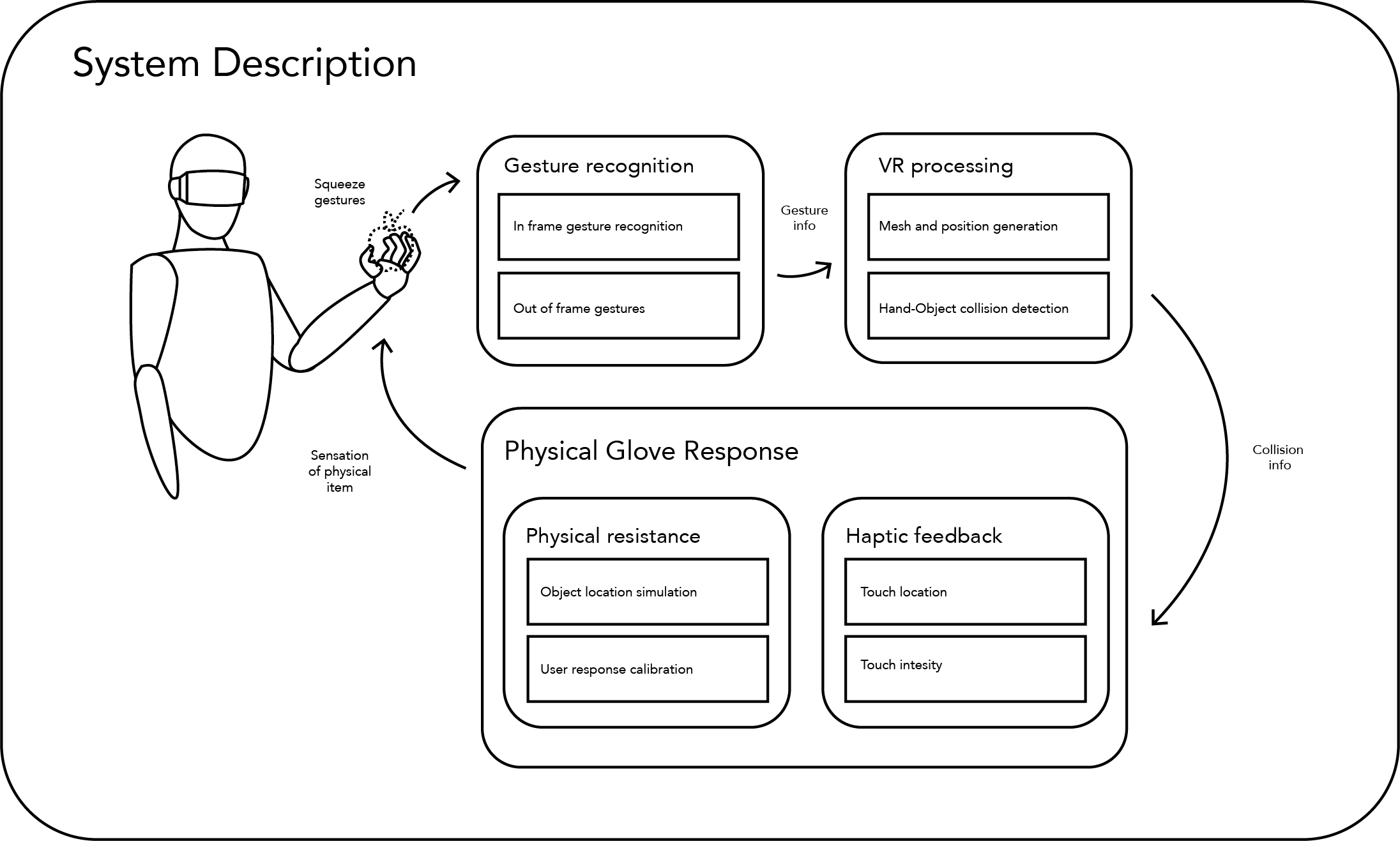

The overall goal of our system is to simulate the feeling of grasping a real object in a virtual environment. This requires a continuous adjustment cycle in which the glove hardware updates its restrictive elements to match the interaction between the user's hands and objects in the virtual environment. As the user reaches to grab an object, our gesture recognition system (Vive + Leap Motion) updates the virtual model of their hands. The glove's physical response system then reacts to the new hand position and the hand-object collision information.

Because fingers should not be able to pass through an object, the servo motors stop the fingers from closing past the collision point. Since the system applies resistive forces, a calibration step must establish a neutral state: a user-defined point beyond which the servos will not pull the fingers back, to avoid injuring the hand.

Finally, the haptic signals tell the user where their hands are making contact with objects. Together, touch location and touch intensity tell the user how to adjust their interaction with the object they are holding.
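One pass of the adjustment cycle described above can be sketched as follows. This is a minimal illustration under stated assumptions: finger closure is normalized from 0 (open) to 1 (fully closed), each servo maps that range linearly onto an angle, and haptic intensity scales with how far the virtual hand presses into an object. `FingerCalibration`, the 5 mm full-scale value, and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FingerCalibration:
    """Hypothetical per-finger calibration captured during setup.

    neutral: user-defined safe limit (normalized flexion); the servo never pulls
        the finger back past this point.
    servo_open_deg / servo_closed_deg: servo angles at full extension / full flexion.
    """
    neutral: float
    servo_open_deg: float
    servo_closed_deg: float


def stop_flexion(collision_flexion: Optional[float], cal: FingerCalibration) -> float:
    """Farthest the finger may close during this cycle."""
    if collision_flexion is None:
        return 1.0  # no contact: leave the finger free to close fully
    # Stop at the contact point, but never past the calibrated neutral position.
    return max(cal.neutral, min(collision_flexion, 1.0))


def servo_angle(flexion: float, cal: FingerCalibration) -> float:
    """Linearly map a normalized flexion stop onto the servo's angle range."""
    span = cal.servo_closed_deg - cal.servo_open_deg
    return cal.servo_open_deg + flexion * span


def haptic_duty(penetration_mm: float, full_scale_mm: float = 5.0) -> float:
    """Map press depth into an object to a 0..1 vibration duty cycle."""
    return max(0.0, min(penetration_mm / full_scale_mm, 1.0))
```

The `max(cal.neutral, ...)` clamp encodes the safety rule from the calibration step: a collision can stop the finger early, but it can never drive the servo to pull the finger back past the user's neutral point.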

Interaction Diagram

Components

Hardware

- HTC Vive

- Leap Motion

- Vibrational Actuators

- Servo Motors

- 3D-Printed Exoskeleton

- Glove with Platform

- Custom PCB

- Port Expander

Software

- Unity

- SteamVR

- C#

Protocols

- UART

- USB

- I2C
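The sensor and actuation data exchanged between the computer and the microcontroller needs some framing so that either side can find message boundaries and reject corrupted bytes. The sketch below is a minimal illustration, assuming a serial link over USB or UART, with a hypothetical frame layout: a start byte, a message type, a one-byte length, the payload, and an 8-bit additive checksum. The constants and the payload layout are assumptions, not a defined protocol for this project.

```python
import struct

START = 0xAA        # hypothetical start-of-frame marker
MSG_ACTUATE = 0x01  # hypothetical type: computer -> glove (servo angles + haptic duties)
MSG_IMU = 0x02      # hypothetical type: glove -> computer (IMU readings)


def checksum(data: bytes) -> int:
    """Sum of all bytes, truncated to 8 bits."""
    return sum(data) & 0xFF


def encode_frame(msg_type: int, payload: bytes) -> bytes:
    if len(payload) > 255:
        raise ValueError("payload too long for a 1-byte length field")
    body = bytes([msg_type, len(payload)]) + payload
    return bytes([START]) + body + bytes([checksum(body)])


def decode_frame(frame: bytes) -> tuple[int, bytes]:
    if len(frame) < 4 or frame[0] != START:
        raise ValueError("missing start byte or frame too short")
    msg_type, length = frame[1], frame[2]
    if len(frame) != 4 + length:
        raise ValueError("length field does not match frame size")
    body = frame[1:3 + length]  # type, length, payload
    if checksum(body) != frame[3 + length]:
        raise ValueError("checksum mismatch")
    return msg_type, frame[3:3 + length]


def actuate_payload(servo_deg: list[int], duties: list[float]) -> bytes:
    """Pack servo angles (0-180) and haptic duties (0.0-1.0) as unsigned bytes."""
    duty_bytes = [round(d * 255) for d in duties]
    return struct.pack(f"<{len(servo_deg)}B{len(duty_bytes)}B", *servo_deg, *duty_bytes)
```

An 8-bit sum is cheap to compute on a microcontroller; a CRC would catch more error patterns at a small extra cost.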

Team Members

Stephen Chung, Kevin Lee, Brian Li, Vincent Liu