TEAM 8: Rapid Ocular Sideline Concussion Diagnostics

Spring 2014

Team Members

- Brandon Lee

- Andrew Pfeifer

- Thomas Phillips

- Ryan Quinn

Project Concept and Motivation

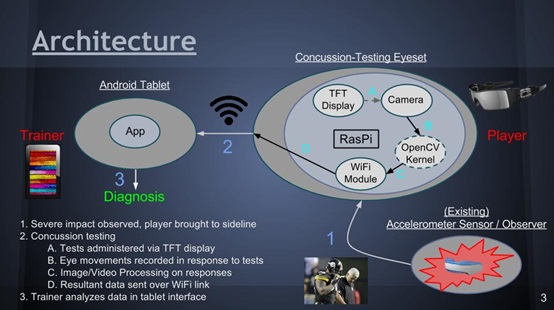

Concussions are a major concern in modern sports. Our goal is to provide trainers with tools that automate existing diagnostic processes and speed up existing tests, so that multiple players can be diagnosed more quickly. Our project focuses specifically on ocular nerve testing. The device we are working on is a head-mounted eye-set that administers various tests to the patient and records and processes pupil and eye responses to produce a concussion diagnosis. Results and diagnosis suggestions will be sent wirelessly to an application running on a trainer's Android tablet.

Competitive Analysis

Most existing products are simply accelerometers mounted on or in common sports equipment, or on the players themselves. These devices are useful for detecting severe impacts, but they do not diagnose the player's condition. Ideally, our product would be used together with one of these devices, another similar device, or traditional observation. Any of these could prompt a trainer to pull a player to the sideline for testing and diagnosis with the eye-set.


Technical Specifications

Hardware:

Eye-set

- A custom, ergonomic head-mounted housing

- Raspberry Pi, Model B

Attachments

- WiFi Module

- 2 RasPi Camera Boards (with custom lensing)

- 2 RasPi NTSC/PAL TFT Displays (with custom viewing lenses)

- SD Card

- Cell Batteries (for mobile power)

Android Tablet

- Prontotec A20 PT7-A20-U with Linux/Android 4.2 used for testing

Software and Protocols:

- OpenCV Image/Video Processing (C++ / Python)

- Android Application

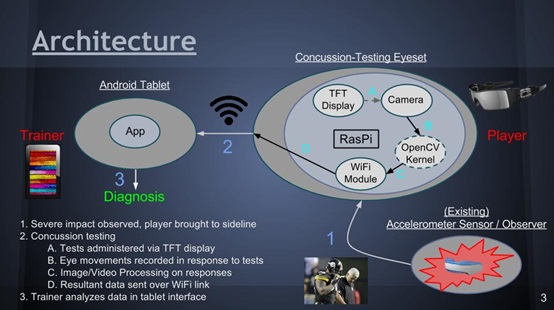

Simple architecture diagram from Presentation 2:

Images: © Amazon, Rayban, Steelers, X2 Biosystems

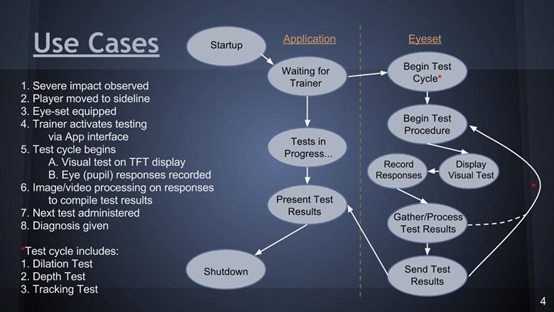

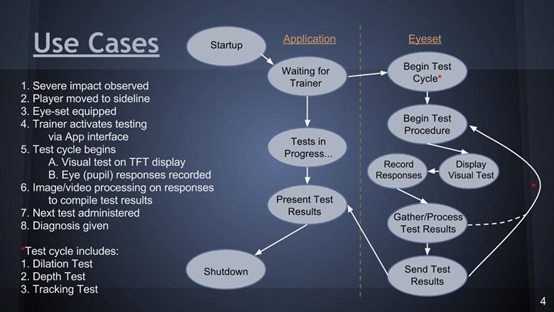

Simple use case flow from Presentation 2:

Requirements

- Functional Requirement: Accepts image/video feed as input.

- Functional Requirement: Processes image/video with OpenCV.

- Functional Requirement: Produces results based on pupil dilation in response to tests.

- Functional Requirement: Sends data from eye-set to tablet.

- Functional Requirement: Tablet displays data and diagnosis in easy-to-use application.

- Timing Requirement: Camera must pick up eye responses in "real-time".

- Timing Requirement: Response processing should be completed and a diagnosis given within 10 seconds of test completion.
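The requirements above describe a pipeline that ends with a result sent from the eye-set to the tablet, within a 10-second budget. Below is a minimal sketch of that last step. It assumes the results travel over the WiFi link as JSON. The `build_result_message` helper and all field names are hypothetical. The analysis function is passed in as a placeholder; it is not the project's diagnosis logic.

```python
import json
import time
from typing import Callable, Sequence

# Timing requirement: diagnosis within 10 s of test completion.
DIAGNOSIS_BUDGET_S = 10.0


def build_result_message(subject_id: str, test_name: str,
                         diameters_mm: Sequence[float],
                         analyze: Callable[[Sequence[float]], str]) -> str:
    """Run an analysis function on one test's pupil data and wrap it as JSON.

    Hypothetical helper: the field names are illustrative assumptions,
    not the project's actual message format.
    """
    start = time.monotonic()
    diagnosis = analyze(diameters_mm)  # placeholder for the real analysis
    elapsed_s = time.monotonic() - start
    return json.dumps({
        "subject_id": subject_id,
        "test": test_name,
        "samples": len(diameters_mm),
        "diagnosis": diagnosis,
        "processing_s": round(elapsed_s, 3),
        "within_budget": elapsed_s <= DIAGNOSIS_BUDGET_S,
    })


# Example with a dummy analyzer that always returns the same label.
print(build_result_message("player-07", "brightness", [6.1, 5.2, 4.3],
                           lambda d: "placeholder"))
```

The analysis function is measured separately, so the message reports whether the timing requirement was met. The tablet application can then flag slow runs.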

Risks and Mitigation Strategies

- Direct access to concussed patients for testing might not be available

  - Extensive testing with unconcussed subjects

  - UPMC contacts may help

- Eye-set design may be uncomfortable, unbalanced, or clunky

  - Design a compact housing for the RasPi and sensors

  - A counterbalance may be needed to offset the RasPi's weight

- Camera focus may lack the sharpness and clarity needed for accurate analysis

  - Alternative lenses may need to be acquired or made

  - Image and video processing algorithms should account for possible blurring

  - Image and video processing algorithms should account for varying eye types and colors
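The last two mitigations can be illustrated with a simple pupil-measurement step. The project uses OpenCV for this. The sketch below uses plain Python lists instead, so it runs on its own, and it makes these assumptions:

- The input is a grayscale close-up of one eye in which the pupil is the darkest region.
- A 3x3 mean filter smooths the frame first, to suppress noise and mild blur.
- The threshold is set relative to the darkest pixel of each frame rather than at a fixed level, so it follows overall frame brightness. Very dark irises that come within `margin` of the pupil's intensity would still need extra handling.
- `margin` is a hypothetical tuning value.
- The dark pixels form a single pupil, with no handling of eyelashes or shadows.

```python
import math
from typing import List, Tuple

Image = List[List[int]]  # grayscale rows, 0 (black) .. 255 (white)


def box_blur(img: Image) -> Image:
    """3x3 mean filter; edge pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) // len(vals)
    return out


def estimate_pupil(img: Image, margin: int = 30) -> Tuple[float, float, float]:
    """Return (cx, cy, diameter_px) of the dark region assumed to be the pupil.

    The threshold is the darkest smoothed pixel plus a hypothetical `margin`,
    so it adapts to each frame's brightness instead of being fixed.
    """
    smoothed = box_blur(img)
    threshold = min(min(row) for row in smoothed) + margin
    pts = [(x, y) for y, row in enumerate(smoothed)
           for x, v in enumerate(row) if v <= threshold]
    area = len(pts)  # never zero: the darkest pixel always passes
    cx = sum(p[0] for p in pts) / area
    cy = sum(p[1] for p in pts) / area
    diameter = 2.0 * math.sqrt(area / math.pi)  # circle with the same area
    return cx, cy, diameter


# Synthetic 21x21 frame: light background with a dark disc of radius 5.
frame = [[20 if (x - 10) ** 2 + (y - 10) ** 2 <= 25 else 200 for x in range(21)]
         for y in range(21)]
print(estimate_pupil(frame))
```

The estimated diameter comes out somewhat below the true 10 px, because blurring lightens the pupil's edge pixels. Comparing diameters across frames of the same test matters more than the absolute value. In OpenCV, the same idea would typically use its built-in blur, threshold, and contour functions.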

Time Lapse

- 30 January 2014 - The team began to investigate possible products for concussion diagnosis, particularly in American football. It was suggested that our research and design could be submitted to the Head Health Challenge II, issued jointly by the National Football League, General Electric, and Under Armour. This would be an opportunity to win funding from the NFL-GE-UA collective responsible for the challenge.

- 5 February 2014 - An initial presentation was given about our new idea: the Rapid Ocular Sideline Concussion Diagnostics eye-set.

- 11 February 2014 - A proposal was submitted to the NFL-GE-UA Head Health Challenge II.

- 19 February 2014 - A second presentation was given detailing our proposed design and architecture.

- 19 February 2014 - Contact was made with a UPMC-affiliated doctor based in West Virginia. He confirmed that ocular testing is probably the most conclusive approach for concussion-related injuries. He also confirmed that testing pupil contraction and dilation is a medically sound strategy. He is currently working on a mouthguard that combines an accelerometer and a gyroscope to take precise measurements of sustained impacts. He suggested that integrating balance testing into the system could make our device more accurate. In his view, an accurate visual testing device that also included balance testing would be among the most comprehensive automated devices currently known for this kind of diagnosis.

- 19 February 2014 - Most of the prototyping parts that were ordered had arrived, and work began. The Raspberry Pi was integrated with the WiFi module, and initial integration with the camera boards began. We also realized that properly tuned lenses can create an illusion of depth on small screens. We will need to ensure that the displays, which sit very close to the wearer's eyes, 1) do not cause headaches, 2) create a reliable illusion of depth for testing, and 3) show clear, focused images.

- 21 February 2014 - More research was done on the OpenCV libraries that we plan to use for image and video processing. Importantly, we also researched techniques for changing the focal depth of the RasPi camera boards. This may solve the problem of achieving clear focus at very short distances when recording the eye's response during tests. This is one of two lens-related issues to address. The other is how close the testing displays sit to the user's eyes. One idea for the latter is a lens placed between the eye and the display, which would create an illusion of depth with a clear, unblurred image.

- 24 February 2014 - *Team Meeting Scheduled*
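The 19 and 21 February entries describe two lens problems:

- focusing the camera on an eye only a few centimeters away;
- making a display close to the eye appear farther away.

Both follow from the thin-lens equation 1/f = 1/d_o + 1/d_i. The small sketch below uses the real-is-positive sign convention. The focal lengths and distances in the example are hypothetical values chosen for illustration. They are not measurements of the RasPi camera or the project's lenses.

```python
def image_distance(f_mm: float, object_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    Positive result: real image behind the lens (e.g. lens-to-sensor distance).
    Negative result: virtual image on the object's side (magnifier behaviour,
    which happens when the object sits inside the focal length).
    """
    if object_mm == f_mm:
        raise ValueError("object at the focal point: image forms at infinity")
    return f_mm * object_mm / (object_mm - f_mm)


def magnification(f_mm: float, object_mm: float) -> float:
    """Lateral magnification m = -d_i / d_o (positive means upright)."""
    return -image_distance(f_mm, object_mm) / object_mm


# Hypothetical camera lens, f = 4 mm, eye 30 mm away.
print(round(image_distance(4.0, 30.0), 2))  # 4.62 (mm behind the lens)

# Hypothetical viewing lens, f = 50 mm, display 45 mm away.
print(image_distance(50.0, 45.0))           # -450.0: virtual image 450 mm away
print(magnification(50.0, 45.0))            # 10.0
```

With these example numbers, an eye 30 mm from a 4 mm lens focuses about 4.62 mm behind it, compared with 4 mm for a distant object. The lens would therefore need to move roughly 0.6 mm outward. A display placed just inside the viewing lens's focal length appears as an upright, magnified virtual image much farther away. This is the depth illusion the entries describe.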

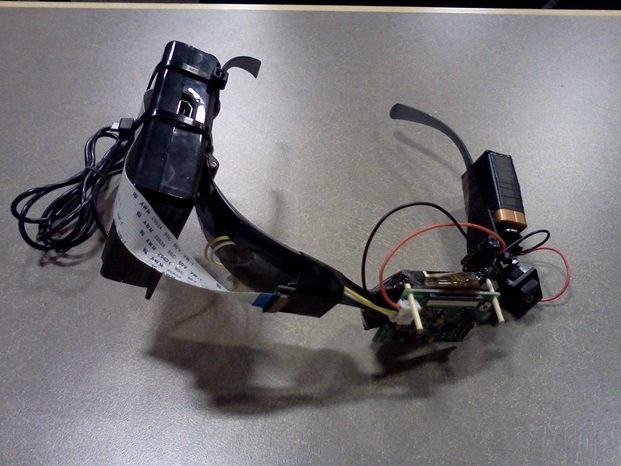

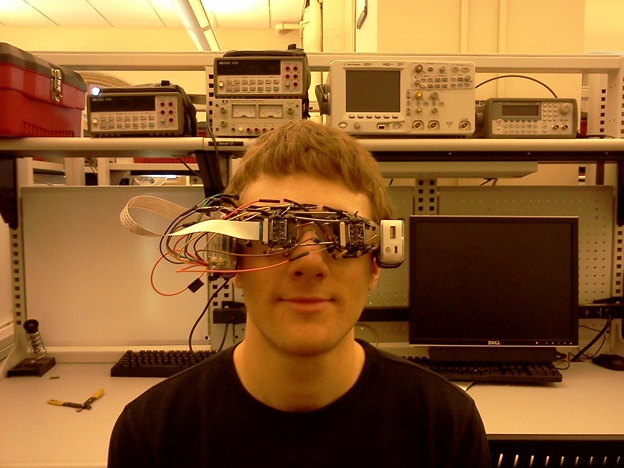

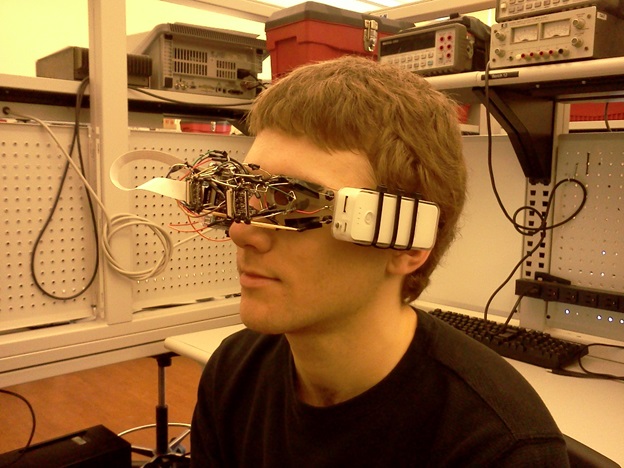

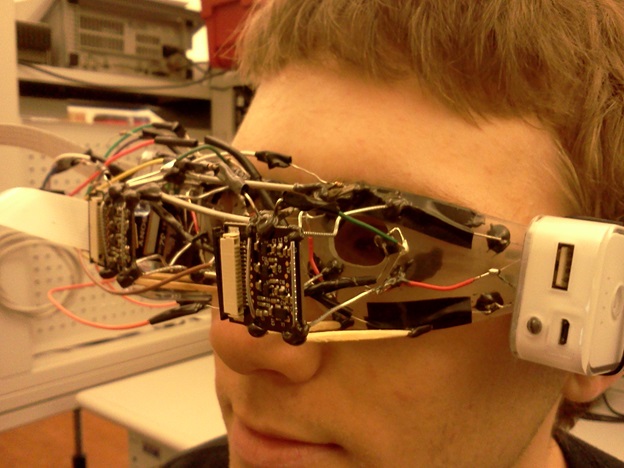

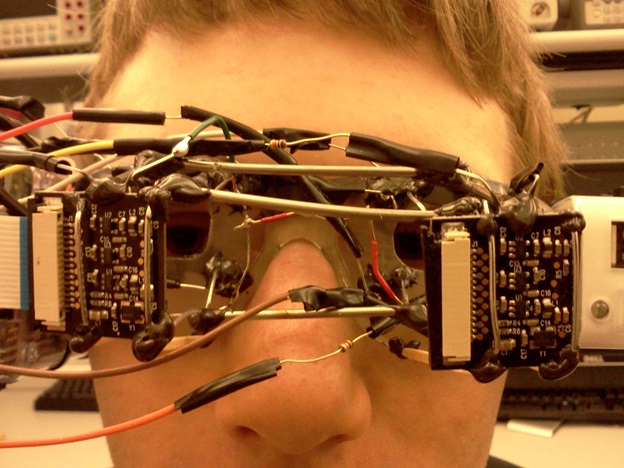

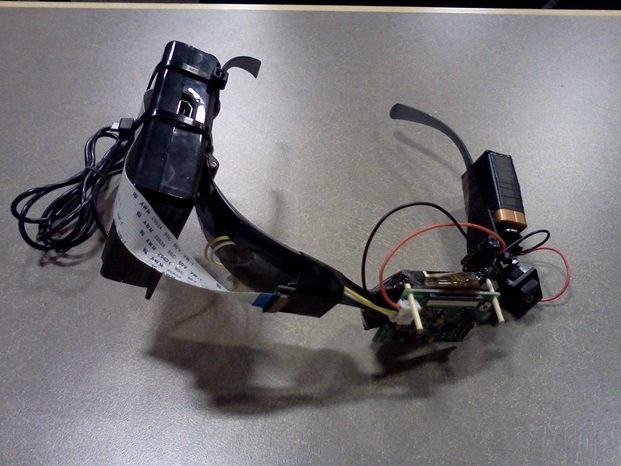

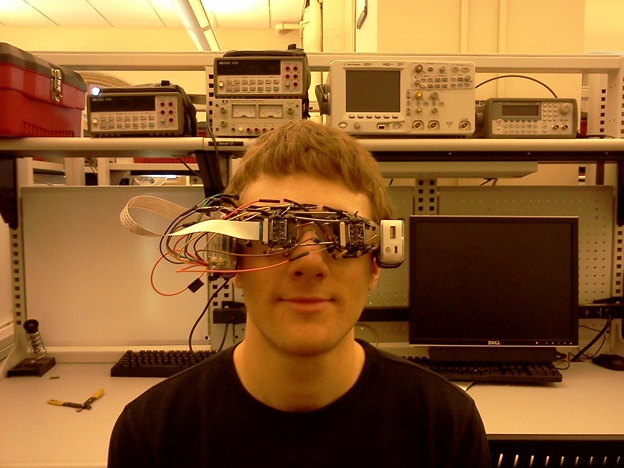

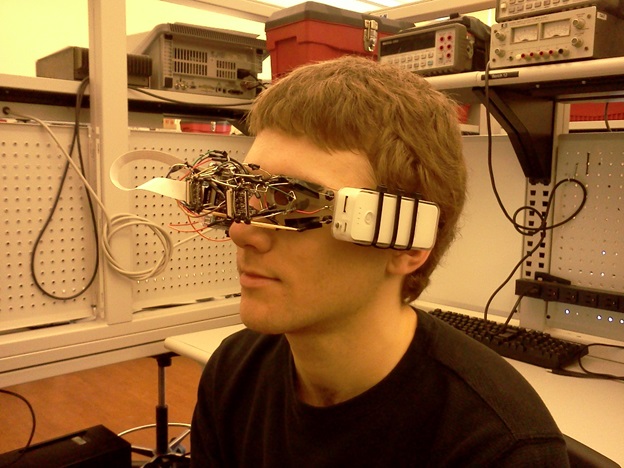

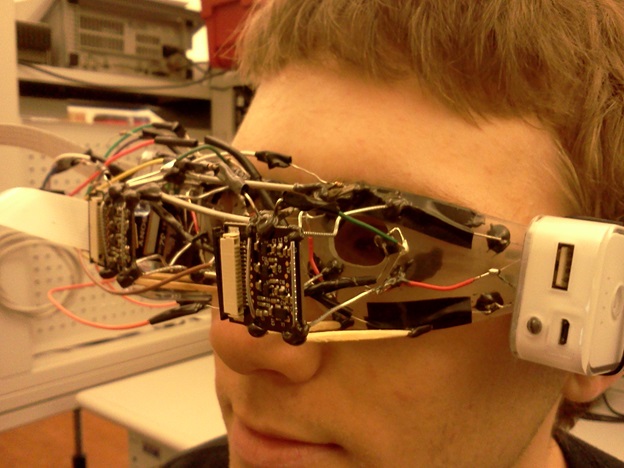

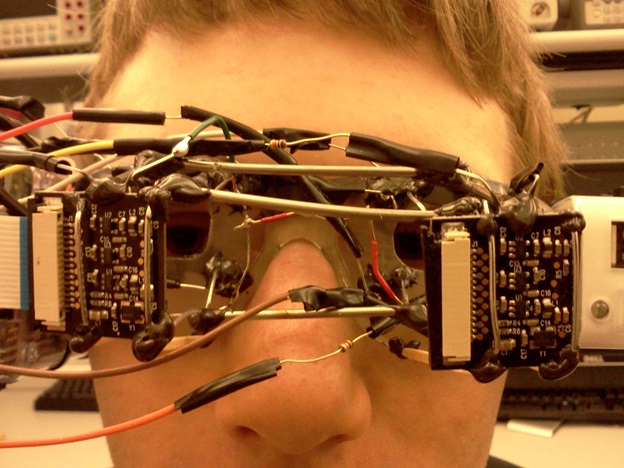

- 5 March 2014 - The mid-semester demo went very well. We presented our first prototype to the class and showed some videos demonstrating the current pupil-size tracking and processing algorithms. There are some images below of the first prototype, from which we could collect good-quality video of pupil responses to a brightness test.

- 26 March 2014 - The third presentation, covering our testing plans, was given. The initial testing plans can be seen in the attached presentation below.
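The brightness test in the 5 March entry records how the pupil constricts after a change in light. Common pupillometry measures for this response include baseline diameter, constriction amplitude, and constriction latency. The minimal sketch below computes them from a tracked diameter series. It assumes timestamps in seconds and a known flash time. The onset criterion, a 5% drop below baseline, is a hypothetical choice. The sketch does not encode any diagnostic cutoff.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class LightReflex:
    baseline: float              # mean diameter before the flash
    minimum: float               # smallest diameter at or after the flash
    constriction_pct: float      # (baseline - minimum) / baseline * 100
    latency_s: Optional[float]   # flash to onset; None if no onset detected


def light_reflex(times_s: Sequence[float], diam: Sequence[float],
                 flash_s: float, onset_frac: float = 0.05) -> LightReflex:
    """Summarise a pupil light reflex from a diameter time series.

    Onset is the first post-flash sample at least `onset_frac` below
    baseline (a hypothetical criterion, not a clinical standard).
    """
    pre = [d for t, d in zip(times_s, diam) if t < flash_s]
    post = [(t, d) for t, d in zip(times_s, diam) if t >= flash_s]
    if not pre or not post:
        raise ValueError("need samples both before and after the flash")
    baseline = sum(pre) / len(pre)
    minimum = min(d for _, d in post)
    latency = next((t - flash_s for t, d in post
                    if d <= baseline * (1 - onset_frac)), None)
    return LightReflex(baseline, minimum,
                       100.0 * (baseline - minimum) / baseline, latency)


# Synthetic example values (not project data): flash at t = 0.2 s.
times = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
diam = [6.0, 6.0, 6.0, 5.9, 5.0, 4.2, 4.5]
print(light_reflex(times, diam, flash_s=0.2))
```

Reporting relative constriction rather than raw millimeters makes results less sensitive to camera distance and lens choice. This matters because both may vary between prototypes.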

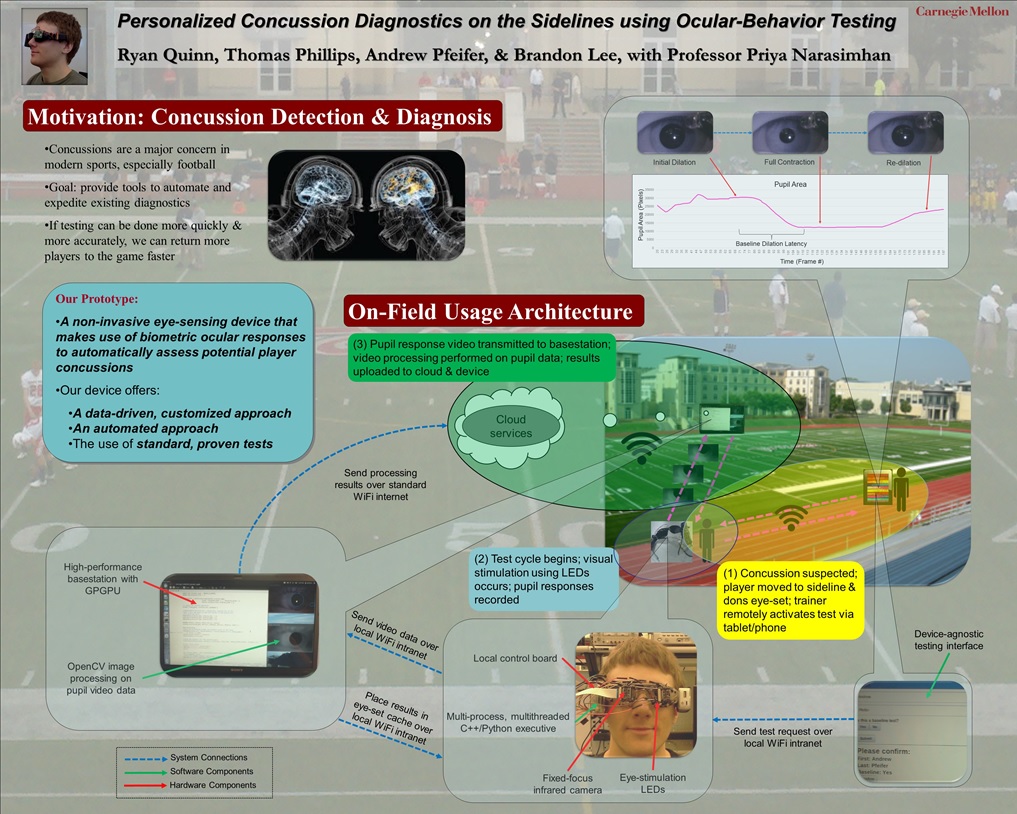

- 26 April 2014 - The device can now be used in a "headless" mode, with the web application interface integration working smoothly. A battery pack is now also affixed to the device. Together with the WiFi connectivity, this makes the device fully portable. There are some images of the second prototype below.

- 30 April 2014 - *Public Poster and Demo Scheduled*
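The 26 April entry mentions a headless mode driven by a web application interface, but the page does not describe how that interface was built. The minimal sketch below shows one way to expose test status over WiFi using Python's standard-library `http.server` on the Pi. The `/status` route, port 8080, the `LATEST` dictionary, and its field names are all hypothetical.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical shared state, updated by the test runner on the eye-set.
LATEST = {"state": "idle", "last_result": None}


def status_body() -> bytes:
    """Serialize the current status as a UTF-8 JSON body."""
    return json.dumps(LATEST).encode("utf-8")


class StatusHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/status":
            self.send_error(404)
            return
        body = status_body()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the headless device's console quiet


def start_server(host: str = "0.0.0.0", port: int = 8080) -> ThreadingHTTPServer:
    """Serve status requests on a background thread and return the server."""
    server = ThreadingHTTPServer((host, port), StatusHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

# On the Pi: server = start_server(); the tablet app could then poll
# http://<pi-address>:8080/status (address is a placeholder).
```

Under this design, the tablet polls a small JSON endpoint instead of holding a persistent connection. The eye-set then never needs a display or keyboard, and a dropped WiFi link only delays the next poll.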

Presentations

Presentation 1 - Project Proposal

Click to Download (.pptx)

Presentation 2 - Design and Architecture

Click to Download (.pptx)

Mid-Semester Demo

Testing in the lab:

Prototype 1.0, as presented at the Mid-Semester Demo:

Presentation 3 - Testing Plan

Click to Download (.pptx)

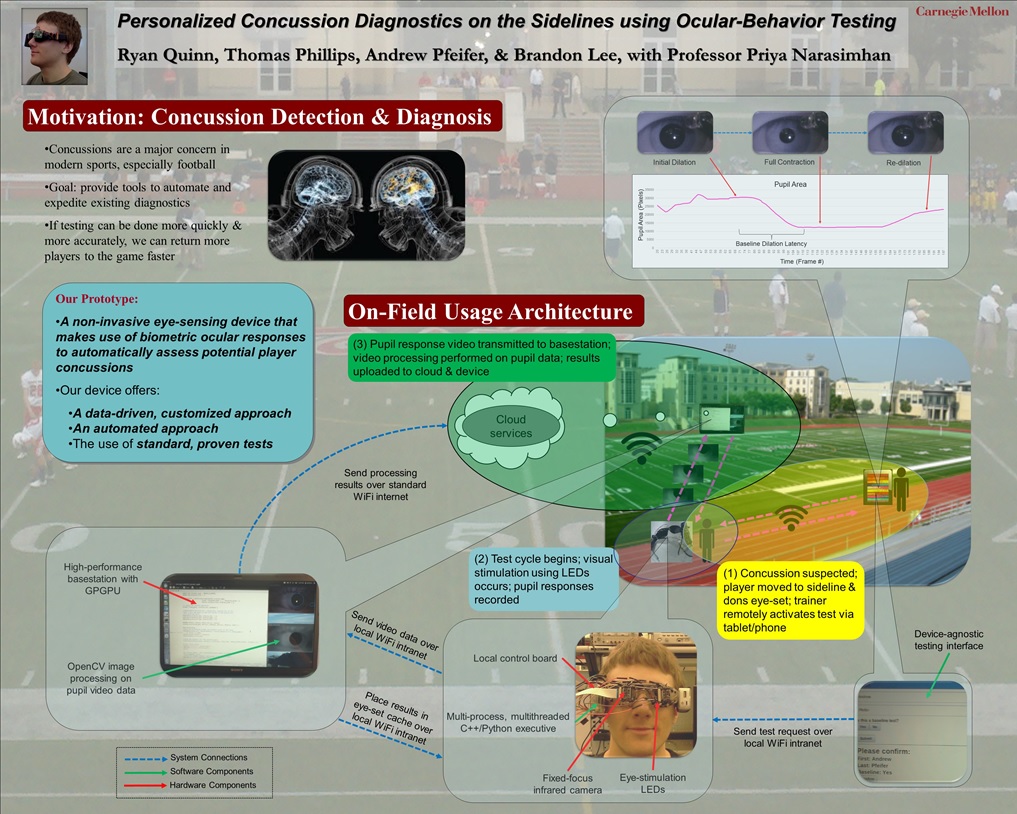

Final Demo Poster

Testing the second prototype in the lab:

Last Updated: 26 April 2014


18-549 course home page