With regard to progress, I believe that I am very slightly behind schedule. Once again, I am not currently too worried about completing our deliverables, and next week, the majority of our time will likely center on some light user testing and on preparing the materials for our final submissions (poster, slides, writeup).

Andrew Wang’s Status Report 4/19/2025

Next week, I also anticipate further refining the navigation logic and testing a speech-to-text feedback loop on the Jetson, as mentioned before.

Andrew Wang’s Status Report 4/12/2025

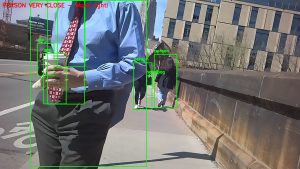

Using our models, I manually implemented visualizations of the bounding boxes identified by the model in the sampled images, and printed any feedback prompts that would be relayed to the user in the top left of each image.

Visually inspecting these images, we see not only that the bounding boxes look accurate to their identified obstacles, but also that we can use their positions in the image to relay feedback. For example, in the second image, a person is coming up behind the user on the right side, so the user is instructed to move to the left. Additionally, inference was very fast: the average inference time per image was roughly 8 ms for the non-quantized models, which is an acceptably quick turnaround for a base model. I haven’t tried running inference on the quantized models, but previous experimentation suggests that they would likely be faster. Since the current models are already acceptably quick, I plan on testing the base models on the Jetson Nano first, before attempting to use the quantized models to maximize performance.
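The position-based feedback described above can be illustrated with a small sketch. Everything here is an assumption for illustration rather than our actual logic: the hypothetical `direction_prompt` function, the `(x1, y1, x2, y2)` pixel box format, the prompt wording, and the choice to split the frame into thirds.

```python
from typing import Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixels, as produced by most YOLO-style detectors.
Box = Tuple[float, float, float, float]


def direction_prompt(box: Box, image_width: int) -> str:
    """Map an obstacle's horizontal position to a movement prompt.

    Assumption (illustrative only): the frame is split into thirds. An obstacle
    in the right third prompts a move to the left, one in the left third prompts
    a move to the right, and one in the middle third prompts the user to stop.
    """
    x1, _, x2, _ = box
    center_x = (x1 + x2) / 2
    if center_x > 2 * image_width / 3:
        return "Obstacle on your right, move left"
    if center_x < image_width / 3:
        return "Obstacle on your left, move right"
    return "Obstacle ahead, stop"
```

For example, `direction_prompt((1000, 200, 1200, 600), image_width=1280)` returns `"Obstacle on your right, move left"`, since the box center (x = 1100) lies in the right third of a 1280-pixel-wide frame.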

Currently, I am about on schedule with regard to ML model integration and evaluation. This week, I plan on working with the staff and the rest of our group to refine the feedback logic built on the object detection model’s outputs; the current logic is fairly rudimentary and often gives multiple prompts to adjust the user’s path when only one, or even none, is actually necessary. If time allows, I also plan on integrating the text-to-speech functionality of our navigation submodule with our hardware. Additionally, I will need to port the object detection models onto the Jetson Nano and more rigorously evaluate their speed on our hardware, so that we can determine whether more aggressive model compression will be necessary to keep inference and feedback as fast as we need them to be.
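One way to address the redundant prompts is to emit at most one prompt per frame and to stay quiet when the same instruction was just given. The sketch below assumes each detection has already been turned into a candidate prompt paired with its box area as a fraction of the frame; the `PromptSelector` class, the "largest box is most urgent" heuristic, and the threshold and cooldown values are all hypothetical and would need tuning with real data.

```python
import time
from typing import List, Optional, Tuple

# Hypothetical candidate format: (prompt text, box area as a fraction of the frame).
Candidate = Tuple[str, float]


class PromptSelector:
    """Emit at most one prompt per frame and suppress rapid repeats.

    Assumptions (illustrative, not tuned): larger boxes are treated as closer and
    therefore more urgent; obstacles covering less than `min_area` of the frame
    are ignored; the same prompt is not repeated within `cooldown_s` seconds.
    """

    def __init__(self, min_area: float = 0.05, cooldown_s: float = 2.0):
        self.min_area = min_area
        self.cooldown_s = cooldown_s
        self._last_prompt: Optional[str] = None
        self._last_time = float("-inf")

    def select(self, candidates: List[Candidate], now: Optional[float] = None) -> Optional[str]:
        now = time.monotonic() if now is None else now
        relevant = [c for c in candidates if c[1] >= self.min_area]
        if not relevant:
            return None  # nothing close enough to warrant a prompt
        prompt, _ = max(relevant, key=lambda c: c[1])  # most urgent = largest box
        if prompt == self._last_prompt and now - self._last_time < self.cooldown_s:
            return None  # the same instruction was just given; stay quiet
        self._last_prompt, self._last_time = prompt, now
        return prompt
```

Using box area as a proxy for distance is a crude heuristic; a depth estimate or the box's vertical position could replace it without changing the structure.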

Andrew Wang’s Status Report: 3/29/2025

I am using the TensorRT and ONNX packages to quantize the YOLO models; based on what I found online, this is the best method for quantizing ML models for edge AI computing, since it yields the largest speedups while shrinking the models appropriately. However, this process was fairly difficult, as I ran into many hardware-specific issues. Specifically, quantizing with these packages involves many under-the-hood assumptions about how the layers are implemented and whether or not they can be fused, which is a key part of the process.
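To make the fusion point concrete, one of the most common fusions is folding a batch-normalization layer into the preceding convolution, so that the pair runs (and is quantized) as a single layer. The sketch below shows the arithmetic for one output channel in plain Python; the function names are hypothetical, and this illustrates the general technique rather than how TensorRT implements it internally.

```python
import math
from typing import List, Tuple


def fold_batchnorm(weights: List[float], bias: float, gamma: float, beta: float,
                   mean: float, var: float, eps: float = 1e-5) -> Tuple[List[float], float]:
    """Fold BatchNorm parameters into one output channel of a conv (or linear) layer.

    BN(y) = gamma * (y - mean) / sqrt(var + eps) + beta, with y = w . x + b,
    which equals (w * s) . x + (b - mean) * s + beta, where s = gamma / sqrt(var + eps).
    """
    scale = gamma / math.sqrt(var + eps)
    fused_weights = [w * scale for w in weights]
    fused_bias = (bias - mean) * scale + beta
    return fused_weights, fused_bias


def conv_then_bn(x, weights, bias, gamma, beta, mean, var, eps=1e-5):
    # Reference path: one output position of a conv (a dot product), then BatchNorm.
    y = sum(w * xi for w, xi in zip(weights, x)) + bias
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fused(x, fused_weights, fused_bias):
    # Fused path: a single dot product with the folded parameters.
    return sum(w * xi for w, xi in zip(fused_weights, x)) + fused_bias
```

Because the folded layer computes the same function up to floating-point rounding, the fusion itself does not change accuracy; the difficulty lies in the tooling recognizing which layer patterns can be fused.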

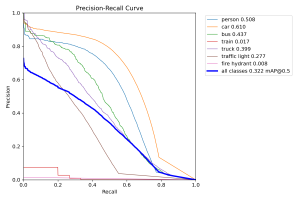

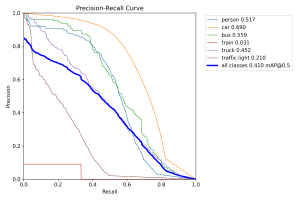

I did end up figuring it out, and ran some evaluations on the YOLOv12 model to see how performance changes with quantization:

Visually inspecting the prediction-labelled images, the PR curve, and the normalized confusion matrix, just like before, there generally doesn’t appear to be much difference in performance between the quantized model and the base, un-quantized model, which is great news. This implies that we can compress our models in this way without sacrificing performance, which was the primary concern.
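One intuition for why accuracy held up: assuming INT8 quantization, each value is mapped to one of 255 integer levels using a scale factor, so with a sensible scale the rounding error per value is at most half a quantization step. The sketch below shows symmetric per-tensor quantization in plain Python. TensorRT's INT8 mode also uses calibration data to choose activation scales, so this is a simplified illustration rather than its implementation, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer levels back to floats; the error per value is at most scale / 2."""
    return [qi * scale for qi in q]
```

Real models can still lose accuracy when a few outliers force a large scale, which is one reason calibration-based scale selection exists.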

Currently, I am about on schedule with regard to ML model development, but slightly behind on integrating and testing the navigation components.

This week, in tandem with the demos we are scheduled for, I plan on getting into the details of integration testing. I would also be interested in benchmarking any inference speedups from quantization, both in my development environment and on the Jetson itself in a formal testing setup.
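For the planned speedup benchmarks, a small timing harness that runs unchanged on both the development machine and the Jetson keeps the comparison consistent. This is a minimal sketch; the `benchmark` function is hypothetical, and the warm-up and iteration counts are arbitrary defaults.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 10, iters: int = 100) -> Dict[str, float]:
    """Time repeated calls to `fn` and report latency statistics in milliseconds.

    Warm-up calls are excluded because early inferences often include one-time
    costs (memory allocation, kernel selection) that do not reflect steady state.
    """
    for _ in range(warmup):
        fn()
    samples_ms = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    ordered = sorted(samples_ms)
    return {
        "mean_ms": statistics.mean(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "p95_ms": ordered[int(0.95 * (len(ordered) - 1))],  # approximate 95th percentile
        "max_ms": ordered[-1],
    }
```

To time a detector, `fn` would wrap one inference call on a fixed, pre-loaded input, for example `benchmark(lambda: model(frame))` with a hypothetical `model` and `frame`. If the framework launches GPU work asynchronously, `fn` must wait for that work to finish (e.g. `torch.cuda.synchronize()` in PyTorch); otherwise the measured times will be too low.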

Andrew Wang’s Status Report: 3/22/2025

This week, I spent some time implementing the crosswalk navigation submodule. One of the main difficulties was using pyttsx3 for real-time feedback. While it offers offline text-to-speech capabilities, fine-tuning parameters such as speech speed, volume, and clarity will require extensive experimentation to ensure that the audio cues are both immediate and comprehensible. Since we will be integrating all of the modules soon, I anticipate addressing this during integration. I also had to spend some time familiarizing myself with text-to-speech libraries in Python, since I had never worked in this space before.
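The speed and volume parameters mentioned above map onto pyttsx3 engine properties. A minimal sketch, assuming pyttsx3's `init()`, `setProperty("rate", ...)`, `setProperty("volume", ...)`, `say()`, and `runAndWait()` calls; the `SpeechSettings` values are placeholders to tune by experiment, and the helper names are hypothetical. Clarity has no single engine property, so it is not covered here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeechSettings:
    rate_wpm: int = 180   # pyttsx3 "rate" property, in words per minute (placeholder value)
    volume: float = 1.0   # pyttsx3 "volume" property, from 0.0 to 1.0


def configure_engine(engine, settings: SpeechSettings):
    """Apply settings to a pyttsx3-style engine (anything with setProperty)."""
    if not 0.0 <= settings.volume <= 1.0:
        raise ValueError("volume must be between 0.0 and 1.0")
    engine.setProperty("rate", settings.rate_wpm)
    engine.setProperty("volume", settings.volume)
    return engine


def speak(engine, text: str) -> None:
    """Queue one utterance and block until it has been spoken."""
    engine.say(text)
    engine.runAndWait()


def make_engine(settings: Optional[SpeechSettings] = None):
    # Imported lazily so the helpers above can be exercised without audio hardware.
    import pyttsx3
    return configure_engine(pyttsx3.init(), settings or SpeechSettings())
```

`runAndWait()` blocks until queued speech finishes, which matters for a real-time loop: a long prompt delays the next one, so short phrasings help keep feedback immediate.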

I also anticipate there being some effort required to optimize the speech generation, as the feedback for this particular submodule needs to be especially quick. Once again, we will address this as appropriate if it becomes a problem during integration and testing.

Currently, I am about on schedule as I have a preliminary version of the pipeline ready to go. Hopefully, we will be able to begin the integration process soon, so that we may have a functional product ready for our demo coming up in a few weeks.

This week, I will probably focus on further optimizing text-to-speech response time, refining the heading correction logic to accommodate natural walking patterns, and conducting real-world testing to validate performance under various conditions.
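For the heading correction, one way to tolerate natural walking sway is to smooth the heading error and only prompt when the smoothed error leaves a dead band. This is a sketch under stated assumptions: headings are compass degrees increasing clockwise, `HeadingCorrector` and `angle_diff` are hypothetical names, and the smoothing factor and dead-band width are illustrative, not tuned values.

```python
from typing import Optional


def angle_diff(target_deg: float, current_deg: float) -> float:
    """Signed smallest difference (target - current), wrapped to [-180, 180)."""
    return (target_deg - current_deg + 180.0) % 360.0 - 180.0


class HeadingCorrector:
    """Smooth heading error and only prompt when the drift is sustained.

    Assumptions (hypothetical, untuned): an exponential moving average with
    factor `alpha` absorbs step-to-step sway, and no prompt is issued while the
    smoothed error stays inside a +/- `deadband_deg` window.
    """

    def __init__(self, target_deg: float, alpha: float = 0.2, deadband_deg: float = 10.0):
        self.target_deg = target_deg
        self.alpha = alpha
        self.deadband_deg = deadband_deg
        self.smoothed_error = 0.0

    def update(self, heading_deg: float) -> Optional[str]:
        error = angle_diff(self.target_deg, heading_deg)
        self.smoothed_error += self.alpha * (error - self.smoothed_error)
        if self.smoothed_error > self.deadband_deg:
            return "Turn right"  # target lies clockwise of the current heading
        if self.smoothed_error < -self.deadband_deg:
            return "Turn left"
        return None
```

With a target of 0° and `alpha=0.2`, a single reading of 30° only moves the smoothed error to about -6°, which stays inside a 10° dead band; a second reading of 30° pushes it to about -10.8° and triggers "Turn left". A larger `alpha` reacts faster but passes more sway through.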

Andrew Wang’s Status Report: 3/15/2025

Unfortunately, I wasn’t able to make much progress on this end. So far, I have tried switching the label mapping of the BDD100K dataset, fine-tuning different versions of the YOLOv8 models, and slightly tuning the hyperparameters, but every attempt had exactly the same outcome: the model performed extremely poorly on held-out data. I am still very lost as to why this is happening, and have had little luck figuring it out.
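Since the label mapping was one of the things tried, here is a sketch of converting a BDD100K-style box into the YOLO label format, which expects one `class x_center y_center width height` line per object, normalized to [0, 1]. The category-to-class mapping, the `box2d` field names, and the 1280x720 default image size are assumptions to check against the dataset version in use; `to_yolo_line` is a hypothetical helper.

```python
from typing import Dict, Optional

# Hypothetical mapping from BDD100K category names to COCO class ids used by the
# pretrained YOLO models (0 person, 1 bicycle, 2 car, 3 motorcycle, 5 bus,
# 6 train, 7 truck, 9 traffic light). Adjust to the label set actually in use.
BDD_TO_COCO: Dict[str, int] = {
    "pedestrian": 0, "rider": 0, "bicycle": 1, "car": 2, "motorcycle": 3,
    "bus": 5, "train": 6, "truck": 7, "traffic light": 9,
}


def to_yolo_line(category: str, box2d: Dict[str, float],
                 img_w: int = 1280, img_h: int = 720) -> Optional[str]:
    """Convert one BDD100K-style box (x1, y1, x2, y2 in pixels) to a YOLO label line.

    Returns None for categories without a mapping (e.g. "traffic sign").
    """
    cls = BDD_TO_COCO.get(category)
    if cls is None:
        return None
    x1, y1, x2, y2 = box2d["x1"], box2d["y1"], box2d["x2"], box2d["y2"]
    xc = (x1 + x2) / 2 / img_w   # normalized box center
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w        # normalized box size
    h = (y2 - y1) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

Label files written this way also need a dataset config whose class list matches the ids used; ruling out a mismatch between the two is a reasonable early step when a fine-tuned model predicts mostly background.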

However, since we remain interested in having a small suite of object detection models to test, I decided to find some more model variants to evaluate while the fine-tuning issue is being solved. Specifically, I decided to evaluate YOLOv12 and Baidu’s RT-DETR models, both of which are compatible with my current pipeline for pedestrian object detection. The YOLOv12 model architecture introduces an attention mechanism to process large receptive fields more effectively, and the RT-DETR model takes inspiration from a Vision Transformer architecture to efficiently process multiscale features in the input.

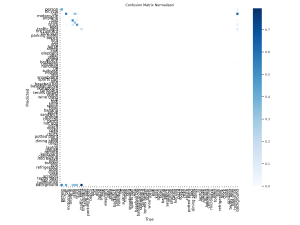

It appears that despite being more advanced/larger models, these models actually don’t do much better than the original YOLOv8 models I was working with. Here are the prediction confusion matrices for the YOLOv12 and RT-DETR models, respectively:

This suggests that these object detection models may be hitting the limit of performance on this particular out-of-distribution dataset, and that they might perform similarly to one another in real-world testing as well.

Currently, I am a bit behind schedule, as I was unable to fix the fine-tuning issues and consequently was not able to make much progress on integrating with the navigation submodules.

For this week, I will temporarily shelve the fine-tuning implementation debugging in favor of implementing the transitions between the object detection and navigation submodules. Specifically, I plan on beginning to handle the miscellaneous code that will be required to pass control between our modules.
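For passing control between modules, a small table-driven state machine is one option. Everything in this sketch is hypothetical: the two modes, the event names, and the `Controller` class are illustrative placeholders, not our current code.

```python
from enum import Enum, auto


class Mode(Enum):
    OBSTACLE_DETECTION = auto()    # general walking with object-detection feedback
    CROSSWALK_NAVIGATION = auto()  # heading-guided crossing


# Hypothetical transition table: (current mode, event) -> next mode.
TRANSITIONS = {
    (Mode.OBSTACLE_DETECTION, "crosswalk_detected"): Mode.CROSSWALK_NAVIGATION,
    (Mode.CROSSWALK_NAVIGATION, "crossing_complete"): Mode.OBSTACLE_DETECTION,
    (Mode.CROSSWALK_NAVIGATION, "crossing_aborted"): Mode.OBSTACLE_DETECTION,
}


class Controller:
    def __init__(self) -> None:
        self.mode = Mode.OBSTACLE_DETECTION

    def handle(self, event: str) -> Mode:
        """Apply an event; unknown (mode, event) pairs leave the mode unchanged."""
        self.mode = TRANSITIONS.get((self.mode, event), self.mode)
        return self.mode
```

Keeping every transition in one table means each module only reports events, and an event that makes no sense in the current mode is ignored rather than causing an unexpected hand-off.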

Andrew Wang’s Status Report: 3/8/2025

This week, I worked on fine-tuning the pretrained YOLOv8 models for better performance. Previously, the models worked reasonably well out of the box on an out-of-distribution dataset, so I was interested in fine-tuning them on this dataset to improve the robustness of the detection model.

Unfortunately, so far the fine-tuning does not appear to help much. My first few attempts at training the model on the new dataset resulted in the model not detecting any objects, and marking everything as a “background”. See below for the latest confusion matrix:

I’m personally a little confused as to why this is happening. I did verify that the out-of-the-box model’s metrics that I generated for my last status report are reproducible, so I suspect that there might be a small issue with how I am retraining the model, which I am currently looking into.
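While looking into the retraining issue, one cheap check is to scan the training label files for malformed lines, out-of-range class ids, or pixel (rather than normalized) coordinates, since any of these could interfere with training. A minimal sketch, assuming YOLO-format `.txt` labels; `check_yolo_label_lines` is a hypothetical helper, and passing this check does not rule out other causes.

```python
from typing import List


def check_yolo_label_lines(lines: List[str], num_classes: int) -> List[str]:
    """Return human-readable problems found in the lines of one YOLO label file."""
    problems = []
    for i, line in enumerate(lines, start=1):
        parts = line.split()
        if not parts:
            continue  # blank lines are harmless
        if len(parts) != 5:
            problems.append(f"line {i}: expected 5 fields, got {len(parts)}")
            continue
        try:
            cls = int(parts[0])
            coords = [float(p) for p in parts[1:]]
        except ValueError:
            problems.append(f"line {i}: non-numeric field")
            continue
        if not 0 <= cls < num_classes:
            problems.append(f"line {i}: class id {cls} outside [0, {num_classes})")
        if any(not 0.0 <= c <= 1.0 for c in coords):
            problems.append(f"line {i}: coordinates not normalized to [0, 1]")
    return problems
```

Running this over every label file and counting the problems gives a quick signal of whether the labels are the culprit before digging into training settings.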

Due to this unexpected issue, I am currently a bit behind schedule, as I had anticipated finishing the fine-tuning by this point. However, I expect to be back on track this week after resolving this issue, as my remaining action items are simply to integrate the model outputs with the rest of the components, which can be done regardless of whether the new models are ready. Additionally, I have implemented most of the necessary pipelines for our model evaluation and training, and am slightly ahead of schedule in that regard relative to our Gantt chart.

This week, I hope to begin coordinating efforts to integrate the object detection models’ output with the navigation modules in the hardware, as well as resolve the current issues with the model fine-tuning. Specifically, I plan on beginning to handle the miscellaneous code that will be required to pass control between our modules.

Andrew Wang’s Status Report – 02/22/2025

This week, I was able to begin the evaluation and fine-tuning of a few out-of-the-box YOLO object detection models. More specifically, I used YOLOv8x, a large, high-performance model trained on the COCO dataset.

For evaluation, we were advised to be wary of the robustness of the object detection models with regard to their performance on out-of-distribution data, as previous teams have run into difficulty when trying to use the models in a real-world setting. Since the validation metrics of the model on the COCO dataset are already available online, I decided to use the validation set of the BDD100k dataset to measure the performance decay on an out-of-distribution dataset, as a proxy for performance in a real-world setting.

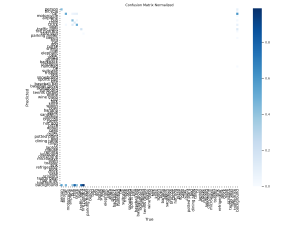

So far, it appears that the out-of-the-box model does reasonably well on the new, out-of-distribution dataset. I first generated a confusion matrix to examine how well the model does on each class. Note that our evaluation dataset only contains the first 10 labels of the YOLO model, so only the top-left square of the matrix should be considered in our evaluation:

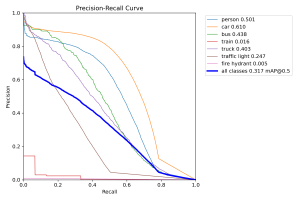

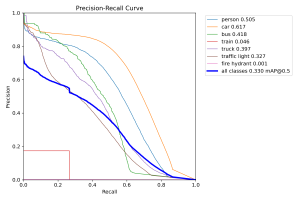
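Restricting the evaluation to the classes present in the dataset amounts to slicing the confusion matrix. A minimal sketch in plain Python, assuming the class order matches the model's label order; which axis holds true versus predicted labels depends on the tool that produced the matrix, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def top_left(matrix: Matrix, k: int) -> Matrix:
    """Keep only the first k rows and columns (the classes present in the evaluation set)."""
    return [row[:k] for row in matrix[:k]]


def normalize_rows(matrix: Matrix) -> Matrix:
    """Divide each row by its sum so entries become fractions; all-zero rows stay zero."""
    out = []
    for row in matrix:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out
```

The order of operations matters: normalizing after slicing gives fractions among the first k classes only, while normalizing the full matrix and then slicing keeps confusions with the background class in the denominator.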

It appears that the model mistakenly assigns a “background” label to some images that should have been classified as another item on the road, which is especially troublesome for our use case. Besides this, the accuracy appears somewhat reasonable, with some notable off-target predictions. I also generated precision-recall curves across the different classes:

It appears that the model struggles most with identifying traffic lights and trains. However, in our use case of crossing the road, these two objects are definitely less important to detect in comparison to the other categories, so I’m not personally too worried about this. As a whole, the mAP metrics across the other labels seem reasonable compared to the reported mAP metrics of the same models on the COCO dataset. Considering that these models weren’t trained on this new BDD100k dataset, I’m cautiously optimistic that they could perform well in our testing as is, even without extensive fine-tuning.
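For reference, mAP is the mean over classes of average precision (AP), the area under each class's precision-recall curve. The sketch below computes all-point interpolated AP in the PASCAL VOC style. COCO-style tooling uses 101-point interpolation, and mAP50-95 additionally averages over IoU thresholds from 0.50 to 0.95, so values from this sketch will not exactly match reported numbers. The function names are hypothetical.

```python
from typing import List


def average_precision(recall: List[float], precision: List[float]) -> float:
    """All-point interpolated AP (area under the precision envelope), VOC-style.

    `recall` must be sorted in increasing order, with `precision` aligned to it,
    as produced by sweeping the confidence threshold from high to low.
    """
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision monotonically non-increasing from right to left (the "envelope").
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])


def mean_ap(per_class_ap: List[float]) -> float:
    """Average the per-class AP values into a single mAP."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, a class reaching precision 1.0 at recall 0.5 and precision 0.5 at recall 1.0 gets an AP of 0.75.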

Finally, I generated a few example images with the model predictions overlaid to visually depict what the model is doing. Here is an example:

The top picture shows the images with the reference labels, and the bottom picture shows the same images with our model’s predictions overlaid. In the top row, the second image from the left stood out to me, since our model detected trains where there weren’t any. This might be an interesting starting point for investigating why our model does so poorly at detecting trains, although since we have established that trains aren’t as important in our use case, we might not need a detailed analysis if time is tight.

With regard to progress, I believe that I am about on track as per our Gantt chart; I have completed a preliminary evaluation of the object detection models, and I have also started implementing a fine-tuning pipeline so that we can fine-tune the out-of-the-box models we are currently using on additional datasets.

Next week, I plan on moving into the second part of my deliverables: writing a pipeline to handle the outputs from our model with regard to navigation. I plan on brainstorming how to make proper use of the detection model’s outputs, as well as how they should be integrated into the larger navigation module that we have planned. I also plan on gathering more datasets so that I can use the fine-tuning pipeline I have already implemented to develop even better object detection models, giving us a wider array of options when we are ready to integrate our project.

Andrew Wang’s Status Report for 2/15/2025

This week, I gained access to a new computing cluster with more storage and GPU availability late in the week. As such, I began downloading an open-source object detection dataset, BDD100K, from Kaggle onto the cluster for evaluation and fine-tuning. After all of the images were downloaded (the version I downloaded has 120,000+ images), I started working on the implementation of the evaluation/fine-tuning pipeline, although this is still a work in progress.

With regard to schedule, I believe that I am slightly behind. Due to some issues with gaining access to the cluster and the download time required to fetch a large dataset, I was unable to work on this until the latter half of the week, which I had not anticipated. I would have liked to have finished the evaluation/fine-tuning implementation by now, so I anticipate having to put in a bit of extra work this week to catch up and have a few different versions of the model ready to export to our Jetson Nano.

By the end of this week, I hope to have completed the evaluation/fine-tuning pipelines. More specifically, I would like to have concrete results from evaluating a few out-of-the-box YOLO models on accuracy and other metrics, and, ideally, to have fine-tuned a few models for evaluation.

Andrew Wang’s Status Report for 2/8/2025

This week, I began looking into different object detection algorithms that we can use in the first iteration of our implementation. Specifically, I installed a pretrained YOLOv8 model from the YOLO package “ultralytics”, and got it working on a CMU computing cluster. Since a rigorous evaluation and fine-tuning of the models will be necessary for integration, I’m planning on beginning to implement a fine-tuning and evaluation pipeline in the next few days to measure model performance on unseen data, such as generic street-image datasets like BDD100K, EuroCity Persons, and Mapillary Vistas. Unfortunately, these datasets are far too large to store on the clusters I currently have access to, so I am working on obtaining access to alternative computing resources, which should be approved in the next few days.

With regard to progress, I believe that I am about on schedule. Based on our Gantt chart, we have specifically set aside this upcoming week and the next to evaluate and handle the ML side of our project, and I am optimistic that we will get this done in the next two weeks, as the models themselves can be fine-tuned to whatever degree our constraints allow.

By the end of next week, I hope to have completed the download of the image datasets, as well as finished a preliminary evaluation of the YOLOv8 model. We may also consider using different object detection models, although we will likely consider this more seriously once we have the first results from our YOLOv8 model.