Items done this week:

- Sewed all the pockets for the parts on the hat, finalized the layout of all the components, and soldered the ESP32 to the IMU so they can be inserted into the hat without terrible wires sticking out.

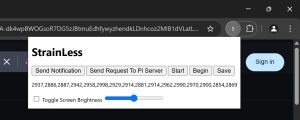

- Wrote/tested recalibration code using server<->Pi communication, and synchronized this with requests from the browser extension. I decided to just handle all the recalibration on the Pi since I realized the process doesn’t have to be that complicated to work properly.

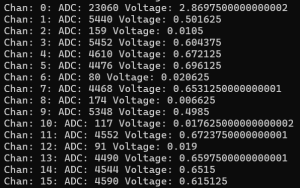

- Implemented simple low pass filtering (just a moving average) to kill some of the bumpiness in the angle data. Still using the Kalman filtering/sensor fusion algorithm from the interim demo. Seems to work well enough (main thing is it doesn’t drift up/down at rest) but I need to test with a camera instead of just eyeballing to see how accurate the “real-time” angles are.

- Tweaked code for sending alerts to avoid over-alerting the user.

- Tested and debugged wireless setup for hat system.

- Roughly tested with a person wearing the hat.
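
For reference, a moving-average low pass filter of the kind mentioned above can be sketched as follows. This is only an illustration and not the code running on the hat: the class name `MovingAverage`, the window size of 5, and the sample values are all assumptions.

```python
from collections import deque


class MovingAverage:
    """Simple low pass filter: mean of the last `window_size` samples."""

    def __init__(self, window_size=5):  # window size is an assumed value
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        # deque with maxlen drops the oldest sample automatically
        self._samples = deque(maxlen=window_size)

    def update(self, angle_deg):
        """Add a new angle reading and return the smoothed angle."""
        self._samples.append(angle_deg)
        return sum(self._samples) / len(self._samples)


if __name__ == "__main__":
    smoother = MovingAverage(window_size=3)
    for raw in [90.0, 93.0, 87.0, 90.0]:  # made-up readings in degrees
        print(raw, smoother.update(raw))
```

A larger window smooths more but makes the reported angle lag further behind real movement, so the window size is a tradeoff against how "real-time" the output needs to be.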

Progress

Now that everything is attached to the hat and the wireless mode is fixed, I’m ready to start verifying the angle calculations, so I’m not too behind schedule. One unfortunate thing is that the JST connectors I ordered turned out to be the wrong size, so I will have to do some more soldering on Monday to extend the connector on the battery. This is not much of a roadblock for testing, since there’s a convenient little flap on the hat that the battery can be tucked into, close to the ESP32, and the soldering job will be quick anyway. Overall I don’t think there will be an issue with finishing the final tweaks + testing of this subsystem in time for the final demo.

Deliverables for next week

- Make components more secure on the hat + solder extension wires to battery connectors

- Test calculated angles with a camera

- Configure a demo mode and a “real” mode.

Verification

- User testing with the hat: have someone wear the hat and do some work at a computer while I record from a side view (~10 min), with ~90 degrees (upright) calibrated as the starting position and a screen printing the current angles visible in the frame. Then take a sample of ~20 frames from the video and use an image analysis tool (e.g. Kinovea) to get a ground truth angle to compare the “real-time” data with. The test “passes” if the ground truth and calculated angles are within 5 degrees of each other in all frames. I also want to repeat this test on a user working for an hour, and take an even sampling of 20 frames across this hour to see if the accuracy gets worse over time.
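
The sampling and pass/fail check described above could be scripted roughly like this. This is a sketch under assumptions, not an existing part of the project: the function names are hypothetical, the frame count in the example assumes a 30 fps recording, and "within 5 degrees" is treated as inclusive.

```python
def sample_frame_indices(total_frames, n_samples=20):
    """Return n_samples frame indices spread evenly across the video."""
    if n_samples < 1 or total_frames < n_samples:
        raise ValueError("need at least n_samples frames")
    step = total_frames / n_samples
    # take the middle frame of each of the n_samples equal segments
    return [int(i * step + step / 2) for i in range(n_samples)]


def angles_within_tolerance(ground_truth, measured, tolerance_deg=5.0):
    """Return (passed, worst_error_deg) for paired angle lists in degrees."""
    if not ground_truth or len(ground_truth) != len(measured):
        raise ValueError("need two non-empty lists of equal length")
    errors = [abs(g - m) for g, m in zip(ground_truth, measured)]
    worst = max(errors)
    # assumption: an error of exactly tolerance_deg still counts as a pass
    return worst <= tolerance_deg, worst


if __name__ == "__main__":
    # ~10 min of video at an assumed 30 fps
    frames = sample_frame_indices(total_frames=10 * 60 * 30)
    print(frames[:3], len(frames))
    # made-up angles: ground truth from the video vs. angles printed on screen
    print(angles_within_tolerance([90.0, 85.0], [92.0, 80.0]))
```

Reporting the worst-case error alongside the pass/fail result makes it easier to compare the 10 minute run against the hour-long run.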

Video of initial testing (this is still with the jumper wires; I forgot to re-record with the soldered version): https://drive.google.com/file/d/1_j-dwfMfuiTcbsXzWj6ul1kb8jZTK9rN/view?usp=sharing

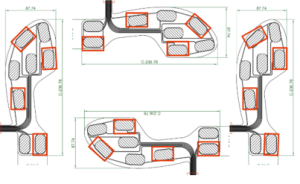

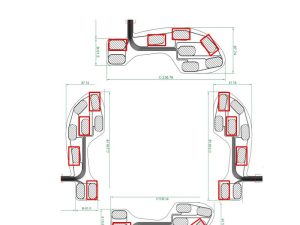

Here’s the layout of parts on the hat, from the middle of my adventures in sewing earlier this week: