Risks:

Accuracy is lower than we anticipated, but there are no major risks!

Changes:

We changed our algorithm for downward stair detection.

Unit Tests and Overall System:

Each test is listed below, followed by our findings from it:

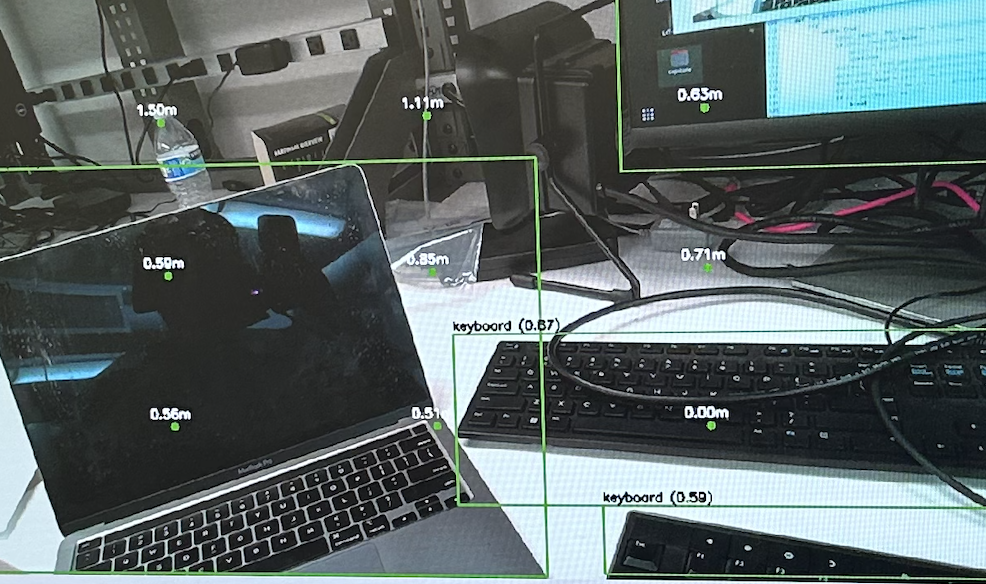

Object Detection –> Pretty solid all around. We tightened the range in which we accept objects, so only objects at a shorter distance are detected.
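The tightened range can be pictured as a distance cutoff applied to each detection. Below is a minimal sketch, assuming each detection carries a label and a distance in meters. The `Detection` type, the `filter_by_range` helper, and the `MAX_RANGE_M` value are hypothetical names and numbers chosen for illustration, not the project's actual code or threshold.

```python
from dataclasses import dataclass

# Assumed cutoff for illustration only; the real threshold is not stated in this report.
MAX_RANGE_M = 2.0


@dataclass
class Detection:
    label: str          # class name reported by the detector
    distance_m: float   # estimated distance to the object, in meters


def filter_by_range(detections, max_range_m=MAX_RANGE_M):
    """Keep only detections whose distance is positive and within max_range_m.

    Treating a distance of 0 as invalid is an assumption made here,
    not something the report specifies for object detection.
    """
    return [d for d in detections if 0.0 < d.distance_m <= max_range_m]
```

Lowering `max_range_m` trades early warning for fewer alerts about objects that are too far away to matter.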

Steps –> With data augmentation, our model is very accurate in dim lighting and at unusual angles.

Wall Test –> Mostly accurate. The only inaccuracy is on slanted walls where the closer wall falls in a distance hole (a distance reading of 0).
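A distance hole reports 0, which can be mistaken for a real measurement. One possible guard, sketched here on the assumption that readings arrive as a plain list of distances in meters, is to drop zeros before taking the nearest distance and to report how much of the data was missing. `summarize_depth` is a hypothetical helper, not the method we currently use.

```python
def summarize_depth(readings):
    """Return (nearest_valid_distance, hole_fraction) for a list of readings.

    A reading of 0 is treated as a hole (no data) rather than a real distance.
    nearest_valid_distance is None when every reading is a hole.
    """
    valid = [r for r in readings if r > 0]
    hole_fraction = 1 - len(valid) / len(readings) if readings else 1.0
    nearest = min(valid) if valid else None
    return nearest, hole_fraction
```

This does not recover the missing data on a slanted wall. It only keeps a 0 from being read as a distance, and a high `hole_fraction` lets the caller flag the result as low confidence.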

FSR –> Mostly accurate; only inaccurate on carpets.

Haptics –> Completely accurate.

Integration –> Mostly accurate; only inaccurate when there is a person on the stairs.