What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

One major risk is the unreliability of gesture recognition, as OpenPose output is noisy and lacks temporal consistency from frame to frame. To address this, the team pivoted to a location-based input model, where users interact with virtual buttons by holding their hands in place. This approach is more reliable and gives users clearer feedback; if needed, it can be refined further with additional smoothing filters.
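As a rough illustration of the hold-to-press idea, the sketch below fires a virtual button once a smoothed hand position has stayed inside it for a set time. Every name and value here (`DwellButton`, `EmaSmoother`, the 1 s hold time, the smoothing factor) is a hypothetical placeholder rather than the project's actual code, and the exponential moving average stands in for whatever smoothing filter the team ends up using.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class DwellButton:
    """Axis-aligned virtual button that fires after the hand dwells inside it."""
    x: float
    y: float
    width: float
    height: float
    dwell_s: float = 1.0  # assumed hold time, not a project value
    _entered_at: Optional[float] = field(default=None, init=False, repr=False)

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)

    def update(self, px: float, py: float, now: float) -> bool:
        """Return True once the point has stayed inside for dwell_s seconds."""
        if not self.contains(px, py):
            self._entered_at = None  # leaving the button resets the timer
            return False
        if self._entered_at is None:
            self._entered_at = now   # first frame inside the button
            return False
        if now - self._entered_at >= self.dwell_s:
            self._entered_at = None  # re-arm so it does not fire every frame
            return True
        return False


class EmaSmoother:
    """Exponential moving average over a 2D keypoint to damp jitter."""

    def __init__(self, alpha: float = 0.4) -> None:
        self.alpha = alpha  # assumed smoothing factor; higher follows input faster
        self._state: Optional[Tuple[float, float]] = None

    def update(self, px: float, py: float) -> Tuple[float, float]:
        if self._state is None:
            self._state = (px, py)
        else:
            sx, sy = self._state
            self._state = (sx + self.alpha * (px - sx),
                           sy + self.alpha * (py - sy))
        return self._state
```

In use, each frame's wrist keypoint would pass through `EmaSmoother.update` and the smoothed position would then be fed to `DwellButton.update` together with the frame timestamp.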

System integration is also behind schedule because some subsystems are incomplete. Slack time allows for some adjustment, but delays in dependent components remain a risk. To mitigate this, the team is refining individual modules and may use mock data so that dependent modules can be developed in parallel if necessary.
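One way mock data could stand in for an unfinished tracking subsystem is a deterministic generator of synthetic hand positions, sketched below. The function name, frame rate, and circular path are all assumptions chosen for illustration; the real subsystem's output format may differ.

```python
import math
from typing import Iterator, Tuple


def mock_wrist_positions(
    frames: int,
    fps: float = 30.0,                           # assumed camera frame rate
    center: Tuple[float, float] = (320.0, 240.0),  # assumed image center in pixels
    radius: float = 100.0,
) -> Iterator[Tuple[float, float, float]]:
    """Yield (timestamp_s, x, y) for a wrist moving on a circle.

    The path is deterministic, so downstream modules can be tested
    repeatably before the real tracker is available.
    """
    for i in range(frames):
        t = i / fps
        angle = 2.0 * math.pi * t  # one revolution per second
        yield (t,
               center[0] + radius * math.cos(angle),
               center[1] + radius * math.sin(angle))
```

Because the generator needs no camera or GPU, input handling and UI logic could be exercised against it while the tracking module is still being refined.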

Finally, GPU performance issues could affect real-time AR overlays. Ongoing shader optimizations prioritize stability and responsiveness, with fallback rendering techniques as a contingency if improvements are insufficient.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Gesture-based input has been replaced with a location-based system due to unreliable pose recognition. While this requires UI redesign and new logic for button-based interactions, it improves usability and consistency. The team is expediting this transition to ensure thorough testing before integration.

Another key change is a focus on GPU optimization after identifying shader inefficiencies. This delays secondary features like dynamic resolution scaling but ensures smooth AR performance. Efforts will continue to balance visual quality and efficiency.

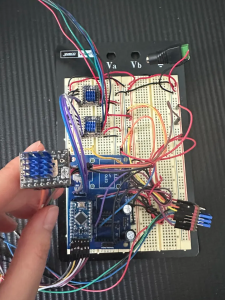

The PCB did not exactly match the electrical components, in particular the stepper motor driver. We had ordered a different kind of stepper motor suited to our needs (running for long periods of time), but it required an alternative design, so we made new wire connections to be able to use it.

Provide an updated schedule if changes have occurred.

This week, the team is refining motion tracking, improving GPU performance, and finalizing the new input system. Next week, the focus will shift to full system integration, finalizing input event handling, and testing eye-tracking once the camera rig is ready. We will begin integrating the camera rig that is already built while the second one is under construction. Although integration is slightly behind, a clear plan is in place to stay on track.

In particular, how will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

We will use logs to check whether the latency between gesture recognition and camera movement exceeds 200 ms and whether generating a face model takes more than 1 s.
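The log check could look something like the sketch below. It assumes a hypothetical log format of one event per line, `<timestamp_s> <event_name> <event_id>`, with matching IDs pairing a start event with its end event; the event names and format are placeholders, not the project's actual logging scheme.

```python
from typing import Dict, Iterable, List


def latencies_ms(lines: Iterable[str], start_event: str, end_event: str) -> List[float]:
    """Pair start/end events by ID and return each latency in milliseconds."""
    starts: Dict[str, float] = {}
    result: List[float] = []
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip lines that do not fit the assumed format
        timestamp, event, event_id = float(parts[0]), parts[1], parts[2]
        if event == start_event:
            starts[event_id] = timestamp
        elif event == end_event and event_id in starts:
            result.append((timestamp - starts.pop(event_id)) * 1000.0)
    return result


def violations(values_ms: Iterable[float], budget_ms: float) -> List[float]:
    """Return the latencies that exceed the given budget."""
    return [v for v in values_ms if v > budget_ms]


if __name__ == "__main__":
    # Hypothetical sample log, for illustration only.
    sample = [
        "0.000 gesture 1",
        "0.150 camera_move 1",
        "1.000 gesture 2",
        "1.250 camera_move 2",
    ]
    measured = latencies_ms(sample, "gesture", "camera_move")
    print(len(violations(measured, 200.0)), "of", len(measured), "samples over budget")
```

The same two functions would cover the face model requirement by pairing a different pair of event names and passing a 1000 ms budget.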