- We still have to finish polishing the web application and the facial recognition part of the project. The basic functionality of the web app (showing a camera feed and running the facial recognition system) is working well. We still have to finalize features such as displaying the check-in and check-out logs, along with manual checkout (in case the system cannot detect a checked-in user). The other risk is facial recognition. In our testing over the last 2 days, it was highly effective at distinguishing between faces in diverse populations, but when the testing set contains many very similar faces, the facial recognition system fails to differentiate them. Even so, the new facial recognition library we are using is much better than the old one. We will try our best to iron out these issues before the final deliverables.

- The only change we made this week is switching to a new facial recognition library, which drastically improves the accuracy and performance of the facial recognition part of the project. This change was necessary because the old facial recognition code was not accurate enough to meet our metrics. This change did not incur any costs, except perhaps time.

- There is no change to our schedule at this time (and there can’t really be because it is the last week).

UNIT TESTING:

Item Stand Integrity Tests:

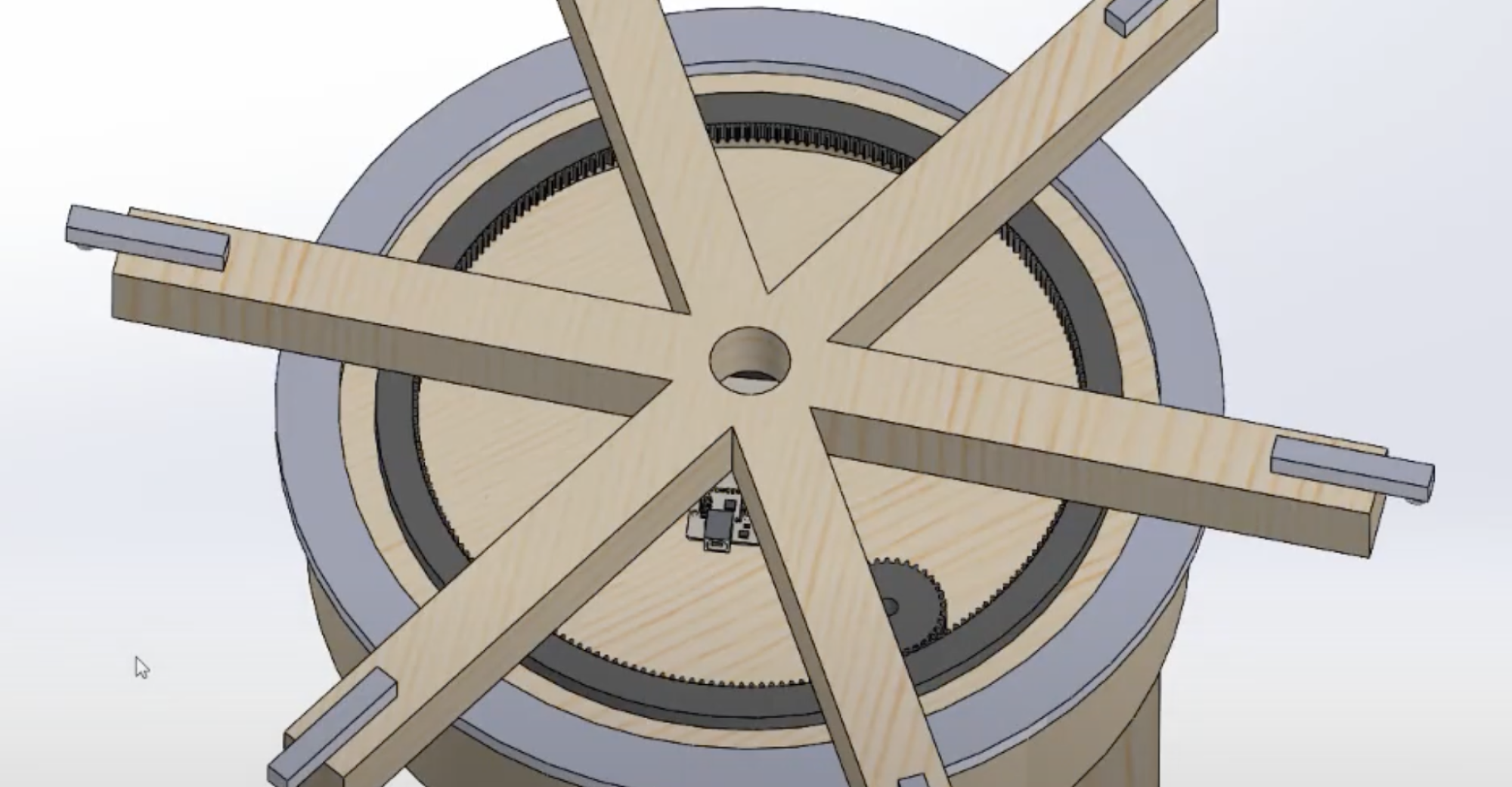

- Hook Robustness: Placing 20 pounds on each of the 6 hooks at a time and making one full rotation

- Rack Imbalance: Placing 60 pounds on 3 hooks on one side of the rack and making a full rotation

- Max Weight: Gradually placing more weight on a rotating rack until the max weight of 120 pounds is reached

- RESULTS: From these tests, we determined that our item stand was robust enough for our use cases. Although some of the wood and electronic components did flex (as expected), the stand held up well. The hooks were able to handle repeated deposit and removal of items, the rack did not tip over from imbalance, and even with the max weight of 120 pounds, the rack rotated continuously.

Integration Tests:

- Item Placement/Removal: Placing and removing items from load cells and measuring the time it takes for the web app to receive information about it.

- User Position Finding: Checking users in and out and measuring the time it takes for the rack to rotate to a certain position.

- RESULTS: We found that the placement and removal of items on the item stand propagated through the system very quickly, in less time than our design requirements allow, so no design changes were needed there. On the other hand, when we tested the ability of the rack to provide users with a new position or their check-in position, the time it took went well above our design requirement of 1 second. We had not accounted for the significant time it takes for the motor to rotate safely to the target location, and thus adjusted our design requirement to 7 seconds in the final presentation.
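A minimal sketch of how a timing check like the ones above could be scripted, assuming the action under test can be wrapped in a Python callable. The names `measure_latency`, `meets_requirement`, and `fake_rotation` are hypothetical and are not part of our codebase; the stand-in action simply sleeps instead of driving the real rack.

```python
import time
from statistics import mean


def measure_latency(action, trials=5):
    """Run `action` several times and return each trial's duration in seconds."""
    durations = []
    for _ in range(trials):
        start = time.perf_counter()
        action()
        durations.append(time.perf_counter() - start)
    return durations


def meets_requirement(durations, limit_s):
    """Return True if even the slowest trial finished within the limit."""
    return max(durations) <= limit_s


def fake_rotation():
    # Hypothetical stand-in for "check in a user and wait for the rack to stop".
    time.sleep(0.01)


durations = measure_latency(fake_rotation, trials=3)
print(f"mean {mean(durations):.3f}s, worst {max(durations):.3f}s")
print("within 7 s requirement:", meets_requirement(durations, limit_s=7.0))
```

Checking the worst trial rather than the mean is a deliberate choice here, since a design requirement like 7 seconds is a limit a user should never see exceeded.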

Facial Recognition Tests:

- Distance Test: Stand at various distances away from the camera, and see if facial recognition starts recognizing faces.

- Face Recognition Time Test: Once close enough to the camera, measure the time it takes for the face to be classified as new or already in the system.

- Accuracy Test: Check in and check out various faces, while measuring whether the system accurately maps users to their stored faces.

- RESULTS: Spoiler: we switched to a new facial recognition library, which was a big improvement over the old one. Our old algorithm with the SVM classifier was adequate at recognizing people at the required distance of 0.5 meters and within the required time of 5 seconds. Accuracy, though, took a hit. On very diverse facial datasets, our old model hit 95% accuracy during pure software testing. While this is good on paper, our integration tests involving this system found that in real life it was wrong a high percentage of the time, sometimes up to 20%. With this data from our testing, we decided to switch to a new model using the facial_recognition Python library, which reportedly has a 99% accuracy rate. We also recently conducted extensive testing and found that its accuracy rate was well above 90-95% on diverse facial data. It still has some issues when everyone checked into the system looks very similar, but we believe this might be unavoidable and thus want to build in some extra safeguards, such as manual checkout in our web application (still a work in progress).
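One way the "similar faces" safeguard could work is sketched below. It is an illustration under stated assumptions and not our actual implementation: it assumes each checked-in user is stored as a numeric face embedding, that a smaller Euclidean distance means a closer match, and that the thresholds `max_distance` and `min_margin` are illustrative values that would need tuning. The function `match_user` and the sample data are hypothetical. The idea is to fall back to manual checkout whenever the best and second-best candidates are too close to tell apart.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_user(probe, enrolled, max_distance=0.6, min_margin=0.1):
    """Return (user_id, status), where status is 'match', 'manual', or 'unknown'.

    'manual' means the two closest users are nearly equidistant from the probe,
    so the decision is deferred to a manual checkout instead of guessing.
    """
    if not enrolled:
        return None, "unknown"
    ranked = sorted((euclidean(probe, emb), uid) for uid, emb in enrolled.items())
    best_dist, best_id = ranked[0]
    if best_dist > max_distance:
        return None, "unknown"  # nobody checked in is close enough
    if len(ranked) > 1 and ranked[1][0] - best_dist < min_margin:
        return None, "manual"  # ambiguous: two users look too similar
    return best_id, "match"


# Toy 2-D embeddings (hypothetical); real face embeddings have many more dimensions.
enrolled = {"alice": [0.0, 0.0], "bob": [1.0, 0.0], "bob_twin": [1.0, 0.05]}
print(match_user([0.05, 0.0], enrolled))  # clearly closest to alice
print(match_user([1.0, 0.02], enrolled))  # bob and bob_twin are nearly tied
print(match_user([5.0, 5.0], enrolled))   # far from everyone
```

With a margin check like this, a wrong automatic checkout becomes a manual one, which costs the user a few seconds but avoids handing over someone else's items.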