THIS WEEK

- This week, the NEMA 34 stepper motor arrived, along with a much larger motor driver and a 6 A AC-to-DC power adapter. The team and I tested these components and determined that the motor provides enough torque for our application.

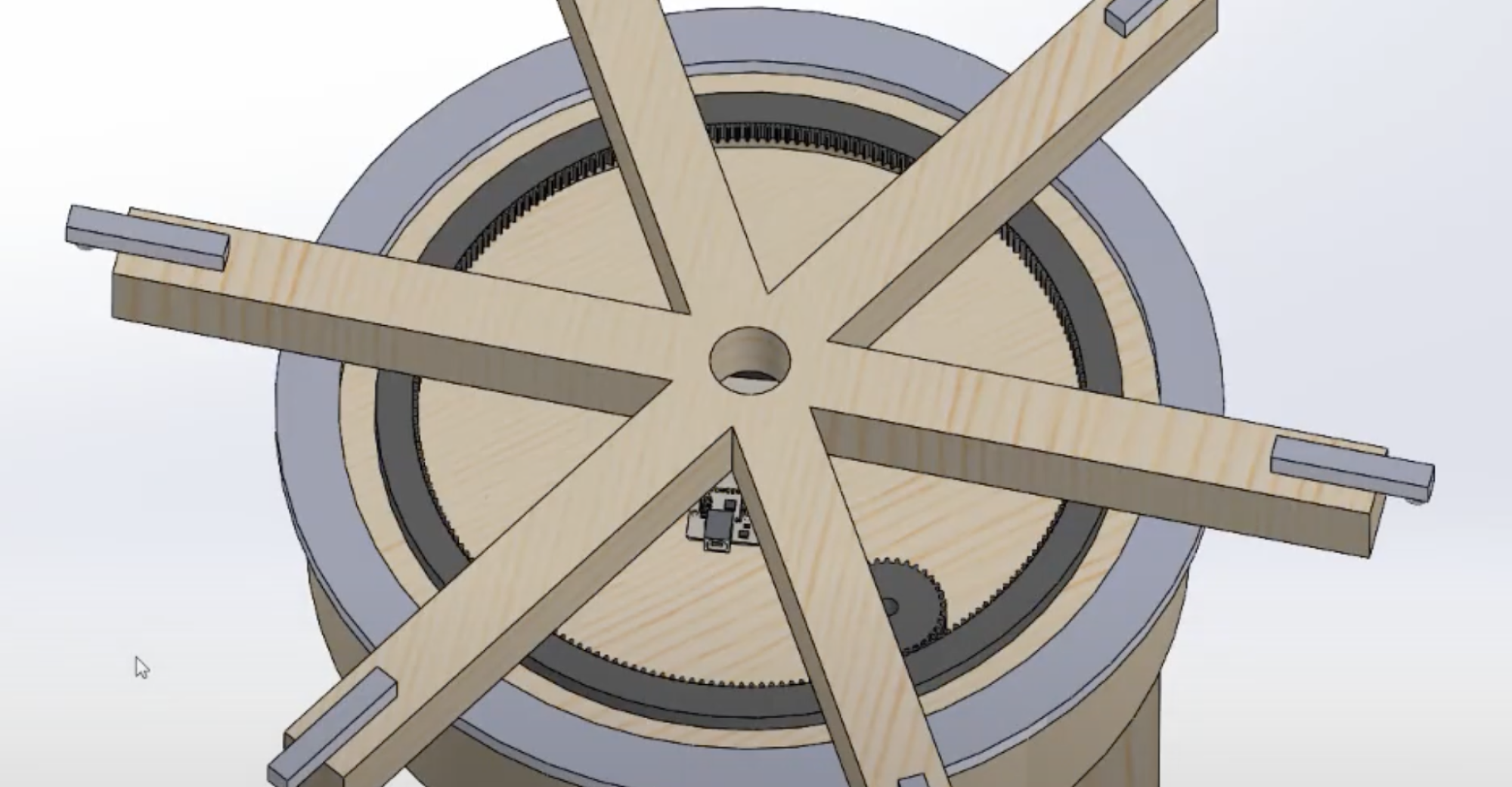

- The item stand did not have enough space to accommodate the larger motor, so I cut a large hole in the structure for the motor to sit in. I also modified a gear so that it could attach to the motor's larger drive shaft. We then combined all the electronics for integration testing, using the facial recognition software to control the item stand directly. Afterward, Doreen and I wrote code to turn the motor to a specific position, accounting for the gear ratio between the motor shaft and the item stand.

- Next, we installed two LEDs to give the user feedback: a blinking yellow LED that shows which position to place their item in, and a red LED that indicates the user checked their item in or out too late and must try again.
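The gear-ratio conversion behind the motor positioning code can be sketched roughly as follows. This is a minimal sketch, not our actual code: it assumes a rotating stand with evenly spaced item slots, a 200-step (1.8°) motor, 8x microstepping, and a 4:1 gear ratio. Those constants, and the names `steps_to_position` and `slot_angle`, are hypothetical placeholders.

```python
# Sketch: convert a target item-stand angle into a stepper step count,
# accounting for the gear ratio. All constants below are ASSUMED values.

FULL_STEPS_PER_REV = 200   # typical 1.8-degree stepper (assumption)
MICROSTEPS = 8             # driver microstepping setting (assumption)
GEAR_RATIO = 4.0           # motor turns per one stand turn (hypothetical)

# Steps needed for the item stand itself to make one full revolution.
STEPS_PER_STAND_REV = FULL_STEPS_PER_REV * MICROSTEPS * GEAR_RATIO


def steps_to_position(current_deg: float, target_deg: float) -> int:
    """Return the signed number of motor steps needed to rotate the stand
    from current_deg to target_deg, taking the shorter direction."""
    # Wrap the angular difference into [-180, 180) degrees.
    delta = (target_deg - current_deg + 180.0) % 360.0 - 180.0
    # Scale stand degrees to motor steps through the gear ratio.
    return round(delta / 360.0 * STEPS_PER_STAND_REV)


def slot_angle(slot: int, num_slots: int) -> float:
    """Angle in degrees of an item slot, assuming evenly spaced slots."""
    return (slot % num_slots) * 360.0 / num_slots
```

With these assumed constants, `steps_to_position(0, 90)` gives 1600 steps, and moving from 0° to 270° gives -1600 because the sketch rotates the short way around.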

NEXT WEEK

- As last week, I am still slightly behind schedule, though I have caught up somewhat. As a team, we should be nearing the end of end-to-end testing, but we are only now cleaning up and finishing the system so that testing can begin. Again, the slack time we allocated covers this delay. Not much work remains on the project.

- Next week, I plan to install all the components permanently and help Surafel improve the speed and accuracy of the facial recognition. After that, we can perform testing and wrap up the project.

SUBSYSTEM VERIFICATION

- The main subsystem I have been working on this semester is the hardware item stand, which itself contains several smaller components: the load cell system polls for weight changes, the stand structure was built to support heavy loads, and the motor was chosen to move heavy loads. The use case requirements that relate directly and solely to the item stand are its hardware integrity (how much weight it can handle) and how quickly it can detect user interaction (placing vs. withdrawing an item).

- I have done some preliminary testing with weights on the item stand, but nowhere near our original target of 150 pounds. I also have not yet tested increasing the weights or introducing load imbalance. Once the system is complete, I plan to gather objects of varying weights and test the stand's integrity.

- The second subsystem that relates to a design/use case requirement is user-interaction detection. I tested this subsystem earlier by adding and removing random objects on the load cells and recording when the load cells registered the change. The load cells detect weight changes in under 1 second.

- Overall, I plan to run the hardware item stand tests described in the design proposal and check whether the results meet the benchmarks we set.
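To make the placing-vs-withdrawing check concrete, here is a minimal Python sketch of how polled load-cell readings could be classified. It assumes readings arrive as `(timestamp_s, weight_g)` pairs, a 50 g change threshold, and that a change must persist for 3 consecutive samples before it counts; the threshold, sample count, and the names `Event` and `detect_event` are hypothetical and not taken from our actual code. How the readings are obtained from the load cell hardware is not shown.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class Event:
    kind: str         # "placed" (heavier) or "withdrawn" (lighter)
    delta_g: float    # weight change relative to the baseline, in grams
    latency_s: float  # time from first over-threshold sample to confirmation


def detect_event(
    samples: Iterable[Tuple[float, float]],
    baseline_g: float,
    threshold_g: float = 50.0,  # hypothetical minimum change to count
    settle_count: int = 3,      # hypothetical consecutive samples required
) -> Optional[Event]:
    """Return the first stable weight change beyond threshold_g, or None."""
    run = 0          # consecutive over-threshold samples with the same sign
    sign = 0         # +1 heavier, -1 lighter, 0 no significant change
    first_t = 0.0    # timestamp where the current run started
    for t, w in samples:
        delta = w - baseline_g
        s = 1 if delta >= threshold_g else -1 if delta <= -threshold_g else 0
        if s == 0:
            # Back within the noise band: reset the run.
            run, sign = 0, 0
            continue
        if s != sign:
            # Direction changed (or a new run began): restart timing here.
            run, sign, first_t = 0, s, t
        run += 1
        if run >= settle_count:
            kind = "placed" if s > 0 else "withdrawn"
            return Event(kind, delta, t - first_t)
    return None


samples = [(0.0, 1000.0), (0.1, 1002.0), (0.2, 1500.0), (0.3, 1498.0), (0.4, 1501.0)]
print(detect_event(samples, baseline_g=1000.0))
```

In a verification run, each detected event's `latency_s` could be logged and compared against the 1-second requirement. Note that this only measures the confirmation delay after the first over-threshold sample, so the polling interval would need to be added to estimate end-to-end latency.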