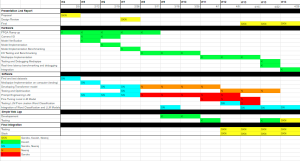

After our demo this week, the two main risks we face are being able to accelerate the HPE on the FPGA with a low enough latency and ensuring that our classification model has a high enough accuracy. Our contingency plan for HPE remains the same, if the FPGA accelerations does not make it fast enough, we will switch to accelerating it on the GPU on our Jetson nano. As for our classification model, our contingency is retraining it on an altered dataset with better parameter tuning. Our subsystems and main idea have not changed yet. No updated schedule is necessary, the one submitted on slack channel for interim demo (seen below) is correct.

Validation We need to check for two main things when doing end to end testing: latency and accuracy. For latency, we plan to run pre-recording videos through the entire system and benchmark the time of each subsystem’s output alongside end-to-end latency. This will help us ensure that each part is within the latency limits listed in our design report and the entire project’s latency is within our set goals. For accuracy, we plan on running live stream of us signing words, phrases, and sentences and recording the given output. We will then compare our intended sentence with the output to calculate a BLUE score to ensure it is greater than or equal to 40%.