What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

As discussed in Casper’s individual report, the most significant risk is currently the “Out of frame resources” error that occurs when we run our program. It causes intermittent crashes that we cannot yet predict. If we are unable to determine exactly when this error occurs and how to resolve it, we can revert to running YOLOv8 alone, without VSLAM.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

As discussed above, we may have to discard VSLAM because of resource limitations. Without VSLAM, we will not be able to visualize the map or use the compass coordinates from the IMU.

Provide an updated schedule if changes have occurred

List all unit tests and overall system tests carried out for experimentation of the system. List any findings and design changes made from your analysis of test results and other data obtained from the experimentation.

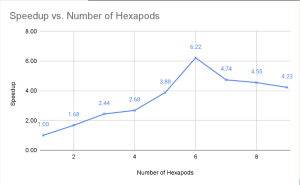

Scalability speedup test:

We found that speedup decreased beyond six hexapods, so we should not scale the system above six units.
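The scaling decision above amounts to picking the fleet size with the highest measured speedup. The sketch below assumes speedup is defined in the usual way, S(n) = T(1) / T(n), where T(n) is the time n hexapods take to complete the same task; the function names and the example times are hypothetical and for illustration only, not our measured data.

```python
def speedups(times_by_count: dict[int, float]) -> dict[int, float]:
    """Return S(n) = T(1) / T(n) for each hexapod count n (assumed definition)."""
    if 1 not in times_by_count:
        raise ValueError("a single-hexapod baseline time T(1) is required")
    baseline = times_by_count[1]
    # Sort by hexapod count so the result reads in scaling order.
    return {n: baseline / t for n, t in sorted(times_by_count.items())}


def best_fleet_size(times_by_count: dict[int, float]) -> int:
    """Return the hexapod count with the largest speedup (hypothetical helper)."""
    s = speedups(times_by_count)
    return max(s, key=s.get)


if __name__ == "__main__":
    # Hypothetical completion times in seconds, NOT real test results.
    example_times = {1: 60.0, 2: 32.0, 4: 18.0, 6: 14.0, 8: 15.0}
    for n, s in speedups(example_times).items():
        print(f"{n} hexapods: speedup {s:.2f}")
    print("best fleet size:", best_fleet_size(example_times))
```

With real measurements substituted for `example_times`, any count past the peak returned by `best_fleet_size` is one where adding hexapods no longer pays off.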

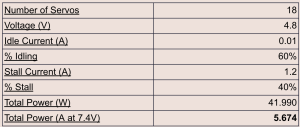

Battery test:

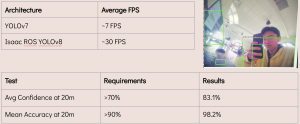

Object classification test:

Based on the object classification tests, we changed our design to use a Jetson Orin Nano instead of a Jetson Nano. We needed faster computation, and we wanted to run YOLOv8 with hardware acceleration, which requires a JetPack version that the Jetson Nano could not run. The mean accuracy readings also showed that we no longer need to train a new model extensively, since the accuracy of our COCO-pretrained model was already very strong.