What did you personally accomplish this week on the project?

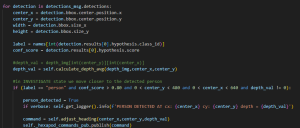

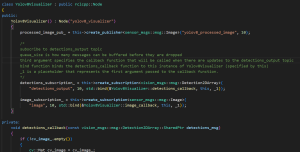

This week I focused on debugging the depth image sensing and image pipeline. At the beginning of the week, our depth and color images were no longer triggering our main callback. After a long debugging process, we found that the main issue was that the two image streams were not being synced correctly by the TimeSynchronizer object, which only fires when the message timestamps match exactly. To get around this, we switched to an ApproximateTimeSynchronizer with an adjustable slop value. Additionally, I continued work on our custom YOLOv8 model. This week I added more images of hexapods and refined our dataset with more images of people of different ethnicities to reduce bias in the model.
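To illustrate the synchronization issue described above, here is a minimal pure-Python sketch of why exact timestamp matching can produce no callbacks while a small slop tolerance recovers the pairs. It is not the algorithm `message_filters` actually uses. The function `pair_stamps`, the timestamps, and the slop values are all hypothetical and chosen only for illustration. In ROS, the analogous setting is the `slop` argument (in seconds) of `message_filters.ApproximateTimeSynchronizer`.

```python
from typing import List, Optional, Tuple


def pair_stamps(color: List[float], depth: List[float],
                slop: float) -> List[Tuple[float, float]]:
    """Pair each color stamp with the closest unused depth stamp
    whose time difference is at most `slop` seconds."""
    pairs: List[Tuple[float, float]] = []
    used = set()  # indices of depth stamps that are already paired
    for c in color:
        best: Optional[int] = None
        for i, d in enumerate(depth):
            if i in used or abs(c - d) > slop:
                continue
            if best is None or abs(c - d) < abs(c - depth[best]):
                best = i
        if best is not None:
            used.add(best)
            pairs.append((c, depth[best]))
    return pairs


# Hypothetical stamps (seconds): the streams are offset by a few milliseconds.
color_stamps = [0.000, 0.033, 0.066, 0.100]
depth_stamps = [0.004, 0.037, 0.071, 0.104]

exact = pair_stamps(color_stamps, depth_stamps, slop=0.0)    # exact matching
approx = pair_stamps(color_stamps, depth_stamps, slop=0.01)  # 10 ms tolerance

print(len(exact), len(approx))
```

With these made-up stamps, exact matching yields no pairs, so a callback would never fire, while the 10 ms tolerance pairs every frame. A slop that is too large risks pairing frames that do not belong together, which is why keeping it adjustable is useful.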

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I think we are on schedule if we count the addition of slack time. We’re doing some final search behavior testing right now and we’ll be testing our multiple hexapod system soon.

What deliverables do you hope to complete in the next week?

In the next week, we hope to complete our posters and refine our hexapod behaviors.