This week, I trained and tested a model on the broader dataset I assembled last week, which adds 10 new classes. I originally recorded 13 videos and then used data augmentation to create more data: for example, I slightly increased and decreased the brightness and rotated the videos by a few degrees. After augmentation, every class had the same amount of data, since class balance is important in model training.

When I tested this new model, it did not accurately predict the new signs, and it even lowered the accuracy on the original signs. After spending some time trying to diagnose the issue, I went back to my original model and added only 1 new sign, making sure each video was consistent in the number of features MediaPipe could extract from it. However, this one additional sign was not predicted accurately either. I then tuned the model architecture, for example by adding more LSTM and dense layers, to see whether model complexity was the issue.
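The augmentation and balancing step could be sketched as follows. This is a minimal NumPy-only sketch, not the code used in the project: it assumes each video is already decoded into a `(frames, height, width, 3)` uint8 array, and the brightness offset (±25) and rotation angle (±5°) are hypothetical stand-ins for "a bit" and "a few degrees". The hypothetical helper `balance_with_augmentation` tops up each class with augmented copies until every class has the same number of videos.

```python
import math
from typing import Callable, Dict, List, Optional

import numpy as np

Video = np.ndarray  # assumed shape (T, H, W, 3), dtype uint8


def adjust_brightness(video: Video, delta: int) -> Video:
    """Add `delta` to every pixel (positive = brighter), clipped to the uint8 range."""
    shifted = video.astype(np.int16) + delta
    return np.clip(shifted, 0, 255).astype(np.uint8)


def rotate_video(video: Video, degrees: float) -> Video:
    """Rotate every frame about its centre (nearest neighbour); uncovered pixels become black."""
    _, h, w, _ = video.shape
    theta = math.radians(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, look up the source pixel it comes from.
    src_x = np.rint(math.cos(theta) * (xs - cx) + math.sin(theta) * (ys - cy) + cx).astype(int)
    src_y = np.rint(-math.sin(theta) * (xs - cx) + math.cos(theta) * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(video)
    out[:, ys[valid], xs[valid]] = video[:, src_y[valid], src_x[valid]]
    return out


# Hypothetical settings standing in for "a bit" of brightness and "a few degrees" of rotation.
DEFAULT_AUGMENTERS: List[Callable[[Video], Video]] = [
    lambda v: adjust_brightness(v, 25),
    lambda v: adjust_brightness(v, -25),
    lambda v: rotate_video(v, 5),
    lambda v: rotate_video(v, -5),
]


def balance_with_augmentation(
    dataset: Dict[str, List[Video]],
    augmenters: List[Callable[[Video], Video]],
    target: Optional[int] = None,
) -> Dict[str, List[Video]]:
    """Augment each class up to `target` videos (default: size of the largest class).

    Assumes every class has at least one original video; classes already at or
    above `target` are left unchanged.
    """
    if target is None:
        target = max(len(videos) for videos in dataset.values())
    balanced = {}
    for label, videos in dataset.items():
        out = list(videos)
        i = 0
        while len(out) < target:
            # Cycle through the originals, switching augmenter after each full pass.
            source = videos[i % len(videos)]
            augment = augmenters[(i // len(videos)) % len(augmenters)]
            out.append(augment(source))
            i += 1
        balanced[label] = out
    return balanced
```

The offsets are placeholders to tune; a larger rotation could push part of a hand out of frame and change what MediaPipe extracts from the augmented copy.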

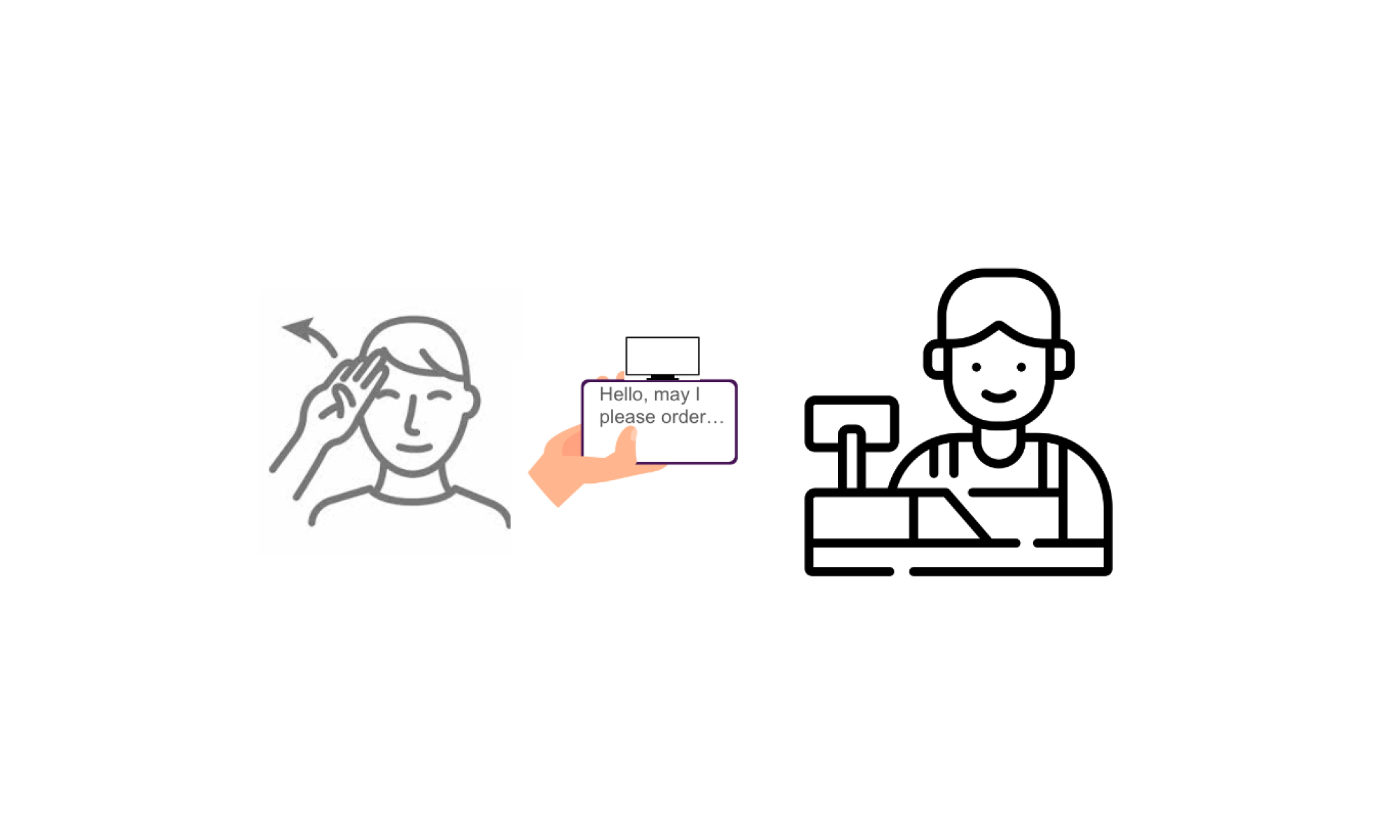

While this model was training, I added support for displaying and structuring sentences. The app treats 5 seconds with no hands in the frame as the end of a sentence and clears the displayed words. Since sign language uses a different word order than written English, I also added an LLM step to restructure the predicted words. To do this, I used the OpenAI API: after words are predicted, the app sends a request asking the GPT-3.5 model to rewrite them as readable English, and the result is displayed on the webcam screen. After iterating on the prompt for a while, the LLM eventually turned the predicted words into accurate sentences. In the images below, the green text is the direct word-by-word translation and the white text is the output from the LLM.
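The end-of-sentence timeout and the LLM call might look roughly like the sketch below. It assumes the recognizer reports, for each frame, whether hands were detected and optionally a predicted word; `SentenceBuffer`, `gpt35_restructure`, and the prompt wording are hypothetical. The OpenAI call uses the v1 `openai` Python client's `chat.completions.create` with the `gpt-3.5-turbo` model, and the restructuring function is passed in as a parameter so the buffer logic runs without network access.

```python
import time
from typing import Callable, List, Optional

NO_HANDS_TIMEOUT_S = 5.0  # from the write-up: 5 s without hands ends a sentence

# Hypothetical prompt; the wording actually used in the project is not given.
PROMPT = (
    "You will receive words recognized from sign language, in signing order. "
    "Rewrite them as one grammatical English sentence. Reply with the sentence only."
)


def gpt35_restructure(words: List[str]) -> str:
    """Ask GPT-3.5 to reorder the raw words into English (needs openai>=1.0 and OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so the rest of the module works offline

    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": PROMPT},
            {"role": "user", "content": " ".join(words)},
        ],
    )
    return (response.choices[0].message.content or "").strip()


class SentenceBuffer:
    """Collects predicted signs into a sentence and clears it after a hands-free pause."""

    def __init__(self, restructure: Callable[[List[str]], str],
                 timeout_s: float = NO_HANDS_TIMEOUT_S) -> None:
        self.restructure = restructure  # e.g. gpt35_restructure, or a stub in tests
        self.timeout_s = timeout_s
        self.words: List[str] = []      # raw predictions (the "green" text)
        self.sentence: str = ""         # LLM output (the "white" text)
        self._last_hands_seen: Optional[float] = None

    def update(self, hands_present: bool, predicted_word: Optional[str] = None,
               now: Optional[float] = None) -> None:
        """Process one frame: add a new word, or end the sentence after the timeout."""
        now = time.monotonic() if now is None else now
        if hands_present:
            self._last_hands_seen = now
            # Assumption: skip consecutive repeats so a held sign is only added once.
            if predicted_word and (not self.words or self.words[-1] != predicted_word):
                self.words.append(predicted_word)
                # Assumption: re-query the LLM each time the word list changes.
                self.sentence = self.restructure(self.words)
        elif (self.words and self._last_hands_seen is not None
              and now - self._last_hands_seen >= self.timeout_s):
            # No hands for `timeout_s` seconds: end of sentence, so reset the display.
            self.words = []
            self.sentence = ""
```

Dropping consecutive duplicate predictions keeps a sign held across many frames from being added repeatedly, at the cost of also dropping words that are intentionally signed twice in a row.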

My progress is mostly on schedule since I have added the LLM for sentence structuring.

Next week, I will continue optimizing the machine learning model so that it can recognize more phrases successfully. I will also work with my teammates to integrate the model into the iOS app using Core ML.