- What did you personally accomplish this week on the project?

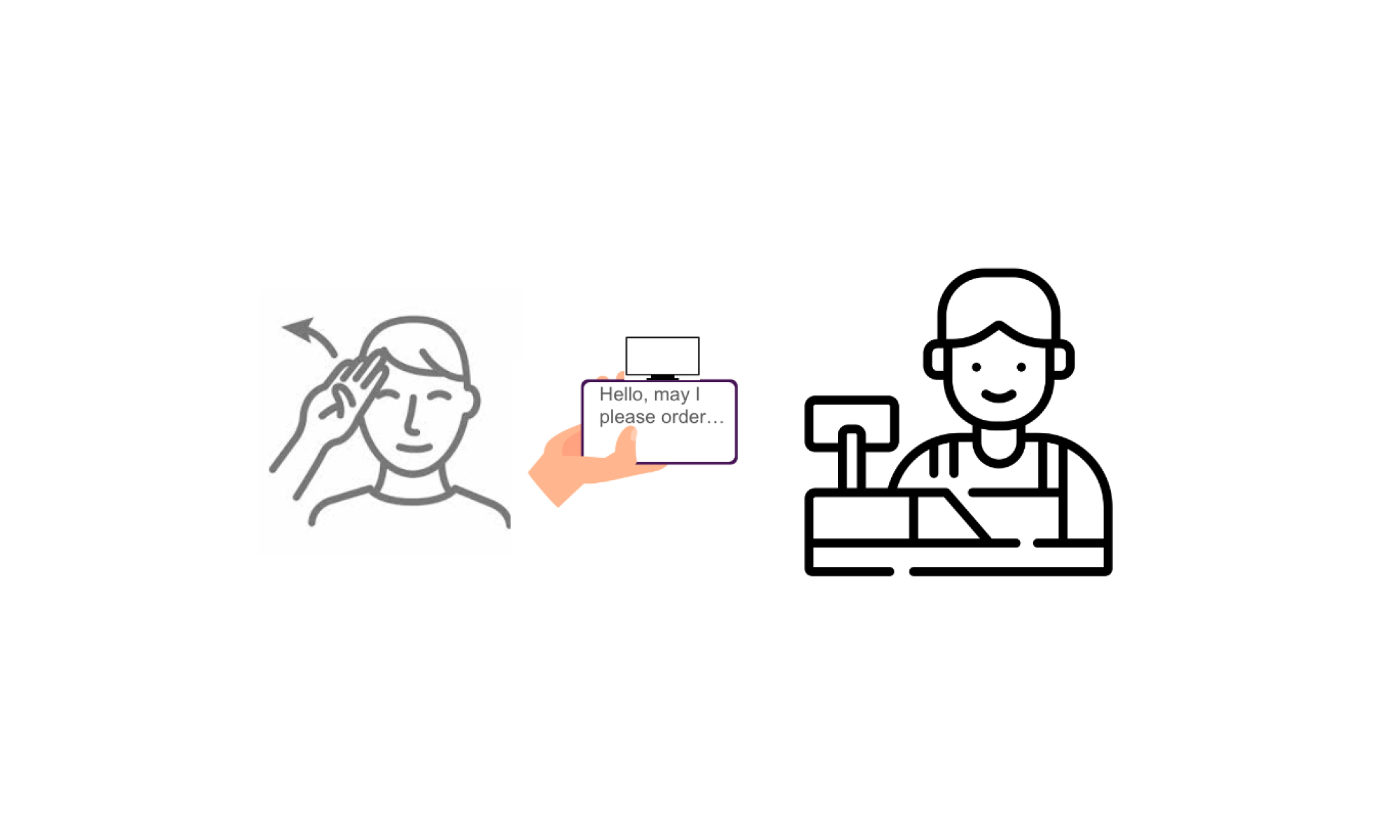

After getting MediaPipe Hands recognition working on a loaded video, I moved on to enabling live video input and detecting dynamic body pose in addition to hands. With guidance from Professor Savvides and Neha, I was able to answer last week's question about the frame processing rate: the likely cause was that the loaded MP4 video played at a high frame rate incompatible with OpenCV. However, this issue turned out to be irrelevant to our implementation plan, since our use case only involves live input from a webcam or phone camera. I added a live frame rate monitoring function, which measured our recognition frame rate at roughly 10 to 15 frames per second under real-time dynamic capture conditions.

Thanks to Sejal's preliminary working ML model, I have now completed my assigned task of static alphabetical gesture recognition. Our team then decided to also detect the user's upper-body movement, mainly arm and shoulder poses, along with the detailed gestures of both hands. Accordingly, I experimented with the MediaPipe Holistic model and successfully implemented this recognition feature (see the screenshot above; the code is on GitHub).

Lastly, as the presenter for the design review, I prepared the presentation slides and rehearsed the speech.
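For reference, a live frame rate monitor like the one described above can be as simple as a rolling counter of per-frame timestamps. The sketch below is a hypothetical illustration, not the code in our GitHub repo: the class name `FrameRateMonitor`, the 30-frame default window, and the use of `time.perf_counter` are assumptions. It estimates FPS from the last `window` frames so the reading reacts quickly when processing slows down.

```python
import time
from collections import deque
from typing import Deque, Optional


class FrameRateMonitor:
    """Hypothetical helper: rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window: int = 30) -> None:
        if window < 2:
            raise ValueError("window must be at least 2 frames")
        # Oldest timestamps drop off automatically once the window is full.
        self._stamps: Deque[float] = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> None:
        # Record when a frame finished processing (defaults to a monotonic clock).
        self._stamps.append(time.perf_counter() if now is None else now)

    def fps(self) -> float:
        # At least two timestamps are needed to measure an interval.
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        if elapsed <= 0:
            return 0.0
        # N timestamps span N - 1 frame intervals.
        return (len(self._stamps) - 1) / elapsed


if __name__ == "__main__":
    monitor = FrameRateMonitor(window=5)
    for i in range(10):
        monitor.tick(now=i * 0.08)  # simulate one processed frame every 80 ms
    print(f"{monitor.fps():.1f} FPS")  # 12.5 FPS
```

In a real capture loop, `monitor.tick()` would be called once after each frame is run through MediaPipe, and `monitor.fps()` could be drawn onto the preview window.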

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is on schedule.

- What deliverables do you hope to complete in the next week?

Speed up CV processing

Recognize static alphabetical gestures from live webcam input

Design presentation slides and speech