Part A was written by Rohan, Part B was written by Karen, and Part C was written by Arnav.

Global Factors

People in the workforce, across a wide variety of disciplines and geographic regions, spend significant amounts of time working at a desk with a laptop or monitor setup. While the average work day lasts 8 hours, most people are only actually productive for 2-3 hours. Improved focus and longer-lasting productivity have many benefits for individuals including personal fulfillment, pride in one’s performance, and improved standing in the workplace. At a larger scale, improving individuals’ productivity also leads to a more rapidly advancing society where the workforce as a whole can innovate and execute more efficiently. Overall, our product will improve individuals’ quality of life and self-satisfaction while simultaneously improving the rate of global societal advancement.

Cultural Factors

In today’s digital age, there’s a growing trend among people, particularly the younger generation and students, to embrace technology as a tool to improve their daily lives. This demographic is highly interested in leveraging technology to improve productivity, efficiency, and overall well-being. Also within a culture that values innovation and efficiency, there is a strong desire to optimize workflows and streamline tasks to achieve better outcomes in less time. Moreover, there’s an increasing awareness of the importance of mindfulness and focus in achieving work satisfaction and personal fulfillment. As a result, individuals seek tools and solutions that help them cultivate mindfulness, enhance focus, and maintain a healthy work-life balance amidst the distractions of the digital world. Our product aligns with these cultural trends by providing users with a user-friendly platform to monitor their focus levels, identify distractions, and ultimately enhance their productivity and overall satisfaction with their work.

Environmental Factors

The Focus Tracker App takes into account the surrounding environment, like background motion/ light, interruptions, and conversations to help users stay focused. It uses sensors and machine learning to understand and react to these conditions. By optimizing work conditions such as informing the user that the phone is being used too often or the light is too bright, it encourages a reduction in unnecessary energy consumption. Additionally, the app’s emphasis on creating a focused environment helps minimize disruptions that could affect both the user and its surroundings.

Team Progress

The majority of our time this week was spent working on the design report.

This week, we sorted out the issues we were experiencing with putting together the data collection system last week. In the end, we settled on a two-pronged design: we will utilize the EmotivPRO application’s built-in EEG data recording system to record power readings within each of the frequency bands from the AF3 and AF4 sensors (the two sensors corresponding to the prefrontal cortex) while simultaneously running a simple python program which takes in Professor Dueck’s keyboard input, ‘F’ for focused ‘D’ for distracted and ‘N’ for neutral. While this system felt natural to us, we were not sure if this type of stateful labeling system would match Professor Dueck’s mental model when observing her students. Furthermore, given that Professor Dueck would be deeply focused on observing her students, we were hoping that the system would be easy enough for her to use without having to apply much thought to it. On Monday of this week, we met with Professor Dueck after our weekly progress update with Professor Savvides and Jean for our first round of raw data collection and ground truth labeling. To our great relief, everything ran extremely smoothly with the EEG quality coming through with minimal noise and Professor Dueck finding our data labeling system to be extremely intuitive and natural to use. One of the significant risk factors for our project has been EEG-based focus detection. As with all types of signal processing and analysis, the quality of the raw data and ground truth labels are critical to training a highly performant model. This was a significant milestone because while we had tested the data labeling system that Arnav and Rohan designed, it was the first time Professor Dueck was using it. We continued to collect data on Wednesday on a different one from Professor Dueck, and this session went equally as smoothly. Having secured some initial high-fidelity data with high granularity ground truth labels, we feel that the EEG aspect of our project has been significantly de-risked. Going forward, we have to map the logged timestamps from the EEG readings to the timestamps from Professor Dueck’s ground truth labels so we can begin feeding our labeled data into a model for training. This coming week, we hope to have this linking of the raw data with the labels complete as well as an initial CNN trained on the resulting dataset. From there, we can assess the performance of the model, verify that the data has a high signal-to-noise ratio, and begin to fine-tune the model to improve upon our base model’s performance.

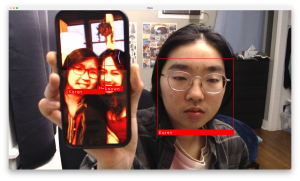

A new risk that could jeopardize the progress of our project is the performance of the phone object detection model. The custom YOLOv8 model that has been trained does not currently meet the design requirements of mAP ≥95%. We may need to lower this threshold, improve the model with further training, or use a pre-trained object detection model. We have already found other datasets that we can further train the model on (like this one) and have also found a pre-trained model on Roboflow that has higher performance than the custom model that we trained. This Roboflow model can be something we fall back on if we cannot get our custom model to perform sufficiently well.

The schedule for camera-based detections was updated to be broken down into the implementation of each type of distraction to be detected. Unit testing and then combining each of the detectors into one module will begin on March 18.

To mitigate the risks associated with EEG data reliability in predicting focus states, we have developed 3 different plans:

Plan A involves leveraging EEG data collected from musicians and Professor Jocelyn uses her expertise and visual cues to label states of focus and distraction during music practice sessions. This method relies heavily on her understanding of individual focus patterns within a specific, skill-based activity.

Plan B broadens the data collection to include ourselves and other participants engaged in completing multiplication worksheets under time constraints. Here, focus states are identified in environments controlled for auditory distractions using noise-canceling headphones, while distracted states are simulated by introducing conversations during tasks. This strategy aims to diversify the conditions under which EEG data is collected.

Plan C shifts towards using predefined performance metrics from the Emotiv EEG system, such as Attention and Engagement, setting thresholds to classify focus states. Recognizing the potential oversimplification in this method, we plan to correlate specific distractions or behaviors, such as phone pick-ups, with these metrics to draw more detailed insights into their impact on user focus and engagement. By using language model-generated suggestions, we can create personalized advice for improving focus and productivity based on observed patterns, such as recommending strategies for minimizing phone-induced distractions. This approach not only enhances the precision of focus state prediction through EEG data but also integrates behavioral insights to provide users with actionable feedback for optimizing their work environments and habits.

Additionally, we established a formula for the productivity score we will assign to users throughout the work session. The productivity score calculation in the Focus Tracker App quantifies an individual’s work efficiency by evaluating both focus duration and distraction frequency. It establishes a distraction score (D) by comparing the actual number of distractions (A) encountered during a work session against an expected number (E), calculated based on the session’s length with an assumption of one distraction every 5 minutes. The baseline distraction score (D) starts at 0.5. If A <= E: then D = 1 – 0.5 * A / E. If A > E, then  .

.

This ensures the distraction score decreases but never turns negative. The productivity score (P) is then determined by averaging the focus fraction and the distraction score. This method ensures a comprehensive assessment, with half of the productivity score derived from focus duration and the other half reflecting the impact of distractions.

Overall, our progress is on schedule.

.

.