I spent this week researching CV and ML libraries in more depth to find ones I can use to implement camera-based distraction and behavior detection. I found MediaPipe and Dlib, two Python-compatible libraries that can be used for facial landmark detection. I plan to use them to help detect drowsiness, yawning, and off-screen gazing. MediaPipe can also be used for object recognition, which I plan to experiment with for phone pick-up detection. Here is a document summarizing my research and brainstorming for camera-based distraction and behavior detection.

I also looked into and experimented with a few existing implementations of drowsiness detection. Based on this research and experimentation, I plan to use facial landmark detection to calculate the eye aspect ratio (EAR) and mouth aspect ratio (MAR), and potentially a trained neural network to predict how drowsy the user is.
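
To make the eye aspect ratio and mouth aspect ratio concrete, here is a minimal sketch of how I expect to compute them from landmark coordinates. The EAR follows the commonly used six-point formulation (two vertical eyelid distances divided by twice the horizontal eye width). The MAR shown is only one simple variant (mouth opening divided by mouth width), and I have not settled on a definition yet. The function names, the landmark ordering, and the example coordinates are all assumptions for illustration; mapping these points to actual MediaPipe or Dlib landmark indices is not shown.

```python
import math


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio from six (x, y) eye landmarks.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    p2 and p3 lie on the upper eyelid, p6 and p5 on the lower eyelid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def mouth_aspect_ratio(top, bottom, left, right):
    """One simple MAR variant (an assumption): mouth opening over mouth width."""
    return math.dist(top, bottom) / math.dist(left, right)


# Hypothetical landmark coordinates, not real detector output.
open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
ear = eye_aspect_ratio(open_eye)
mar = mouth_aspect_ratio((0, 1), (0, -1), (-2, 0), (2, 0))
```

The EAR drops toward zero as the eyelids close, and the MAR rises as the mouth opens, so both can be compared against thresholds that I will need to tune once the camera arrives.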

Lastly, I submitted an order for a 1080p web camera that I will use to produce consistent camera results.

Overall, my progress is on schedule.

In the coming week, I hope to have a preliminary implementation of drowsiness detection. I would like to have working yawning and closed-eye detection based on the mouth aspect ratio and eye aspect ratio, respectively. I will also collect data and train a preliminary neural network to classify images as drowsy or not drowsy. If time permits, I will also begin experimenting with head tracking and off-screen gaze detection.
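
As a rough idea of how closed-eye detection could work on top of the eye aspect ratio, the sketch below flags the eyes as closed only after the ratio stays below a threshold for several consecutive frames, so that normal blinks are not counted. The class name, the threshold of 0.2, and the 15-frame window are placeholder assumptions that I expect to tune, not values I have validated.

```python
from dataclasses import dataclass


@dataclass
class ClosedEyeDetector:
    """Flags sustained eye closure from a stream of per-frame EAR values."""

    ear_threshold: float = 0.2    # assumed value, to be tuned
    min_closed_frames: int = 15   # assumed value, to be tuned
    closed_frames: int = 0        # running count of consecutive low-EAR frames

    def update(self, ear: float) -> bool:
        """Process one frame's EAR and return True if the eyes count as closed."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
        else:
            # Any frame with open eyes resets the streak.
            self.closed_frames = 0
        return self.closed_frames >= self.min_closed_frames


# Hypothetical EAR values for five frames, using a short window for the demo.
detector = ClosedEyeDetector(ear_threshold=0.2, min_closed_frames=3)
flags = [detector.update(ear) for ear in [0.3, 0.1, 0.1, 0.1, 0.3]]
```

The same pattern could be reused for yawning by checking whether the mouth aspect ratio stays above a threshold instead.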

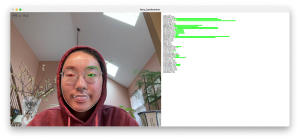

Below is a screenshot of me experimenting with the MediaPipe face landmark detection.

Below is a screenshot of me experimenting with an existing drowsiness detector.