What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

- Weight of the device:

We initially estimated a weight of 200 grams for the whole device, which in retrospect was a vast underestimate. Our on-board computer (NVIDIA Jetson Nano) alone weighs 250 grams, and the device also carries other heavy components such as the PCB/Arduino, the rechargeable battery pack, and the sensors. We also intend to 3D print a case to improve the overall look and feel of the device. Given all of this, we expect the total weight to be around 400-450 g. We now run the risk of the device being too bulky, uncomfortable, and impractical for our use case. Although we will make efforts along the way to reduce weight where we can, our backup plan is to move the battery pack, and potentially the Jetson, to the user's waist so that the weight is distributed and less of a disturbance for the user.

- Connection to peripherals:

We plan to connect the peripherals (buttons, sensor, and vibration motor) to the GPIO pins of the Jetson, with a custom PCB in between to manage the voltage and current levels. A risk with this approach is that custom PCBs take time to order, and there may not be enough time to redesign a PCB if there are bugs. We plan to manage this risk by first breadboarding the PCB circuit to ensure it is adequate for safely connecting the peripherals before we place the PCB order. Our contingency plan in case the PCB still has bugs is to replace the PCB with an Arduino, which will require us to switch to serial communication between the Jetson and Arduino and will cause us to reevaluate our power and weight requirements.

- Recognition of a partial frame of an object:

Although we plan to find a dataset of indoor objects that includes some partial images of objects, recognition can still be inaccurate when the camera is so close that the object is cropped in the frame. To mitigate this risk, we plan to implement a history referral system: when the confidence of the current recognition falls below a chosen threshold, the device looks back through its history of recently recognized objects to determine what the object is. That way, even when a user walks so close to an object that the product can no longer recognize it directly, it can still produce a result from the history.
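The history referral idea can be sketched as follows. This is a minimal illustration and not our final implementation: the class name `RecognitionHistory`, the default threshold of 0.6, the history length of 10, and the majority-vote fallback are all assumptions made for the example.

```python
from collections import Counter, deque
from typing import Deque, Optional


class RecognitionHistory:
    """Remembers recent confident detections and uses them as a fallback.

    All names and default values here are hypothetical placeholders.
    """

    def __init__(self, threshold: float = 0.6, max_items: int = 10) -> None:
        self.threshold = threshold
        # Only the most recent `max_items` confident labels are kept.
        self._recent: Deque[str] = deque(maxlen=max_items)

    def resolve(self, label: Optional[str], confidence: float) -> Optional[str]:
        """Return the label to announce for the current frame, if any."""
        if label is not None and confidence >= self.threshold:
            # Confident detection: record it and report it directly.
            self._recent.append(label)
            return label
        if not self._recent:
            # Nothing confident has been seen yet, so there is no fallback.
            return None
        # Low confidence: fall back to the most common recent label,
        # breaking ties in favor of the most recently seen one.
        counts = Counter(self._recent)
        best = max(counts.values())
        for past in reversed(self._recent):
            if counts[past] == best:
                return past
        return None
```

For example, if the device confidently sees a chair for several frames and the user then walks close enough that the cropped frame scores below the threshold, `resolve` keeps returning `"chair"` instead of an unreliable guess.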

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- Switch from RPi to NVIDIA Jetson Nano:

In our previous report, we mentioned taking a network-based approach that offloads the bulk of the processing from the RPi to a server hosted in the cloud. This would have made the device Wi-Fi dependent, and we quickly decided against that approach because we want our device to be accessible and easy to use rather than an added chore for the user. After some research, we found that switching from the RPi to the NVIDIA Jetson Nano as our on-board computer makes the most sense for our project: it avoids both overloading the RPi and relying on a network connection to a server. The Jetson Nano offers higher performance and a more powerful GPU, which makes it better suited to running our object recognition model on board. Here is an updated block diagram:

As for changes in the cost, we have been able to get an NVIDIA Jetson Nano from the class inventory and so there is no additional cost. However, we have had to place a purchase order for a Jetson-compatible camera as the ones in the inventory were all taken. This was $28 out of our budget, which we believe we can definitely afford, and we don’t foresee any extra costs due to this switch.

- Extra device control (from 1 button to 2 buttons):

Our design prior to this modification was such that the vibration module, which alerts the user of objects in their way, would be the default mode for our device, and that there would be one button that worked as follows: single-press for single object identification, double-press for continuous speech identification. As we discussed this implementation further, we realized that having the vibration module turned on by default during the speech settings may be uncomfortable and possibly distracting for the user. To avoid risking overstimulation, we decided to make both the vibration and speech modules controllable via buttons, allowing the user to choose the combination of modes they want to use. This change is reflected in the above block diagram that now shows buttons A and B.

The added cost of this change should be minimal, as buttons cost around $5-10, and the change will greatly improve the user experience.
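A minimal sketch of the two-button control logic is shown below. The mapping of button A to vibration and button B to speech, the default states, and the name `DeviceModes` are assumptions for illustration only; the actual button behavior has not been finalized.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeviceModes:
    """Tracks which feedback modules are enabled (hypothetical defaults)."""

    vibration_on: bool = True   # assumed default: vibration alerts enabled
    speech_on: bool = False     # assumed default: speech output disabled

    def press(self, button: str) -> None:
        """Toggle the module assumed to be tied to the given button."""
        if button == "A":
            self.vibration_on = not self.vibration_on
        elif button == "B":
            self.speech_on = not self.speech_on
        else:
            raise ValueError(f"unknown button: {button!r}")

    def active_modes(self) -> List[str]:
        """List the currently enabled modules, for logging or debugging."""
        modes = []
        if self.vibration_on:
            modes.append("vibration")
        if self.speech_on:
            modes.append("speech")
        return modes
```

Keeping the two modules as independent flags, rather than one combined mode value, lets the user pick any combination: vibration only, speech only, both, or neither.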

- Addition of a custom PCB:

Since we have switched to the Jetson and plan to use its GPIO pins to connect the peripherals, we now need to design and order a custom PCB for voltage conversion and current limiting. This change is necessary because the operating voltages of the peripherals differ from the tolerances of the GPIO pins, so a circuit is required in between to ensure safe operation without damaging any of the devices.

The added cost of this change is the cost of ordering a PCB as well as shipping costs associated with this. Since we are using a Jetson from ECE inventory, and the rest of our peripherals are fairly inexpensive, this should not significantly impact our remaining budget.
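As a rough illustration of the voltage conversion involved, the sketch below checks a resistive voltage divider against a GPIO input limit. It assumes a 5 V peripheral output and a 3.3 V GPIO logic level, and the resistor values are placeholders; the actual circuit on our PCB may use a different approach, such as a dedicated level shifter.

```python
def divider_output(v_in: float, r_top: float, r_bottom: float) -> float:
    """Output voltage of a resistive divider: V_out = V_in * R2 / (R1 + R2)."""
    if r_top <= 0 or r_bottom <= 0:
        raise ValueError("resistor values must be positive")
    return v_in * r_bottom / (r_top + r_bottom)


def is_safe_for_gpio(v_out: float, v_max: float = 3.3) -> bool:
    """True if the divided voltage stays at or below the assumed GPIO limit."""
    return v_out <= v_max


# Assumed example values: 5 V signal, 10 kOhm on top, 18 kOhm on the bottom.
v_out = divider_output(5.0, 10_000, 18_000)
print(f"divider output: {v_out:.2f} V, safe: {is_safe_for_gpio(v_out)}")
```

With these assumed values the divider output is about 3.21 V, which stays under the 3.3 V limit. A 10 kOhm / 20 kOhm pair would give about 3.33 V and fail the check, which is the kind of margin issue we want to catch on the breadboard before ordering the PCB.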

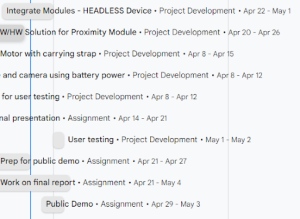

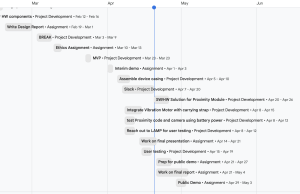

Provide an updated schedule if changes have occurred.

The hardware development schedule has changed slightly since we are now ordering a custom PCB. The plan for hardware development this week was to integrate the camera and sensor with the Jetson, but since these devices haven’t been delivered yet, we will focus on PCB design this week and will push hardware integration to the following week.

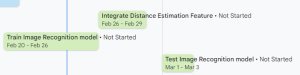

While testing a pre-trained object recognition and distance estimation model, we realized that the model detects only a few objects, and those objects are irrelevant to indoor settings. Therefore, we have decided to train the model ourselves using a dataset of common indoor objects. The work of searching for a suitable dataset and training the model has been added to the schedule, which pushes back the object recognition model testing stage by around a week.

Please write a paragraph or two describing how the product solution you are designing will meet a specified need.

Part A: … with respect to considerations of public health, safety or welfare.

Our product aims to help visually impaired people avoid dangers that would go unnoticed when using a cane alone. Not only does the product tell the user what an object is, but it also alerts them when an obstacle is right in front of them. We are targeting a safety distance of 2 meters, so that the user has time to avoid an obstacle in their own way.

If the product is successfully implemented, it can also benefit blind people in a psychological sense. Users no longer need to worry about running into an obstacle and getting hurt, which can significantly reduce their anxiety when walking in an unfamiliar environment. In addition, the user has the option to switch the device to a manual setting, in which they press a button to identify the object in front of them. This relieves the user of the stress of hearing recognized objects announced every second.

Part B: … with consideration of social factors.

The visually impaired face significant challenges when it comes to indoor navigation, often relying on assistance from those around them or guide dogs. To address this, our goal is to have our device use technology to provide an intuitive and independent navigation experience. We use a combination of depth sensors, cameras, object recognition algorithms and speech synthesis to hopefully achieve this objective. The driving factor for this project is to improve inclusivity and accessibility in our society, aiming to empower individuals to participate freely in social activities and navigate public spaces with autonomy. Through our collaboration with the Library of Accessible Media, Pittsburgh, we also hope to involve our target audience in the developmental stages, as well as testing during the final stages of our project.

Part C: … with consideration of economic factors.

Guide dogs are expensive to train and care for, and can cost the visually impaired dog owner tens of thousands of dollars. Visually impaired people may also find it difficult to care for a guide dog, which makes guide dogs an inaccessible option for many people. Our device aims to provide the services of a guide dog without the associated costs and care. It would reach a much lower price point and would be available for use immediately, while guide dogs require years of training. This makes indoor navigation aid more financially accessible to visually impaired people.