What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The major risks remain the same as last week: the weight of the device, the PCB connection between the Jetson and the peripherals, and the recognition of objects that are only partially in frame.

A new risk that could jeopardize the success of the project is the longevity of support for the YOLOv5 model. Although Ultralytics currently supports and updates it, we cannot guarantee that this version will still be supported in the next few years. To mitigate this risk, we plan to upgrade to the YOLOv9 model, which is the most recent version and is still being actively updated. We chose this approach because we found an open-source project that has already integrated distance estimation into the object recognition (OR) model. This reduces our development time: we only need to focus on training the model and creating a data processor to manage its output. If the upgrade runs into problems because YOLOv9 is still under active development, we will fall back to the YOLOv5 model, which is sufficient to meet the MVP.
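The data processor is not specified further in this report, so the following is only a minimal Python sketch of one possible design. It assumes the retrained model emits, for each frame, a class label, a confidence score, and an estimated distance in meters. The `Detection` class, the `process_detections` function, the 0.5 confidence threshold, and the 3-meter range are hypothetical choices for illustration, not the team's implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Detection:
    """Hypothetical per-object output: class label, confidence, distance (m)."""
    label: str
    confidence: float
    distance_m: float


# Hypothetical class set, based on the indoor objects listed in Part B.
INDOOR_CLASSES = {"sofa", "table", "chair", "trash bin", "shelf"}


def process_detections(
    detections: Iterable[Detection],
    min_confidence: float = 0.5,   # assumed threshold, not tuned
    max_distance_m: float = 3.0,   # assumed alert range, not tuned
) -> List[str]:
    """Filter raw detections and return short alert strings, nearest first."""
    # Drop unknown classes, low-confidence hits, and objects out of range.
    kept = [
        d for d in detections
        if d.label in INDOOR_CLASSES
        and d.confidence >= min_confidence
        and 0.0 < d.distance_m <= max_distance_m
    ]
    # Nearest objects are the most urgent, so announce them first.
    kept.sort(key=lambda d: d.distance_m)

    # Keep only the nearest instance of each label to avoid repeated alerts.
    seen = set()
    alerts = []
    for d in kept:
        if d.label in seen:
            continue
        seen.add(d.label)
        alerts.append(f"{d.label}, {d.distance_m:.1f} meters")
    return alerts


if __name__ == "__main__":
    frame = [
        Detection("chair", 0.91, 1.2),
        Detection("table", 0.40, 0.8),      # dropped: low confidence
        Detection("chair", 0.85, 2.5),      # dropped: farther duplicate
        Detection("trash bin", 0.77, 2.1),
        Detection("person", 0.95, 1.0),     # dropped: not in the class set
        Detection("sofa", 0.88, 4.0),       # dropped: beyond the range
    ]
    print(process_detections(frame))
```

Sorting by distance and announcing only the nearest instance of each label is one way to keep the audio or vibration output short; whether the real processor should behave this way is still an open design choice.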

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

One change has been made to the existing design of the system: the upgrade from YOLOv5 to YOLOv9. This change is necessary to improve the accuracy of object recognition and to mitigate the risk of the model no longer being supported in the future. It also reduces the time needed to integrate a distance estimation feature, since we can build on an open-source project that already combines this model with that feature.

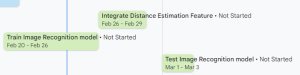

Provide an updated schedule if changes have occurred.

By 03/11, we will finish retraining the YOLOv9 model with the distance estimation feature. By 03/15, we will finish implementing the data processor, so that testing can be done by 03/16.

Please write a paragraph or two describing how the product solution you are designing will meet a specified need…

Part A (written by Josh): … with consideration of global factors.

Our product is designed with a global setting in mind. The navigation aid has a simple structure that is worn around the neck, so it is easy to put on. The device has only two buttons, which control all alert and object recognition settings, so visually impaired users can operate it without technical expertise and without needing sight. The only requirement is learning what each button does, and we delegate teaching this to the user's helper.

Another global factor we considered is that the device outputs its results in English. Because English is one of the most widely used languages in the world, the product can be used not only by people in the United States but also by anyone who knows the English names of common indoor objects.

Part B (written by Shakthi): … with consideration of cultural factors.

The product solution considers cultural factors by focusing on indoor items that are common across many cultures. The design takes into account items such as sofas, tables, chairs, trash bins, and shelves, which can be found in most indoor settings. Furthermore, as mentioned in Part A, the device identifies items in English, so people in cultures where English is a first or second language can easily use it.

Most importantly, the device aims to have a positive impact on the community of visually impaired people. Its goal is to give them the confidence to move around indoor spaces safely. Based on interviews with several blind people, the design addresses common struggles and challenges they face in daily life. We hope our product can help strengthen the relationship between people with visual impairments and people without them.

Part C (written by Meera): … with consideration of environmental factors.

The product solution considers environmental factors by helping users dispose of waste properly. A trash bin is one of the indoor objects in the dataset, so users can tell when a bin is in front of them. This encourages visually impaired users to put their trash in the bin.

Furthermore, the navigation device uses a rechargeable battery, which reduces the amount of material that ends up as waste over the device's lifetime. In addition, we are connecting the sensor, vibration motor, and logic level converter to the PCB with headers and jumper wires instead of soldering them directly, so that we can reuse these components if the PCB needs to be redesigned. We avoid disposable components as much as possible to minimize harm to the environment.