Accomplishments

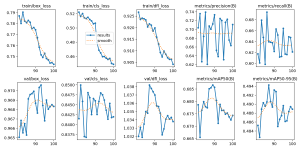

My model finished training, and last Sunday Simon and I went to Giant Eagle to film some test videos. However, running my throughput code on the footage showed that the model performs very poorly on live video: it did not recognize a single item. I then trained on a second dataset for 100 epochs, and the results are below:

Even after training on the second dataset, the accuracy on live video is still quite poor. Giant Eagle's conveyor belts also carry advertisements and a printed design, which could have caused the accuracy to drop. The image below shows only one thing being recognized as an object, which is far too inaccurate for what we want to do.

Therefore, I started working on edge detection to see whether there is a more accurate way to count items and calculate throughput. Below are my results from trying to remove noise using Gaussian blur and Canny edge detection. The words printed on the belt still show up in the output, which suggests that this approach will be very difficult to continue with as well.
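As a rough illustration of how an edge map could feed the throughput count, here is a minimal sketch, not the code I am actually running. It assumes each frame has already been reduced to a single number: the fraction of edge pixels inside a fixed region of the belt. The function names and the `on`/`off` thresholds are hypothetical and would need tuning on real footage.

```python
def count_items(edge_fraction, on=0.08, off=0.03):
    """Count items passing a fixed region of the belt.

    edge_fraction: per-frame fraction of edge pixels in the region (0.0 to 1.0).
    on / off: hypothetical hysteresis thresholds. An item starts when the
    signal rises to `on` and ends once it falls back to `off` or below.
    """
    count = 0
    inside = False
    for value in edge_fraction:
        if not inside and value >= on:
            inside = True   # rising edge: a new item entered the region
            count += 1
        elif inside and value <= off:
            inside = False  # the item has left the region
    return count


def throughput(edge_fraction, fps, on=0.08, off=0.03):
    """Items per second over the whole clip."""
    if not edge_fraction or fps <= 0:
        return 0.0
    duration_s = len(edge_fraction) / fps
    return count_items(edge_fraction, on, off) / duration_s


# Made-up signal: two bursts of edges separated by a mostly empty belt.
signal = [0.01, 0.02, 0.10, 0.12, 0.05, 0.02, 0.01, 0.09, 0.11, 0.02]
items = count_items(signal)
rate = throughput(signal, fps=5)
```

The two thresholds are there so that a constant level of edges from the belt itself is not counted as an item, but that only works if the printed text contributes fewer edges than a real item does, which my results so far do not guarantee.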

In terms of interim demo progress, I will pick up an RPi and cameras as soon as they are available and work on getting the RPi running with two cameras.

Progress

I am still a bit behind schedule. Tomorrow I will try to get the software-side integration done so that we have something to demo. As for throughput, if I can’t make significant progress by training for more epochs or with edge detection, I will need to pivot to adding pictures of items on conveyor belts, taken at Salem’s Market, to the training dataset.