This week our team continued working towards the full integration of our system. We kept running tests that let our different modules interact with each other, and in doing so we identified several edge cases that neither our object detection models nor our physics models accounted for. Much of this week went into handling those edge cases. We also made substantial improvements to the accuracy of our cue stick and wall detection models.

On the computer vision side of our project, our team made significant progress on cue stick detection and wall detection. After testing out different detection methods, Andrew built a much more accurate cue stick detection model. Although the model is quite accurate in detecting the cue stick’s position and direction, the cue stick is not detected in every frame captured by our camera. Andrew will continue to address this in the coming week, since our physics model’s calculations need cue stick data to be available at all times. Tjun Jet was able to detect the walls of the table with much better accuracy using an image masking technique. More accurate wall positions should in turn improve the accuracy of our trajectory predictions.

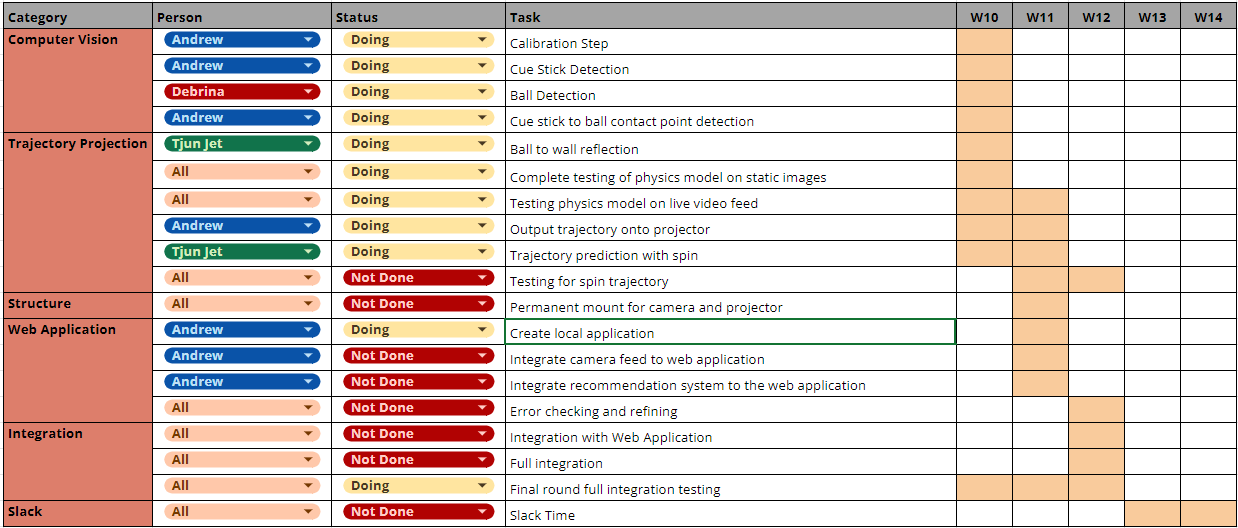

The most significant risk for this project right now is that we did not get our output line working this week as we had intended. Initially, the output line seemed to work when we tested it on a single image. However, during integration with the video, we realized that there were many edge cases we had not considered. One factor we didn’t account for was the direction of the trajectory lines. In our backend, we represent a line using two points on it; however, some of our functions need to distinguish between the starting point and the ending point of the trajectory. This is therefore an additional detail that we will standardize across our systems. Another edge case was ensuring that the tip of the cue stick lies within a certain distance of the cue ball before we project the line. Further, the accuracy of the cue stick trajectory is heavily affected by the kind of grip one uses to hold the stick. There were also other edge cases to account for when calculating the ball’s reflection off the walls. As a result, a lot of our code had to be rewritten to handle these edge cases, putting us slightly behind schedule on the physics calculations.
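To make these edge cases concrete, here is a minimal Python sketch of how they could be handled. It is an illustration under our own assumptions, not our actual backend code: the names `Trajectory`, `tip_near_cue_ball`, and `reflect`, as well as the `max_gap` threshold, are hypothetical. It shows a line stored with an explicit start and end, a check that the cue tip is close enough to the cue ball, and the standard mirror reflection of a direction about a wall normal.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Trajectory:
    """A directed segment: `start` and `end` are not interchangeable."""
    start: Point
    end: Point

    def direction(self) -> Point:
        """Unit vector pointing from start to end."""
        dx = self.end[0] - self.start[0]
        dy = self.end[1] - self.start[1]
        length = math.hypot(dx, dy)
        if length == 0:
            raise ValueError("start and end coincide; direction is undefined")
        return (dx / length, dy / length)


def tip_near_cue_ball(tip: Point, ball_center: Point,
                      ball_radius: float, max_gap: float) -> bool:
    """True when the cue tip is within max_gap of the cue ball's surface.

    max_gap is a hypothetical tuning threshold, in the same units as the
    coordinates (for example, pixels).
    """
    gap = math.dist(tip, ball_center) - ball_radius
    return gap <= max_gap


def reflect(direction: Point, wall_normal: Point) -> Point:
    """Mirror a direction about a wall with unit normal n: r = d - 2(d.n)n."""
    dot = direction[0] * wall_normal[0] + direction[1] * wall_normal[1]
    return (direction[0] - 2 * dot * wall_normal[0],
            direction[1] - 2 * dot * wall_normal[1])
```

Storing the start and end as named fields means that swapping them gives the opposite direction, so any function that cares about where the trajectory begins can rely on that convention. The reflection formula assumes an ideal bounce with no spin or energy loss, which is a simplification.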

One change we made this week is that Debrina and Tjun Jet swapped roles. This change came about because Debrina realized that she was not making much progress in improving the accuracy of the computer vision techniques, and Tjun Jet felt that it would be better to have a second pair of eyes on the physics model, as implementing it was non-trivial. Thus, Tjun Jet searched for more accurate methods for wall detection, and Debrina helped to find out what was wrong with the physics output line. Another reason for this swap is that integration efforts have begun, so our roles now overlap more than before. We also began meeting up and working together more often. As a result, our group mates now help solve each other’s problems more than they did before.