This week, our team continued integrating the different subsystems. Integration went smoothly at first, with the computer vision working across the various subsystems, but we ran into several edge cases in the physics implementation that required us to reimplement some of our functions, which I will go through in more detail in this report. Furthermore, the wall detection was not very accurate, so I helped implement a new method to improve it. Finally, since we realized that balls, walls, and pockets are not guaranteed to be detected in every frame, we decided to aggregate detections over 30 frames to fix their positions, which I will also elaborate on later.

Firstly, we realized that our wall reflection algorithm was not very accurate because it only handled walls that are perfectly horizontal or vertical. However, our detection model showed that this is not always the case, especially when the table is slanted relative to the camera. Hence, Debrina and I reimplemented the function that computes the reflection points on the walls, using geometry that also handles slanted walls. This reimplementation is not complete yet, and we hope to finish it during our meeting tomorrow.
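To illustrate the geometry involved, here is a minimal sketch of reflecting a shot line off a wall of arbitrary slope. It assumes each wall is a 2D segment between two pixel-coordinate endpoints and that the ball's path is a ray (origin plus direction). The function names (`ray_wall_intersection`, `reflect_direction`, `first_bounce`) are hypothetical and not taken from our codebase. The key idea is that the bounce uses the wall's unit normal `n`, so the reflected direction is `d' = d - 2(d·n)n`, which works for any wall angle rather than only horizontal or vertical ones.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Wall = Tuple[Point, Point]  # hypothetical representation: a wall as a segment between two endpoints


def ray_wall_intersection(origin: Point, direction: Point, wall: Wall) -> Optional[Point]:
    """Return where the ray origin + t*direction (t > 0) hits the wall segment, or None."""
    (ox, oy), (dx, dy) = origin, direction
    (ax, ay), (bx, by) = wall
    ex, ey = bx - ax, by - ay          # vector along the wall
    denom = dx * ey - dy * ex          # 2D cross product d x e
    if abs(denom) < 1e-12:
        return None                    # ray is parallel to the wall
    wx, wy = ax - ox, ay - oy
    t = (wx * ey - wy * ex) / denom    # distance parameter along the ray
    s = (wx * dy - wy * dx) / denom    # position along the wall, 0..1 inside the segment
    if t <= 1e-9 or not 0.0 <= s <= 1.0:
        return None                    # behind the ball, or outside the wall segment
    return (ox + t * dx, oy + t * dy)


def reflect_direction(direction: Point, wall: Wall) -> Point:
    """Mirror a direction vector across an arbitrarily slanted wall: d' = d - 2(d.n)n."""
    (ax, ay), (bx, by) = wall
    ex, ey = bx - ax, by - ay
    length = math.hypot(ex, ey)
    nx, ny = -ey / length, ex / length  # unit normal to the wall
    dot = direction[0] * nx + direction[1] * ny
    return (direction[0] - 2 * dot * nx, direction[1] - 2 * dot * ny)


def first_bounce(origin: Point, direction: Point, walls: List[Wall]) -> Optional[Tuple[Point, Point]]:
    """Find the nearest wall hit along the ray and the direction after bouncing off it."""
    best = None
    for wall in walls:
        hit = ray_wall_intersection(origin, direction, wall)
        if hit is None:
            continue
        dist = math.hypot(hit[0] - origin[0], hit[1] - origin[1])
        if best is None or dist < best[0]:
            best = (dist, hit, reflect_direction(direction, wall))
    return None if best is None else (best[1], best[2])
```

Calling `first_bounce` again from the returned hit point with the returned direction would trace further bounces; the small `t` threshold keeps the ray from immediately re-hitting the wall it just left.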

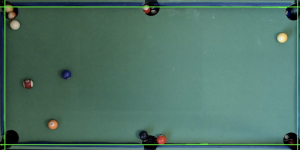

Next, we realized that our wall detections did not line up accurately with the walls of the pool table. Hence, I explored a different wall detection method. It first applies a mask that keeps only the green pixels, since green is the color of our pool table. Next, it runs Canny edge detection on the masked image to extract the edges. Finally, it keeps only the lines closest to horizontal and vertical, which correspond to the walls. This returns the four walls more accurately, which we can see in the photo below.
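The final filtering step could look roughly like the sketch below. It assumes the green mask and Canny edges have already been computed (for example with OpenCV's `cv2.inRange` and `cv2.Canny`) and that line segments were then extracted as `(x1, y1, x2, y2)` tuples, e.g. from `cv2.HoughLinesP`. The tolerance `tol_deg` and the rule of taking the outermost near-horizontal and near-vertical segments as the four walls are hypothetical choices for illustration, not necessarily what our implementation does.

```python
import math
from typing import Dict, List, Tuple

Segment = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixel coordinates


def angle_deg(seg: Segment) -> float:
    """Orientation of a segment in [0, 180) degrees; 0 is horizontal, 90 is vertical."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def mid_x(seg: Segment) -> float:
    return (seg[0] + seg[2]) / 2


def mid_y(seg: Segment) -> float:
    return (seg[1] + seg[3]) / 2


def pick_walls(segments: List[Segment], tol_deg: float = 10.0) -> Dict[str, Segment]:
    """Keep near-horizontal/near-vertical segments and take the outermost of each as a wall."""
    horizontal, vertical = [], []
    for seg in segments:
        ang = angle_deg(seg)
        if min(ang, 180.0 - ang) <= tol_deg:
            horizontal.append(seg)
        elif abs(ang - 90.0) <= tol_deg:
            vertical.append(seg)
        # anything else (e.g. the cue stick or diagonal edges) is discarded
    if len(horizontal) < 2 or len(vertical) < 2:
        raise ValueError("need at least two near-horizontal and two near-vertical lines")
    return {
        "top": min(horizontal, key=mid_y),     # image y grows downward
        "bottom": max(horizontal, key=mid_y),
        "left": min(vertical, key=mid_x),
        "right": max(vertical, key=mid_x),
    }
```

If the table is strongly slanted relative to the camera, the walls may exceed a fixed angle tolerance, so `tol_deg` would likely need tuning for the camera setup.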

However, one problem we faced was that the detections were not present in every frame. This can be seen in the photo below. Since the walls and pockets stay fixed throughout the game, and the balls do not move while they are stationary, we decided to add a “calibrate” button. When pressed, it collects detections over 30 frames and takes the median position of each wall. Since the walls are detected in most frames, this allows us to fix the positions of the walls, pockets, and balls whenever the user is not moving, instead of relying on a detection in every frame.
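A minimal sketch of this calibration step is shown below. It assumes each frame's detections arrive as a dictionary mapping a stable object name (for example a hypothetical `"top"` wall or `"pocket_0"`) to a tuple of coordinates, with missing detections simply absent from that frame's dictionary. The `min_hits` threshold is a hypothetical parameter. Taking the per-coordinate median, rather than the mean, means a single bad detection in the window does not drag the calibrated position.

```python
from statistics import median
from typing import Dict, List, Sequence, Tuple

Detection = Tuple[float, ...]  # e.g. (x1, y1, x2, y2) for a wall or (x, y) for a pocket

CALIBRATION_FRAMES = 30  # window size from the report


def calibrate(frames: Sequence[Dict[str, Detection]], min_hits: int = 15) -> Dict[str, Detection]:
    """Fix object positions by taking the per-coordinate median over the calibration window."""
    collected: Dict[str, List[Detection]] = {}
    for frame in frames[:CALIBRATION_FRAMES]:
        for name, det in frame.items():
            collected.setdefault(name, []).append(det)
    result: Dict[str, Detection] = {}
    for name, dets in collected.items():
        if len(dets) < min_hits:
            continue  # seen in too few frames to trust
        result[name] = tuple(median(coord) for coord in zip(*dets))
    return result
```

Balls would additionally need to be matched across frames to get stable names, which this sketch does not attempt.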

I am currently slightly behind schedule. I was expecting the physics calculations to work and project the output line flawlessly, since I had gotten it working on one image. However, I realized I had not accounted for many edge cases involving the cue stick’s orientation and the direction it is pointing. Our team will be working together to solve these issues over the next few days. Our team has been working really well together, consulting each other to brainstorm ideas and help solve each other’s problems. Thus, we hope to continue doing this and get the output line projected by Wednesday this week, so that we can move on to implementing our spin physics.