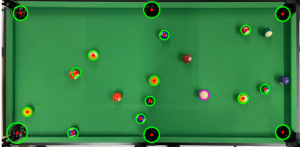

This week I was able to stay on schedule. I created documentation for the object detection models and updated some of their input and return parameters to better follow our new API guidelines. I also created a new implementation for detecting the cue ball and distinguishing it from the other balls. Previously, cue ball detection depended on the cue stick’s position, but we decided to base it on ball color instead. To do this, I filtered the image to emphasize the white regions in the frame, then performed a Hough transform to detect circles in the filtered image. Since this method worked quite well on our test images, in the coming week I plan to try a similar method for detecting the other balls on the table, with the goal of classifying the number and color of each ball.
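To illustrate the filter-then-Hough idea, here is a minimal, dependency-free Python sketch. It is not the project's actual code: the white thresholds, the assumption of a single known ball radius, the synthetic test frame, and every function name are hypothetical choices made for illustration. In practice a library such as OpenCV (for example `cv2.inRange` followed by `cv2.HoughCircles`) would be the more typical route.

```python
import math


def white_mask(image, min_value=200, max_spread=40):
    """Mark pixels that are bright and nearly colorless (assumed thresholds)."""
    return [
        [1 if min(px) >= min_value and max(px) - min(px) <= max_spread else 0
         for px in row]
        for row in image
    ]


def edge_points(mask):
    """Return (x, y) for mask pixels that have a 4-neighbor outside the mask."""
    h, w = len(mask), len(mask[0])
    points = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if not (0 <= nx < w and 0 <= ny < h) or not mask[ny][nx]:
                    points.append((x, y))
                    break
    return points


def hough_circle(points, width, height, radius, n_angles=72):
    """Each edge point votes for every center `radius` away; return the peak."""
    acc = [[0] * width for _ in range(height)]
    for x, y in points:
        cells = set()  # one vote per accumulator cell per edge point
        for k in range(n_angles):
            theta = 2 * math.pi * k / n_angles
            cx = round(x - radius * math.cos(theta))
            cy = round(y - radius * math.sin(theta))
            if 0 <= cx < width and 0 <= cy < height:
                cells.add((cx, cy))
        for cx, cy in cells:
            acc[cy][cx] += 1
    votes, cx, cy = max(
        (acc[y][x], x, y) for y in range(height) for x in range(width)
    )
    return cx, cy, votes


def detect_cue_ball(image, radius):
    """Filter for white, then run the circle vote. None if nothing is white."""
    points = edge_points(white_mask(image))
    if not points:
        return None
    return hough_circle(points, len(image[0]), len(image), radius)


def synthetic_frame(width=40, height=30):
    """Green felt with a white ball at (25, 12) and a yellow ball at (10, 20)."""
    balls = [((25, 12), (250, 250, 245)), ((10, 20), (255, 220, 0))]
    frame = [[(30, 120, 60)] * width for _ in range(height)]
    for (bx, by), color in balls:
        for y in range(height):
            for x in range(width):
                if (x - bx) ** 2 + (y - by) ** 2 <= 36:
                    frame[y][x] = color
    return frame


cx, cy, votes = detect_cue_ball(synthetic_frame(), radius=6)
print(f"cue ball center near ({cx}, {cy}) with {votes} votes")
```

Because the yellow ball never passes the white filter, it contributes no edge points and cannot attract votes, which is the main appeal of filtering by color before running the circle detection.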

In terms of the physical structure of our project, I worked with my team to set up a mount for the projector and camera and to perform some calibration so that the projection spans the entire surface of our pool table setup. Aside from the technical components of our project, I spent a decent amount of time working on the individual ethics assignment that was due this week.

Next week I hope to continue integrating the backend subsystems and to conduct testing. The object detection models will likely need recalibration and possibly modifications to better accommodate the image conditions of our setup, so I will spend time in the coming week on this. I also plan to create an automated calibration step in our backend. This step would detect the bounds of the walls of our pool table setup and the locations of the pockets, and would tune detection parameters based on the lighting conditions. It would let us recalibrate the system more efficiently at startup and yield more accurate detections.
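As one possible shape for the lighting part of that calibration step, the sketch below picks the "white" brightness threshold from the frame itself rather than hard-coding it. This is only an assumption about how such tuning could work, since the calibration step has not been built yet: the function names, the percentile, the margin, and the floor value are all hypothetical.

```python
def calibrate_white_threshold(image, percentile=99, margin=0.85, floor=120):
    """Estimate the minimum brightness that should count as 'white'.

    Uses each pixel's darkest channel, so saturated colors score low.
    """
    values = sorted(min(px) for row in image for px in row)
    index = min(len(values) - 1, len(values) * percentile // 100)
    reference = values[index]  # brightness of the whitest pixels in the frame
    return max(floor, min(255, round(reference * margin)))


def frame_with_ball(ball_value, size=20):
    """Synthetic frame: green felt with a small gray-to-white square 'ball'."""
    frame = [[(30, 120, 60)] * size for _ in range(size)]
    for y in range(8, 12):
        for x in range(8, 12):
            frame[y][x] = (ball_value,) * 3
    return frame


dim = calibrate_white_threshold(frame_with_ball(180))     # poorly lit table
bright = calibrate_white_threshold(frame_with_ball(250))  # well lit table
print(f"dim threshold: {dim}, bright threshold: {bright}")
```

Under dim lighting the white ball in this synthetic frame only reaches a brightness of 180, so a fixed cutoff of 200 would miss it, while the calibrated threshold drops low enough to keep it in the mask.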