This week was a lot of physical hardware work.

First, the encoder connections on the motors, which are required to drive the motors, are raw 28-gauge wire. I needed to fix that in order to interface them with the microcontroller that controls the motors. So, I soldered some spare DuPont connector wire on:

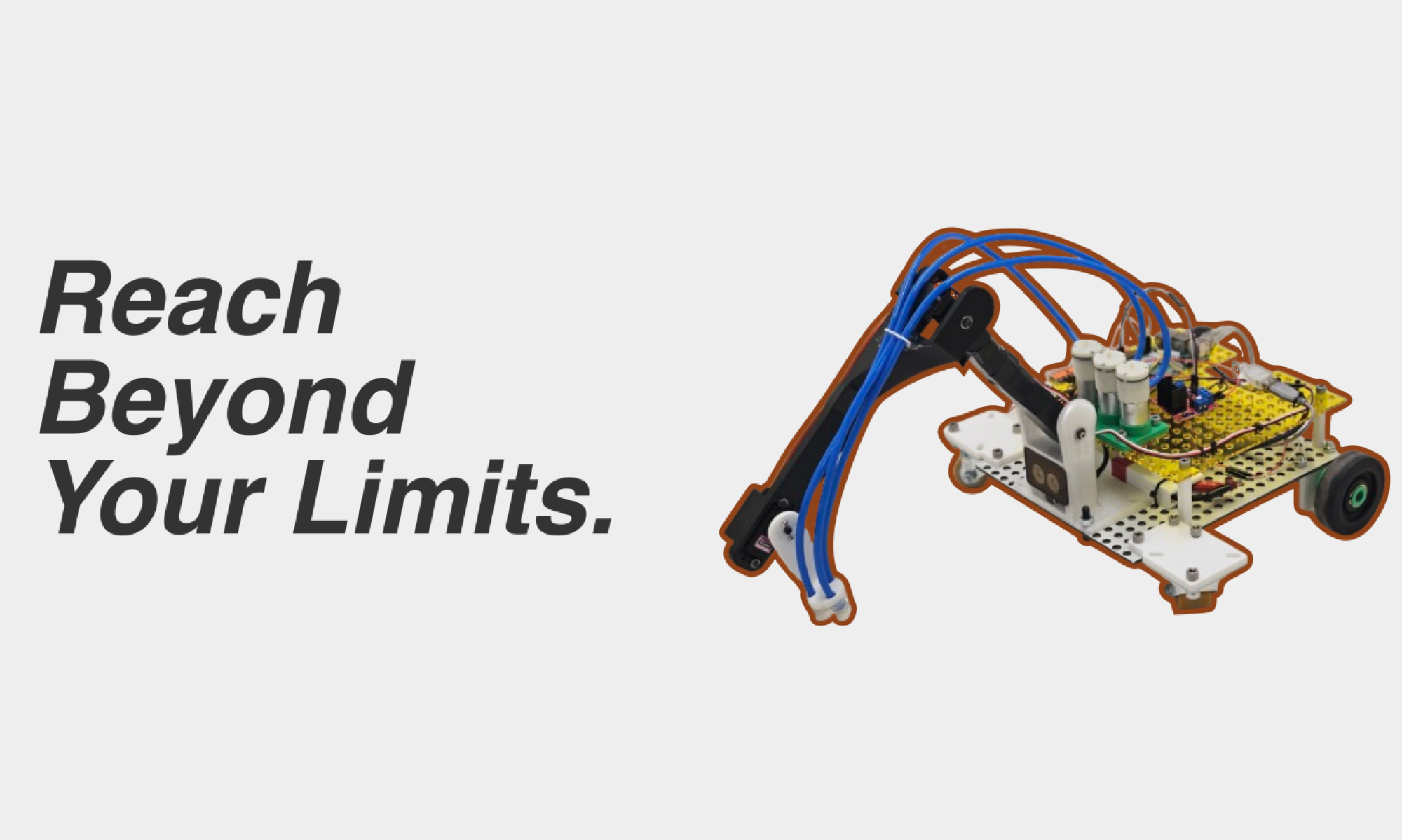

Once that was settled, the next task was to get the Raspberry Pi Pico to connect and drive the motors. Right? The Raspberry Pi Pico, right? Wrong. It turns out that the H-bridge driver that runs the motors cannot be driven with 3.3V logic. Pi Picos, being modern microcontrollers, output 3.3V logic, which is therefore not compatible with the driver. So, we switched to an Arduino Nano.

Unfortunately, the ones I could get my hands on quickly were the cheap off-brand ones that don’t come with a pre-flashed bootloader and that use a chip that does not interface out of the box with the Arduino software on Windows 11. After connecting an Arduino Uno to the Nano and flashing the bootloader onto the Nano, I was STILL unable to connect. The final culprit? Windows 11. I had to click the ‘Upload’ button in Arduino, then open up the Serial Monitor and make it the foreground window (for some reason??), and then, ONLY THEN, did the code upload. I found that neat little trick on a random Reddit thread. So, the motors moved, finally.

It was around this time that a fellow RoboClub member saw that I was using the 2S LiPo and cautioned heavily against using it without a fuse as a battery-protection circuit. Luckily, we have those lying around in RoboClub, so I grabbed one and began work on the fuse box. Here is what it looked like in the end.

That pair of wires took an embarrassingly long time to solder. Lastly, I designed and printed the box that goes around these wires, so that something like a stray Allen key can’t accidentally be dropped on the fuse and melt it unnecessarily.

With that all complete, I finally made the Rover wheels turn, safely this time. Link here: https://drive.google.com/file/d/1zICyOJkQBSxv6ApgS1hE1o7wqdp9SjWX/view?usp=sharing

The code for proper driving will be developed tomorrow, once we figure out an appropriate serial protocol to communicate actions across the entire control-to-Rover pipeline.
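We have not settled on the protocol yet, so the following is only a minimal sketch of what one option could look like, not what we will necessarily use. It assumes a hypothetical newline-terminated text frame of the form `D,<left>,<right>*<checksum>`, where the speeds are signed values in [-255, 255] and the checksum is a simple XOR of the payload bytes. The frame layout, the `D` command letter, and the function names are all placeholders I made up for illustration.

```python
def checksum(payload: str) -> int:
    """XOR of all payload bytes (hypothetical integrity check)."""
    value = 0
    for byte in payload.encode("ascii"):
        value ^= byte
    return value


def encode_drive(left: int, right: int) -> bytes:
    """Build a hypothetical drive frame such as b'D,120,-80*XX\\n'."""
    if not (-255 <= left <= 255 and -255 <= right <= 255):
        raise ValueError("speeds must be in [-255, 255]")
    payload = f"D,{left},{right}"
    return f"{payload}*{checksum(payload):02X}\n".encode("ascii")


def decode_drive(frame: bytes) -> tuple[int, int]:
    """Parse a drive frame, raising ValueError if it is malformed."""
    text = frame.decode("ascii").strip()
    payload, _, received = text.partition("*")
    # Reject frames with a missing or mismatched checksum.
    if not received or int(received, 16) != checksum(payload):
        raise ValueError("bad checksum")
    kind, left, right = payload.split(",")
    if kind != "D":
        raise ValueError("unknown command")
    return int(left), int(right)
```

A text frame like this is easy to read in the Serial Monitor while debugging, and the checksum lets the receiving side drop a corrupted command instead of acting on it. The encoded bytes could be written to the port with a serial library on the Pi side; the Nano would need a matching parser.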

With that done, it was time to turn to how we could make the Rover Raspberry Pi receive commands to drive the Nano. Hayden had already figured out a way to use his existing Nano to send signals over Serial to his laptop. We re-flashed the Raspberry Pi 4B to use a lighter OS instead of Ubuntu, so it wouldn’t be lagging all the time. Once we did that, we were able to set up communication across the CMU-DEVICE network. We realized that, as it stood, the communication was much too laggy to meet our defined time requirement, so we wanted to see whether it was a hardware issue or a comm protocol issue. To test, I set up my laptop as the server socket side and registered it on CMU-DEVICE, and then we tested communication from Hayden’s laptop to mine. The latency instantly disappeared, so I think we’ll need a better Raspberry Pi 4B. (We ordered one with 2GB RAM but got 1GB, so we’ll need to figure that out.)
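For anyone wanting to put numbers on a test like this, here is a minimal sketch of a round-trip latency check over a TCP socket. It is not the code we actually ran; the function names and the echo-server approach are assumptions for illustration. One machine runs the echo server, the other sends small messages and times how long each one takes to come back. The example below runs both ends on localhost so that it is self-contained.

```python
import socket
import threading
import time


def serve_echo(server: socket.socket) -> None:
    """Accept one client and echo back everything it sends."""
    conn, _ = server.accept()
    with conn:
        while data := conn.recv(1024):
            conn.sendall(data)


def measure_rtt(host: str, port: int, samples: int = 20) -> list[float]:
    """Return round-trip times in milliseconds for small messages."""
    rtts = []
    with socket.create_connection((host, port)) as sock:
        # Disable Nagle's algorithm so small messages are sent immediately.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for i in range(samples):
            message = f"ping {i}\n".encode("ascii")
            start = time.perf_counter()
            sock.sendall(message)
            received = b""
            # TCP is a byte stream, so keep reading until the full echo arrives.
            while len(received) < len(message):
                chunk = sock.recv(1024)
                if not chunk:
                    raise ConnectionError("server closed the connection")
                received += chunk
            rtts.append((time.perf_counter() - start) * 1000.0)
    return rtts


if __name__ == "__main__":
    # Local demo: port 0 asks the OS for any free port.
    server = socket.create_server(("127.0.0.1", 0))
    port = server.getsockname()[1]
    threading.Thread(target=serve_echo, args=(server,), daemon=True).start()
    rtts = measure_rtt("127.0.0.1", port)
    print(f"median RTT: {sorted(rtts)[len(rtts) // 2]:.3f} ms")
```

Running the server half on the Pi and then on a laptop, with the same client and the same network, would give comparable numbers for each and make it easier to tell a hardware bottleneck from a protocol one.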

All in all, a very productive week. We are much less behind, and at the rate we are finally able to work, we are targeting Wednesday to drive the Robot AND control the Servos. (We’re targeting driving for Monday, which is definitely possible.) By next week, I want a clean implementation of all of the electronics for the Rover drive and pickup capability. The next target after that is to finish encoder integration, after which we can finally finish the camera integration. I still want at least two weeks for full-on testing and tuning, and I think on this timeline we are still set to do that. Lots of work to be put in this week.