This week, I accomplished two main tasks. First, I edited the depth pipeline so that it can finally use the camera's ColorCamera capability. Specifically, using the same MobileNet framework, I made the two StereoDepth inputs come from the color camera, and I now use the color camera both as input to the spatial network pipeline and for display. The updated code can be seen here: https://github.com/njzhu/HomeRover/blob/main/depthai-tutorials-practice/1-hello-world/depth_script_color.py

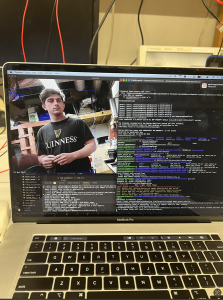

Additionally, the photo above demonstrates this color functionality. We can see a bounding box around Hayden, demonstrating that the pipeline is able to make detections. A closer glimpse into the code can be seen here: https://drive.google.com/file/d/1MTEEsWslS3K_79CAxSCOV2DoH274E5bl/view?usp=sharing

This photo shows how the pipeline is linked together, starting from the color cameras, going through stereo depth, and ending at the spatial detection output.
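To make that linking concrete, here is a small plain-Python sketch of the node graph as I described it. It does not use the DepthAI API at all; the node labels (`color_left`, `stereo_depth`, `spatial_detection`, and so on) are hypothetical names chosen for illustration and are not the identifiers in the actual script. It only checks that the color inputs reach the detection output by way of stereo depth.

```python
from collections import deque

# Hypothetical labels for the pipeline stages described above.
# Each key links its output to the listed downstream nodes.
LINKS = {
    "color_left": ["stereo_depth"],          # first StereoDepth input
    "color_right": ["stereo_depth"],         # second StereoDepth input
    "color_preview": ["spatial_detection"],  # color frames fed to the network
    "stereo_depth": ["spatial_detection"],   # depth map fed to the network
    "spatial_detection": ["detections_out", "display_out"],
}


def reachable(links, start):
    """Return every node that can be reached from `start` (breadth-first)."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for downstream in links.get(node, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return seen


if __name__ == "__main__":
    for source in ("color_left", "color_right", "color_preview"):
        print(source, "->", sorted(reachable(LINKS, source) - {source}))
```

A check like this is only a sanity test of the wiring on paper; the real links are made on the device pipeline in the script linked above.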

The second main task I accomplished was putting a live camera feed onto a locally hosted website. To achieve this, I followed and adapted a Flask tutorial to set up the website, HTML pages, and routing actions. We plan on having the controller Pi access this website and show the feed on its display. One challenge, similar to a previous issue, involved multithreading and accessing variables across different files. To overcome this, I combined the code of my depth pipeline with the locking and unlocking required to update the frame that is displayed on the website. A video showing the live camera feed updating on the website can be found here: https://drive.google.com/file/d/1kDW8dthBEhdgHHi2DJDfmURngqmv2ULZ/view?usp=sharing
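The locking idea can be sketched roughly as follows. This is a minimal illustration under my own assumptions, not the code from the repository: `FrameBuffer`, `mjpeg_chunks`, and `fake_camera` are hypothetical names, the frames are placeholder bytes rather than real JPEG data, and the Flask app itself is left out so the sketch runs with only the standard library.

```python
import threading
import time


class FrameBuffer:
    """Holds the most recent encoded frame behind a lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None

    def update(self, jpeg_bytes):
        # Called by the camera/pipeline thread whenever a new frame is ready.
        with self._lock:
            self._frame = jpeg_bytes

    def latest(self):
        # Called by the web thread; the lock prevents reading mid-update.
        with self._lock:
            return self._frame


def mjpeg_chunks(buffer, max_frames=None, poll_interval=0.01):
    """Yield multipart chunks, each wrapping the latest frame in the buffer."""
    sent = 0
    while max_frames is None or sent < max_frames:
        frame = buffer.latest()
        if frame is None:
            time.sleep(poll_interval)  # no frame yet, wait for the camera
            continue
        yield b"--frame\r\nContent-Type: image/jpeg\r\n\r\n" + frame + b"\r\n"
        sent += 1
        time.sleep(poll_interval)  # avoid spinning between frames


def fake_camera(buffer, count):
    """Stand-in for the depth pipeline loop: pushes placeholder frames."""
    for i in range(count):
        buffer.update(b"jpeg-%d" % i)
        time.sleep(0.001)


if __name__ == "__main__":
    shared = FrameBuffer()
    producer = threading.Thread(target=fake_camera, args=(shared, 5))
    producer.start()
    for chunk in mjpeg_chunks(shared, max_frames=3):
        print(len(chunk), "bytes")
    producer.join()
```

In a Flask app, a route would typically return a generator like this wrapped in `Response(..., mimetype="multipart/x-mixed-replace; boundary=frame")`, so the browser replaces the image each time a new chunk arrives. Keeping the frame and its lock in one object also avoids sharing bare global variables across files.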

After this week, I believe my progress is on schedule. A point of worry from last week was the ability to broadcast the live camera feed to the user, but a major component of that functionality was figured out today.

In the coming week, I hope to fully finalize the rover as the culmination of our combined effort over the semester. I also hope to finalize all the requisite documents together with the team.