This week saw significant progress on the main control loop of the pickup mechanism. Last week I planned how we would align the arm with items to pick up, and this week I implemented that plan. The system works as follows. When I receive the array of detections produced onboard the camera, I first check whether each detection's z distance (its depth from the camera) falls within our desired pickup range, which tells us whether an item is close enough to pick up. I then run a series of x-coordinate checks to determine whether that object lies within a horizontal threshold of the frame's center. The value of this threshold has not been decided yet. If the object appears on the left side of the frame, meaning the camera is leaning to the right, the program prints “Turn Right”, and vice versa for an object on the right side of the frame.

For now, this print statement stands in for a signal to the arm controller code. That connection has not been set up yet, but the underlying infrastructure is in place, which should make it easier to add. The x-coordinate threshold will be calibrated once we run rover tests with the camera mounted in its intended location.
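The alignment check described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the actual implementation: the `Detection` record, the millimetre units, the pickup range and threshold values, the choice to act on the nearest in-range detection, and the "Aligned" result for a centered object are all hypothetical. The left/right mapping follows the convention stated above (object on the left of the frame gives "Turn Right"); whether a negative x value means "left" depends on the camera's coordinate frame and should be confirmed during rover tests.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Detection:
    """Hypothetical detection record; units are assumed to be millimetres."""
    label: str
    x: float  # horizontal offset from frame center (assumed: negative = left)
    z: float  # distance from the camera


# Placeholder values (assumptions); real values come from rover calibration.
PICKUP_Z_MIN_MM = 150.0
PICKUP_Z_MAX_MM = 400.0
X_THRESHOLD_MM = 30.0


def in_pickup_range(det: Detection,
                    z_min: float = PICKUP_Z_MIN_MM,
                    z_max: float = PICKUP_Z_MAX_MM) -> bool:
    """Return True if the detection's depth is within the pickup range."""
    return z_min <= det.z <= z_max


def alignment_command(det: Detection,
                      x_threshold: float = X_THRESHOLD_MM) -> Optional[str]:
    """Map one detection to a turn command, or None if it is out of range."""
    if not in_pickup_range(det):
        return None
    if det.x < -x_threshold:
        # Object on the left of the frame: mapping follows the report's convention.
        return "Turn Right"
    if det.x > x_threshold:
        # Object on the right of the frame.
        return "Turn Left"
    return "Aligned"  # hypothetical result when the object is centered


def process_detections(detections: Sequence[Detection],
                       send: Callable[[str], None] = print) -> Optional[str]:
    """Act on the nearest in-range detection and pass its command to `send`.

    `send` defaults to print; swapping in another function is one possible
    way to forward commands to the arm controller later.
    """
    candidates = [d for d in detections if in_pickup_range(d)]
    if not candidates:
        return None
    nearest = min(candidates, key=lambda d: d.z)
    command = alignment_command(nearest)
    if command is not None:
        send(command)
    return command
```

Keeping the decision in `alignment_command` separate from `send` means the threshold can be tuned during rover tests without touching the code that talks to the arm.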

A video showcasing this functionality is linked here: https://drive.google.com/file/d/1XJyA2q35H8Kpg9TzOHVndv2On-Wu5Cji/view?usp=sharing

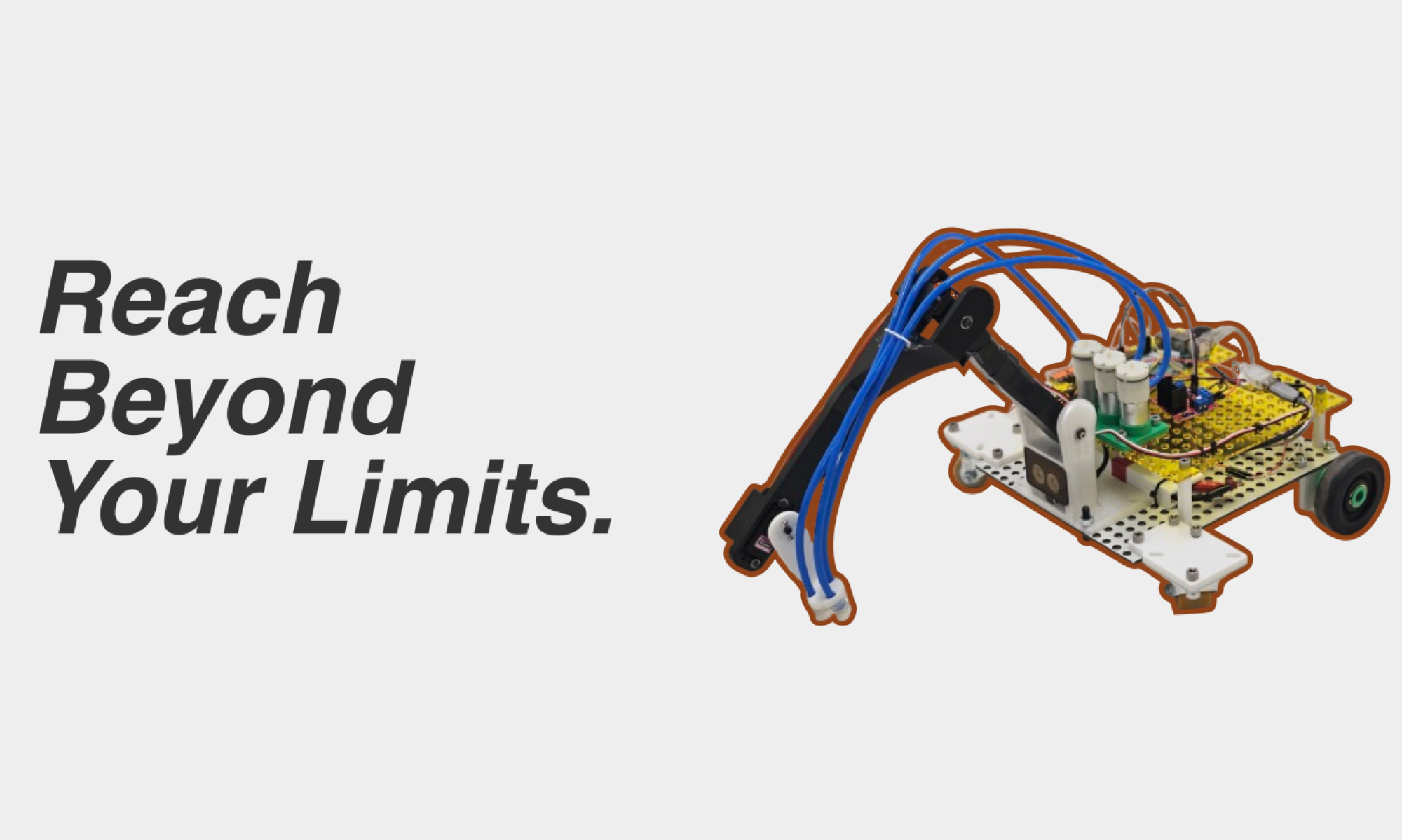

The photos that show this functionality can be seen here:

I also ran this code on the Raspberry Pi, and the program did not noticeably slow down after moving to the RPi. This makes me increasingly confident that our system will run successfully on the Raspberry Pi.
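One way to turn the "did not noticeably slow down" observation into a number is to time the loop on both machines. The helper below is a hypothetical sketch using only the standard library: `measure_rate` runs a step function a fixed number of times and reports the average iterations per second. Running it with the same step on the laptop and on the RPi would give directly comparable figures.

```python
import time
from typing import Callable


def measure_rate(step: Callable[[], object], n_iterations: int = 200) -> float:
    """Run `step` n_iterations times and return average iterations per second."""
    if n_iterations <= 0:
        raise ValueError("n_iterations must be positive")
    start = time.perf_counter()
    for _ in range(n_iterations):
        step()
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time on extremely fast steps.
    return n_iterations / elapsed if elapsed > 0 else float("inf")
```

For example, `measure_rate(lambda: process_detections(get_detections()))`, where `get_detections` is a hypothetical function that fetches the latest detections from the camera, would measure the full detection-to-command loop rather than the decision logic alone.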

With this week's implementation, my progress is back on schedule. To keep pace with the project schedule this coming week, I hope to integrate with the rover to determine the proper threshold for coordinating with the arm. I also hope to write the communication protocol with the arm controller code and, potentially, to receive inputs in an arm-controller program.