This week, Varun and I finalized the control loop and main function that determine how the rover detects an object and positions itself so that the kinematics can succeed. To reiterate, the process involves four steps:

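1) The user drives the rover until the object is in frame in front of it

2) The rover checks whether there is an object within the minimum distance

3) The rover tracks the object until the x distance from the object to the camera matches the x distance between the camera and the arm

4) The arm can then pick up the object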
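
As a rough illustration, the four steps above could be sketched as a small state machine. This is not the code in the repository: the names `Stage`, `Observation`, and `next_stage`, and the constants `MAX_DETECT_DEPTH_M`, `CAMERA_TO_ARM_X_M`, and `X_TOLERANCE_M`, are all hypothetical placeholders, and the numeric values are made up for the example.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Stage(Enum):
    USER_DRIVING = auto()   # step 1: user drives until the object is in frame
    DETECTING = auto()      # step 2: look for an object under the distance limit
    TRACKING = auto()       # step 3: align the object's x distance with the arm
    READY_TO_PICK = auto()  # step 4: hand off to the kinematics


@dataclass
class Observation:
    # Depth to the nearest object in metres, or None if nothing is seen.
    object_depth_m: Optional[float]
    # x distance from the object to the camera in metres, or None if unknown.
    object_x_m: Optional[float]


# Hypothetical values; the real thresholds depend on the rover's geometry.
MAX_DETECT_DEPTH_M = 0.5
CAMERA_TO_ARM_X_M = 0.10
X_TOLERANCE_M = 0.01


def next_stage(stage: Stage, obs: Observation, user_done_driving: bool) -> Stage:
    """Return the stage the control loop should be in after one observation."""
    if stage is Stage.USER_DRIVING:
        return Stage.DETECTING if user_done_driving else stage
    if stage is Stage.DETECTING:
        depth = obs.object_depth_m
        if depth is not None and depth <= MAX_DETECT_DEPTH_M:
            return Stage.TRACKING
        return stage
    if stage is Stage.TRACKING:
        if obs.object_x_m is None:
            return Stage.DETECTING  # lost the object, go back to detection
        if abs(obs.object_x_m - CAMERA_TO_ARM_X_M) <= X_TOLERANCE_M:
            return Stage.READY_TO_PICK
        return stage
    return stage  # READY_TO_PICK is terminal in this sketch
```

Keeping the transition logic in a pure function like this makes it possible to test the loop without the camera or the rover attached.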

Step 2 can be implemented in one of two ways: sweeping the frame to find a block that meets our minimum distance and then adjusting the x-value from there, or combining an object detection algorithm with depth measurements to detect the object on screen automatically. In the latter case, classification is not necessary, since our scope guarantees there will be only one object in our immediate radius.
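
To make the first option more concrete, here is a minimal sketch of a block sweep over a depth frame. It assumes the frame is available as a 2D list of depths in metres and that a reading of 0 means "no valid depth", which is common for depth cameras but is an assumption here. The function name `find_nearest_block` and its parameters are hypothetical, not taken from the repository.

```python
from typing import List, Optional, Tuple


def find_nearest_block(
    depth: List[List[float]], block: int, max_depth: float
) -> Optional[Tuple[float, float]]:
    """Sweep the frame in block x block tiles and return the closest tile.

    Returns (mean_depth, x_offset_px) for the tile with the smallest mean
    depth that is at or under max_depth, where x_offset_px is the tile
    centre's horizontal offset from the frame centre (negative means left).
    Returns None if no tile qualifies.
    """
    rows, cols = len(depth), len(depth[0])
    best = None  # (mean_depth, left_column) of the closest tile so far
    for top in range(0, rows - block + 1, block):
        for left in range(0, cols - block + 1, block):
            # Collect valid readings in this tile; 0 is assumed to be invalid.
            vals = [
                depth[r][c]
                for r in range(top, top + block)
                for c in range(left, left + block)
                if depth[r][c] > 0
            ]
            if not vals:
                continue
            mean = sum(vals) / len(vals)
            if mean <= max_depth and (best is None or mean < best[0]):
                best = (mean, left)
    if best is None:
        return None
    mean, left = best
    x_offset_px = (left + block / 2) - cols / 2
    return mean, x_offset_px
```

The returned pixel offset is the "x-value" that the tracking step would then drive toward its target. Averaging over a tile rather than taking a single pixel is a design choice in this sketch to reduce sensitivity to depth noise.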

The progress I have made on the code can be found at github.com/njzhu/HomeRover

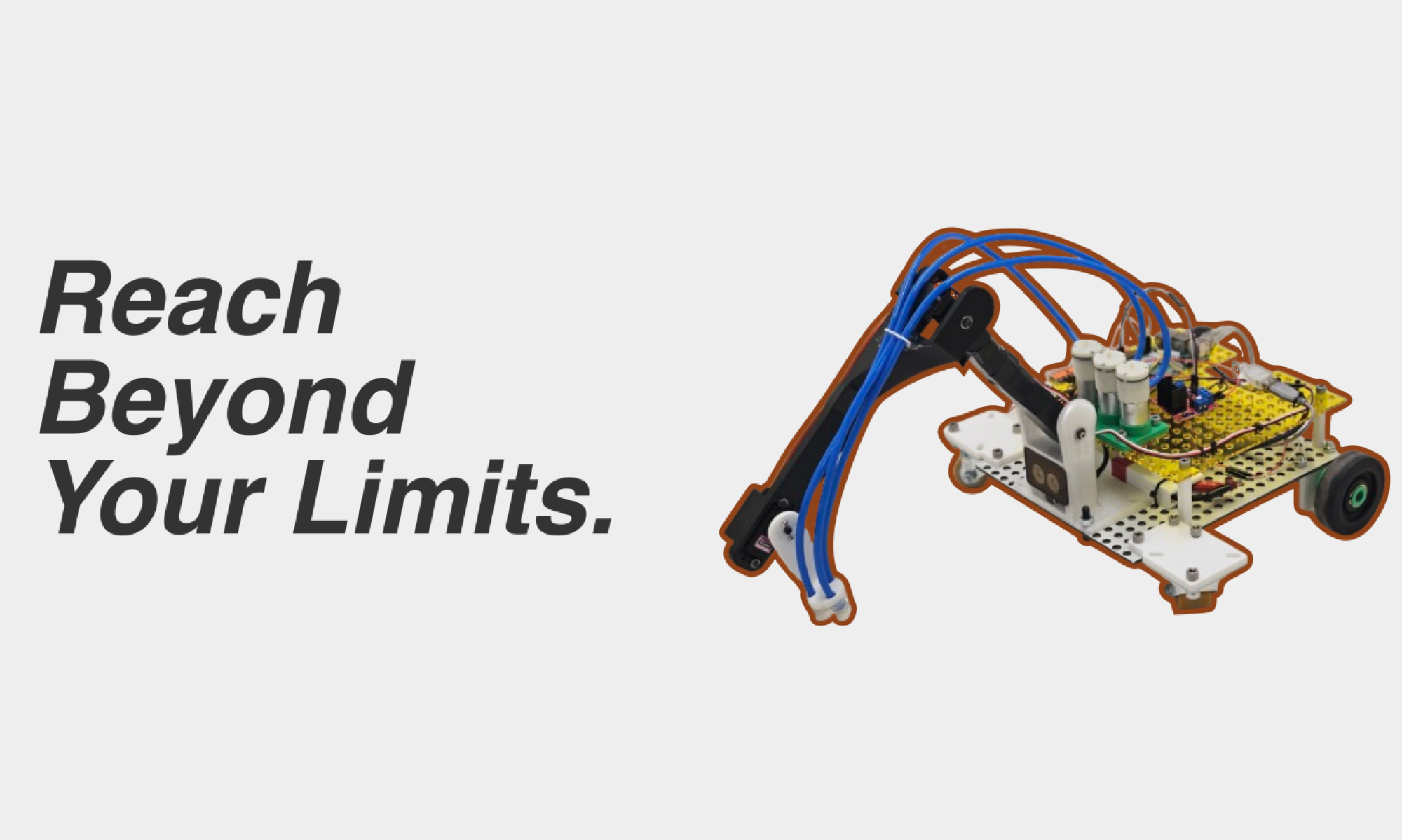

My progress is on track with the fabrication of the rover and the development of the kinematics scheme. To stay on this project schedule, I will coordinate closely with my teammates and define clear, explicit interfaces for passing information between modules so that integration goes smoothly.

In the next week, I hope to get a preliminary version of the control loop working and outputting the information that the other modules require.