Risk Mitigation

The main risk in our system is that voice commands may fail to parse. To mitigate this, we listed the supported commands that are guaranteed to work and built these instructions into a “help” command. The user can simply say “help” to hear sample commands and explore further from there.
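A minimal sketch of how such a help command might be wired up, assuming a hypothetical registry `SUPPORTED_COMMANDS` that maps each supported verb to sample phrases. The verbs, phrases, and function names below are illustrative assumptions, not the project's actual command list or code.

```python
# Hypothetical command registry: the verbs and sample phrases are
# illustrative assumptions, not the project's actual command list.
SUPPORTED_COMMANDS = {
    "record": ["record lunch 12 dollars today"],
    "change": ["change lunch to 10 dollars"],
    "remove": ["remove the last entry"],
    "view": ["view entries from last week"],
    "generate": ["generate a report for this month"],
}


def help_text() -> str:
    """Build the text read out when the user says "help"."""
    lines = ["You can say commands like:"]
    for samples in SUPPORTED_COMMANDS.values():
        lines.extend(f"- {sample}" for sample in samples)
    return "\n".join(lines)


def handle_utterance(text: str) -> str:
    """Route a transcribed utterance by its first word (the verb)."""
    words = text.strip().lower().split()
    if words == ["help"]:
        return help_text()
    if words and words[0] in SUPPORTED_COMMANDS:
        return f"Running '{words[0]}' command"
    # Anything unrecognized points the user back to "help".
    return "Sorry, I did not catch that. Say 'help' to hear supported commands."
```

Driving both the help text and the router from the same registry means every phrase the help command suggests is one the parser accepts.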

Design Changes

There are no changes to our design.

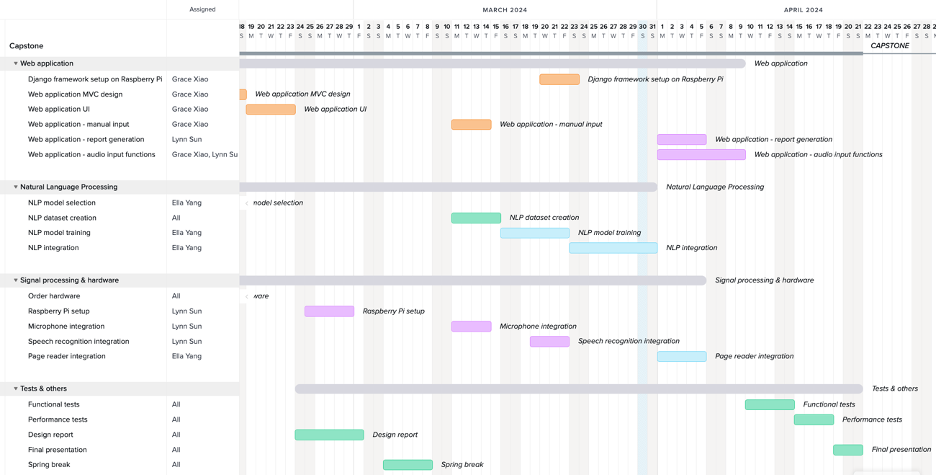

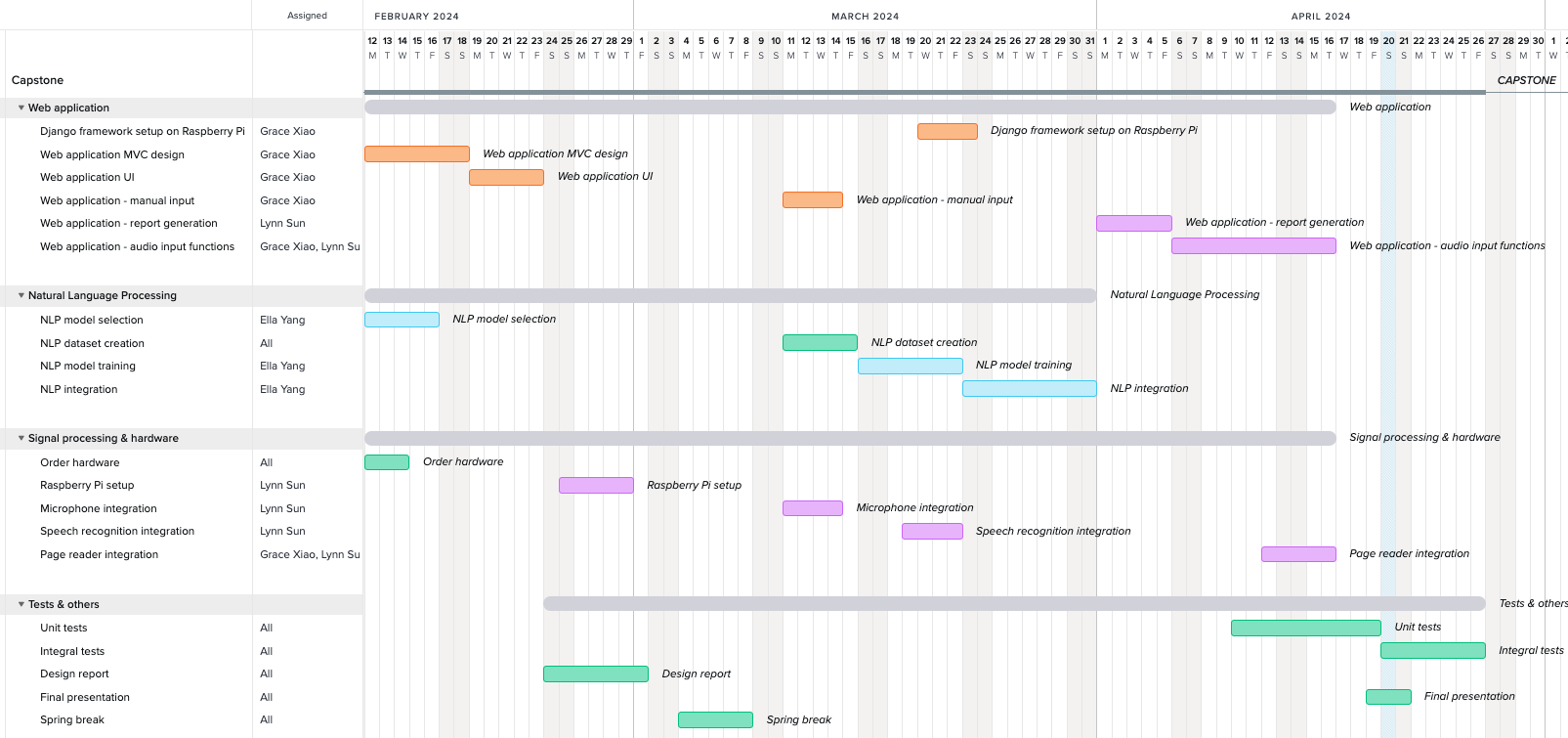

Updated Schedule

The updated schedule is reflected in the final presentation.

List of all tests

Unit tests

| Test | Expected Performance | Actual Performance |
| --- | --- | --- |
| Latency (voice command) | 4 seconds to render the page | Average of 4.52 seconds to render the page |
| Battery life | Consumes less than 50% of the battery with the monitor on for 1 hour | Battery drops from 100% to 77% (23% consumed) |
| Portability | 500 grams | 500 grams |
| Accessibility | 32% of the right half of the screen | 35.45% of the right half of the screen |
| Noise reduction | 90% accuracy in the integration test in a 70 dB environment | 9 of 10 commands work as expected (90% accuracy) |

| Test | Expected Performance | Actual Performance |
| --- | --- | --- |
| Audio to text | Word error rate (WER) below 20% | 98.3% accuracy; edge cases exist |
| Text to command (NLP) | Identify the verb with 100% accuracy; identify item name, money, date, and number with 95% accuracy; NLP processing takes less than 3 seconds | Identifies verb, money, date, and number with 100% accuracy; identifies item name with 96% accuracy; NLP processing takes about 2.5 seconds |
| Item classification (GloVe) | 90% of item names correctly classified | 18 of 20 item names correctly classified (90% accuracy) |
| Voice response | Every voice request receives a spoken response | The app reads out the content of the response (entries, report, etc.) |
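The Audio to text target is stated as a word error rate. WER is the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the recognizer's output, divided by the number of reference words; word accuracy is sometimes reported as 1 − WER, though we assume rather than know that this is how the 98.3% figure was computed. A minimal sketch of the standard dynamic-programming computation (the function name is our own):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("record lunch twelve dollars", "record launch twelve dollars")` is one substitution over four reference words, or 0.25, which would miss a 20% target. Note that insertions can push WER above 1.0, so it is not simply the complement of an accuracy percentage.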

System tests

User Experience Test: Each of the 5 volunteers spends half a day using the product without our intervention, then gives feedback on what they like and dislike about it.

Overall Latency: Test a range of commands (e.g., record/change/delete entry, view entry, generate report). Expect less than 15 seconds from pressing the button to rendering the page for every command.

Overall Accuracy: Expect >95% accuracy for the whole system.
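The two system-level criteria can be checked together by timing each command end to end and comparing its outcome with the expected one. A minimal sketch, assuming a hypothetical `execute` hook that runs one command from button press to rendered page and returns an outcome label; the names and data layout are illustrative, not the project's test code:

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class TrialResult:
    command: str
    latency_s: float
    correct: bool


def run_trials(
    commands: list[tuple[str, str]],
    execute: Callable[[str], str],
) -> list[TrialResult]:
    """Time each (utterance, expected_outcome) pair via the hypothetical hook."""
    results = []
    for utterance, expected in commands:
        start = time.perf_counter()
        outcome = execute(utterance)
        latency = time.perf_counter() - start
        results.append(TrialResult(utterance, latency, outcome == expected))
    return results


def summarize(results: list[TrialResult], max_latency_s: float = 15.0,
              min_accuracy: float = 0.95) -> dict:
    """Apply the <15 s (every command) and >95% (whole system) targets."""
    if not results:
        raise ValueError("no trials to summarize")
    accuracy = sum(r.correct for r in results) / len(results)
    worst = max(r.latency_s for r in results)
    return {
        "accuracy": accuracy,
        "worst_latency_s": worst,
        "latency_ok": worst < max_latency_s,    # every command must pass
        "accuracy_ok": accuracy > min_accuracy,
    }
```

Using the worst latency rather than the average matches the "for every command" wording; in practice `execute` would wrap the app's own entry point.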

Test Findings

Testing revealed several possible improvements to the user interface. The font could be larger for typical users. We support the “remove” command but not “delete”, yet the interface shows delete buttons, which could mislead users. The audio instructions are too long for some users to remember which commands are supported. We may need to tell users to follow the instructions strictly; otherwise, command accuracy will be very low. We will make the corresponding improvements before the final demo.
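One possible way to close the “delete”/“remove” gap is to map spoken synonyms onto the verbs the parser already supports before routing. A minimal sketch under that assumption; the alias table, verb set, and `normalize_verb` are hypothetical and not the team's planned fix:

```python
from typing import Optional

# Hypothetical alias table: maps spoken synonyms onto supported verbs so
# that "delete" (matching the on-screen delete buttons) acts like "remove".
VERB_ALIASES = {
    "delete": "remove",
    "erase": "remove",
    "edit": "change",
}
# Hypothetical set of verbs the parser accepts.
SUPPORTED_VERBS = {"record", "change", "remove", "view", "generate"}


def normalize_verb(utterance: str) -> Optional[str]:
    """Return the canonical verb for an utterance, or None if unsupported."""
    words = utterance.strip().lower().split()
    if not words:
        return None
    verb = VERB_ALIASES.get(words[0], words[0])
    return verb if verb in SUPPORTED_VERBS else None
```

Normalizing at this single point keeps the rest of the parser unchanged, and shorter audio instructions could then list only the canonical verbs.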