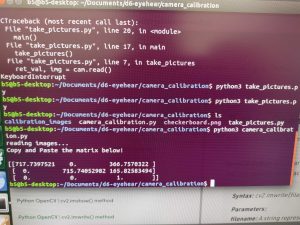

This week, I spent most of my time trying to build OpenCV with GStreamer support. I made a lot of small, avoidable mistakes, such as not fully uninstalling other OpenCV versions first, but everything ended up working out.

Once I got OpenCV installed and working with Python 3, I updated my previous code to work with the Jetson TX2’s included CSI camera. I calibrated the camera and used putText to overlay each detected person’s angle as text on the camera’s video output. It’s a little hard to see, but the image above shows the red text on the right side of the video.

There were a few oddities that I also spent time working out. Installing OpenCV with GStreamer changed the default VideoCapture backend for the webcam. Once I learned what was going on, the fix was simply to select the V4L backend explicitly when using the webcam. I also struggled to make the CSI camera output feel as responsive as the webcam. A tip online suggested setting the GStreamer appsink to drop old buffers, which seemed to help.
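The two fixes can be sketched roughly as follows. This is an assumption-heavy example rather than my exact setup: the pipeline uses the `nvarguscamerasrc` element (older JetPack releases used a different source element), and the resolution, frame rate, `max-buffers=1` setting, and function names are illustrative choices.

```python
def csi_pipeline(width=1280, height=720, fps=30, flip_method=0):
    """Build a GStreamer pipeline string for the Jetson CSI camera.

    The element names and caps are assumptions; adjust them to match
    the JetPack version and sensor actually in use.
    """
    return (
        "nvarguscamerasrc ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        "video/x-raw, format=BGRx ! videoconvert ! "
        "video/x-raw, format=BGR ! "
        # drop=true discards old buffers instead of queueing them,
        # which keeps the displayed frame close to real time.
        "appsink drop=true max-buffers=1"
    )


def open_csi_camera(**kwargs):
    """Open the CSI camera through the GStreamer backend."""
    import cv2  # imported here so csi_pipeline() is usable without OpenCV
    return cv2.VideoCapture(csi_pipeline(**kwargs), cv2.CAP_GSTREAMER)


def open_webcam(index=0):
    """Open a USB webcam, forcing V4L2 instead of the default backend."""
    import cv2
    return cv2.VideoCapture(index, cv2.CAP_V4L2)


if __name__ == "__main__":
    print(csi_pipeline())
```

Passing the backend as the second argument to `VideoCapture` avoids relying on whichever backend OpenCV picks by default after a GStreamer-enabled build.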

Once I got the CSI camera working reasonably, I began investigating how to use the IBM Watson Speech-to-Text API. I did not get very far this week beyond making an account and copying some sample code.

I believe that I am still on schedule for my personal progress. Our group progress, however, may be behind schedule since we have not yet gotten any form of beamforming fully working. I will try to continue making steady progress on my part of the project, and I will also try to support my team members with ideas for the audio processing portion.

Next week, I hope to have speech-to-text working and somewhat integrated with the camera system. I want to be able to overlay un-separated real-time captions onto people in the video. If I cannot get the real-time captions working, I want to at least overlay captions from pre-recorded audio onto a real-time video.