Link to Final Video: Google Drive Link

Team Status Report for 4-30

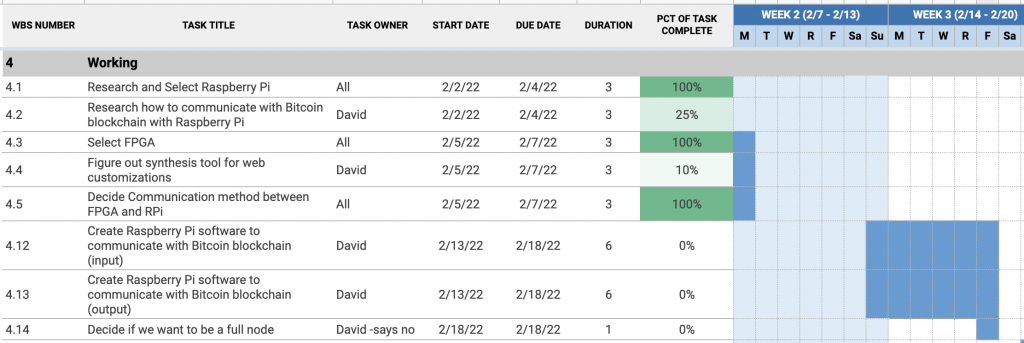

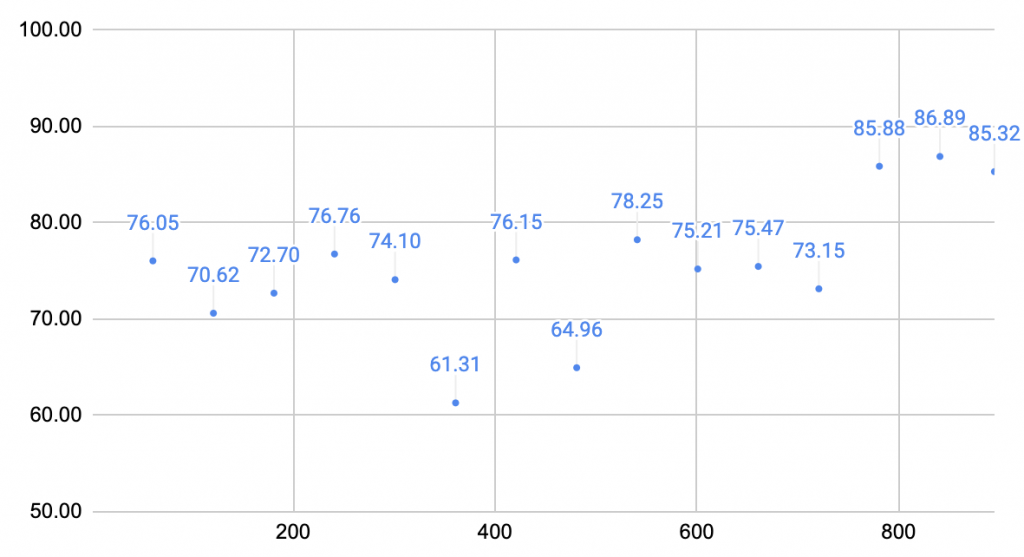

The accuracy measures in our presentation, including all of the graphs and other metrics, were made for 2 boards. The accuracy metrics have since been updated to cover different numbers of boards and more ways to improve the robustness of the switching algorithm. The algorithms tested are favoring equal split, linear peak, exponential peak, and equal splits. The accuracy numbers will continue to be analyzed to confirm the best state for our choosing algorithm.

Another risk in our project lies in the memory limitations of the FPGAs when mining Ethereum. Ethash is a memory-hard hashing algorithm, which means that it was designed to prevent ASICs and other specialized hardware from gaining an advantage over GPUs and other systems. Thus, the limited memory space on the FPGAs makes it difficult to mine Ethereum efficiently. Although ASICs that mine Ethereum do exist today, these boards are more expensive and more complex than the systems that we can create on the DE0-CV FPGA board. To mitigate this risk, we will attempt to store only the 16MB Ethereum cache, and if this also does not work, we will create multiple smaller-scale caches that provide the same functionality.

David’s Status Report for 4-30

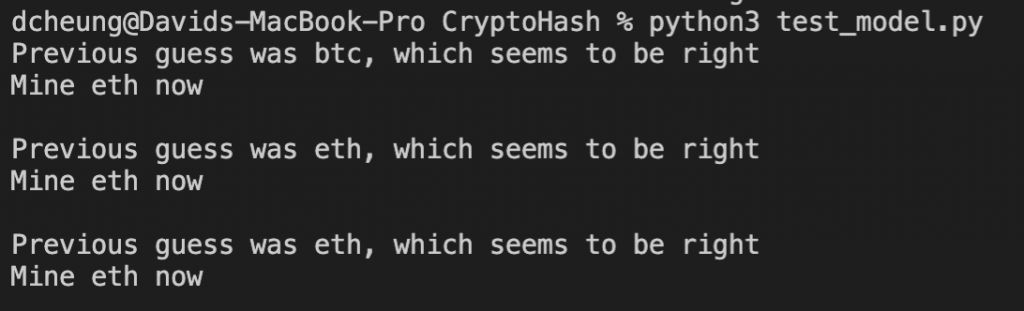

For the week, I had to present our final presentation. I worked on my portion of the slides, which included work left to be done and verification and testing. I asked my teammates to leave me some things to say so I could present their slides, but Lulu failed to include anything. Luckily, half of her slides were very similar to slides I had made for previous presentations, so I was able to come up with things to say on the spot, since she had not informed me that she left me with nothing. After seeing all of the interesting presentations that the other teams had put together, I focused on continuing my testing of the system. I ran longer tests to get a better grasp of the true accuracy (as closely as I can understand it) of the decision making process. I expanded my testing to include spreads of more than 2 FPGAs, including 6 boards and 10 boards, changing the scaling up process to 2-6-10 instead of 2-5-10. This made more sense as we can start from an equilibrium state regardless of the number of FPGAs. I also ran tests on the thresholds with which we decide to change configurations to improve the overall robustness of the system. I came up with different ways to make the algorithm more robust to sudden volatility spikes that may change the configuration and make the FPGAs reset unnecessarily. I also made plans with the team to work on the poster, but Lulu remains inactive, so I might have to work on that with William only. I am also still waiting for Lulu’s response to my request for a status update on the receiving code.
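One way to keep a brief volatility spike from triggering an unnecessary FPGA reset is to require that a proposed configuration persist for several consecutive decisions before it is applied. The sketch below only illustrates that idea and is not the thresholding that was actually tested; `SpreadDebouncer`, `min_board_delta`, and `patience` are hypothetical names and values.

```python
class SpreadDebouncer:
    """Apply a new board spread only after it has been proposed repeatedly.

    Hypothetical helper: a spread is the number of boards assigned to
    Bitcoin out of `total_boards`; the remaining boards mine Ethereum.
    """

    def __init__(self, total_boards, initial_btc_boards,
                 min_board_delta=1, patience=3):
        self.total_boards = total_boards
        self.current = initial_btc_boards
        self.min_board_delta = min_board_delta  # ignore smaller changes
        self.patience = patience                # consecutive proposals needed
        self._candidate = None
        self._streak = 0

    def update(self, proposed_btc_boards):
        """Return the spread to use after seeing one new proposal."""
        if abs(proposed_btc_boards - self.current) < self.min_board_delta:
            # Close enough to the current configuration: drop any pending change.
            self._candidate, self._streak = None, 0
            return self.current
        if proposed_btc_boards == self._candidate:
            self._streak += 1
        else:
            self._candidate, self._streak = proposed_btc_boards, 1
        if self._streak >= self.patience:
            # The proposal has been stable long enough to justify a reset.
            self.current = proposed_btc_boards
            self._candidate, self._streak = None, 0
        return self.current
```

Starting from an equal split (for example 3 of 6 boards on Bitcoin), a single spike proposing 5 boards leaves the configuration untouched, while the same proposal repeated `patience` times in a row is applied.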

In terms of my schedule, I am on time. I conducted tests and will analyze my data with better numbers from lessons I learned during my own presentation. Since Lulu is unresponsive, I can only try to keep helping William with the Ethereum miner implementation.

We also have to begin writing the final report in addition to finishing the poster.

Attaching a small sample of the many graphs I’ve generated and analyzed

Team Status Report for 4-23

The accuracy measure for the entire system has been changed. Initially, the accuracy measure was how often the predictor chose to mine the coin that had the higher price change. Accuracy is now measured based on switching and scaling up the system. The profitability metric is based on the expected revenue the mining pool will generate from the hash rate that our system provides. The largest risk with this change is that the mining pool’s revenue data is given in 24-hour increments, which is a much coarser granularity than the switching time that we are using for our system. This risk will be mitigated by keeping track of the different price points and using Gaussian noise to extrapolate the expected revenue. We can then create an expected revenue for what we believe to be ideal switching, with and without the 10 second switching.
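As a rough illustration of the extrapolation, a 24-hour revenue figure can be scaled down to a 10 second window and perturbed with Gaussian noise. This is a minimal sketch under assumptions: the function names and the relative standard deviation `rel_sigma` are hypothetical, and the real procedure also tracks price points, which are left out here.

```python
import random

SECONDS_PER_DAY = 24 * 60 * 60


def expected_revenue_per_window(daily_revenue, window_seconds=10):
    """Scale a 24-hour pool revenue figure down to one switching window."""
    return daily_revenue * window_seconds / SECONDS_PER_DAY


def simulate_window_revenues(daily_revenue, num_windows, rel_sigma=0.05,
                             window_seconds=10, seed=None):
    """Draw per-window revenues around the scaled mean with Gaussian noise.

    rel_sigma is a hypothetical relative standard deviation. Negative
    draws are clamped to zero because revenue cannot be negative.
    """
    rng = random.Random(seed)
    mean = expected_revenue_per_window(daily_revenue, window_seconds)
    return [max(0.0, rng.gauss(mean, rel_sigma * mean))
            for _ in range(num_windows)]
```

Summing the simulated windows for each coin under a given switching schedule gives one estimate of revenue that can be compared between an ideal schedule and the one the predictor produced.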

David’s Status Report for 4-23

For the week, I focused on the decision algorithm, where I started to play around with the weights and see how they affected the decision making. This is where the bulk of my time went. Different inputs have different weights in how they affect the spread. I also had to change our accuracy metric because the way we were measuring accuracy was flawed. At first we measured accuracy by considering the price changes and the price percent change, which gave our predictor an accuracy ranging from 80-94%. We are now changing the accuracy rating so that we scale up our mining rate and check the expected revenue from the mining pool. We also worked on the presentation that’s due Sunday. I worked on my slides and told the rest of the team to finish their slides by tonight so that we can meet tomorrow and prepare me for the presentation next week. The ticker used for all the data was also reverted. Using BUSD for the tickers didn’t fix the issue; instead, the fix was to keep the client inside all of the API call helper functions so that it would pull the most recent data on each call.

In terms of my schedule, I think that I’m relatively on time, I’m just still waiting on receiving to work so that I can more accurately have testing metrics. It is hard to have accurate metrics to present, but we have relative numbers that we can extrapolate and present.

I will continue to work with my teammates to implement Ethereum and finish fixing the receiving code. I know that Lulu has met with a TA regarding this issue and may have a workaround. Hopefully she can get this working soon.

David’s Status Report for 4-16

For the week, I focused on my parts in scaling the design up to support Ethereum mining. The communication packets are different and need to be stored differently, but everything should be working in that regard. There was an issue with the ETHUSDT ticker for the Binance API where the data was incorrect, so the tickers have since been updated to use Binance’s own stablecoin as the second coin in the ticker pairing. This should be enough for longevity unless Binance decides to remove support for their own stablecoin. The historical data comes in as kline data and is processed as such, but inference was not using kline data; it has since been changed to do so. This allows the added metric of transaction volume to be used in prediction. I have it set such that when outputting a spread, it will favor the coin with more transaction volume.
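To make the volume preference concrete, here is a minimal sketch that splits boards in proportion to traded volume. It assumes each kline is a row in the Binance layout (open time, open, high, low, close, volume, close time, quote asset volume, ...). Using the quote asset volume at index 7 so that the two coins are compared in the same currency, and the proportional rule itself, are assumptions rather than the weighting the predictor actually uses.

```python
def quote_volume(kline):
    """Return the quote-asset volume (index 7) of one Binance kline row."""
    return float(kline[7])


def volume_weighted_spread(btc_kline, eth_kline, total_boards):
    """Split boards between BTC and ETH in proportion to traded volume."""
    btc_vol = quote_volume(btc_kline)
    eth_vol = quote_volume(eth_kline)
    total = btc_vol + eth_vol
    if total == 0:
        # No volume information at all: fall back to an even split.
        btc_boards = total_boards // 2
    else:
        btc_boards = round(total_boards * btc_vol / total)
    return {"BTC": btc_boards, "ETH": total_boards - btc_boards}
```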

In terms of my schedule, I think I’m still overall behind, but in pace with the progress that the rest of my team has made. Most of the work I have to do now has to deal with testing and metric evaluation, which is hard to do without the rest of the project done.

I will continue to work with my teammates to get their parts done. I know that receiving from the FPGAs is still a work in progress that is very important to the communication, so I will focus my efforts on helping Lulu with that. I will also work on my own part, perhaps even trying different machine learning models if my team doesn’t require my help. This will also include finding more attributes to help prediction.

Team Status Report for 4-10

Following the demo, we have put together most of the system. The largest part missing is the receiving code that the Raspberry Pi runs to accept nonces from the FPGA. Lulu is responsible for this, but we will try to assist her in finishing this part as much as we can while we work on our own parts. We now have access to information about the trading volume so we can make our decision on that as well.

While SHA-256 is a well known hashing algorithm with plenty of documentation online on how to implement it in SystemVerilog, our other cryptocurrency choice, Ethereum, uses Keccak-256 which is less well-documented. There is a risk that we will have to pivot to using another cryptocurrency or devote more time into implementing Keccak-256 which takes time away from other aspects of the project. The Bitcoin miner which uses SHA-256 is close to completion but the Ethereum one is still being tested and debugged. If we cannot get it to work, we will try to find existing publicly available hardware implementations of Keccak-256 or choose a different cryptocurrency with a different hash algorithm.

David’s Status Report for 4-10

I have worked with my teammates to make sure that their modules were working as intended. With Lulu’s sending and receiving functions, I changed the code to match the clock that the FPGAs run on so that the communication is in sync. William and I debugged the sending part, and we currently have a working sending function whose data is received by the FPGAs. I have instructed her to finish up the receiving function, which should wait for the nonces from the FPGAs. William and I also found a way to get around the remote server idea by simply using GitHub, where we push and pull `mine.txt` regularly.

Aside from teamwork, I have found a way to get information from the Binance API that tells us the trade volume, which was one of the metrics Professor Kim recommended us to look into.

David’s Status Report for 4/2

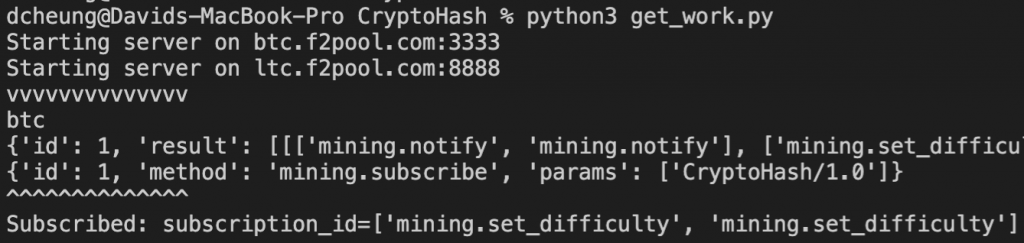

My focus for the week has been to figure out how exactly to link together all of my modules. Some components that I had were dependent on the Python version like using the Binance API or how the socket communication was configured. I resolved the version issues and changed the Miner so that it would connect to multiple mining pools at the same time. All information obtained from the mining pool is ready to be sent over, but I continue to wait for that part of the project to be completed. I have been able to link the decision process and communication with the mining pool together. There is a main miner object that connects to the different mining pools, currently 2 of them for different coins on different ports. Before this, it trains on the historical data to have its model ready for inference. After subscribing to the mining pools, it spawns jobs from their replies in threads so that the main thread can sleep every 10 seconds determining when to switch. Our way of switching has changed because of OS difficulties, so it currently outputs its prediction into a file to be transported to and fro remote servers. It waits for replies from the GPIO wires (waiting for this to be done) and sends this back to the mining pools.
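The main-thread loop described above can be sketched as follows. This is only an illustration: `predict` stands in for the trained model, the JSON file format is an assumption, and the pool-facing worker threads are omitted. The file is written to a temporary path and then renamed so that whatever reads it on the other side never sees a half-written prediction.

```python
import json
import os
import tempfile
import time


def write_prediction(path, spread):
    """Atomically write the predicted spread to `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as tmp:
        json.dump(spread, tmp)
    os.replace(tmp_path, path)  # rename is atomic on the same filesystem


def run_decision_loop(predict, path, ticks, interval=10, sleep=time.sleep):
    """Call predict() every `interval` seconds and publish the result."""
    for _ in range(ticks):
        write_prediction(path, predict())
        sleep(interval)
```

Passing `sleep` as a parameter keeps the loop testable without waiting for real 10 second intervals.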


I will continue to improve the decision process with suggestions from Professor Kim about using the number of transactions (trades) and integrating more real time data like number of shares found and difficulty. I will also try and see if I can find a way to funnel in the amount of money we get into the decision making process. I know the demo is coming up, so this may take a backseat as I try to help my other teammates to get back on track with what they’re doing, or perhaps figure out the details on the remote server communication.

Team Status Report for 4/2

The largest change in plans has to deal with configuration switching on the FPGAs. We can change the synthesized design on the boards via command line with Quartus Programmer, but Quartus only runs on x86 processors and the Raspberry Pi has an ARM processor. This means that the Raspberry Pi will not be able to tell the FPGAs to switch from one cryptocurrency to another. Our current solution to mitigate this risk is to upload a configuration file to a remote server accessible by both the Raspberry Pi and code running locally on one of our laptops. Using this, we can parse the remote file to reconfigure the FPGA boards. The design for this new solution has incurred an additional cost in the purchase of a ten-to-one USB hub so that all of the boards can be reconfigured from a central location.

The decision making process and the code that talks to the mining pool is largely complete. The decision tree can be heavily improved, but that will be put on the back burner for a little bit so that the entire project can come to life in time for the demo.

The most significant risk lies in the GPIO communication where we continue to wait on progress there.

David’s Status Report for 3-26

In terms of the Raspberry Pi, we still need to consult somebody on how to set it up properly on CMU Secure, but currently, we can work on it at home with an ethernet connection. I have since created a module to create the miner and client to communicate with the mining pools. There is a slight oddity with how bytes and strings are handled in Python 3, so the client is currently written for Python 2, where there isn’t as much of a distinction between bytes and strings, which makes it easier to understand the data that is sent to the mining pool and the data that we get back from the pool. In addition to giving work, the mining pool also gives the previous hash and changes to the difficulty. The last step now is to send the data and receive answers via the GPIO pins. The decision tree has also been updated to include another attribute, volatility, that is currently crudely calculated with an average threshold. Currently, I take the average of the differences between the high and low prices and divide it by the average of the open and close prices. This in some sense gives a metric of how volatile the price was relative to a generalized mean value. All of these mean values are averaged to get an overall threshold value that the get_data method will use to update the label generation. In addition, the decision tree inference currently conducts a majority vote at the node it traverses to, but the vote has now been changed such that it takes in external variables, which currently include shares found and difficulty. Future work involves expanding all of these. On Sunday and Monday in particular, I want to make the modules that hook up the system so that we can push to have a prototype that doesn’t necessarily have to mine yet, but can at least communicate, so that we can set up and hook up everything else during the week.
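One reading of the volatility calculation above, written out as a sketch: each candle’s high-low range is divided by the midpoint of its open and close, and those per-candle values are averaged into a single threshold. The function names are hypothetical and this is not the code inside `get_data`.

```python
def kline_volatility(open_price, high, low, close):
    """Range of one candle relative to the midpoint of its open and close."""
    mid = (open_price + close) / 2
    return (high - low) / mid


def volatility_threshold(candles):
    """Average per-candle volatility over (open, high, low, close) tuples."""
    values = [kline_volatility(o, h, l, c) for o, h, l, c in candles]
    return sum(values) / len(values)


def is_volatile(candle, threshold):
    """Flag a candle whose relative range exceeds the overall threshold."""
    return kline_volatility(*candle) > threshold
```

For a candle that opens and closes at 100 with a high of 110 and a low of 90, the metric is 20 / 100 = 0.2.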

Team Status Report for 3-26

The overall design remains constant, but the attributes and inputs to the decision tree continue to be changed. The communication between the RPI and mining pool seems to have been isolated and the link in the spotlight now is between the RPI and the FPGAs. We will begin to link up the entire system to test the communication modules to make sure that everything is ready to be hooked up. The largest risk here is just in the modules not working as intended and the management of the risk is exactly as we’re doing, preparing the system and testing it to make sure that it works before moving on.

We had planned on having the FPGA send the number of hashes it completed to the Raspberry Pi in order to get an accurate picture of what the system hash rate is. However, this component is at risk because the timing of when to send this information is still up in the air. We want to minimize all communication with the Raspberry Pi to critical information only. This way, the system is able to scale up to 10+ FPGAs. We intend to mitigate this risk by having the Raspberry Pi calculate this hash rate instead of it being transmitted. Each FPGA has a unique ID and each hashing module on the FPGA has a set numerical bound from which it iterates through nonces. We intend to write software that takes the correct nonce, deduces the bounds that this nonce is in, and calculates the number of hashes the system ran through to reach this nonce.
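A minimal sketch of that software, under stated assumptions: the 32-bit nonce space is divided evenly across all hashing modules in order of FPGA ID and module ID, each module iterates upward from its lower bound, and all modules advance in lockstep. The actual bounds in our design may be assigned differently, and every name here is hypothetical.

```python
NONCE_SPACE = 2 ** 32


def module_bounds(fpga_id, module_id, num_fpgas, modules_per_fpga):
    """Return the [start, end) nonce range owned by one hashing module."""
    total_modules = num_fpgas * modules_per_fpga
    width = NONCE_SPACE // total_modules
    index = fpga_id * modules_per_fpga + module_id
    start = index * width
    return start, start + width


def locate_nonce(nonce, num_fpgas, modules_per_fpga):
    """Deduce which (fpga_id, module_id) range a reported nonce falls in."""
    total_modules = num_fpgas * modules_per_fpga
    width = NONCE_SPACE // total_modules
    index = min(nonce // width, total_modules - 1)
    return divmod(index, modules_per_fpga)


def estimated_hashes(nonce, num_fpgas, modules_per_fpga):
    """Estimate system-wide hashes computed when this nonce was found."""
    fpga_id, module_id = locate_nonce(nonce, num_fpgas, modules_per_fpga)
    start, _ = module_bounds(fpga_id, module_id, num_fpgas, modules_per_fpga)
    per_module = nonce - start + 1  # hashes tried by the winning module
    # Lockstep assumption: every other module tried the same number.
    return per_module * num_fpgas * modules_per_fpga
```

Dividing the estimate by the time elapsed since the job was sent gives a hash rate without any extra traffic from the FPGAs.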

Team Status Report for 3-19

The hashing rate of the FPGA can be increased by having a greater number of hashing modules. However, with each Bitcoin module taking up a cell area of ~13,000 units, we are limited by the number of logic elements on the FPGA. If this is a problem for reaching our target hash rate of 5Mh/s, we will need to optimize the hashing module further. There are additional pipelining techniques that we can use to cut down on the idle time of the hashing module. Additionally, some parts of the block header are being hashed without any modifications. We can eliminate more than a billion redundant hashes by caching this somewhere.

The communication method between the FPGA and the web application has not been set and tested yet. As a mitigation plan, we will have the FPGAs send dummy data to the web app, and the web app will then display the data on the website to make sure the communication protocol between these two components is set.

The accuracy of the decision tree is not very high on preliminary tests, but this is with the framework of only 2 attributes both stemming from price changes in the past 10 seconds. The tree was also trained to be rather shallow with the hyperparameter being relatively low. This is only a short term risk and should be bettered later on when we introduce more attributes and deepen the tree to improve inference accuracy.

Caching part of the block header hash will affect how the Raspberry Pi communicates with the FPGA. If we decide to implement this change, it wouldn’t make sense for the Raspberry Pi to send the full 80 byte block header. Instead, our communication protocol will have to be changed to send a 256 bit hash followed by the remaining 36 bytes of the block header. Implementing this change will cut down on our communication costs and allow the system to scale better.
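The caching idea can be demonstrated in software with `hashlib`, whose hash objects can be copied after absorbing a prefix. This is a sketch, not the hardware design: it splits the header at the 64-byte SHA-256 block boundary, which is an assumption and may not match the split described above, and it keeps the cached state as a Python object rather than a 256 bit value sent over the wire.

```python
import hashlib
import struct

HEADER_LEN = 80
SHA256_BLOCK = 64  # SHA-256 consumes its input in 64-byte blocks


def double_sha256(data):
    """Reference implementation: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def make_cached_hasher(header_without_nonce):
    """Pre-hash the first 64 header bytes once and reuse that state per nonce."""
    if len(header_without_nonce) != HEADER_LEN - 4:
        raise ValueError("expected the 76 header bytes that precede the nonce")
    prefix = hashlib.sha256(header_without_nonce[:SHA256_BLOCK])
    tail = header_without_nonce[SHA256_BLOCK:]

    def hash_with_nonce(nonce):
        h = prefix.copy()  # cached state; the first block is not rehashed
        # The nonce is the last 4 header bytes, little-endian.
        h.update(tail + struct.pack("<I", nonce))
        return hashlib.sha256(h.digest()).digest()

    return hash_with_nonce
```

The cached hasher returns exactly the same digest as hashing the full 80 bytes for every nonce, which is the property the FPGA implementation would also need to preserve.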

We will no longer be using the stratum mining proxy in tandem with the getwork function to communicate with the Bitcoin blockchain. Instead, we have migrated to communicating directly with mining pools using the stratum protocol. This should make communication more streamlined and understandable while also widening the pool of currencies that we can mine. This does create an overhead where we have to understand the protocol to use it, but we have examples from other available miners to base our implementation on.

David’s Status Report for 3-19

Since the last status report, many hours were put into the mining proxy that we will not get back. The original plan was to use the same getwork protocol that is used in the Open Source FPGA Miner and bridge the protocol connection with the stratum mining proxy. This path was filled with dependency issues and various workarounds, since that miner is meant for Windows and relies on root commands, which I don’t have control over on the ECE machines, and locally I am limited by my own tools and dependencies. Even when I finally got the mining proxy to run on the ECE machines, the miner wasn’t able to connect to the proxy, perhaps because of IP address issues, so instead of sinking more dead hours into debugging this, we moved on. However, the ultimate reason we decided not to continue with this getwork bridging was that it was only going to solve the communication problem for Bitcoin. The same solution was not going to work with Ethereum, or any other currency for that matter. Instead, I decided to communicate directly using the stratum protocol, something it could be argued we should have done in the first place. We didn’t because nobody had experience with the stratum protocol or its parent protocol, JSON-RPC. I was able to find some documentation about the protocol and some miner implementations that use it. So at this point in time, we have a very basic communication protocol that can at least receive requests from the mining pool.

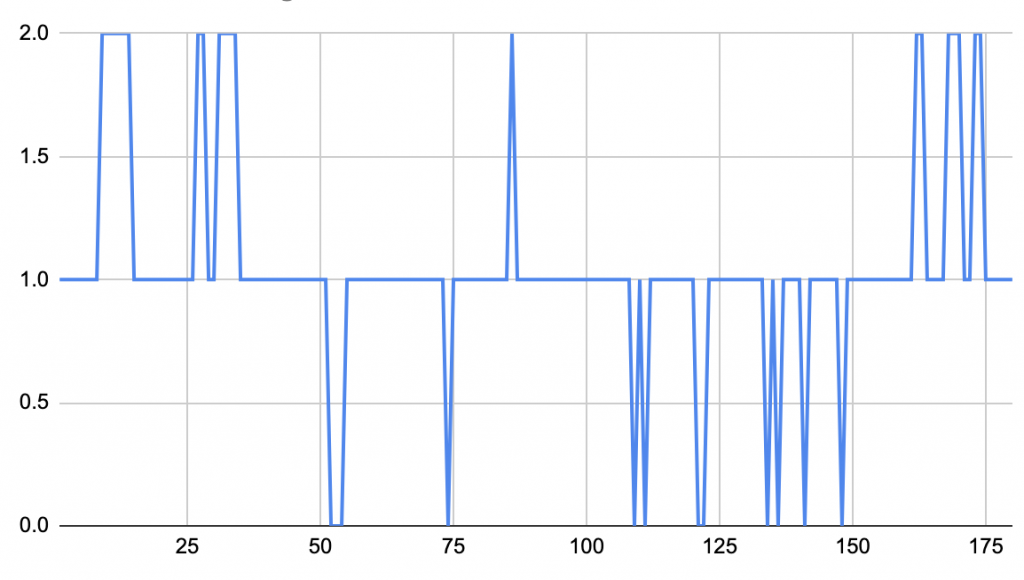

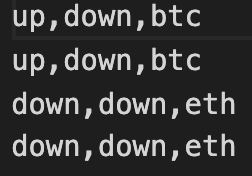
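For readers unfamiliar with stratum, the messages exchanged with the pool are newline-delimited JSON-RPC objects. The sketch below shows how such messages can be framed and how the positional parameters of a Bitcoin-style `mining.notify` map to names. It is written for Python 3, does no networking, and the helper names are hypothetical rather than taken from our client.

```python
import json


def encode_request(request_id, method, params):
    """Serialize one stratum request as a newline-terminated JSON line."""
    message = {"id": request_id, "method": method, "params": params}
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_lines(buffer):
    """Split received bytes into complete JSON messages plus leftover bytes."""
    *lines, remainder = buffer.split(b"\n")
    messages = [json.loads(line) for line in lines if line.strip()]
    return messages, remainder


NOTIFY_FIELDS = ("job_id", "prevhash", "coinb1", "coinb2", "merkle_branch",
                 "version", "nbits", "ntime", "clean_jobs")


def parse_notify(message):
    """Map the positional params of a mining.notify message to field names."""
    if message.get("method") != "mining.notify":
        return None
    return dict(zip(NOTIFY_FIELDS, message["params"]))
```

A client would send `encode_request(1, "mining.subscribe", [])` over a TCP socket, append whatever it receives to a buffer, and call `decode_lines` to pull out complete messages while keeping any partial line for the next read.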

Aside from that, I also worked on the decision making process, constructing the decision tree and the scripts necessary to gather the data for training and inference. The decision tree is built upon the historical data we pull from the Binance API. I took the historical klines code from the webapp and pulled as much data as I could to create the training file. The data is formatted currently as price_change_btc, price_change_eth, coin. The coin is ultimately what the miner should have mined. This decision is currently crudely made saying that the miner should mine bitcoin if the price change for bitcoin is up and ethereum otherwise. The model is then used every 10 seconds for inference. Work to be done here is to add more attributes for the decision tree to split on, improve the label generation for training, and process the decision more to not output a coin specifically, but a spread of which coins to mine (probably with some mechanism to prevent it from always predicting the same spread).
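The crude labeling rule can be sketched as below. It is an illustration only: it takes two aligned lists of closing prices instead of calling the Binance API, and the `"BTC"` and `"ETH"` label strings are assumptions about how the `coin` column is encoded.

```python
import csv
import io


def label_row(price_change_btc, price_change_eth):
    """Crude label from the text: mine Bitcoin when its price went up."""
    return "BTC" if price_change_btc > 0 else "ETH"


def build_training_rows(btc_closes, eth_closes):
    """Turn two aligned close-price series into (btc_change, eth_change, coin)."""
    rows = []
    for i in range(1, min(len(btc_closes), len(eth_closes))):
        d_btc = btc_closes[i] - btc_closes[i - 1]
        d_eth = eth_closes[i] - eth_closes[i - 1]
        rows.append((d_btc, d_eth, label_row(d_btc, d_eth)))
    return rows


def to_csv(rows):
    """Render rows in the price_change_btc, price_change_eth, coin format."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["price_change_btc", "price_change_eth", "coin"])
    writer.writerows(rows)
    return out.getvalue()
```

Note that `price_change_eth` does not influence the label under this rule, which is one reason the label generation is listed as work to be improved.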

Setting up the Raspberry Pi has proven to be a little more problematic than I would have hoped. Setting up the internet with my home wifi is worse than with CMU-DEVICE since there is at least some documentation about how to do it on CMU-DEVICE, my home wifi uses some third party that has no documentation. However, I did try to set it up on campus with the guide, but to no avail. I have another plan to set up the internet since William was able to ssh into the pi, but that will have to wait after the status report. Additionally for work for next week, I hope to finally create the modules to send data from the RPI to the FPGAs to finally start bridging between our different parts. I guess as an administrative thing, as a group we finished the design review document and we each also separately did our ethics assignment.

Some inferences after training:

Sample training data (Data Format):

Team Status Report for 2-26

The unavailability of our Raspberry Pi continues to plague us. We have received our Pi Wedge, but we have still not heard back about the Raspberry Pi. To mitigate this risk, we are trying to enter the Hackberry Pi hackathon to get an RPI from that. The largest blocker in testing mining locally has largely been solved through the discovery of a stratum mining proxy that can effectively bridge the HTTP and stratum protocols for mining. Now, to create our RPI communication protocol with mining pools, we just need to research how other established miners such as cpuminer-multi and bfgminer connect to pools. The only potential risk here is that the stratum mining proxy requires sudo access, so the computers in the lab room may not suffice.

We continue to work on the FPGA modules controlling both the communication and hashing. The communication part of the system is now able to store the input Bitcoin/Ethereum puzzles and replace them with new puzzles if needed. These puzzles are recreated on the FPGA through a single bitstream sent by the Raspberry Pi. One of the risks in this method is that a cryptocurrency with a large puzzle size may take up too much hardware area on our FPGA. This would limit the amount of area available for hashing. We are researching ways to cut down on the puzzle input size and only send the necessary information.

David’s Status Report for 2-26

Last week, I encountered a blocker where I was unable to connect to mining pools because they no longer support HTTP and getwork, but instead use the stratum protocol. In working around this, I have decided to use a stratum mining proxy that will bridge the gap between the protocols. This was the result of first researching the deprecated getwork method and postulating how to request work from pools. After some literature review of another project using the same FPGA miner, I decided to use their solution to the same problem. The mining proxy is more generally for testing mining when using a computer as the middleman with a mining pool. However, we want to use a Raspberry Pi as that middle connection, so I also looked into multiple existing miners that either use FPGAs or CPUs so that I can use them to figure out how we want to communicate to mining pools. These include repositories of bfgminer, cpuminer-multi, and btcfpgaminer. For my task to create and test a module for the RPI to communicate with a mining pool, we are still waiting to receive our Raspberry Pi. I also have the task in figuring out web synthesis, but we have since decided to pass the configuration as an input to the FPGAs, so we don’t need to resynthesize anything. I worked on my slides for the design review presentation and provided a script to the presenter. I also began working on the design review report. I am now slightly behind on my task to simulate mining locally because I had only recently discovered the work around, but I have access to the mining proxy now, I just have to figure out either how to get Quartus on my mac or how to sudo on the 240 machines. In regards to other tasks, I am pretty much on track.

David’s Status Report for 2-19

This week, I dabbled in many different aspects of our project that also happened to strike a good balance between solo work and team work. In our team sessions, the most important things that William and I did for our group was we fleshed out our communication protocol and choosing algorithm to an extent. We determined our packet structures and the different types of packets we would be sending. William and I also did some research on the differences between our requested Raspberry Pi 4 and the RockPi that Professor Tamal suggested we should take a look into. We ultimately decided to go with our original request and I put in another request for 5 more FPGA boards to make sure we can guarantee having enough boards for our final product. On the solo work side, we had two main large issues in figuring out how to maneuver around the Binance API and how the mining would actually take place, so I decided to research into both of these. For the Binance API, I read into their online documentation. For the mining, I tried to configure the open source fpga miner I found during research, but I kept running into various issues like trying to run windows commands on linux, quartus not working properly, different compiling issues, etc. I managed to work past all of these issues, but I ran into a different issue where that source code used a deprecated way of getting work, so I need to find an alternative to test or create my own before testing. Overall, I’d say I’m on track where I’m a little ahead on some tasks but behind on some other tasks. We also had a team meeting where William and I basically wrote the team status report and delegated slides for the upcoming Design Review presentation.

David’s Status Report for 2-12

This week was generally more heavily focused on researching both the hardware specifications of the board we wanted to use and researching the software connections between our modules. It is imperative that we perform sufficient research into this because our aim is to maintain a low hardware cost while achieving the same performance. Settling upon the DE0-CV boards, I successfully requested and picked up some of these boards and located an area in HH 1307 to store them after giving them a quick inventory check. After delegating tasks to the other members, I ended up with the task to figure out how to connect to the Bitcoin blockchain with the Raspberry Pi, to create skeletal modules to perform the communication, and to figure out how synthesis would operate. The bulk of my time was focused on figuring out the connection, so only barebone modules were created, but equipped with the knowledge of these connections, I hope to catch up in the next week and create a functional module to communicate with the blockchain via our Raspberry Pi and to outline how synthesis will work so my teammates and I can refer to it as we all collectively forge ahead.