We received all of the supplies we requested, so we can now start checking how our parts fit together. I am still working on the UI because I am not yet satisfied with the layout. I am experimenting with button shapes and locations, and with how best to show the sections of the grill through the camera feed. Getting the camera feed itself to display has also been an issue, but I do not expect the fix to be complicated. I think the main challenge will be getting the UI, robotic arm, and CV to interact correctly with each other, but we will make it work by the time the demo comes around.

Team Status Report for 3/19

Over spring break and the first week back, we started developing each of our respective subsystems. Raymond has made progress on the CV algorithm and is currently trying to get his blob detection algorithm working. Jasper has started working on the UI and is deciding what information to display. Joseph has finished the mechanical robotic arm, as well as the electrical subsystem of the arm. He has also started work on the low-level embedded software control of the various stepper and servo motors. Our parts were all delivered and picked up this week. We are all at a decent pace to have the robotic arm and our respective subsystems working by the interim demo. We have not started working on the software controller, but we believe it should be done as part of the integration process and continue after the interim demo. We have also each worked on the ethics assignment and are ready to discuss it with other groups. We will update our progress in our schedule accordingly.

Raymond Ngo’s Status Report for 3/19

I was able to modify test images to isolate the red portions of the image. From there, I was able to easily obtain the outer edges using the Canny edge detector. Isolating the red portions is necessary for the computer vision system because the meat is red, and Canny edge detection on an unmodified image does not return a clean outer border around the meat (shown below).
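The red-isolation step can be sketched in a few lines. This is an illustrative, standard-library-only sketch and not the project's actual code: the function names `is_red` and `red_mask` and all threshold values are assumptions chosen for the example. The point it shows is that red sits at both ends of the hue circle, so a single hue range misses part of it. With OpenCV, this kind of mask is typically built with `cv2.inRange` on an HSV image, which likewise needs two hue ranges for red.

```python
import colorsys


def is_red(r, g, b, hue_tol=0.05, min_sat=0.4, min_val=0.2):
    """Return True if an 8-bit RGB pixel is 'red enough' in HSV space.

    The tolerance and minimum saturation/value are hypothetical and
    would need tuning on real grill images.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Red wraps around the hue circle: it is near 0.0 and near 1.0.
    near_red = h <= hue_tol or h >= 1.0 - hue_tol
    return near_red and s >= min_sat and v >= min_val


def red_mask(image):
    """Map a nested list of (r, g, b) pixels to a 0/255 binary mask."""
    return [[255 if is_red(*px) else 0 for px in row] for row in image]


if __name__ == "__main__":
    tiny = [
        [(200, 30, 30), (255, 255, 255)],  # red, white background
        [(30, 200, 30), (200, 30, 60)],    # green, slightly pinkish red
    ]
    print(red_mask(tiny))
```

The resulting binary mask is the kind of input an edge detector or blob detector would then run on.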

To stay on track, the main issue I need to solve by next week is why the CV blob detector function does not work well with the processed binary image (shown below). Otherwise, I am on track to complete a significant portion of the width detection and blob detection algorithms.

Preprocessing needs to be done on the image because meat is usually not on a white background like this. Unnecessary distractions must therefore be removed for a more accurate detection of the meat.

By next week, some form of blob detection must work on this test image, and ideally one other more crowded image.
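One possible factor worth checking: by default, OpenCV's `SimpleBlobDetector` filters by color with `blobColor = 0` (dark blobs) and also applies area, inertia, and convexity filters, so a white region on a black mask can be rejected unless those parameters are changed. As a library-independent cross-check, the sketch below swaps in a different technique, plain connected-component labeling, to find blobs in a binary mask and report their area, centroid, and bounding-box width. The function name `find_blobs` and the `min_area` parameter are hypothetical.

```python
from collections import deque


def find_blobs(mask, min_area=1):
    """Label 4-connected regions of nonzero pixels in a binary mask.

    `mask` is a list of rows of 0/nonzero values. Returns one dict per
    blob with its pixel area, centroid (x, y), and bounding-box width
    and height. Blobs smaller than `min_area` pixels are dropped.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(pixels) < min_area:
                continue
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            blobs.append({
                "area": len(pixels),
                "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),
                "width": max(xs) - min(xs) + 1,
                "height": max(ys) - min(ys) + 1,
            })
    return blobs
```

Raising `min_area` is a simple way to ignore small specks such as a shadow fragment, and the bounding-box width gives a rough starting point for width detection.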

Joseph Jang’s Status Report for 3/19

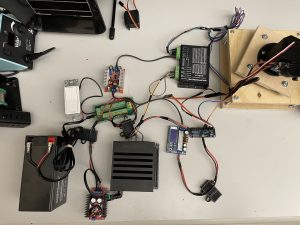

I have made a good amount of progress on the robotic arm subsystem. With the 3D printed links and wooden base I created before the start of the break, the electrical parts our team borrowed from the inventory, and the electrical parts and motors I already owned, I was able to complete about 80% of both the electrical subsystem and the mechanical parts of the robotic arm during spring break. Below are pictures of that progress.

With the arrival of most parts on Monday, I was able to complete and test the electrical subsystem on Monday and completed the mechanics and heat-proofing of the robotic arm on Tuesday. Below are pictures of the completed electrical subsystem and robotic arm.

During the rest of the week, I configured the Jetson AGX Xavier and set up the software environment. I programmed the 5 servo motors to turn properly with certain inputs, and I was also able to program the stepper motor to turn at the appropriate speed and in the appropriate direction. Below is a video of that. I will now have to work on the computer vision for the robotic arm using the camera that we bought. My plan is to complete the inverse kinematics software in two weeks at the earliest and three weeks at the latest (which will take up one week in April). I am at a good pace to complete the robotic arm subsystem before integration.
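The arithmetic behind this kind of low-level motor control can be sketched as follows. This is not the code running on the arm: the function names are hypothetical, and the numbers are assumptions typical of hobby hardware (a 500 to 2500 microsecond servo pulse over 180 degrees, and a stepper with 200 full steps per revolution), which should be checked against the actual motor datasheets.

```python
def servo_pulse_us(angle_deg, min_us=500.0, max_us=2500.0, max_angle=180.0):
    """Convert a servo angle to a pulse width in microseconds.

    Angles outside [0, max_angle] are clamped so the servo is never
    commanded past its assumed mechanical range.
    """
    angle = max(0.0, min(max_angle, angle_deg))
    return min_us + (max_us - min_us) * angle / max_angle


def stepper_step_delay_s(rpm, steps_per_rev=200, microsteps=1):
    """Return the delay between step pulses for a target speed in RPM."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    steps_per_second = rpm * steps_per_rev * microsteps / 60.0
    return 1.0 / steps_per_second


if __name__ == "__main__":
    print(servo_pulse_us(90))        # mid-travel pulse width
    print(stepper_step_delay_s(60))  # seconds between steps at 60 RPM
```

Direction on a typical step/direction driver is set by a separate pin, so the speed calculation above is independent of which way the motor turns.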

Team Status Report for 2/26

This week, we completed our designs for each subsystem and compiled all of this information in our design review presentation. Additionally, we have placed our order for parts that we will need for the project and hope to integrate them into our development as soon as we can. The group has also done some work regarding the actual development of our respective parts. Joseph has done work on the robotic arm, including creating CAD models of pieces of the robotic arm that we are making and 3D printing some of those parts. He has also split up work for writing the design report. Raymond has done some work and research on blob detection, including finding a possible new system to increase the accuracy of our CV. Jasper has been considering different ways for us to integrate the touch screen UI into our system, but will have to wait until our parts come to decide what will be best. Finally, as a whole, we seem to be on schedule for a successful project.

Raymond Ngo’s Status Report for 2/26

I am not able to provide the deliverables I promised last week because the schedule was tighter than I anticipated, and as a result I could not properly tune the parameters for the blob detection. The most it could do was detect the shadow in the corner. The deliverable for next week's deadline is a blob detector with better parameters.

The main reason was the tight schedule between the presentation and the design report. During the past week (from last Saturday to 2/26), a lot of work went into the design presentation, mainly communicating with the other team members about the exact design requirements of the project. Questions came up about finding a temperature probe that could withstand 500°F or higher, as well as about the best type of object detection. Furthermore, as I was the one presenting the design slides, it was my responsibility to practice every point in the presentation and to make sure everyone’s slides and information were aligned. In addition, since it is Joseph and not I who has the robotics knowledge, I had to ask him and do my own research on the specific design requirements.

This week, I also researched other classification systems after the feedback from our presentation. Our two main issues are the lack of enough images to form a coherent dataset, hence our lower classification accuracy metrics, and our choice of a neural network over other classification systems. One possible risk mitigation tool I found is using a different system to identify objects, perhaps SIFT. That, however, would require telling the user to leave food in a predetermined position (for example, specifically not having thicker slabs of meat rolled up).

We are on schedule for class assignments, but I am a bit behind in configuring the blob detection algorithm. I am not too worried about this because the blob detection work was me getting ahead of schedule anyway.

Joseph Jang’s Status Report for 2/25

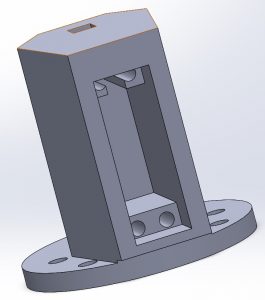

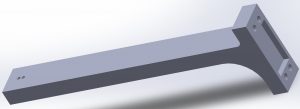

The schedule has been tighter than expected for me, so the robotic arm development has been tough. However, I have already 3D printed the 1st and 3rd links of the robotic arm. They have some small size defects, so I have adjusted the CAD model and will attempt to reprint them this Sunday. I have also submitted link 2 to FBS so that a larger printer can be used to 3D print the part. I am hoping that the parts I have ordered will come in before spring break starts so that I can bring the robot parts on the plane back with me and assemble them at home. Fabrication of the robotic arm should not take more than a total of 24 hours if no issues occur.

I have also started working on the design report, and hope to have it finished by the deadline. I have planned a way for us to separate the work for the report. We will each write parts of the report that we have made slides for in the presentation, plus some additional topics that need to go in the report, such as design studies. We will include all the diagrams and a couple of additional tables and pictures in our design report as well as touch upon the fabrication and assembly process of our robotic system. We will also discuss more on integration in our report, which we have all started planning.

As suggested during our design presentation, I have also looked more into how to use the available ROS libraries to implement inverse kinematics for a complex 4DOF robot such as the one I will be making. I am reviewing the math for inverse kinematics and have looked into ROS tutorials for the IKFast kinematic solver. My plan is to fully delve into developing the inverse kinematics software during spring break, when I will hopefully have most if not all of the robot arm assembled.
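For reference, the core inverse kinematics math is easiest to see on a simplified case. The sketch below solves a planar two-link arm in closed form using the law of cosines; it is a deliberately reduced illustration, not the 4DOF solution and not what IKFast generates. The function names, the `flip_elbow` flag, and the link lengths in the example are all hypothetical.

```python
import math


def two_link_ik(x, y, l1, l2, flip_elbow=False):
    """Solve joint angles (radians) for a planar two-link arm.

    Returns (shoulder, elbow) so that the end of link 2 reaches (x, y).
    `flip_elbow` selects the mirrored solution. Raises ValueError if
    the target is outside the reachable workspace.
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    cos_elbow = max(-1.0, min(1.0, cos_elbow))  # guard against rounding
    elbow = math.acos(cos_elbow)
    if flip_elbow:
        elbow = -elbow
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics, used here to check the IK result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    angles = two_link_ik(0.25, 0.10, l1=0.20, l2=0.15)
    print(angles, two_link_fk(*angles, l1=0.20, l2=0.15))
```

Feeding the IK result back through forward kinematics, as in the example, is a cheap sanity check that also applies to solvers for arms with more joints.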

Team Status Report for 2/19

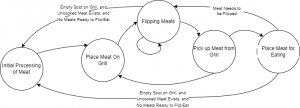

This week was our busiest week so far. We had discussions to finalize our design choices for the overall system states and architecture. We estimate that we are each about 70-80% finished with designing our own respective subsystems. We will complete our designs in time to submit the design report. We have not yet documented the fabrication and assembly process of our design, which we will do in our report. We also have to meet again to discuss what the interfaces will be between each of the respective subsystems we are designing and also start thinking about how we will proceed with system integration. We have updated the Gantt chart to show our progress in our design phase. We added slack to our schedule to include more system integration and start our development phase when spring break starts. We decided Raymond will present for our Design Presentation, which is mostly finished and ready to be submitted. Although we are all still busy in the Design phase, it is worth noting that we should look ahead and start discussing the Development phase of this project next week. We hope to continue this pace throughout our project.

Joseph Jang’s Status Report for 2/19

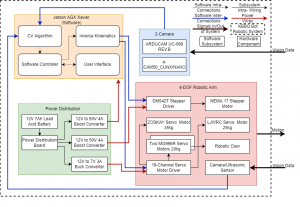

Most of this week was spent creating and finalizing our design decisions. I spent my time creating the System Architecture, System States, and Electrical System Diagrams. The CAD models of our 3 robotic links were also created by me. I also created a parts list of everything that our team currently owns, borrowed, or needs to buy. I will start submitting parts for order next week after our design is reviewed through our presentation. I have also updated the Gantt Chart to show our progression and add more slack. All these diagrams and documents are crucial for the design presentation, design report, and the next stage of development. The creation of all these diagrams helped me consolidate design choices. This was a particularly busy week for me as I had to complete most of the robotic arm design for the presentation. I expect that next week will be more relaxed as I start creating the report and finalizing any design questions. Here are the pics of what I have worked on this week. Please look at our Design presentation slides for better quality pics.

Jasper Lessiohadi’s Status Report for 2/19

This week, I worked more with Python to better familiarize myself with it and to prepare for working with our touch screen hardware. I have also looked more into UI design to give the best user experience I can. The overall planning and design of the project is almost done, and I am very happy with the direction it is going. We have decided to divide the grill into four sections with one piece of meat on each. The touch screen UI will then have an overhead camera feed of the grill, which the user can use to manually interact with the meat if they so choose. I am happy with my rough draft of the UI for now; however, I will definitely want to add colors that match the theme of the project. I am thinking they will be medium/dark browns and/or other similar colors. I will have to figure out what looks best once the UI is more developed. For now, I am on track with our projected plan, and I am excited to see how the project evolves over time.
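Mapping a touch on the camera feed to one of the four grill sections could look something like the sketch below. It assumes a 2x2 layout and touch coordinates given in pixels of the displayed frame; the layout, the function names, and the 640x480 frame size in the example are assumptions for illustration, not decisions from the design.

```python
def section_at(x, y, frame_w, frame_h, rows=2, cols=2):
    """Return the index of the grill section under a touch at (x, y).

    Sections are numbered row by row from the top-left, starting at 0.
    Returns None if the touch falls outside the frame.
    """
    if not (0 <= x < frame_w and 0 <= y < frame_h):
        return None
    col = x * cols // frame_w
    row = y * rows // frame_h
    return row * cols + col


def section_bounds(index, frame_w, frame_h, rows=2, cols=2):
    """Return (left, top, right, bottom) pixel bounds of a section."""
    row, col = divmod(index, cols)
    left = col * frame_w // cols
    top = row * frame_h // rows
    right = (col + 1) * frame_w // cols
    bottom = (row + 1) * frame_h // rows
    return left, top, right, bottom


if __name__ == "__main__":
    print(section_at(100, 100, 640, 480))
    print(section_bounds(3, 640, 480))
```

The same bounds can be reused to draw the section outlines on top of the camera feed, so the overlay and the touch handling stay consistent.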