The most significant risk at the moment is that our team is slightly behind schedule. We should be finishing the build of the robot base, testing individual components, and implementing the software on the Raspberry Pi (RPi). To manage this risk, we will use some of the slack time allocated in our schedule to catch up on these tasks and keep the project on track overall. After completing the design report, we were able to map out the trajectory of each individual task.

We also made some minor changes to the design. First, we removed the to-do list feature from the WebApp because it felt non-essential and was one-sided, existing only on the WebApp. Second, the robot's neck will now only rotate along the x-axis in response to audio cues and move along the y-axis (up and down) to signal a win during the Rock-Paper-Scissors (RPS) game. We made this change to reduce the range of motion required of the servo horn that connects the servo mount bracket to the DCI display. By focusing on these specific movements, our servo motor system will be more streamlined and more precise when turning toward the direction of an audio cue.
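As a rough illustration of the narrowed neck behavior, the sketch below does two things. It clamps a turn toward an audio cue to a reduced pan range, and it produces a simple up-and-down nod. It assumes the audio cue's direction is already available as an angle in degrees. The limits `PAN_MIN_DEG`, `PAN_MAX_DEG`, and `NOD_DEPTH_DEG` and all function names are hypothetical placeholders, not values or code from our design. Driving the physical servo is left out.

```python
# Hypothetical limits: the report only says the range of motion was reduced,
# not what the actual limits are.
PAN_MIN_DEG = -60.0
PAN_MAX_DEG = 60.0
NOD_DEPTH_DEG = 15.0


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def pan_target_for_cue(cue_azimuth_deg: float) -> float:
    """Map an estimated audio-cue azimuth (0 = straight ahead) to a pan angle
    inside the reduced servo range."""
    # Normalize to [-180, 180) so that, e.g., 350 degrees becomes -10 degrees.
    azimuth = (cue_azimuth_deg + 180.0) % 360.0 - 180.0
    # Cues outside the allowed range are turned toward as far as the limit permits.
    return clamp(azimuth, PAN_MIN_DEG, PAN_MAX_DEG)


def nod_sequence(repeats: int = 2) -> list[float]:
    """Tilt angles for a simple up-and-down 'win' nod, ending at neutral (0)."""
    seq: list[float] = []
    for _ in range(repeats):
        seq.extend([NOD_DEPTH_DEG, -NOD_DEPTH_DEG])
    seq.append(0.0)
    return seq
```

Clamping rather than rejecting out-of-range cues means the robot still turns partway toward a sound behind it, which keeps the servo within its narrowed range.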

Part A is written by Shannon Yang

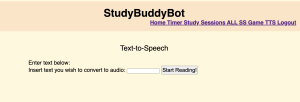

The StudyBuddyBot (SBB) is designed to meet the global need for accessible, personalized learning. It acts as a study companion that helps structure and regulate study sessions, and it incorporates tools such as text-to-speech (TTS) for auditory learners. Because the robot is paired with a WebApp, anyone with an internet connection can access it without downloading or installing complex software or paying exorbitant fees. This accessibility helps make SBB a universal solution for learners from different socioeconomic backgrounds.

With the rise of online education platforms and global initiatives that support remote learning, tools like SBB fill a crucial gap by helping students manage their time and stay focused regardless of geographic location. If an event like the COVID-19 pandemic were to happen again, SBB would allow students to keep studying from the comfort of their homes while mimicking the experience of studying with friends.

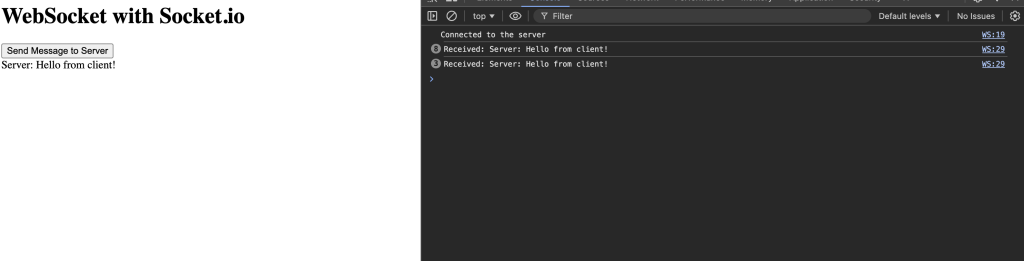

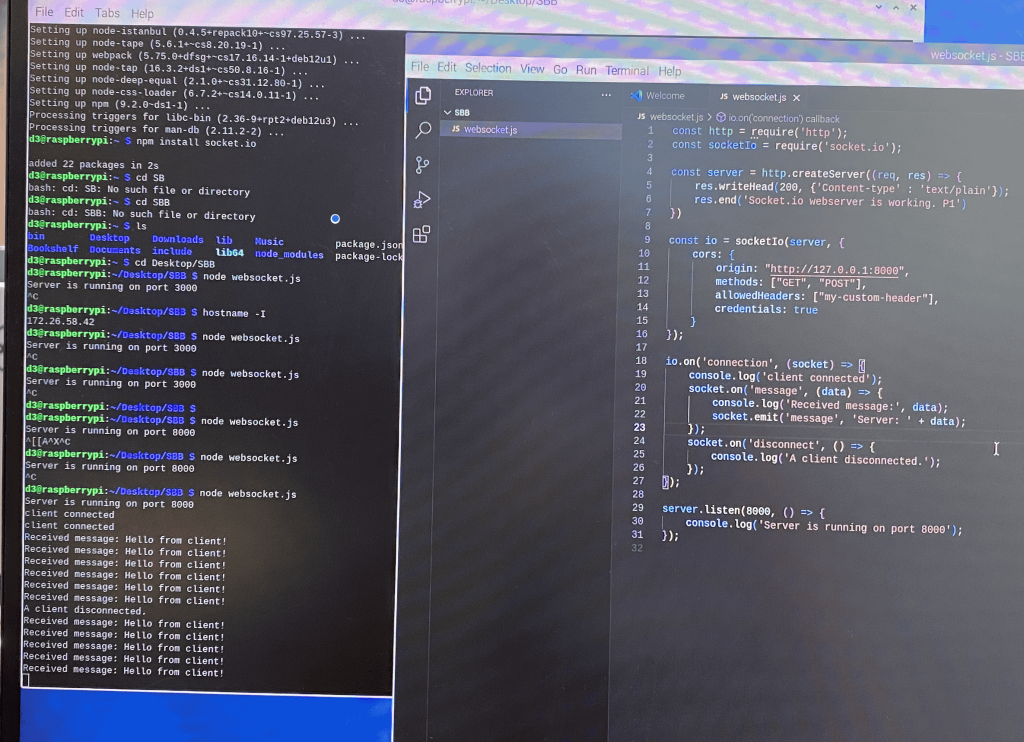

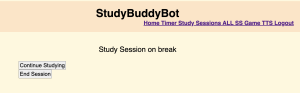

Additionally, as mental health awareness grows worldwide, the robot's ability to suggest breaks can help address the global problem of student burnout. Real-time communication via WebSockets keeps SBB responsive and adaptive. As a result, it can serve students across different time zones and environments without delays or a loss of interactivity.

Overall, by accounting for factors such as technological accessibility, global learning trends, and the increasing focus on mental health, we designed SBB to address the needs of a broad and diverse audience.

Part B is written by Mahlet Mesfin

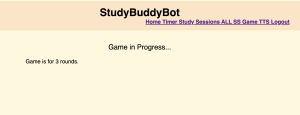

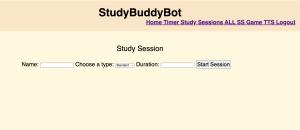

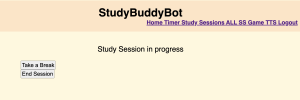

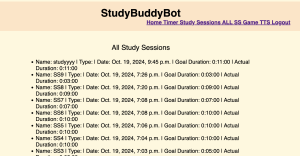

Every student has different study habits, and some struggle to stay focused and manage their breaks, which makes it challenging to balance productivity and relaxation. Our product, StudyBuddyBot (SBB), is designed to support students who have difficulty maintaining effective study habits. SBB offers features such as:

- timed study session management;
- text-to-speech (TTS) for reading aloud;
- a short, interactive Rock-Paper-Scissors game;
- human-like responses to audio cues.

With these features, SBB helps motivate and engage students. These personalized interactions keep students focused on their tasks, making study sessions more efficient and enjoyable. In addition, SBB uses culturally sensitive dialogue in its greetings, ensuring that interactions are respectful and inclusive.

Study habits vary across cultures. For example, some cultures prioritize longer study hours with fewer breaks, while others value more frequent breaks to maintain focus. To accommodate these differences, SBB offers two session styles. The first follows the Pomodoro technique and lets users set both study and break intervals. The second is a “Normal” session, in which students set only the study duration. Throughout a session, SBB promotes positive values by offering encouragement and motivation. Additionally, SBB's presence creates a collaborative environment, providing a sense of company without distractions, which promotes a more focused and productive study atmosphere.
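The two session styles can be sketched as simple schedule builders. This is a minimal Python illustration that makes two assumptions: a Pomodoro session is a fixed number of study/break cycles, and no break follows the final study block. `Interval`, `pomodoro_schedule`, `normal_schedule`, and the `cycles` parameter are hypothetical names, not our actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    kind: str     # "study" or "break"
    minutes: int


def pomodoro_schedule(study_min: int, break_min: int, cycles: int) -> list[Interval]:
    """Alternate user-chosen study and break intervals.
    Assumption: no break is scheduled after the last study block."""
    if study_min <= 0 or break_min <= 0 or cycles <= 0:
        raise ValueError("durations and cycles must be positive")
    plan: list[Interval] = []
    for i in range(cycles):
        plan.append(Interval("study", study_min))
        if i < cycles - 1:
            plan.append(Interval("break", break_min))
    return plan


def normal_schedule(study_min: int) -> list[Interval]:
    """A 'Normal' session: one study block of the chosen length, no scheduled breaks."""
    if study_min <= 0:
        raise ValueError("study duration must be positive")
    return [Interval("study", study_min)]
```

For example, four 25-minute study blocks with 5-minute breaks give `sum(i.minutes for i in pomodoro_schedule(25, 5, 4))`, which is 115 minutes in total.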

Part C is written by Jeffrey Jehng

The SBB was designed to minimize its environmental impact while still being an effective tool for users. We focus on SBB’s impact on humans and the environment, as well as how its design promotes sustainability.

The design is modular, so a part that wears out can be replaced individually instead of replacing the whole SBB. Key components, such as the DCI display and the Raspberry Pi (RPi), were selected for their low power consumption and long life span, which reduces the need for replacement parts. To be even more energy efficient, we will implement conditional sleep states in the SBB so that power is used only when needed.
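One way to realize conditional sleep states is with an idle timer. The sketch below makes two assumptions. First, the robot counts as idle when no user activity has been seen for a configurable timeout. Second, it should not enter sleep while a study session is running. `SleepController` and the timeout value are hypothetical. The code only tracks the power state. What sleep actually does on the hardware (for example, dimming the display) is left to the caller.

```python
import time
from enum import Enum
from typing import Callable


class PowerState(Enum):
    ACTIVE = "active"
    SLEEP = "sleep"


class SleepController:
    """Hypothetical idle-timeout controller: sleep after idle_timeout_s seconds
    without activity, wake on the next activity event."""

    def __init__(self, idle_timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.state = PowerState.ACTIVE
        self.last_activity = clock()

    def on_activity(self) -> None:
        """Call on any user interaction (e.g. button press, audio cue, WebApp message)."""
        self.last_activity = self.clock()
        self.state = PowerState.ACTIVE

    def tick(self, session_running: bool) -> PowerState:
        """Call periodically; does not enter sleep while a study session is running."""
        idle_for = self.clock() - self.last_activity
        if not session_running and idle_for >= self.idle_timeout_s:
            self.state = PowerState.SLEEP
        return self.state
```

Injecting the clock keeps the timeout logic independent of real time, so it can be checked without actually waiting for the robot to go idle.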

Finally, we emphasize recyclable materials, such as acrylic for the base and eco-friendly plastics for the buttons, to reduce the SBB's carbon footprint. By considering modularity, energy efficiency, and the sustainability of its parts, we aim for the SBB to assist users effectively while balancing its functionality against these environmental concerns.