As we approach the final presentation of our project, my main focus has been preparing for it, since I will be presenting in the coming week.

In addition, I have assembled the robot’s body and modified it where necessary so that every component is placed correctly. Below are a few pictures of the changes so far.

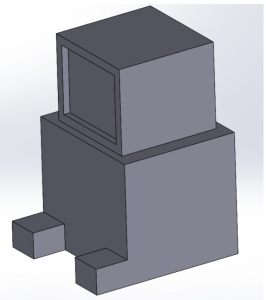

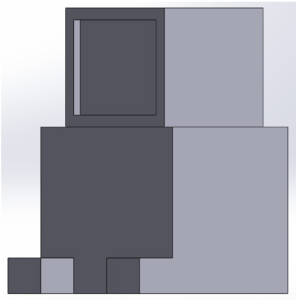

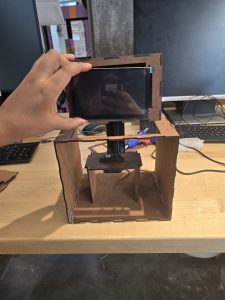

I have modified the robot’s face so that it can encase the display screen; previously, the head was a solid box. The servo-to-head mount is now properly assembled, and the head is well balanced on the stand that I used to mount the motor. This leaves space to place the Arduino, speaker, and Raspberry Pi. I have also mounted the microphones at the corners, as intended.

Before picture:

After picture:

Mounted microphones onto the robot’s body


Assembled body of the robot


Assembled body of the robot including the display screen


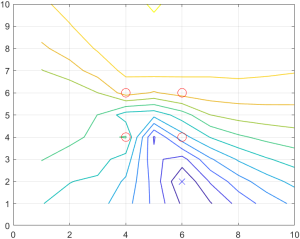

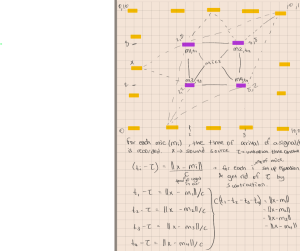

I have been able to detect a clap cue using the microphone by identifying the amplitude threshold that a sufficiently loud clap exceeds. This processing runs on the Raspberry Pi: once the RPi detects a clap, it passes the signal to the direction-estimation function, which returns the angle of the sound source. The angle is then sent to the Arduino, which drives the motor to turn the robot’s head. Because our motor parts arrived late, I have not yet been able to test the integration of the motor with the audio input. This put me slightly behind schedule, but using the slack time we allocated, I plan to finalize this portion of the project within the coming week.
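To make the pipeline above concrete, here is a minimal sketch of threshold-based clap detection followed by a time-difference-of-arrival angle estimate. It is not the project's actual code: it assumes two microphones, a far-field sound source, and brute-force cross-correlation, and the function names, sample rate, microphone spacing, and threshold are all hypothetical values chosen for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def is_clap(samples, threshold):
    """Return True when the loudest sample in the buffer reaches the threshold."""
    return max(abs(s) for s in samples) >= threshold


def estimate_delay_s(left, right, sample_rate_hz, max_lag):
    """Return how many seconds `right` lags `left` (negative if it leads).

    Uses brute-force cross-correlation over lags in [-max_lag, max_lag] samples.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += value * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate_hz


def delay_to_angle_deg(delay_s, mic_spacing_m):
    """Convert a time difference of arrival to a bearing in degrees.

    Far-field model: sin(angle) = speed_of_sound * delay / mic_spacing.
    A positive angle means the sound reached the left microphone first.
    """
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against noise pushing |ratio| past 1
    return math.degrees(math.asin(ratio))


# Hypothetical values for illustration only (not taken from the project).
SAMPLE_RATE_HZ = 48_000
MIC_SPACING_M = 0.20
CLAP_THRESHOLD = 0.5
# Largest physically possible lag, in samples, for this microphone spacing.
MAX_LAG = math.ceil(MIC_SPACING_M / SPEED_OF_SOUND_M_S * SAMPLE_RATE_HZ)

# Synthetic clap: an impulse that reaches the right microphone 5 samples later.
left = [0.0] * 200
right = [0.0] * 200
left[50] = 1.0
right[55] = 1.0

if is_clap(left, CLAP_THRESHOLD):
    delay = estimate_delay_s(left, right, SAMPLE_RATE_HZ, MAX_LAG)
    angle = delay_to_angle_deg(delay, MIC_SPACING_M)
    print(f"estimated angle: {angle:.1f} degrees")
```

Restricting the search to physically possible lags keeps the pure-Python loop cheap. On real audio buffers a vectorized correlation would likely be faster, and the threshold would need to be tuned against recorded claps and background noise.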

I also worked on implementing the software side of the RPS game. Once the keypad inputs are reliably detected, I will meet with Jeffrey to integrate the two functionalities.

I also worked briefly with Shannon to make sure the TTS audio output plays properly through the speaker attached to the RPi.

Next week:

- Finalize the integration and testing of audio detection and motor rotation.

- Finalize the RPS game with keypad inputs by meeting with the team.

- Finalize the overall integration of our system with the team.

One new thing I learned during this capstone project is how to use serial communication between an Arduino and a Raspberry Pi; I used online Arduino resources that explain this clearly. I also learned how to perform signal analysis on audio inputs to localize the source of a sound within a range, using the concept of time difference of arrival to get my system working. For this I relied on online resources about signal processing and on discussions with my professors, who clarified misunderstandings I had about my approach. Finally, I learned how a WebSocket works from online resources and from Shannon and Jeffrey. Even though my focus was not really on the web app to RPi communication, it was good to learn how their systems work.
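As an illustration of the serial link mentioned above, the sketch below shows one possible way to frame an angle for the Arduino. The message format (a newline-terminated ASCII integer), the 0 to 180 degree clamp, and the function names are assumptions made for this example, not the project's actual protocol. An in-memory buffer stands in for the serial port so the example runs without hardware.

```python
import io


def encode_angle(angle_deg):
    """Format an angle as a newline-terminated ASCII integer, clamped to 0-180."""
    clamped = max(0, min(180, round(angle_deg)))
    return f"{clamped}\n".encode("ascii")


def send_angle(port, angle_deg):
    """Write the encoded angle to any object with a write(bytes) method."""
    port.write(encode_angle(angle_deg))


# Stand-in for the real port; on the RPi this would be an opened serial port object.
fake_port = io.BytesIO()
send_angle(fake_port, 92.4)
send_angle(fake_port, 200)  # out-of-range values are clamped to 180
print(fake_port.getvalue())
```

With pyserial installed, a `serial.Serial` object opened on the Arduino's port could be passed as `port`, since it also exposes `write(bytes)`. The Arduino side would then need to read one line per message and convert it to an integer.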