This week, I focused mainly on the design report. After a team meeting, we split the sections of the report fairly and began working on the deliverables.

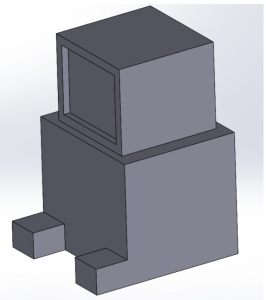

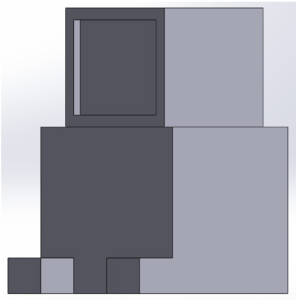

I worked mainly on the audio triangulation, the robot neck motion (components included), and the robot base design. In the design report, I wrote the use-case requirements for the audio response and the robot base, and I made the final block diagram for our project. I then wrote the design requirements for the robot’s dimensions and the audio cue response mechanism. Next, I covered the essential tradeoffs behind our component choices, such as the Raspberry Pi, the servo response, the material for the robot’s body, and the microphone for audio input. Finally, I wrote up the system implementation for the audio cue response, along with the unit and integration testing for all of these components.

Following our discussion, I finalized the bill of materials and provided risk mitigation plans for our systems and components.

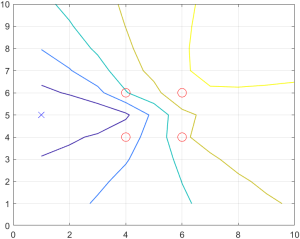

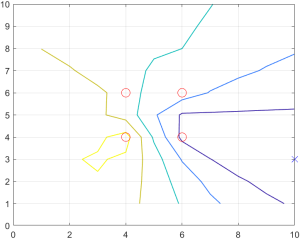

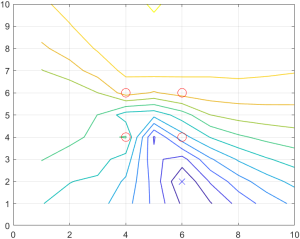

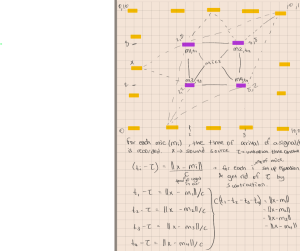

In addition, I was able to spend some time discussing the audio response methodology and approach with Professor Bain. After implementing the forward audio detection system (i.e. one where the locations of the audio source and the microphone receivers are both known), my goal was to work backwards and handle the case where the location of the audio source is unknown. From this meeting and further research, I settled on the approach described below, which I will be working on in the coming week. More detail on this implementation can be found in the design report.

The system detects a double clap by continuously recording and analyzing audio from each microphone in 1.5-second segments. It focuses on a 1-second window, dividing it into two halves and performing a correlation to identify two distinct claps with similar intensities. A bandpass filter (2.2 kHz to 2.8 kHz) is applied to eliminate background noise, and audio is processed in 100 ms intervals.
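As a rough illustration of the split-and-correlate idea, here is a minimal sketch in plain Python. It assumes the 1-second window has already been bandpass filtered, and it compares the two halves through their 100 ms frame energies. The function names and the thresholds (`min_corr`, `max_ratio`, `min_peak`) are hypothetical placeholders for illustration, not values from our design report.

```python
import math

FRAME_S = 0.1  # 100 ms analysis interval, as described above


def frame_energies(samples, sample_rate, frame_s=FRAME_S):
    """Mean squared amplitude of each consecutive, non-overlapping frame."""
    n = round(sample_rate * frame_s)
    return [
        sum(x * x for x in samples[i:i + n]) / n
        for i in range(0, len(samples) - n + 1, n)
    ]


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    return num / den if den > 0 else 0.0


def is_double_clap(window, sample_rate, min_corr=0.8, max_ratio=2.0, min_peak=1e-3):
    """Decide whether a 1-second, already-filtered window holds two similar claps.

    All three thresholds are assumed values chosen for this sketch only.
    """
    energies = frame_energies(window, sample_rate)
    half = len(energies) // 2
    first, second = energies[:half], energies[half:2 * half]
    peak_1, peak_2 = max(first), max(second)
    # Both halves must contain something louder than the noise floor.
    if min(peak_1, peak_2) < min_peak:
        return False
    # The two claps should have similar intensities.
    if max(peak_1, peak_2) / min(peak_1, peak_2) > max_ratio:
        return False
    # The energy envelopes of the two halves should line up.
    return pearson(first, second) >= min_corr
```

Because the halves are compared frame by frame, this sketch only fires when the two claps sit at roughly the same position within each half. Sliding the 1-second window through the 1.5-second segment is one way to find that alignment.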

Once a double clap is detected, the system calculates the time difference of arrival (TDOA) between microphones using cross-correlation. With four microphones, it computes six time differences (one per microphone pair) to triangulate the sound direction. The detection range is limited to 3 feet, ensuring the robot only responds to nearby sounds. The microphones are synchronized through a shared clock, which enables accurate TDOA calculations and allows the robot to turn its head toward the detected clap.
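The pairwise TDOA step can be sketched as follows. This is a simplified, brute-force cross-correlation in plain Python, not our final implementation. It assumes the four channels are already time-aligned by the shared clock and are the same length. The function names and the `max_lag` parameter are hypothetical. In practice `max_lag` would be bounded by the largest microphone spacing divided by the speed of sound.

```python
from itertools import combinations


def tdoa_samples(a, b, max_lag):
    """Lag (in samples) of b relative to a that maximizes the cross-correlation.

    A positive result means the sound reached microphone b later than a.
    """
    n = min(len(a), len(b))
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over indices where both a[i] and b[i + lag] exist.
        lo, hi = max(0, -lag), min(n, n - lag)
        score = sum(a[i] * b[i + lag] for i in range(lo, hi))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def pairwise_tdoas(channels, sample_rate, max_lag):
    """Time differences in seconds for every microphone pair (i, j) with i < j."""
    return {
        (i, j): tdoa_samples(channels[i], channels[j], max_lag) / sample_rate
        for i, j in combinations(range(len(channels)), 2)
    }
```

With four channels, `pairwise_tdoas` returns six entries, matching the six time differences mentioned above. Turning those differences into a direction still requires the microphone geometry, which this sketch leaves out.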

I am a little behind schedule because the parts have not arrived yet. Once they arrive, I will work on building the robot base with Jeffrey. Within the coming week, I will also test the audio triangulation with the microphones and the RPi after making the necessary preparations.