This week, the rest of the group and I spent nearly all of our time integrating the components we’ve been working on into one unified system. Aside from the integration work, I changed the system controller so it can handle two drumsticks instead of one, altered the way we detect the drum pads at the start of the session, and cut the rubber drum pads to their specific diameters for testing. Prior to this week, we had the following separate components:

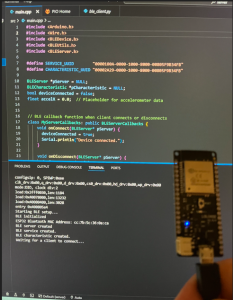

1.) A BLE system capable of transmitting accelerometer data to the paired laptop

2.) A dedicated CV module for detecting the drum rings at the start of the playing session. This function was triggered by clicking a button on the webapp, which used an API to initiate the detection process.

3.) A CV module responsible for continually tracking the tip of a drumstick and storing the predicted drum pad the tip was on for the 20 most recent frames.

4.) An audio playback module responsible for quickly playing sounds on detected impacts.

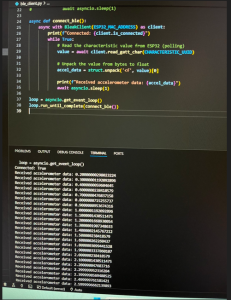

We split our integration process into two steps. The first was to connect the BLE/accelerometer code to the audio playback module, omitting the object tracking. To do this, Elliot had to change parts of the BLE module so it could be used in our system controller, and I needed to change the way the system controller was reading in accelerometer data. I was under the impression that the accelerometer/ESP32 system would transmit accelerometer data continuously, regardless of whether any acceleration was occurring (i.e. transmit 0 acceleration when not accelerating). In reality, the system only sends data when acceleration is detected. Thus, I changed the system controller to read a globally set acceleration variable from the Bluetooth module on every iteration of the while loop, and then compare it to the predetermined acceleration threshold to decide whether an impact has occurred or not. After Elliot and I completed the necessary changes, we tested the partially integrated system by swinging the accelerometer around to trigger an impact event, assigning a random pad index in [1, 4] (since we hadn’t integrated the object tracking module yet), and playing the corresponding sound. The system functioned very well, with surprisingly low latency.
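The threshold check described above can be sketched roughly as follows. This is only an illustration, not our actual controller code: the names `AccelStore`, `is_impact`, and `ACCEL_THRESHOLD` are hypothetical, the threshold value is a placeholder, and resetting the stored reading after each read is an assumption made here so that a single swing is not counted twice.

```python
import threading

# Placeholder value for illustration only; the real threshold is tuned by testing.
ACCEL_THRESHOLD = 2.5


class AccelStore:
    """Holds the most recent acceleration value written by the BLE callback."""

    def __init__(self):
        self._lock = threading.Lock()
        self._latest = 0.0

    def write(self, value):
        # Called by the BLE side whenever the ESP32 sends a reading.
        with self._lock:
            self._latest = value

    def take(self):
        # Called by the controller loop. Returns the latest reading and resets it
        # to 0.0 (an assumption of this sketch), since the ESP32 sends nothing
        # when the stick is not accelerating.
        with self._lock:
            value, self._latest = self._latest, 0.0
            return value


def is_impact(accel, threshold=ACCEL_THRESHOLD):
    """Return True when a reading is large enough to count as an impact."""
    return accel >= threshold
```

In the controller loop, each iteration would call `take()` and pass the result to `is_impact()` before deciding whether to trigger playback.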

The second step in the integration process was to combine the partially integrated accelerometer/BLE/playback system with the object tracking code. This again required me to change how the system controller worked. Because Belle’s code needs to run continuously and independently to populate our 20-frame buffer of predicted drum pads, we needed a new thread for each drumstick that starts as soon as the session begins. The object tracking code treated drum pad metadata as an array of 4 tuples of the form (x, y, r), whereas I was storing the drum pad metadata (x, y, r) in a dictionary where each value was associated with a key. Thus, I changed the way we store this information to match Belle’s code. At this point, we combined all the logic needed for one drumstick’s operation and proceeded to testing. Though it didn’t work on the first try, after a few further modifications we succeeded in producing a system that tracks the drumstick’s location, transmits accelerometer data to the laptop, and plays the corresponding sound of a drum pad when an impact occurs. This was a huge step in our project’s progression, as we now have a basic, working version of what we proposed to do, all while maintaining low latency (measuring the exact latency was difficult since it’s sound-based, but just from using the system, it’s clear that the current latency is far below 100 ms).
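To make the data layout concrete, here is a minimal sketch of converting the dictionary form into the list-of-tuples form, finding which pad contains the stick tip, and keeping the 20 most recent predictions. The function and class names are hypothetical, and picking the most frequent pad in the buffer at impact time is an assumption of this sketch rather than a description of how our tracking code actually decides.

```python
import math
from collections import Counter, deque


def pads_dict_to_list(pads_by_key):
    """Convert {key: (x, y, r)} into a list of (x, y, r) tuples, ordered by key."""
    return [pads_by_key[key] for key in sorted(pads_by_key)]


def pad_index_at(tip, pads):
    """Return the index of the first pad whose circle contains the tip, else None."""
    tip_x, tip_y = tip
    for index, (x, y, r) in enumerate(pads):
        if math.hypot(tip_x - x, tip_y - y) <= r:
            return index
    return None


class PadBuffer:
    """Keeps the predicted pad for the most recent frames (20 by default)."""

    def __init__(self, size=20):
        # A deque with maxlen drops the oldest frame automatically.
        self._frames = deque(maxlen=size)

    def __len__(self):
        return len(self._frames)

    def push(self, pad_index):
        self._frames.append(pad_index)

    def most_common_pad(self):
        # Ignore frames where the tip was not over any pad.
        hits = [p for p in self._frames if p is not None]
        if not hits:
            return None
        return Counter(hits).most_common(1)[0][0]
```

The tracking thread would call `push(pad_index_at(tip, pads))` once per frame, and the controller would consult the buffer only when an impact is detected.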

Outside of this integration process, I also started to think about and work on how we would handle two drumsticks as opposed to one, which we already had working. The key realization was that we need two CV threads to continuously and independently track the location of each drumstick. We would also need two BLE threads, one for each drumstick’s acceleration transmission. Lastly, we would need two threads running the system controller code, which handles reading in acceleration data, identifying which drum pad the stick was on during an impact, and triggering the audio playback. Though we haven’t yet tested the system with two drumsticks, the system controller is now set up so that once we do want to test it, we can easily spawn the corresponding threads for the second drumstick. This involved rewriting the functions to case on the color of each drumstick’s tip. This is primarily needed because the object tracking module needs to know which drumstick to track, but the color is also used in the BLE code to store acceleration data for each stick independently.
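The thread layout described above (one CV thread, one BLE thread, and one controller thread per stick, each keyed by tip color) might be set up along these lines. This is a sketch under assumptions: `start_stick_threads`, the role names, and the example colors in the usage comment are hypothetical, and the worker functions stand in for our real tracking, BLE, and controller loops.

```python
import threading


def start_stick_threads(colors, workers, stop_event):
    """Start one daemon thread per (role, color) pair and return the threads.

    colors: iterable of tip colors, one per drumstick.
    workers: dict mapping a role name to a function taking (color, stop_event).
    stop_event: threading.Event the workers can poll to know when to exit.
    """
    threads = []
    for color in colors:
        for role, worker in workers.items():
            thread = threading.Thread(
                target=worker,
                args=(color, stop_event),
                name=f"{role}-{color}",
                daemon=True,
            )
            thread.start()
            threads.append(thread)
    return threads


# Example usage with placeholder worker functions (names are hypothetical):
#   stop = threading.Event()
#   threads = start_stick_threads(
#       ("red", "green"),
#       {"cv": track_tip, "ble": read_accel, "controller": run_controller},
#       stop,
#   )
#   ...
#   stop.set()  # ask all workers to finish at the end of the session
```

Passing the color into every worker keeps each stick’s tracking state and acceleration data separate, so adding the second stick means adding one more color rather than duplicating code.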

Lastly, I spent some time carefully cutting out the drum pads from the rubber sheets at diameters of 15.23, 17.78, 20.32, and 22.86 cm so we could proceed with testing. Below is an image of the whole setup including the camera stand, webcam, drum pads, and drumsticks.

We are definitely on schedule and hope to continue progressing at this rate for the next few weeks. Next week, I’d like to do two things: 1.) refine the overall system, making sure we have accurate acceleration thresholds and that the correct sounds are assigned to the correct drum pads from the webapp, and 2.) test the system with two drumsticks at once. The only worry we have is that since we’ll have two ESP32s transmitting concurrently, they could interfere with one another and cause packet loss.