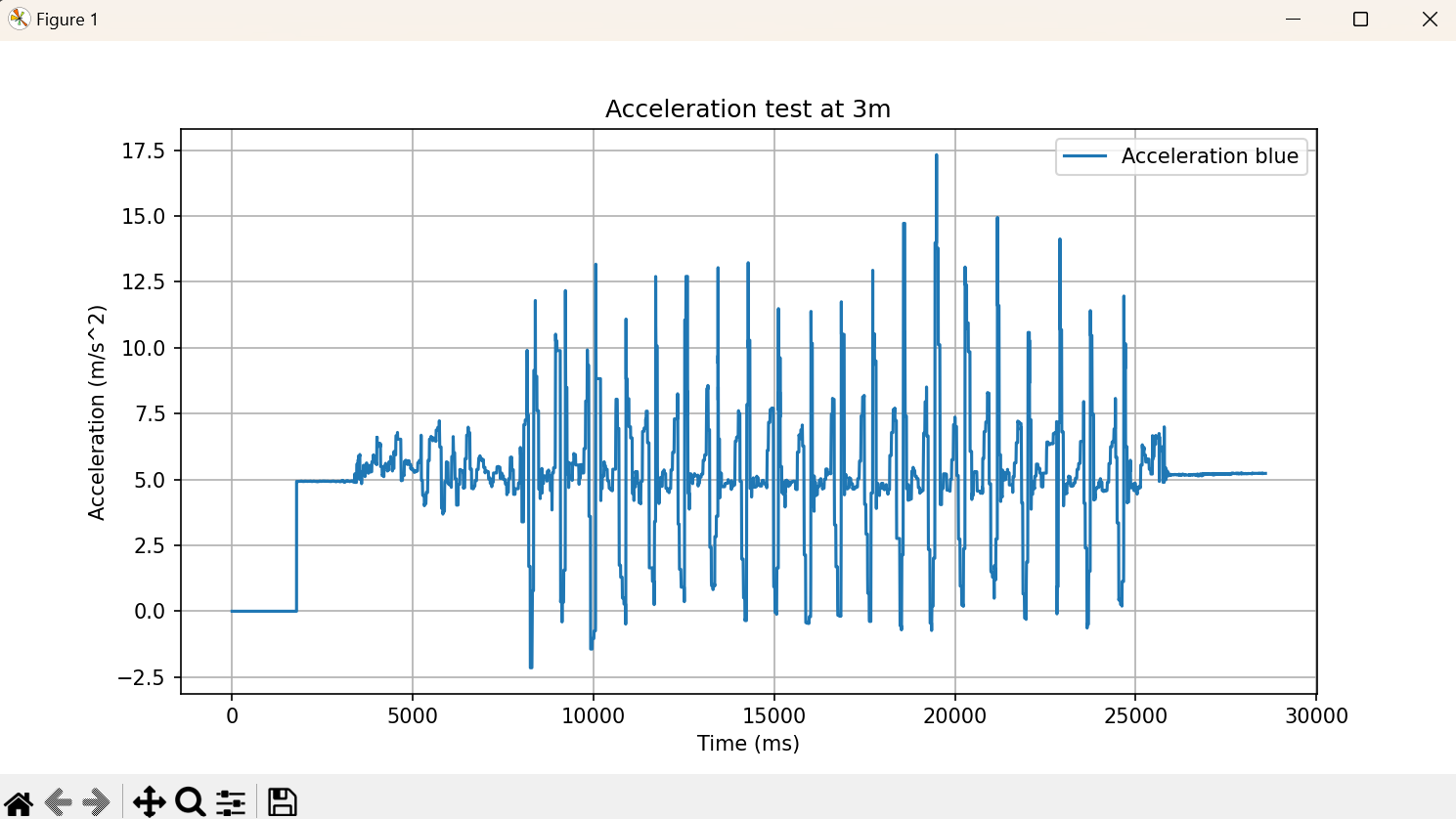

This week I worked on determining better threshold values for what qualifies as an impact. Prior to this week we had a functional system where the accelerometer/ESP32 system would successfully relay x, y, z acceleration data to the user’s laptop and trigger a sound to play. However, we would essentially treat any acceleration as an impact and thus trigger a sound. We configured our accelerometer so that its Y-axis is parallel to the drumstick, its X-axis is parallel to the floor and perpendicular to the drumstick (left-right motion), and its Z-axis is perpendicular to both the floor and the drumstick (up-down motion). This is shown in the image below:

Thus, we have two possible ways to retrieve relevant accelerometer data: 1.) reading just the Z-axis acceleration, and 2.) reading the average of the X and Z-axis acceleration, since these are the two axes with relevant motion. So on top of finding better threshold values for what constitutes an impact, I needed to determine which axis to use when reading and storing the acceleration. To determine both of these factors, I mounted the accelerometer/ESP32 system to the drumstick as shown in the image above and ran two test sequences. In the first I used just the Z-axis acceleration values, and in the second I used the average of the X and Z-axis acceleration. For each test sequence, I recorded 25 clear impacts on a rubber pad on my table. Before starting the tests, I performed a few sample impacts so I could see what the readings for an impact resembled. I noted that when an impact occurs (the stick hits the pad), the acceleration reading for that moment is relatively low. So for the purposes of these tests, an impact was identifiable as a long chain of constant accelerometer data (just holding the stick in the air), followed by an increase in acceleration, followed by a low acceleration reading.
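The two candidate readings described above can be written as small helper functions. This is only a sketch: the function names are hypothetical, and it assumes the raw signed X and Z values are averaged directly, which may differ from what our firmware or laptop code actually does.

```python
def z_only(x: float, y: float, z: float) -> float:
    """Option 1: use only the Z-axis (up-down) acceleration."""
    return z


def xz_average(x: float, y: float, z: float) -> float:
    """Option 2: average the X (left-right) and Z (up-down) axes.

    The Y-axis runs along the drumstick and is ignored in both options.
    Assumption: signed values are averaged as-is, with no absolute value.
    """
    return (x + z) / 2.0


# Hypothetical sample reading (x, y, z), not real sensor data.
sample = (1.0, 9.0, 3.0)
print(z_only(*sample), xz_average(*sample))
```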

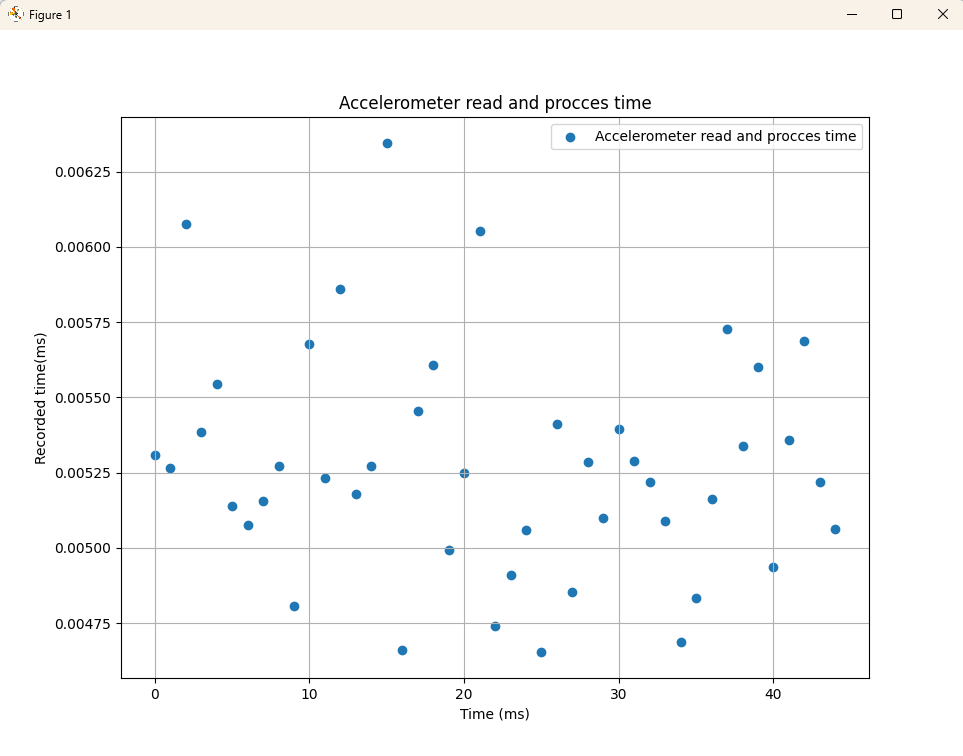

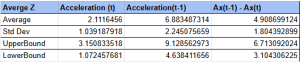

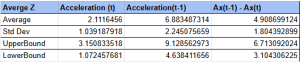

Once I established this, I started collecting samples, first for the test using just the Z-axis. I performed a series of hits, recording the 25 readings where I could clearly discern from the output data stream that an impact had occurred. Cases where an impact was not clearly identifiable from the data were discarded, and that sample was repeated. For each sample, I stored the acceleration at the impact, the acceleration just prior to the impact, and the difference between the two (i.e. A(t-1) – A(t)). I then determined the mean and standard deviation for the acceleration, the prior acceleration, and the difference between the two readings. Additionally, I calculated what I termed the upper bound (mean + 1 StdDev) and the lower bound (mean – 1 StdDev). The values are displayed below:

I repeated the same process for the second test, but this time using the average of the X and Z-axis acceleration. The results for this test sequence are shown below:
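For reference, the summary statistics used in both test sequences (mean, standard deviation, and the mean ± 1 StdDev bounds) can be computed with a short script along these lines. This is a sketch, not the exact procedure I used: the sample readings are made up for illustration, the `summarize` helper is hypothetical, and it assumes the sample standard deviation (n - 1 denominator).

```python
from statistics import mean, stdev


def summarize(values):
    """Return mean, standard deviation, and the mean +/- 1 StdDev bounds."""
    m = mean(values)
    s = stdev(values)  # assumption: sample standard deviation (n - 1)
    return {"mean": m, "std": s, "lower": m - s, "upper": m + s}


# Hypothetical readings, NOT the 25 recorded samples.
prior = [5.0, 6.0, 7.0, 6.0]   # A(t-1): reading just before the impact
impact = [2.0, 2.0, 3.0, 1.0]  # A(t): reading at the impact

# Difference as defined in the text: A(t-1) - A(t)
diff = [p - a for p, a in zip(prior, impact)]

stats = summarize(diff)
print(stats)
```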

As you can see, the values calculated from just the Z-axis acceleration are almost uniformly higher than those from the X,Z average. To determine the best threshold values to use, I then ran four more tests:

1.) I set the system to use just the Z-axis acceleration and detected an impact with the following condition:

if accel < 3.151 and accel > 1.072: play sound

Here I was just casing on the acceleration to detect an impact, using the upper and lower bound for Acceleration(t).

2.) I set the system to use just the Z-axis acceleration and detected an impact with the following condition:

if (prior_Accel - Accel) < 5.249 and (prior_Accel - Accel) > 2.105: play sound

Here, I was casing on the difference between the previous acceleration reading and the current acceleration reading, using the upper and lower bounds for Ax(t-1) – Ax(t).

3.) I set the system to use the average of the X and Z-axis accelerations and detected an impact with the following condition:

if accel < 2.401 and accel > 1.031: play sound

Here I was casing on just the current acceleration reading, using the upper and lower bounds for Acceleration(t).

4.) I set the system to use the average of the X and Z-axis accelerations and detected an impact with the following condition:

if (prior_Accel - Accel) < 5.249 and (prior_Accel - Accel) > 2.105: play sound

Here I cased on the difference of the prior acceleration minus the current acceleration and used the upper and lower bounds for Ax(t-1) – Ax(t).

After testing each configuration out, I determined two things:

1.) The thresholds taken using the average of the X and Z-axis accelerations resulted in a higher rate of correct impact detection than those using just the Z-axis acceleration, regardless of whether I cased on (prior_Accel – Accel) or just Accel.

2.) Using the difference between the previous acceleration reading and the current one resulted in better detection of impacts.

Thus, the thresholds we are now using are defined by the upper and lower bounds of the difference between the prior acceleration and the current acceleration (Ax(t-1) – Ax(t)), i.e. the condition listed in test 4.) above.
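The difference-based condition can be sketched as a small detector that walks a stream of readings. The bounds 2.105 and 5.249 come from the condition above; the function names and the example stream are hypothetical, and the real system would call its sound trigger instead of collecting indices.

```python
LOWER_DIFF = 2.105  # lower bound on prior_accel - accel (from the condition above)
UPPER_DIFF = 5.249  # upper bound on prior_accel - accel (from the condition above)


def is_impact(prior_accel, accel, lower=LOWER_DIFF, upper=UPPER_DIFF):
    """True when the drop from the previous reading falls inside the bounds."""
    diff = prior_accel - accel
    return lower < diff < upper


def detect_impacts(readings):
    """Return the indices of readings flagged as impacts."""
    hits = []
    for i in range(1, len(readings)):
        if is_impact(readings[i - 1], readings[i]):
            hits.append(i)
    return hits


# Hypothetical stream: steady readings, a spike, then a low reading.
stream = [1.0, 1.0, 1.1, 5.0, 1.5, 1.0, 1.0]
print(detect_impacts(stream))
```

Because the condition looks at the drop between consecutive readings rather than the reading itself, a stick held steady or moved slowly through the air produces small differences and does not trigger a sound.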

While this system is now far better at not playing sounds when the user is just moving the drumstick about in the air, it still needs to be further tuned to detect impacts. From my experience testing the system out, it seems that about 75% of the instances when I hit the drum pad correctly register as impacts, while ~25% do not. We will need to conduct further tests as well as some trial and error in order to find better thresholds that more accurately detect impacts.
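One way to make the further tuning less dependent on feel would be to score candidate bounds against labeled difference values. The sketch below assumes we had recorded (prior_Accel – Accel) values for known hits and for known non-hits (air motion); the `evaluate` helper and all of the data values are made up for illustration and are not measurements.

```python
def evaluate(bounds, impact_diffs, non_impact_diffs):
    """Return (detection rate, false trigger rate) for a (lower, upper) pair."""
    lower, upper = bounds
    detected = sum(lower < d < upper for d in impact_diffs)
    false_triggers = sum(lower < d < upper for d in non_impact_diffs)
    return detected / len(impact_diffs), false_triggers / len(non_impact_diffs)


# Hypothetical labeled differences, NOT recorded data.
impact_diffs = [2.5, 3.0, 4.0, 6.0]       # known hits on the pad
non_impact_diffs = [0.1, 0.5, 2.2, -1.0]  # stick moving in the air

rate, false_rate = evaluate((2.105, 5.249), impact_diffs, non_impact_diffs)
print(rate, false_rate)
```

Running the same function over several candidate bound pairs would show how widening or narrowing the window trades missed hits against false triggers.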

My progress is on schedule this week. In the coming week, I want to focus on getting better threshold values for the accelerometer so impacts are detected more accurately. Additionally, I want to work on refining the integration of all of our systems. As I said last week, we now have a functional system for one drumstick. However, we recently constructed the second drumstick and need to make sure that the additional three threads that need to run concurrently (one controller thread, one BLE thread, and one CV thread) work correctly and do not interfere with one another. Making sure this process goes smoothly will be a top priority in the coming weeks.