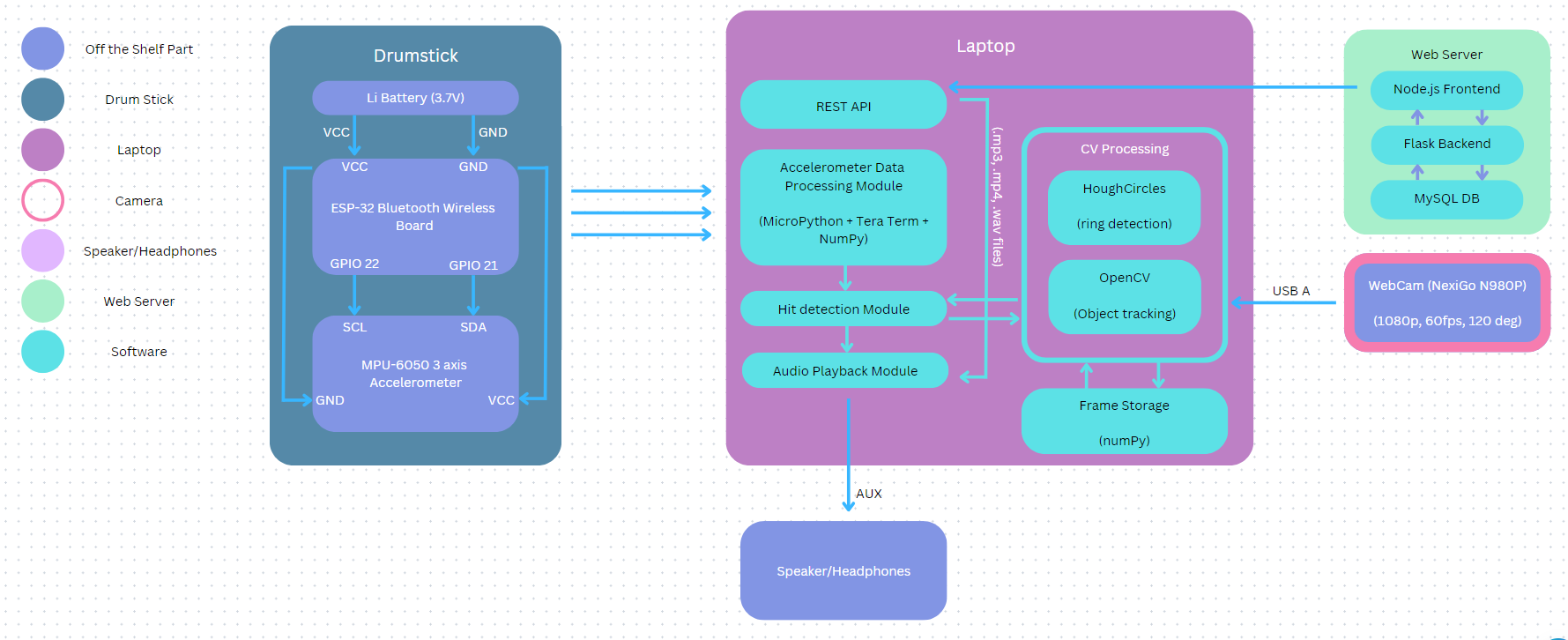

Following our final presentation on Monday, I spent this week working on integration, making minor fixes throughout the system, and working with Elliot to resolve our lag issues when using two drumsticks. I also updated our frontend to reflect the way our system is actually intended to be used. Lastly, I modified the object detection code so that swinging motions outside of the drum pads no longer trigger an audio event. I’ll go over each of my contributions this week below:

1.) I implemented a sorting system that correctly maps the detected drum pads to their corresponding sounds via the detected radius. The webapp allows the user to configure their sounds based on the diameter of the drum pads, so it’s important that when the drum pads are detected at the start of a playing session, they are correctly assigned to the loaded sounds instead of being paired arbitrarily based on the order in which they are encountered. This entailed writing a helper function that scales and orders the drums based on their diameters, organizing them in order of ascending radius.
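As a rough illustration of the ordering step described above, here is a minimal sketch, not our actual helper. The names `DetectedPad` and `assign_sounds_by_radius` are hypothetical, the scaling step is omitted, and the sketch assumes the sounds list is already ordered from the smallest configured pad to the largest.

```python
from typing import List, NamedTuple, Tuple


class DetectedPad(NamedTuple):
    """Hypothetical container for one detected drum pad (pixel units)."""
    x: int
    y: int
    radius: int


def assign_sounds_by_radius(
    pads: List[DetectedPad], sounds: List[str]
) -> List[Tuple[DetectedPad, str]]:
    """Pair pads with sounds in order of ascending radius.

    Assumes `sounds` is ordered from the smallest configured pad to the
    largest, so the pairing no longer depends on detection order.
    """
    if len(pads) != len(sounds):
        raise ValueError("expected exactly one sound per detected pad")
    ordered = sorted(pads, key=lambda pad: pad.radius)
    return list(zip(ordered, sounds))


# Pads arrive in arbitrary detection order; the pairing is by size.
example_pads = [
    DetectedPad(300, 200, 80),
    DetectedPad(100, 200, 40),
    DetectedPad(500, 200, 60),
]
example_pairs = assign_sounds_by_radius(
    example_pads, ["small.wav", "medium.wav", "large.wav"]
)
```

Sorting both sides by the same key is what makes the pairing deterministic: whichever pad the detector happens to find first, the smallest pad always receives the first sound.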

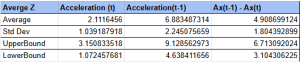

2.) Elliot and I spent a considerable amount of time on Wednesday trying to resolve our two-stick lag issues. He had written some new code to be flashed to the drumsticks that should have eliminated the lag by fixing an error in our initial code, where we were at times sending more data than the window could handle. For example, we were trying to send readings every millisecond but only had a window of 20 ms, which resulted in received notifications being queued and processed after some delay. We had some luck with this new implementation, but after testing the system multiple times, we realized that the performance seemed to deteriorate over time. We now suspect that this is a result of diminishing power in our battery cells, and we plan on using fresh batteries to see if that resolves the issue. Furthermore, during these tests I realized that our range for what constitutes an impact was too narrow, which oftentimes resulted in a perceived lag because audio wasn’t being triggered following an impact. To resolve this, we tested a few new values, settling on a range of 0.3 m/s^2 to 20 m/s^2 as a valid impact.
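The widened impact range can be expressed as a simple bounds check. This is only a sketch: the constant and function names are hypothetical, and it assumes the check is applied to a single acceleration reading in m/s^2 with both bounds inclusive.

```python
# Bounds taken from the range we settled on; names are hypothetical.
MIN_IMPACT_ACCEL = 0.3   # m/s^2
MAX_IMPACT_ACCEL = 20.0  # m/s^2


def is_valid_impact(accel: float) -> bool:
    """Return True if a reading falls inside the accepted impact range.

    Assumes inclusive bounds; readings outside the range are ignored
    and do not trigger audio.
    """
    return MIN_IMPACT_ACCEL <= accel <= MAX_IMPACT_ACCEL
```

With a range that is too narrow, genuine hits fall outside the bounds and are silently dropped, which from the player's side is indistinguishable from lag.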

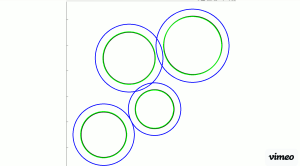

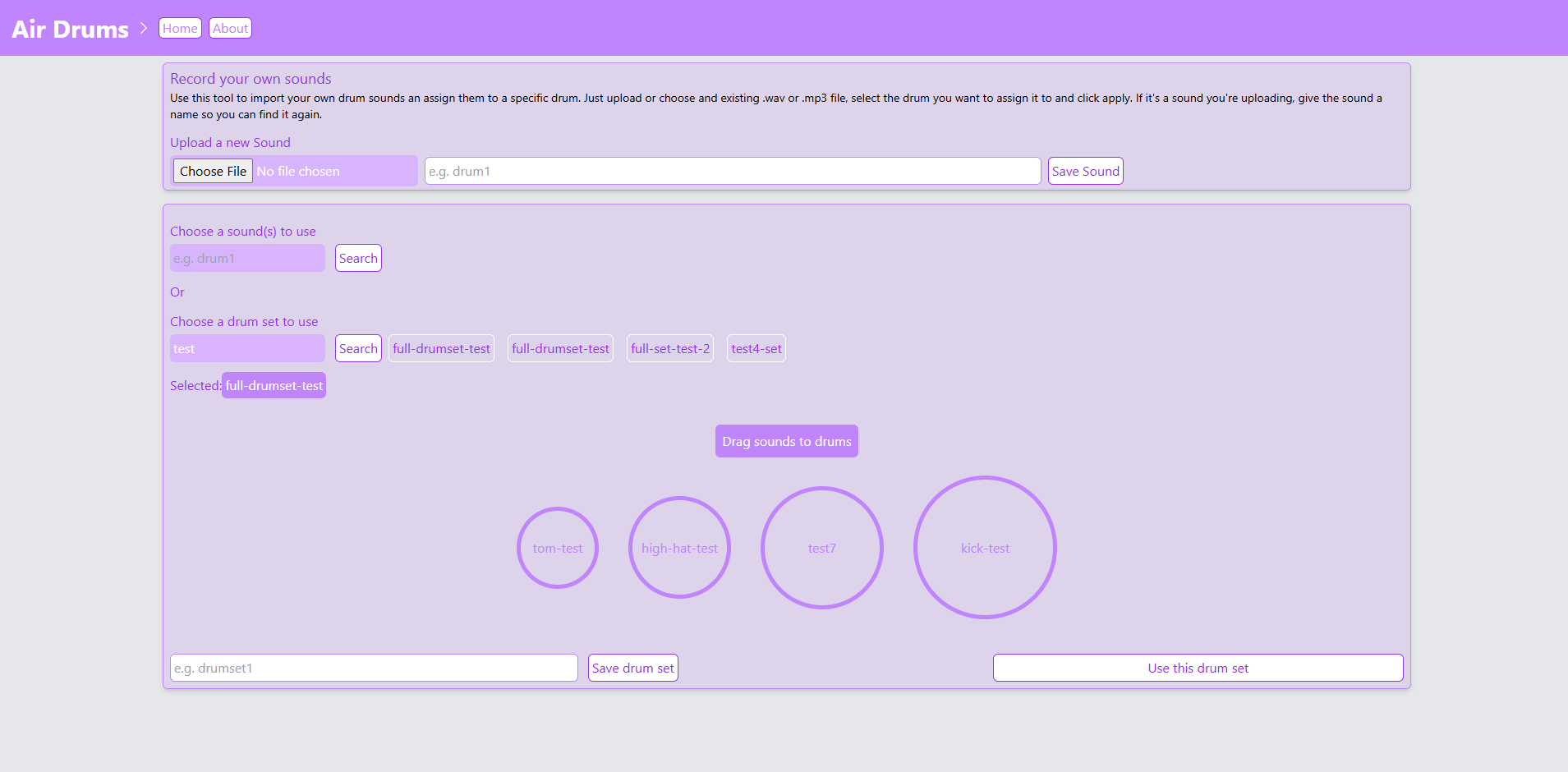

3.) Our frontend had a number of relics from our initial design which needed to be either removed or reworked. The two main issues were that the drum pads were being differentiated via color as opposed to radius, and that we still had a button saying “identify my drum set” on the webapp. The first issue was resolved by changing the radii of the circles representing the drum pads on the website and ordering them in a way that corresponds with how the API endpoint orders sounds and detected drum pads at the start of the session. The second issue was easily resolved by removing the “identify my drum set” button, which triggered the endpoint for starting the drum pad detection script. The code housed in this endpoint is still used, but instead of being triggered via the webapp, the script now runs as soon as a playing session starts. I thought this design made more sense and made using our system much simpler by eliminating the need to switch back and forth between the controller system and the webapp during a playing session. Below is the current updated frontend design:

4.) Prior to this week, our object tracking system had a major flaw which had gone unnoticed: when the script identified that the drum stick tip was not within the bounds of one of the drum rings, it simply continued to the next iteration of the frame processing loop without updating our green/blue drum stick location prediction variables. This resulted in the following behavior:

a.) impact occurs in drum pad 3.

b.) prediction variable is updated to index 3 and sound for drum pad 3 plays.

c.) impact occurs outside of any drum pad.

d.) prediction variable is not updated with a -1 value, so the system plays the last known drum pad’s corresponding sound, i.e. sound 3.

This is an obvious flaw that causes the system to register invalid impacts as valid impacts and play the previously triggered sound. To remedy this, I changed the CV script to update the prediction variables with the -1 (not in a drum pad) value, and updated the sound player module to only play a sound if the index provided is in the range [0,3] (i.e. one of the valid drum pad indices). Our system now only plays a sound when the user hits one of the drum pads, playing nothing if the drum stick is swung or hit outside of a drum pad.
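The fix boils down to two small pieces of logic, sketched below under some assumptions: pads are represented as `(center_x, center_y, radius)` tuples, and the function names `locate_pad` and `sound_for_impact` are hypothetical rather than taken from our CV script or sound player module.

```python
from typing import List, Optional, Tuple

NOT_IN_PAD = -1                # sentinel: stick tip is outside every pad
VALID_PAD_INDICES = range(4)   # indices 0..3, one per drum pad


def locate_pad(tip: Tuple[int, int], pads: List[Tuple[int, int, int]]) -> int:
    """Return the index of the pad containing the tip, or NOT_IN_PAD.

    Always returning a value (instead of skipping the frame) means the
    prediction variable can never hold a stale pad index.
    """
    tip_x, tip_y = tip
    for index, (center_x, center_y, radius) in enumerate(pads):
        if (tip_x - center_x) ** 2 + (tip_y - center_y) ** 2 <= radius ** 2:
            return index
    return NOT_IN_PAD


def sound_for_impact(pad_index: int, sounds: List[str]) -> Optional[str]:
    """Return the sound to play, or None if the index is not a valid pad."""
    if pad_index in VALID_PAD_INDICES and pad_index < len(sounds):
        return sounds[pad_index]
    return None


example_pads = [(100, 100, 40), (250, 100, 50), (400, 100, 60), (550, 100, 70)]
example_sounds = ["pad0.wav", "pad1.wav", "pad2.wav", "pad3.wav"]
```

Checking the index in the sound player as well as writing -1 in the CV script is deliberately redundant: either guard alone would stop the stale sound from playing, and together they keep the two modules safe independently of each other.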

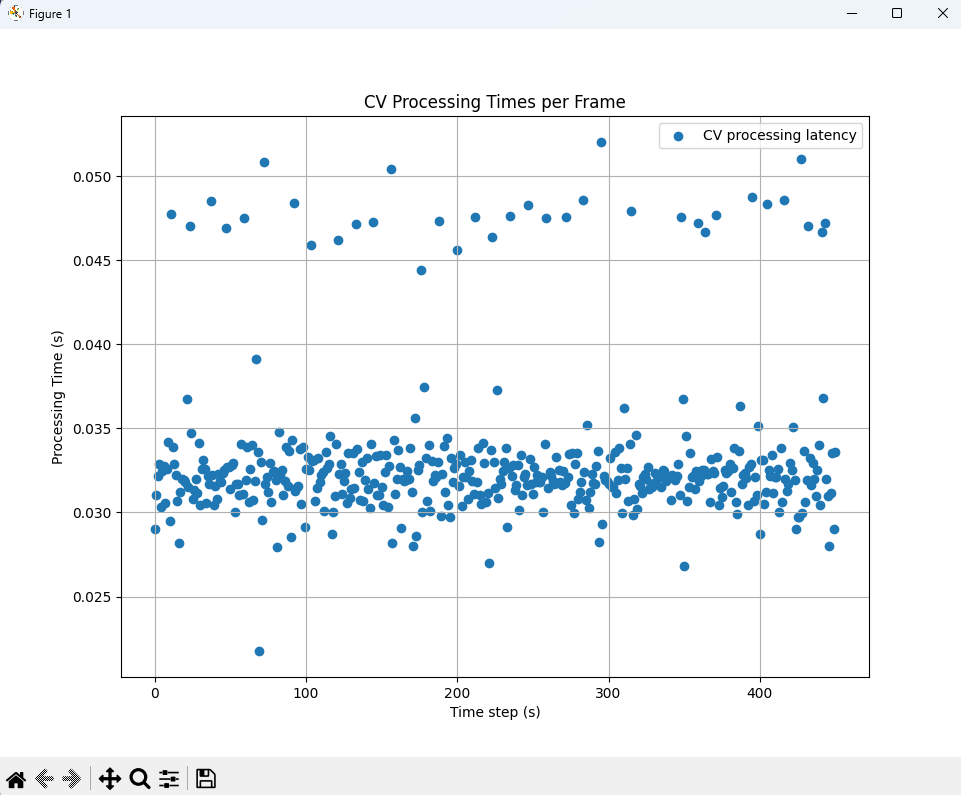

I also started working on our poster, which needs to be ready a little earlier than anticipated given our invitation to the Tech Spark Expo. This entails collecting all our graphs/test metrics and figuring out how to present them in a way that conveys meaning to the audience given our use case. For instance, I needed to figure out how to explain that a graph showing 20 accelerometer spikes verifies our BLE reliability within a 3 m radius of our receiving laptop.

Overall, I am on schedule and plan on spending the coming weekend/week refining our system and working on the poster, video, and final report. I expect to have a final version of the poster done by Monday so that we can get it printed in time for the Expo on Wednesday.