For this week, our team worked mainly on the writeup for our design report to fully plan out our final product. We took the time to tackle a few edge cases from our initial blueprint, specifically focusing on the more nuanced details of our design requirements and implementation strategies so that we can better explain our architecture to any reader of the design report. Our schedule remains the same, with Ben developing the web application, Elliot handling the Bluetooth data processing, and Belle covering the computer vision computation onboard the host; we chose, however, to split this week’s stage of our design process differently, with each member focusing on a specific section of the report. We delegated the introduction and requirements to Ben, the architecture and implementation to Elliot, and the testing and tradeoffs to Belle. We decided that this would result in a more well-rounded final product by giving each team member an opportunity to view the project from a holistic perspective before we begin to integrate our modules together. Having each team member dive into other components of the block diagram brought up a few potential concerns we hadn’t considered prior, each of which we then created a mitigation plan for. Some details we worked out this week included the following:

1.) 30mm scalability requirement: As outlined in our proposal and design presentations, one of our use case requirements is to provide the user a 30mm error zone to account for the rubber drumheads deviating from their original position upon impact from the drumsticks. The design requirement we mapped to it for traceability involved deriving a fixed scaling factor to apply to the gathered radii upon detection with the HoughCircles library. We realized, however, that a single scaling factor across all four drums would not achieve a constant 30mm margin for each drum (as they differ in size), and that the relative diameters in pixels between the drumheads would not be sufficient to determine a scaling factor (an absolute metric is required if our solution is to be applicable for varying camera heights). Hence, our new implementation is to store the absolute sizes of each ring within an internal array and scale based on these known sizes. We can then detect the rings based on their relative sizes, map them to their stored dimensions, and apply a simple separate scaling factor to the radii accordingly. This will prove to be a less error-prone approach as opposed to a purely relative solution where we may have encountered issues if the user did not place all rings in view of the camera, or if the camera was too far from the table to detect small variances in the diameters.

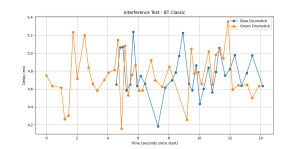

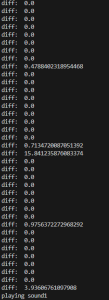

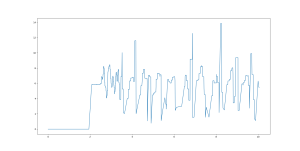

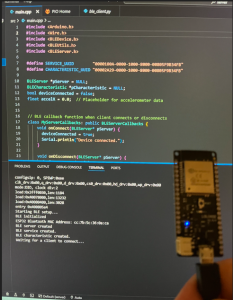

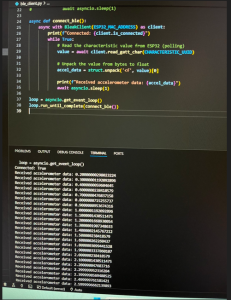

2.) Reliability of BLE packet transmission: Another one of our use case requirements was to ensure a reliable connection within 3m of the laptop, for which we decided to aim for a packet loss of under 2%. Given our original research on the Bluetooth stack and the specifications for the ESP32’s performance, we figured that 2% would be a very reasonable goal. With the second microcontroller also transmitting accelerometer data, however, we run the risk of interference and packet loss, for which we had not developed a mitigation plan. This week, Ben searched for options to lower the packet loss in the event that we do not meet this requirement, eventually landing on the solution of raising the connection interval. Elliot then explored the firmware libraries available in Arduino and confirmed our ability to increase the connection interval with the host device at the cost of over-the-air latency.

3.) Audio output delay: One element we completely overlooked was the main thread’s method of playing audio files, for which we chose to use the pygame mixer. This week, however, our team discovered that this library introduces an unacceptable amount of output latency–we decided to pivot to the use of PyAudio, which is optimized with smaller audio buffers to achieve a much lower processing delay.

4.) Camera specifications: This week, while exploring strategies to most efficiently deploy our computer vision model, we evaluated the effect that a 120 degree field of view camera would have on our CV calculations. We found that wide angle cameras could potentially introduce a form of optical distortion, resulting in stretched pixels and slightly elliptical drumheads, and therefore less precise detection altogether under our framework. We also came to a decision regarding our sliding window, where we chose to now take 0.33 seconds worth of frames before the relevant accelerometer timestamp, since anything higher could lead to potentially false readings. Given these new requirements, we set out to find a high framerate, approximately 90 degree FOV camera, for which we plan to make an order early next week. Below is a diagram we created to help us map out how we’ll use this new field of view:

Next week we plan to stay on schedule and begin working with the physical components we ordered. By Friday, we intend to have a complete report for describing our requirements, strategies, and conscious design decisions in creating our CV-based drumset.