This week, I mostly worked with Ben and Elliot to continue integrating & fine-tuning various components of DrumLite to prepare for the Interim Demo happening this upcoming week.

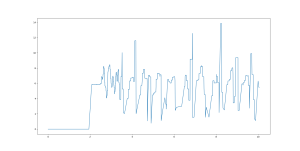

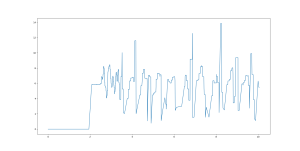

In particular, my main contribution focused on fine-tuning the accelerometer readings. To refine our accelerometer threshold values, we used Matplotlib to plot accelerometer data continuously, in real time, during testing. In these plots, the x-axis represented time, and the y-axis represented the average of the x and z components of the accelerometer output. This visualization helped us identify a distinct pattern: each drumstick hit produced a noticeable upward spike, followed by a downward spike in the accelerometer readings (see the sample output screenshot, which was captured after hitting a machined drumstick on a drum pad four times).
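
As a rough illustration of the plotting setup described above, the following is a minimal sketch rather than our actual script. The `read_sample` callback (returning one `(x, y, z)` reading), the window size, and the function names are all hypothetical; only the plotted quantity (the average of the x and z components against time) comes from the description above.

```python
import time
from collections import deque


def plotted_value(x, z):
    """Value on the y-axis: the average of the x and z accelerometer components."""
    return (x + z) / 2.0


def live_plot(read_sample, duration_s=5.0, window=500):
    """Plot plotted_value over time while samples arrive.

    read_sample is a hypothetical callback returning one (x, y, z) reading.
    """
    # Imported here so plotted_value can be used without Matplotlib installed.
    import matplotlib.pyplot as plt

    times = deque(maxlen=window)   # keep only the most recent samples
    values = deque(maxlen=window)

    plt.ion()                      # interactive mode: redraw without blocking
    fig, ax = plt.subplots()
    (line,) = ax.plot([], [])
    ax.set_xlabel("time (s)")
    ax.set_ylabel("mean of x and z acceleration")

    start = time.monotonic()
    while time.monotonic() - start < duration_s:
        x, _, z = read_sample()
        times.append(time.monotonic() - start)
        values.append(plotted_value(x, z))
        line.set_data(list(times), list(values))
        ax.relim()                 # recompute data limits for the new points
        ax.autoscale_view()
        plt.pause(0.001)           # let the GUI event loop redraw

    plt.ioff()
    plt.show()
```

Calling `live_plot` with a function that reads from the accelerometer would show each hit as an upward spike followed by a downward one, which is the pattern we used to pick thresholds.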

Initially, we attempted to detect these hits by capturing the “high” value, followed by the “low” value. However, upon further analysis, we determined that simply calculating the difference between the two values would be sufficient for reliable detection. To implement this, we introduced a short delay of 1 ms between samples, which allowed us to measure the high-to-low difference consistently. Additionally, we decided to incorporate the sign of the z-component of the accelerometer output rather than taking its absolute value. This helped us better account for behaviors such as upward flicks of the wrist, which were sometimes mistakenly identified as downward drumstick hits (and were therefore incorrectly triggering a drum sound). As a result, we were able to filter out similar movements that weren’t downward drumstick strikes onto the drum pad or another solid surface, further improving the precision and reliability of our hit detection logic.
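
The difference-based check can be sketched roughly as follows. This is an illustrative sketch under several assumptions, not our production code: the threshold value, the `read_sample` callback, and the convention that a downward hit appears as a high reading followed by a low one are all assumed here for the sake of the example.

```python
import time


def signal(sample):
    """Average of the x and signed z components of one (x, y, z) reading."""
    x, _, z = sample
    return (x + z) / 2.0


def read_pair(read_sample, delay_s=0.001):
    """Take two readings about 1 ms apart (read_sample is a hypothetical callback)."""
    first = read_sample()
    time.sleep(delay_s)
    second = read_sample()
    return first, second


def is_hit(first, second, threshold):
    """Flag a hit when the signal drops by at least `threshold` between readings.

    Because z keeps its sign, a mirrored motion (low followed by high, as
    assumed here for an upward flick) gives a negative drop and is ignored.
    """
    drop = signal(first) - signal(second)
    return drop >= threshold


def detect_hits(samples, threshold):
    """Return the indices in a recorded sequence where a hit is flagged."""
    return [
        i
        for i in range(1, len(samples))
        if is_hit(samples[i - 1], samples[i], threshold)
    ]
```

For example, with an assumed threshold of `6.0`, the recorded sequence `[(0, 0, 0), (2, 0, 8), (-2, 0, -8), (0, 0, 0)]` is flagged once, at the high-to-low transition, while the mirrored sequence is not flagged at all.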

To address lighting inconsistencies from previous tests, we acquired another lamp, ensuring the testing desk is now fully illuminated. This adjustment should significantly improve the consistency of our drumstick tip detection by reducing the impact of shadows and uneven lighting. While we are still in the process of testing this 2-lamp setup, I currently believe using a YOLO/SSD model for object detection is unnecessary. These models are great for complex environments with many objects, but our current setup is simple, with (mostly) controlled lighting and a single kind of object to track, so it does not need that complexity. Also, implementing YOLO/SSD models would introduce significant computational overhead, which we aim to avoid given our sub-100ms latency use case requirement. Therefore, I would prefer for this to remain a last-resort solution to the lighting issue.

According to our timeline, we should currently be fine-tuning and integrating the different project components. Since that is what we are doing, and we are essentially done setting the accelerometer threshold values, we are on track. At the moment, picking a specific HSV value for each drumstick is cumbersome and unpredictable, especially in areas with a large amount of ambient lighting. Therefore, next week, I aim to further test drumstick tip detection under varying lighting conditions and try to simplify this process, as I believe it is the least solid aspect of our implementation at the moment.