In the days after the design presentation, I fell slightly behind the schedule I set for myself last week, which was to implement the infrastructure of our audio playback module. The main reason was an unexpected amount of work in my three other technical classes, all of which have exams this week. I was not able to build a usable interface for triggering a sequence of sounds and delays. However, I did spend a significant amount of time researching which libraries to use and investigating audio playback latency. I now know how to approach our playback module and can anticipate the problems we are likely to encounter.

We previously planned to use PyGame as the main way to initiate, control, and sequence audio playback. However, my investigation showed that PyGame's playback latency can easily reach 20ms, and even up to 30ms, for a given sound. Since we want our overall system latency to stay below 100ms (ideally closer to 60ms), that is too large a share of our budget. This was an oversight: we had assumed audio playback would be one of the more trivial parts of the project. After further research, PyAudio appears to be a far better library for audio playback, since it offers much finer control over the sampling rate and the buffer size (in samples). The issue with PyGame was that it used a buffer size of 4096 samples. Since playback latency depends heavily on buffer latency, a buffer this large introduces latency we can't afford. Buffer latency is calculated as follows:

Buffer Latency (seconds) = (Buffer size {samples}) / (sampling rate {samples per second})

At the standard audio sampling rate of 44.1kHz, this works out to 92.88ms of buffer latency alone, which would consume nearly all of the latency we can afford. By instead using a buffer of 128 samples at the same sampling rate (changing the sampling rate could add sample rate conversion, or SRC, latency), we could bring buffer latency down to just 2.9ms. A smaller buffer means fewer audio frames are queued ahead of playback. In typical playback scenarios this can cause audible gaps (buffer underruns) when the next block of frames is not ready in time, but since our drum samples are very short, a small buffer size shouldn't have much of a negative effect. The other major source of audio playback latency is the OS and driver. This will be harder to mitigate, but a low-latency driver such as ASIO (on Windows) may help bring it down too, since it lets us bypass the default Windows audio stack and communicate directly with the sound card. All in all, sub-10ms audio playback latency still seems achievable, but it will take more work than anticipated.
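The buffer-latency arithmetic above can be checked with a small Python helper (the function name is ours, for illustration only). It covers the buffer alone; OS and driver latency come on top of it.

```python
def buffer_latency_ms(buffer_size_samples: int, sample_rate_hz: float) -> float:
    """Buffer latency = buffer size / sampling rate, expressed in milliseconds."""
    return 1000.0 * buffer_size_samples / sample_rate_hz


for size in (4096, 128):  # PyGame's buffer vs. the proposed PyAudio buffer
    print(f"{size:>4} samples at 44.1 kHz -> {buffer_latency_ms(size, 44_100):.2f} ms")
# 4096 samples at 44.1 kHz -> 92.88 ms
#  128 samples at 44.1 kHz -> 2.90 ms
```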

Outside of my research into audio playback, I worked out how we will apply the 30mm margin around each detected ring. We plan to store the actual radius of each drum ring in mm. Our circle detection algorithm (either OpenCV's cv2.HoughCircles or contour detection) returns each ring's radius in pixels, from which we compute a scaling factor = r_px / r_mm. We can then apply the 30mm margin by adding it to the ring's radius in mm and multiplying the result by the scaling factor to get the adjusted radius in pixels.

i.e. adjusted radius (px) = (r_mm + 30mm) * (scaling factor)

This allows us to dynamically add the pixel equivalent of 30mm to each drum's radius, regardless of the camera's height and therefore of how large the rings appear in the image.
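A minimal sketch of the margin calculation above, assuming the detector gives each ring's centre and radius in pixels (cv2.HoughCircles, for example, returns an (x, y, radius) triple per circle). DetectedRing and its fields are hypothetical names, and contains() is an illustrative hit test rather than something already in our code.

```python
import math
from dataclasses import dataclass

MARGIN_MM = 30.0  # margin added around every ring


@dataclass
class DetectedRing:
    """One ring as seen by the camera (hypothetical structure for illustration)."""
    cx_px: float  # ring centre in image pixels
    cy_px: float
    r_px: float   # radius reported by the circle detector, in pixels
    r_mm: float   # stored physical radius of this ring, in mm

    @property
    def scale(self) -> float:
        """Scaling factor in pixels per mm: r_px / r_mm."""
        return self.r_px / self.r_mm

    def adjusted_radius_px(self, margin_mm: float = MARGIN_MM) -> float:
        """(r_mm + margin_mm) * scale: the ring's radius plus margin, in pixels."""
        return (self.r_mm + margin_mm) * self.scale

    def contains(self, x: float, y: float, margin_mm: float = MARGIN_MM) -> bool:
        """True if pixel (x, y) lies within the ring or its margin."""
        return math.hypot(x - self.cx_px, y - self.cy_px) <= self.adjusted_radius_px(margin_mm)


near = DetectedRing(cx_px=400, cy_px=300, r_px=300, r_mm=150)  # camera low
far = DetectedRing(cx_px=400, cy_px=300, r_px=150, r_mm=150)   # camera higher
print(near.adjusted_radius_px(), far.adjusted_radius_px())  # 360.0 180.0
```

Raising the camera so the same 150mm ring appears at 150px instead of 300px halves the scale, and the margin shrinks from 60px to 30px accordingly, so it always corresponds to 30mm on the physical ring.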

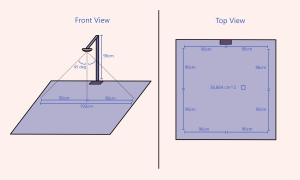

I also spent some time working out the maximum drum set area we can cover for a given camera height and lens angle, and made a graphic to demonstrate this. Using a lens with a 90-degree field of view, we have a maximum achievable layout of 36,864 cm², which is 35% larger than a standard drum set. Since our project is meant to make drumming portable and versatile, it is important that the layout can both shrink to a very small footprint and expand to at least the size of a real drum set.
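The coverage calculation behind this can be sketched as follows. It assumes the camera points straight down and that the quoted 90 degrees is the full field of view along both image axes (a square view). The 96cm camera height is hypothetical, chosen only because it reproduces the 36,864 cm² figure (192cm × 192cm); the real height, and a real camera's usually non-square field of view, may differ.

```python
import math


def surface_side_cm(height_cm: float, fov_deg: float) -> float:
    """Width of surface seen by a downward-facing camera: 2 * h * tan(fov / 2)."""
    return 2 * height_cm * math.tan(math.radians(fov_deg) / 2)


def max_layout_area_cm2(height_cm: float, fov_deg: float) -> float:
    """Square playing area, assuming the same field of view on both axes."""
    side = surface_side_cm(height_cm, fov_deg)
    return side * side


def min_height_cm(side_cm: float, fov_deg: float) -> float:
    """Lowest camera height whose view still spans side_cm of surface."""
    return side_cm / (2 * math.tan(math.radians(fov_deg) / 2))


HEIGHT_CM = 96  # hypothetical camera height, not a measured value
print(round(max_layout_area_cm2(HEIGHT_CM, 90)))  # 36864
```

The inverse helper answers the practical setup question: how high the camera must be mounted to see a layout of a given width.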

In the coming week, I plan to start actually implementing our audio playback module using PyAudio. As mentioned above, this will be significantly more involved than we previously thought. Ideally, by the end of the week I'll have a module capable of audio playback with under 10ms of latency, which will involve installing and learning how to use PyAudio and, most likely, ASIO to reduce driver latency. As I also mentioned at the start of this report, this coming week is very packed for me, and I will need to dedicate a lot of time to preparing for my three exams. However, I am already planning to spend a considerable amount of time over Fall break catching up on my schedule and making headway both on audio playback and on integrating it with our existing object detection code, so that when a red dot is detected in a given ring, the corresponding sound plays.
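A minimal sketch of the PyAudio side of this plan, assuming mono 16-bit WAV drum samples recorded at 44.1 kHz (so no sample rate conversion is needed) and a little-endian machine. It keeps one output stream open with a 128-frame buffer and uses PyAudio's callback mode, so overlapping hits are mixed in software instead of each hit waiting for the previous one. DrumMixer, load_wav and open_stream are hypothetical names, not existing project code; the detection loop would call mixer.trigger(...) with the drum whose ring contains the red dot.

```python
import array
import threading
import wave

RATE = 44100             # standard rate, so no sample rate conversion is needed
FRAMES_PER_BUFFER = 128  # 128 / 44100 ≈ 2.9 ms of buffer latency


def load_wav(path):
    """Return raw PCM bytes, assuming a mono 16-bit WAV file at RATE."""
    with wave.open(path, "rb") as wf:
        if (wf.getnchannels(), wf.getsampwidth(), wf.getframerate()) != (1, 2, RATE):
            raise ValueError(f"{path}: expected mono, 16-bit, {RATE} Hz")
        return wf.readframes(wf.getnframes())


class DrumMixer:
    """Mixes overlapping drum hits into fixed-size blocks of 16-bit samples."""

    def __init__(self):
        self.samples = {}  # drum name -> array('h') of PCM samples
        self.voices = []   # [samples, read position] for each hit still sounding
        self.lock = threading.Lock()  # trigger() and next_block() run on different threads

    def add_sample(self, name, pcm_bytes):
        # array('h') uses native byte order; WAV data is little-endian.
        self.samples[name] = array.array("h", pcm_bytes)

    def trigger(self, name):
        """Start a hit; it becomes audible from the next output block."""
        with self.lock:
            self.voices.append([self.samples[name], 0])

    def next_block(self, frame_count):
        """Sum all active voices into frame_count samples, clipped to int16."""
        mix = [0] * frame_count
        with self.lock:
            still_playing = []
            for samples, pos in self.voices:
                for i, s in enumerate(samples[pos:pos + frame_count]):
                    mix[i] += s
                if pos + frame_count < len(samples):
                    still_playing.append([samples, pos + frame_count])
            self.voices = still_playing
        clipped = (max(-32768, min(32767, v)) for v in mix)
        return array.array("h", clipped).tobytes()


def open_stream(mixer):
    """Open a callback-mode output stream that pulls audio from the mixer."""
    import pyaudio  # imported here so DrumMixer can be used without PyAudio installed

    def callback(in_data, frame_count, time_info, status):
        return mixer.next_block(frame_count), pyaudio.paContinue

    pa = pyaudio.PyAudio()
    stream = pa.open(format=pyaudio.paInt16, channels=1, rate=RATE, output=True,
                     frames_per_buffer=FRAMES_PER_BUFFER, stream_callback=callback)
    return pa, stream  # the stream starts immediately (PyAudio's default start=True)
```

Usage might look like: create a DrumMixer, call add_sample("snare", load_wav("snare.wav")) for each drum, call open_stream(mixer), trigger hits from the detection loop, and on shutdown call stream.stop_stream(), stream.close() and pa.terminate(). Whether pure-Python mixing keeps up with 128-frame callbacks on our hardware is something we would still need to measure.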